Thesis

In the evolving world of software development, product velocity has transformed from a desirable trait to a fundamental requirement for engineering organizations. In 2001, a group of influential technical leaders authored the Manifesto for Agile Software Development, and the period that followed has been dubbed the Age of Agile. This new wave of thought marked a significant shift for organizations that adopted agile practices, which challenged the traditional management assumptions of the 20th century. The Agile Manifesto introduced four core values:

“Individuals and interactions over processes and tools, Working software over comprehensive documentation, Customer collaboration over contract negotiation, Responding to change over following a plan”

These principles laid the foundation for the agile method, which transformed product development and organizations' approaches to the entire software development lifecycle. The agile method contrasts sharply with the traditional waterfall method, which was characterized by a rigid, linear, phase-by-phase process, often lacking the flexibility to adapt to evolving project requirements. In contrast, agile emphasizes an iterative and flexible approach, breaking the development process into smaller, manageable chunks. This methodology prioritizes collaboration, continuous feedback, and regular iterations, enabling teams to swiftly respond to changing requirements and deliver working software in shorter cycles.

The tangible benefits of adopting agile practices in organizations are evident. One 2021 study found that businesses that have undergone successful agile transformations become five to ten times faster in their project delivery. The QSM software almanac reports a reduction in typical software project delivery times from 16.1 months in the 1980s to 7.7 months by the early 2000s, underscoring the efficiency gains brought about by agile methodologies.

However, enhancing product velocity isn’t solely a matter of development practices; it also involves streamlining the software deployment process. Historically, deployment has been a complex and time-consuming aspect of the software development lifecycle. In the early 2000s, developers used simple tools like FTP and web interfaces to deploy their applications. These manual, error-prone methods often resulted in inconsistencies between development, testing, and production environments, giving rise to the familiar programmer maxim, “It works on my machine.”

The advent of version control systems like Git and SVN marked a significant improvement in the deployment process. Nonetheless, deployments remained cumbersome, especially as applications grew more complex. The growth in app sizes exemplifies this increasing complexity; for instance, the top 10 iPhone apps by number of downloads in the United States ballooned from approximately 550 MB to 2.2 GB between 2016 and 2021, expanding by approximately 298%.

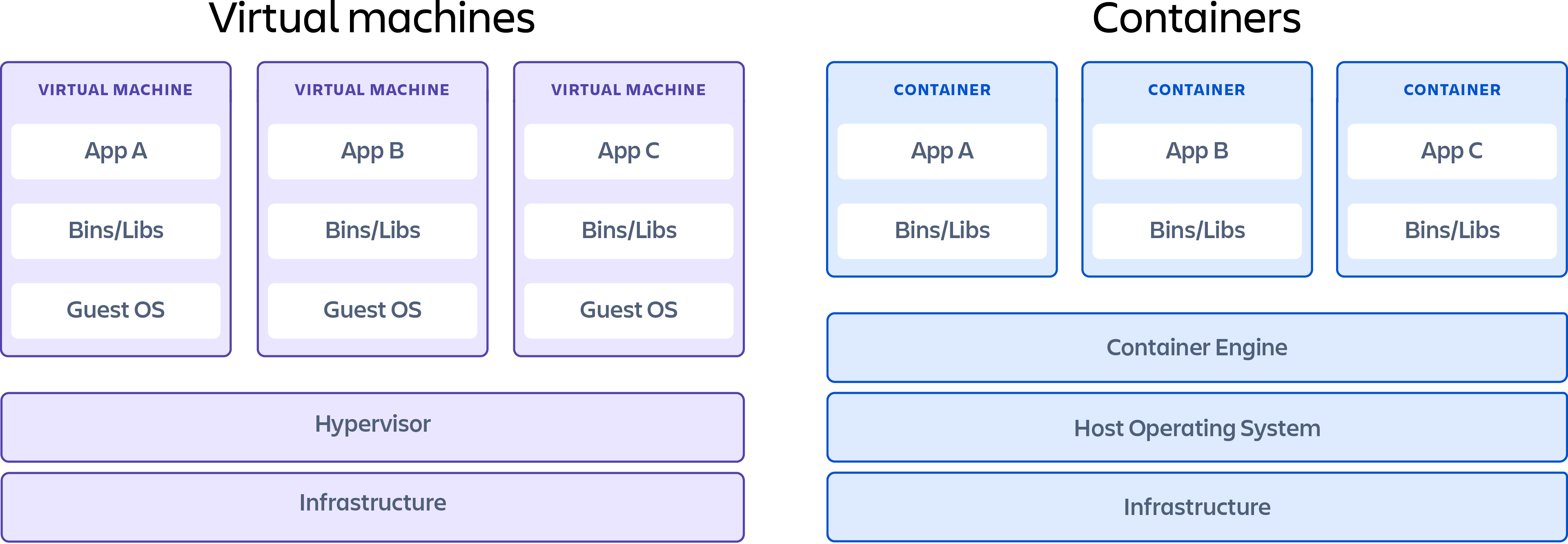

As applications expanded in size and functionality, the sheer number of dependencies required for consistent operation across different environments became a significant hurdle. Traditional deployment methods struggled to manage these intricate dependencies, leading to frequent environment-specific issues and prolonged troubleshooting efforts. Developers needed a more efficient and reliable way to encapsulate application code and associated libraries and configurations. This necessity spurred the search for innovative packaging solutions that could simplify deployments, ensure consistency, and reduce the risk of incompatibilities across various stages of the development lifecycle.

One solution to this challenge has been containerization. This technology enables developers to package an application and its dependencies into a single self-contained unit. Think of software containers like shipping containers: they are designed to hold everything needed to transport goods (or, in this case, an application) safely and efficiently, regardless of the mode of transportation (or operating environment). Just as shipping containers can be quickly loaded onto a plane, train, or truck, software containers can be deployed and managed across different environments with far fewer compatibility issues or inconsistencies.

Container-style process isolation has existed in specific development settings for several decades, particularly in the Linux ecosystem. Linux containers use kernel features like cgroups (control groups) and namespaces to manage system resources and provide process isolation. However, managing containers directly often required a deep understanding of Linux internals and command-line tools. Technologies like Linux Containers (LXC) provided the foundational capabilities but were not user-friendly, making it difficult for developers without extensive system administration experience to leverage them effectively.
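To make the two kernel features named above more concrete, the following minimal Python sketch inspects the namespaces and cgroup membership of the current process. It assumes a Linux host with `/proc` mounted and returns empty results elsewhere; the function names are illustrative and are not part of Docker or LXC.

```python
import os
from pathlib import Path


def list_namespaces(pid: str = "self") -> dict[str, str]:
    """Map each namespace type (for example 'pid', 'net', 'mnt') to its kernel identifier."""
    ns_dir = Path("/proc") / pid / "ns"
    if not ns_dir.is_dir():  # not Linux, /proc not mounted, or no such process
        return {}
    namespaces = {}
    for entry in sorted(ns_dir.iterdir()):
        try:
            # Each entry is a symlink whose target names the namespace instance.
            namespaces[entry.name] = os.readlink(entry)
        except OSError:
            continue
    return namespaces


def read_cgroup_membership(pid: str = "self") -> list[str]:
    """Return the raw lines of /proc/<pid>/cgroup, or an empty list if unavailable."""
    try:
        return (Path("/proc") / pid / "cgroup").read_text().splitlines()
    except OSError:
        return []


if __name__ == "__main__":
    for kind, ident in list_namespaces().items():
        print(f"namespace {kind}: {ident}")
    for line in read_cgroup_membership():
        print(f"cgroup: {line}")
```

Two processes that report the same identifier for a namespace type share that view of the system; a containerized process typically reports different identifiers from those of a process running directly on the host.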

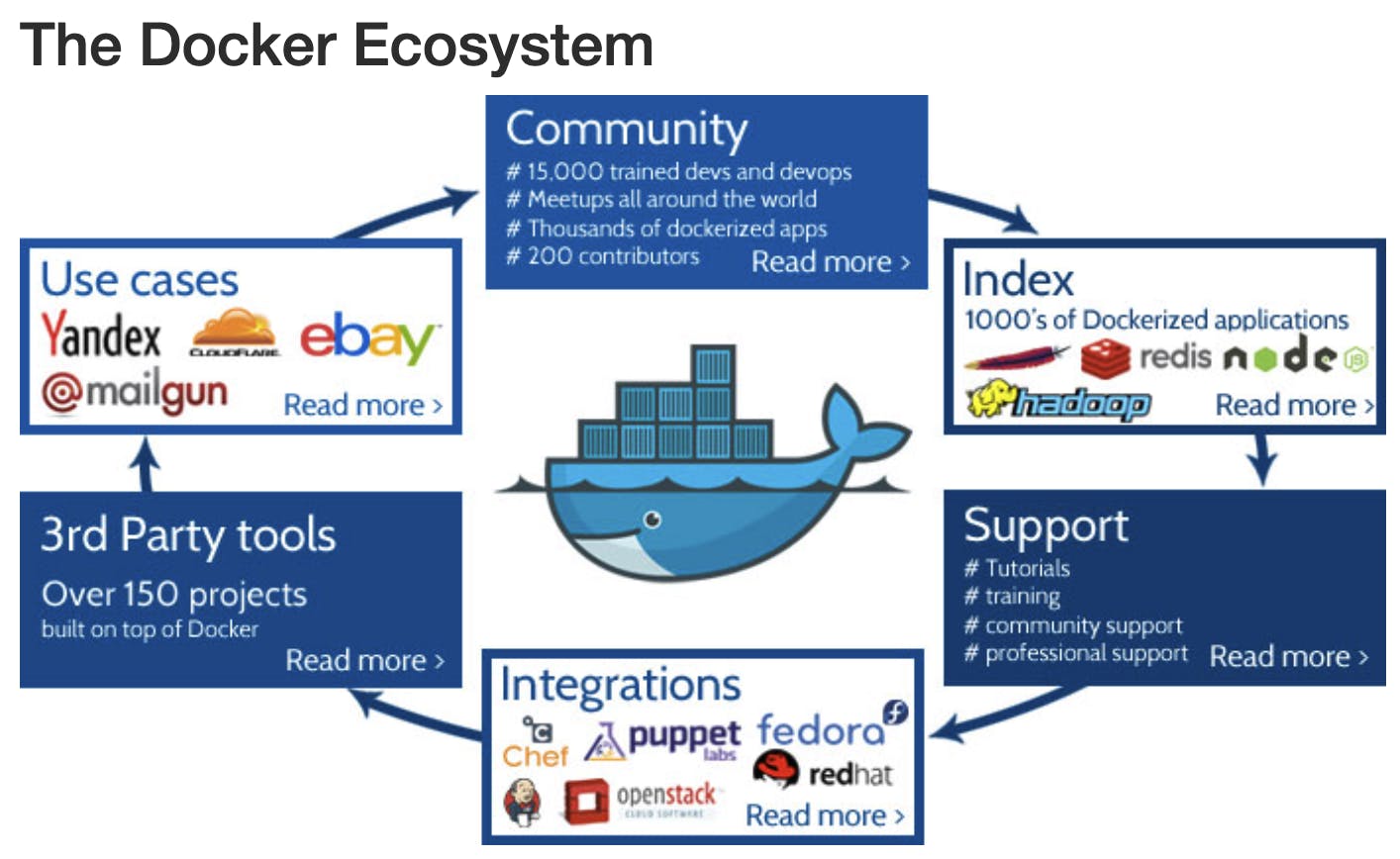

Docker changed this by expanding access to container technology across development platforms. Container technology adoption accelerated when Docker introduced an open-sourced Docker Engine in 2013. Docker Engine set a new standard for container use with easy tools for developers and defined a universal approach for how containers should be packaged. This de facto standard was later formalized with the creation of the Open Container Initiative (OCI) in 2015, which Docker helped establish. The OCI now develops open standards for container runtimes and image formats. This ensures that containers are portable and can be run by any compliant engine, not just Docker's. In fact, Docker contributed its own runtime, containerd, to the Cloud Native Computing Foundation (CNCF), where it now serves as a foundational component for many container platforms.

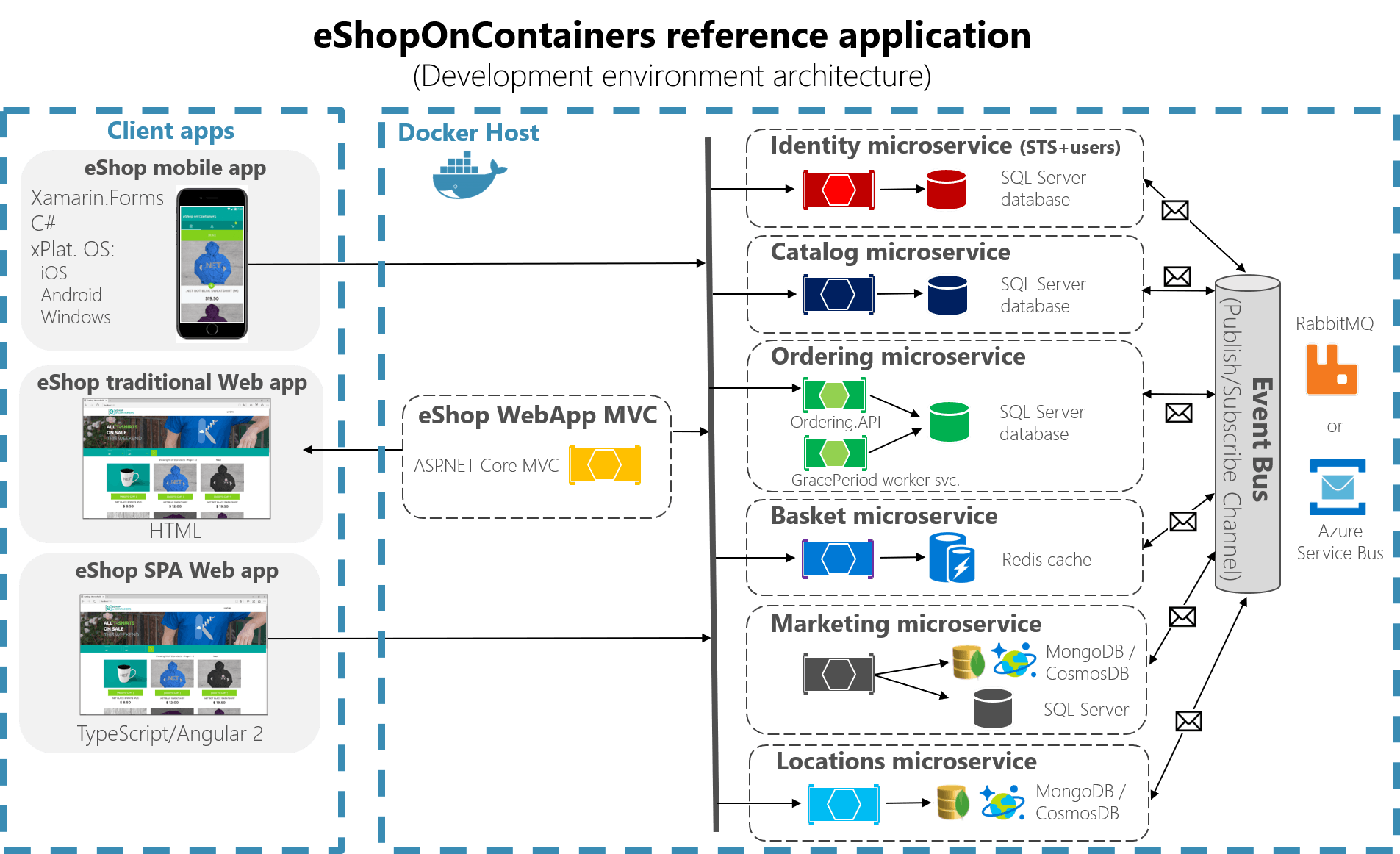

As applications and organizations have grown in complexity and scale, many modern engineering organizations have begun to transition to microservice architecture. This system design approach structures an application as a collection of loosely coupled, independently deployable services. Each “microservice” focuses on a specific business capability and communicates with other services through well-defined APIs. You can imagine microservices as the various departments in a retail store. Each department operates independently, specializes in specific functions or items, and collaborates with the other departments in the store to achieve the company’s overall objectives. Former Docker CEO Ben Golub introduces Docker’s role in the shift to microservice architecture and the increased agility that derives from it as follows:

“Traditionally, teams of 1,000 developers would build monolithic applications tightly coupled to specific servers. You'd have long, slow release cycles of weeks or months in which developers can't update software quickly because any change can break the app. With Docker, you can assemble applications as if you're building something from Legos, where different pieces (called microservices) can be interchanged but are compatible with each other and work across thousands of servers. So a billing module can be a service, which is separate from a reporting module, which is separate from a sign-up module. You can reuse these modules for different applications. And Docker lets you put any app into the digital equivalent of a shipping container, which means you can move it to any server in the cloud or on-premise, any operating environment, and it will run consistently.”

Founding Story

Docker was founded in 2008 by Solomon Hykes, Sebastien Pahl, and Kamel Founadi under the name dotCloud. Hykes was born to an American father and a French-Canadian mother in New York City. At age four, his family moved to France, where he spent most of his childhood. Hykes became interested in computer science at a young age and began coding at seven. He then spent his teenage years running servers at a local internet cafe in exchange for free internet access. After graduating from high school, Hykes enrolled in an advanced engineering school in France called Epitech, where he later met Pahl. Hykes described his interest at the time this way:

“I’ve always been really fascinated by this general trend of taking lots of computers and wiring them up together and using them as one computer. I thought it was the coolest thing ever, and started to think of that pool of machines as something that can continue to exist even as the individual machines come and go. That was always kind of my thing even at school, and most of my projects had revolved around that cool concept of upgrading the definition of a computer, really.”

Together with Pahl and Founadi, Hykes started a company called dotCloud. Their goal at the time was “to harness an obscure technology called containers, and use it to create what [they] called ‘tools of mass innovation’: programming tools which anyone could use.” dotCloud was a Platform as a Service (PaaS) provider that allowed developers to host, assemble, and run their applications on the service. In 2011, Hykes noted that “web developers have this very specific problem – they write these web apps, and it’s hard for them to get them online, so let’s help them do that.” The company's key innovation was not inventing containers from scratch, but rather building a user-friendly engine and tooling on top of existing Linux kernel features (like cgroups and namespaces). This abstraction layer made a powerful but complex technology accessible to the average developer for the first time.

Existing PaaS providers like Heroku and Google App Engine already gave developers options to “take their mind off of server administration and focus entirely on writing and deploying code”, but they were limited to certain development stacks due to the difficulty of managing different versions of libraries and runtimes. This proved inflexible for companies that wanted to add a new programming language midway through a project. The goal of dotCloud was, therefore, to build a web hosting platform that extended the capability of existing PaaS providers by allowing developers greater flexibility in their choice of development stack: in effect, a Heroku for multiple languages.

To enable language-agnostic web hosting capable of supporting various programming languages, runtimes, and environments, dotCloud developed a sophisticated service infrastructure. This involved building API functionality to abstract the client’s existing application stack and code into containers. By encapsulating applications and their dependencies, dotCloud could deploy clients’ packaged software units with minimal interference across diverse environments, ensuring consistency and reliability in the deployment process for virtually any application stack.

However, while this approach was innovative in early 2011, dotCloud faced significant challenges in a highly competitive market. The web hosting service landscape was increasingly crowded with well-funded competitors, and the complexity of managing multiple development stacks for integration with their service proved daunting in practice. These hurdles hindered dotCloud’s ability to scale effectively and differentiate itself in a saturated market.

Despite these obstacles, dotCloud managed to deliver consistent quarter-over-quarter revenue growth. However, by late 2012, the company members still felt like they were “pushing a boulder up a hill.” After receiving a “modest” acquisition offer, the team recognized the pressing need for further differentiation and scalability beyond their existing offerings. This realization led to a strategic decision to pivot in 2013, shifting focus from building a multi-language web hosting service to enhancing and scaling the underlying container technology that powered their deployment platform.

This important change in direction culminated in the birth of Docker. In practice, dotCloud went through this pivot by open-sourcing its container technology and branding the product as Docker. Solomon Hykes explained and introduced the concept and framework for Docker containers to the public at PyCon in March 2013. To signify this shift in focus, dotCloud also rebranded its company name as Docker.

The origin of the Docker name has not been confirmed, but many think that the name is inspired by “dockworkers,” manual laborers responsible for loading and unloading ships. In regions like Great Britain and Ireland, such dockworkers are colloquially known as “dockers.” This renaming underscored the company’s new priority on developing container technology. While the newly renamed Docker continued to offer Platform-as-a-Service offerings under the dotCloud brand, by mid-2013, most of their resources were devoted to expanding the Docker brand and ecosystem. Solomon Hykes elaborated on the decision to open-source Docker this way:

“Despite our plans to build a commercial business around Docker, we remain committed to being truly open source. We will stay fully open under the Apache License, we will not pursue an open core model, and will continue to follow the open design pattern, with broad based contributions and maintainers from outside the company. In addition, we are committed to creating a level playing field, with clear and fair rules for all companies who want to launch commercial offerings on top of Docker”

Source: Docker

Introducing Docker containers in March 2013 led to swift and widespread adoption in the developer community. By October 2013, Docker had been downloaded 100K times, had attracted 12.1K stars on GitHub, and had become one of the fastest-growing open-source projects. Major companies like Rackspace, eBay, and CloudFlare also began using Docker internally. Significantly, Docker’s pivot also turned the ecosystems of its former competitors into collaboration opportunities. Dokku, an open-source, self-hosted alternative to Heroku, was released in 2013 and built on the container infrastructure provided by Docker.

Despite the initial traction that would lead to millions of downloads and help the company raise $436 million in total funding as of December 2025, Docker faced significant challenges in translating its initial surge in popularity into financial success. This was driven in part by misalignment between the go-to-market strategy and the actual value proposition of Docker. The company spent a lot of time and resources educating enterprise teams about the need for Docker, which would later detract from the bottom-up adoption that the company was seeing from developers, who were the primary users and advocates of the platform. Furthermore, the open-source model, although crucial for rapid adoption, posed challenges for monetization. The company’s freemium model, which allowed Docker Desktop to be downloaded for free, created a large base of consumption that did not necessarily translate into revenue.

In 2018, Hykes announced his departure from Docker. Hykes's departure was followed by a period of strategic reevaluation, which led to Scott Johnston, the company’s former Chief Product Officer, being chosen as the company's new CEO. It also culminated in Docker selling its enterprise platform and customers to Mirantis in an acquisition in November 2019. Docker justified the sale in a statement:

“After conducting thorough analysis with the management team and the Board of Directors, we determined that Docker had two very distinct and different businesses: one an active developer business, and the other a growing enterprise business. We also found that the product and the financial models were vastly different. This led to the decision to restructure the company and separate the two businesses, which is the best thing for customers and to enable Docker’s industry-leading technology to thrive”

The sale involved recapitalizing the company to “expand Docker Desktop and Docker Hub’s roles in the developer workflow for modern apps.” As of 2025, Docker’s mission as a development platform is to simplify the process of building, sharing, and running container applications by handling complicated setup tasks so developers can dedicate their time to coding.

Product

Docker provides a platform for developers to package, ship, and run applications in containers. By creating a container that includes the application and its dependencies, developers can use Docker to deploy it on any system that supports Docker without needing to modify the application. This portability enables easy deployment across multiple systems. Additionally, Docker provides tools for creating, managing, and orchestrating containers, making it easier for developers to work with and deploy containerized applications.

Source: LinkedIn

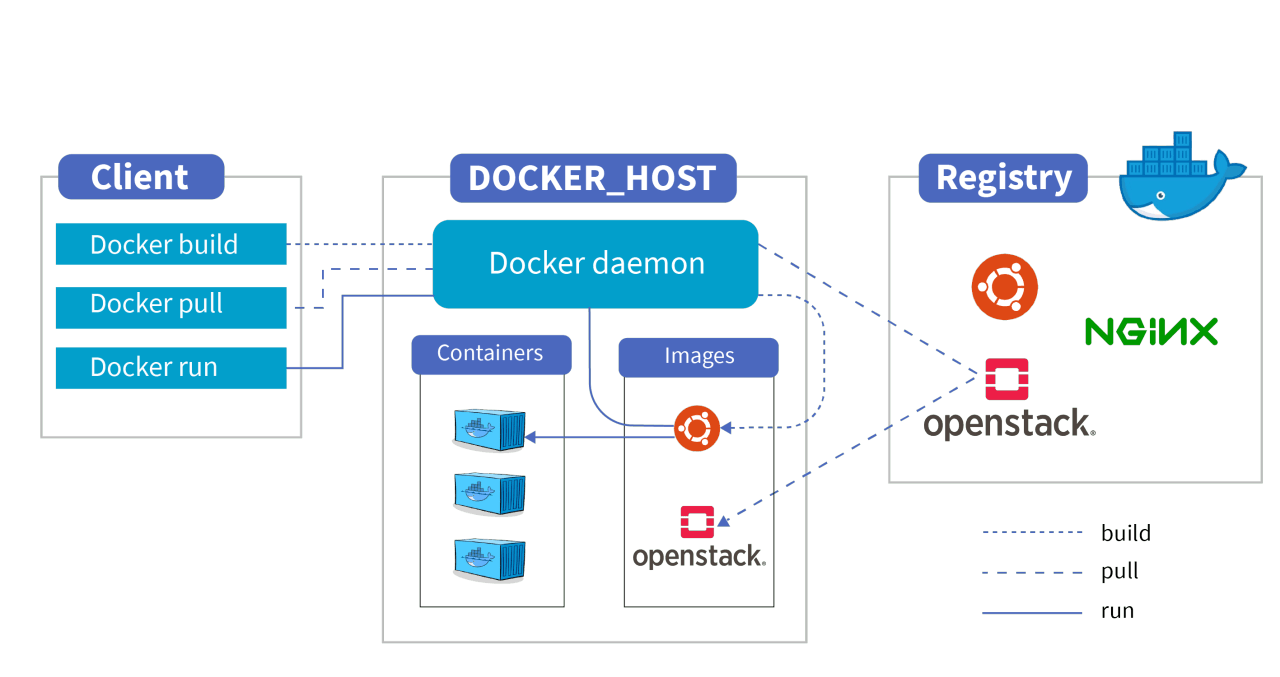

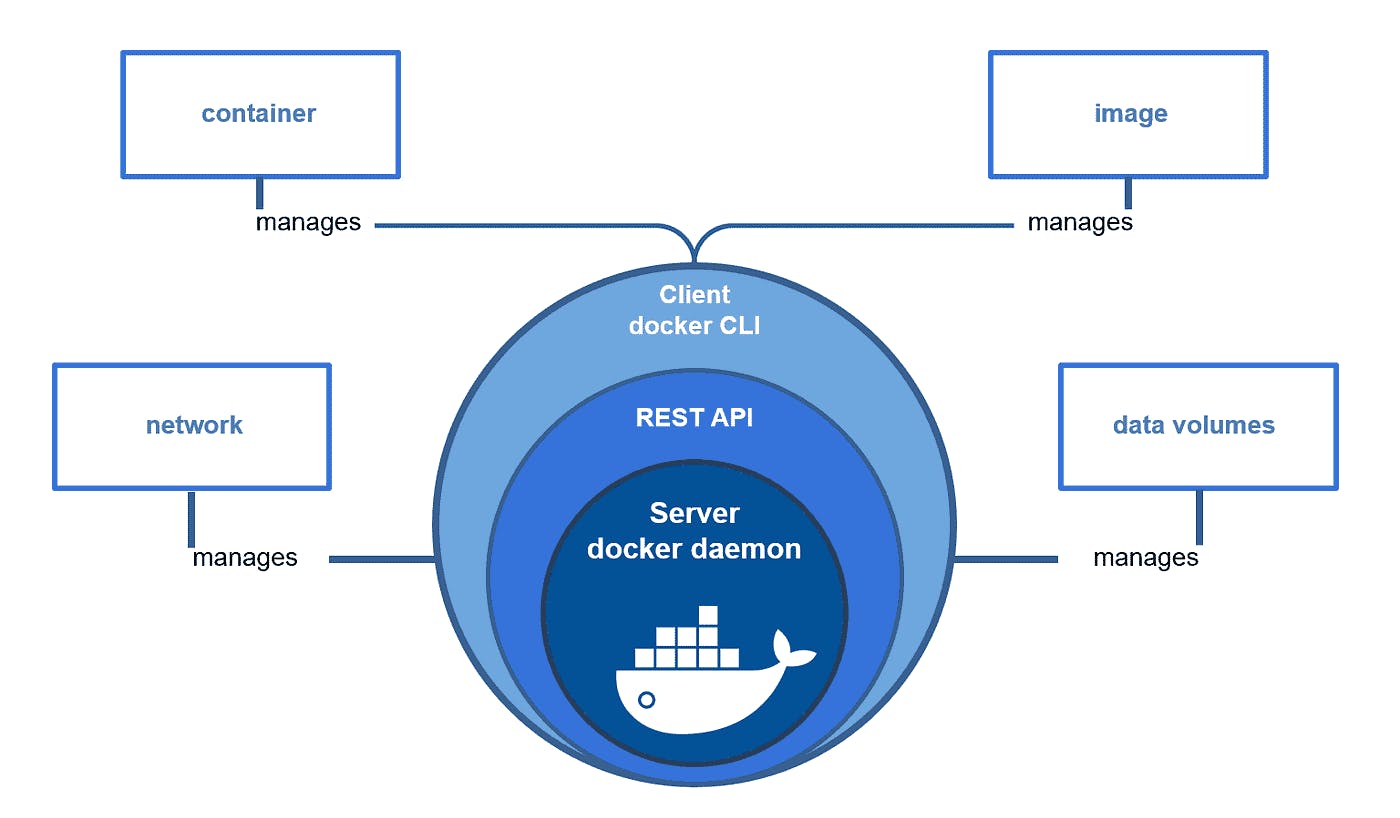

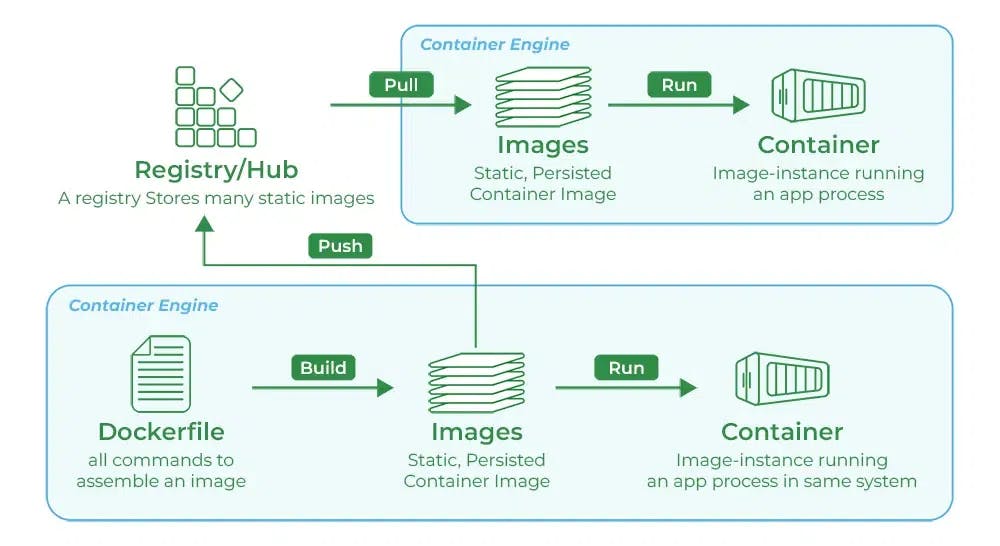

At the most basic level, Docker’s containerization architecture consists of several key components:

Docker Engine: The core of Docker, also known as the daemon, is responsible for creating and managing containers. It provides the runtime environment for containers and executes containerized applications.

Docker Images: Immutable, read-only files that contain the application code, libraries, and environment settings needed to run an application. Images serve as the blueprint for creating containers.

Docker Containers: Instances of Docker images representing live, running applications. They are lightweight and isolated, sharing the host system’s operating system kernel but running independently of each other.

Docker Hub: A cloud-based registry service that allows developers to store, share, and manage Docker images. It is a central repository for publicly available images and facilitates collaboration within the developer community.

To illustrate how these Docker components interact, consider a simplified example of deploying a web application:

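A developer writes a Dockerfile that describes the application and its dependencies, then uses Docker Engine to build an image from it.

The image is pushed to Docker Hub, making it accessible to other developers and deployment environments.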

The deployment environment pulls the image from Docker Hub and uses Docker Engine to create a container from the image.

The container runs the web application, ensuring consistent performance and behavior across different environments, from development to production.
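The steps above can be sketched as a short sequence of Docker CLI commands. The Python snippet below only assembles and prints them by default; the image name `example-org/web-app:1.0` and the port mapping are hypothetical, and the build step assumes a Dockerfile in the current directory.

```python
import shlex
import subprocess

IMAGE = "example-org/web-app:1.0"  # hypothetical repository and tag


def workflow_commands(image: str, host_port: int = 8080, container_port: int = 80) -> list[list[str]]:
    """Return the CLI commands for the build, push, pull, and run steps, in order."""
    return [
        ["docker", "build", "-t", image, "."],  # build an image from ./Dockerfile
        ["docker", "push", image],              # publish the image to the registry
        ["docker", "pull", image],              # fetch it in the deployment environment
        ["docker", "run", "-d", "-p", f"{host_port}:{container_port}", image],  # start a container
    ]


def run_workflow(image: str, dry_run: bool = True) -> None:
    """Print each command; execute it too when dry_run is False."""
    for command in workflow_commands(image):
        print("$", shlex.join(command))
        if not dry_run:
            subprocess.run(command, check=True)


if __name__ == "__main__":
    run_workflow(IMAGE, dry_run=True)
```

In practice the first two commands would run on a developer machine or CI server and the last two on the deployment host; they are shown together here only to keep the sequence visible in one place.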

Source: Microsoft

Docker is an open platform that enables developers to build, ship, and run containerized applications. Its product suite accordingly includes tools for creating and deploying containers, analyzing containers, and managing deployed containers.

Docker Engine

Source: Medium

Docker Engine is an open-source containerization technology for building and containerizing applications. It acts as a client-server application with a long-running daemon process, APIs for interacting with the daemon, and a command-line interface (CLI) client. The daemon creates and manages Docker objects such as images, containers, networks, and volumes.
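As a rough illustration of this client-server split, the sketch below plays the role of a very small client: it sends an HTTP request to the daemon's API over a Unix domain socket, much as the CLI does. It assumes a Unix-like host with the daemon listening on `/var/run/docker.sock` (the usual Linux default, which may differ on other setups); the helper names are illustrative only.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # assumed default socket path


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0):
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path: str, socket_path: str = DOCKER_SOCKET):
    """Send GET <path> to the daemon and decode the JSON body; return None if unreachable."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        return json.loads(conn.getresponse().read())
    except (OSError, http.client.HTTPException, ValueError):
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    version = engine_get("/version")
    if version is None:
        print("Docker daemon not reachable on", DOCKER_SOCKET)
    else:
        print("Engine version:", version.get("Version"))
        containers = engine_get("/containers/json") or []
        print("Running containers:", len(containers))
```

The same pattern applies to the other object types the daemon manages; the CLI is essentially a friendlier front end over requests like these.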

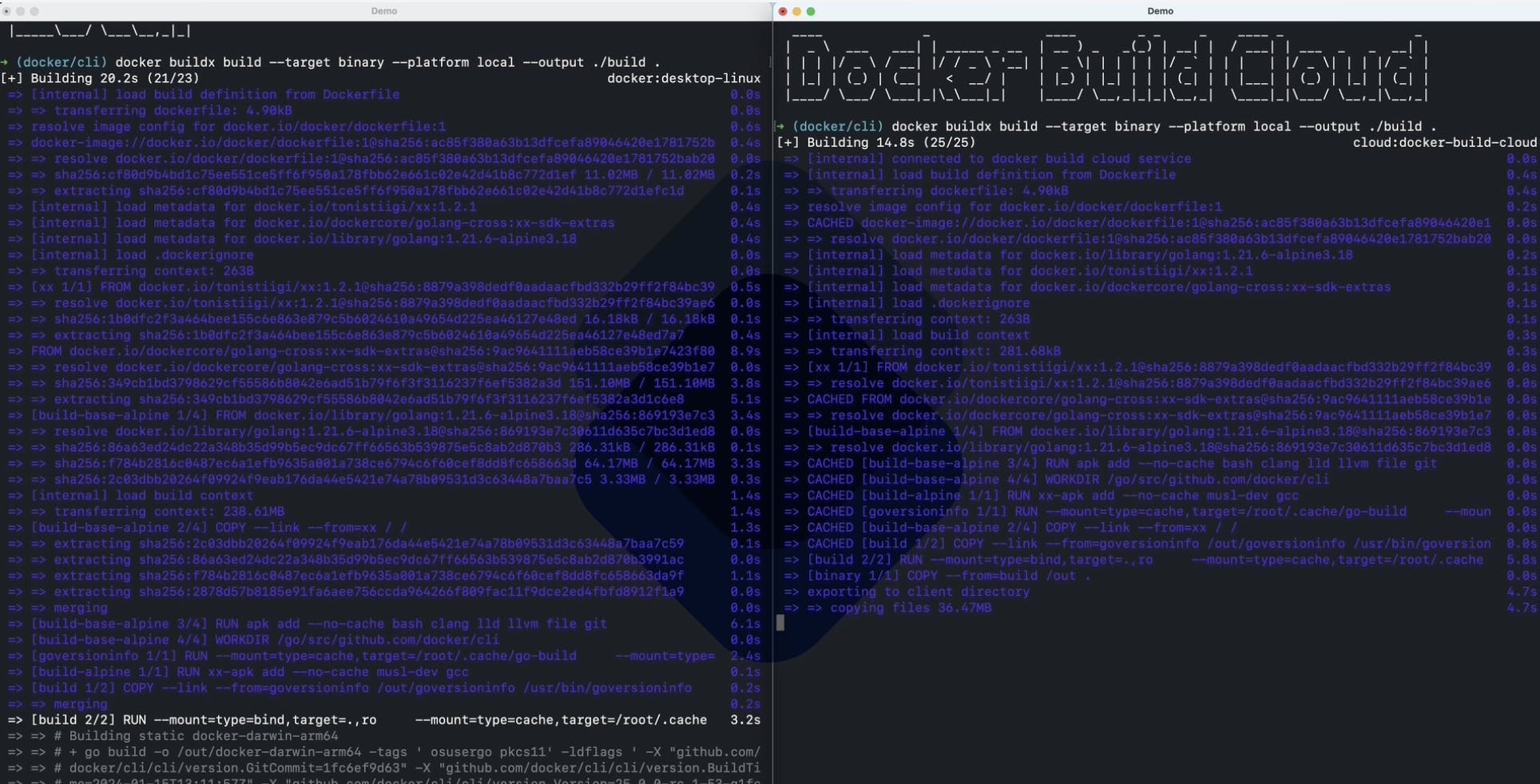

Docker Build Cloud

Source: Docker

Docker Build Cloud is a fully managed cloud service launched in January 2024 that allows development teams to offload container image builds to the cloud, achieving build speed improvements of up to 39x.

Key features include:

Cloud-based builders: Native AMD64 and ARM64 builders

Shared cache: Teams working on the same repository can avoid redundant builds, with cached results instantly accessible across team members

Parallel builds: Multiple builds can run simultaneously, significantly accelerating development workflows

Multi-architecture support: Native builds for different CPU architectures without requiring slow emulators

CI/CD integration: Seamless integration with existing workflows, including GitHub Actions and other CI solutions

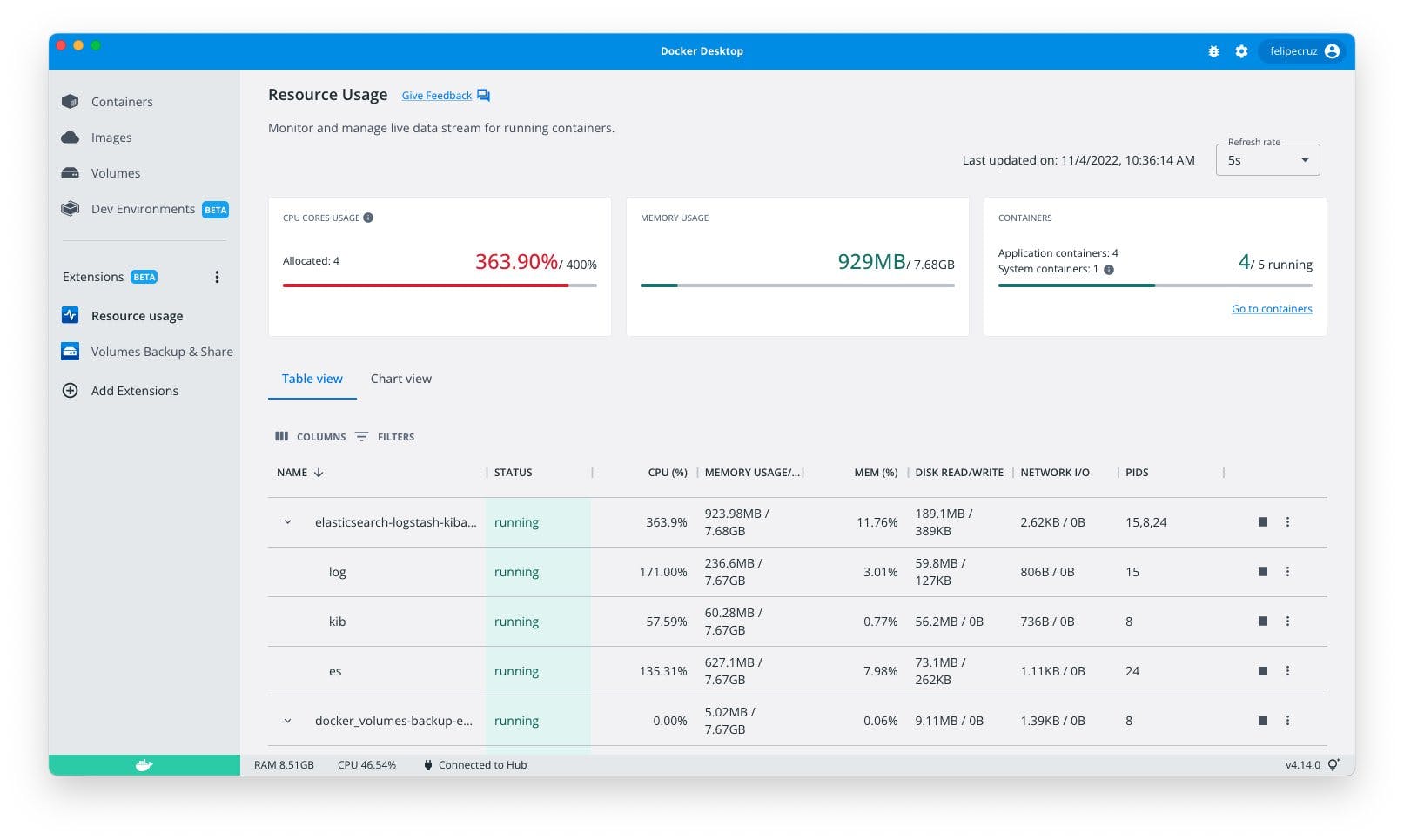

Docker Desktop

Source: Docker

Docker Desktop is a one-click-install application for Mac, Linux, or Windows environments that lets developers build, share, and run containerized applications and microservices. It provides a straightforward Graphical User Interface (GUI) for managing containers, applications, and images directly from the machine, reducing the time spent on complex setups. It also features the Docker Extensions Marketplace, which allows developers to add third-party tools for debugging, security, and networking directly into the Docker Desktop interface.

In January 2025, Docker announced the launch of Gordon, an AI agent for Docker. In the announcement, Docker explained that its own efforts to leverage AI kept running into the same problems: external AI tools required context switching across apps and websites, while integrating AI directly into a product was more seamless than using external chat services but harder to accomplish. In response, Docker integrated Gordon into Docker Desktop.

Users can call on Gordon within Docker Desktop for use cases such as debugging container launches, and Gordon can also surface automated functions, such as buttons created within the Docker Desktop UI to “directly get suggestions or run actions.” As of January 2025, Gordon was leveraging the OpenAI API.

Kubernetes, an open-source project originally developed by Google, is the de facto industry standard for container orchestration, and Docker has integrated it directly into Docker Desktop. With a single click, developers can enable a complete, local Kubernetes cluster on their machine. This allows them to develop and test their applications on Kubernetes in a local environment before deploying to production clusters in the cloud, solidifying Docker Desktop's role as the central tool for containerized application development, regardless of the orchestrator used.

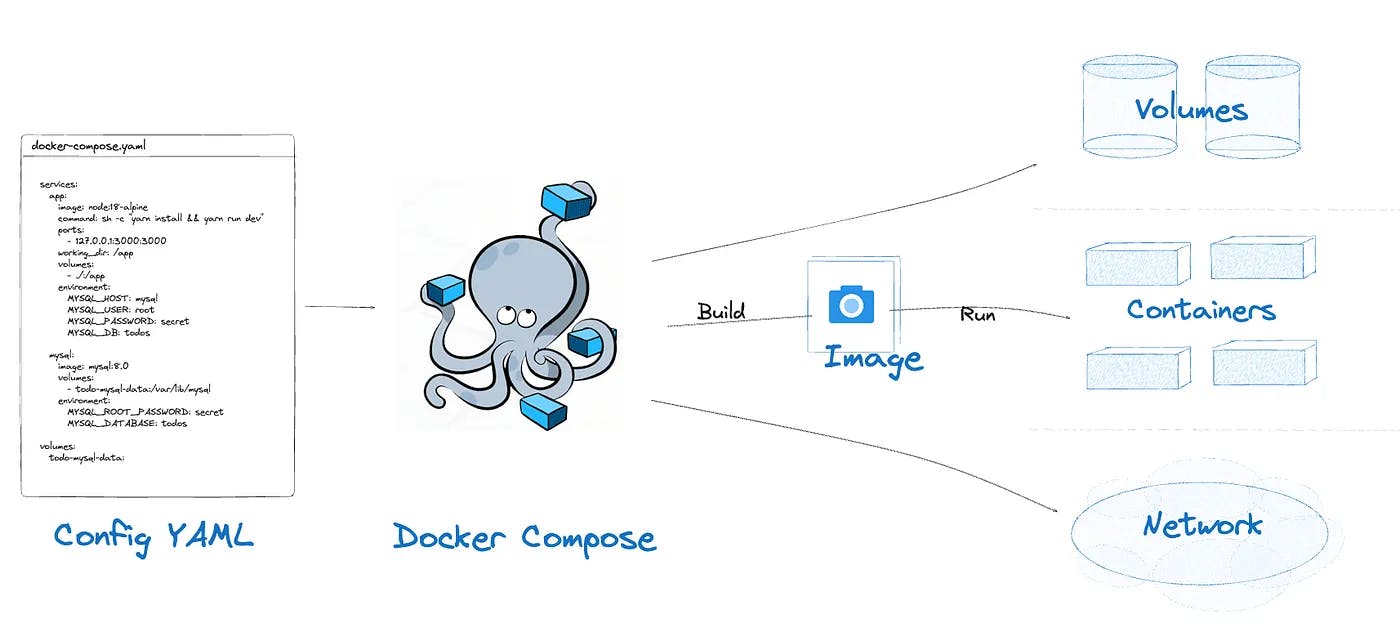

Docker Compose

Source: AWS Builder Center

Docker Compose is a tool for defining and running multi-container Docker applications. It uses a YAML file to configure an application's services, networks, and volumes. With a single command, developers can create and start all the services from their configuration. This makes it an essential tool for setting up complex local development environments that might consist of a backend server, a database, and a caching service, all running in isolated containers. In July 2025, Docker rolled out agent building blocks in Compose, further integrating the service into Docker’s broader suite of AI features.
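For a sense of what such a configuration might look like, the sketch below generates a small Compose file for the backend, database, and cache example mentioned above. The service names, image tags, ports, and password are illustrative assumptions, and the tiny YAML emitter handles only the nested mappings and string lists used here.

```python
from pathlib import Path

# Hypothetical three-service application: backend, database, and cache.
COMPOSE = {
    "services": {
        "backend": {"build": ".", "ports": ["8000:8000"], "depends_on": ["db", "cache"]},
        "db": {
            "image": "postgres:16",
            "environment": {"POSTGRES_PASSWORD": "example"},
            "volumes": ["db-data:/var/lib/postgresql/data"],
        },
        "cache": {"image": "redis:7"},
    },
    "volumes": {"db-data": {}},
}


def to_yaml(node: dict, indent: int = 0) -> list[str]:
    """Render nested dicts and lists of strings as YAML lines (deliberately minimal)."""
    pad = "  " * indent
    lines = []
    for key, value in node.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")
            lines.extend(to_yaml(value, indent + 1))
        elif isinstance(value, list):
            lines.append(f"{pad}{key}:")
            lines.extend(f'{pad}  - "{item}"' for item in value)
        else:
            lines.append(f"{pad}{key}: {value}")
    return lines


if __name__ == "__main__":
    Path("compose.yaml").write_text("\n".join(to_yaml(COMPOSE)) + "\n")
    print("Wrote compose.yaml; start everything with: docker compose up -d")
```

Running `docker compose up -d` in the directory containing the generated file would then create and start all three services together, and `docker compose down` would stop and remove them.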

Docker Hub

Source: GeeksforGeeks

Docker Hub is a cloud-based repository service that allows users to store, share, and manage Docker container images. It provides features like repositories for storing images, automated builds from GitHub and Bitbucket, webhooks for triggering actions, and vulnerability scanning to ensure security. Key features include Docker Official Images, which are a curated set of secure and optimized images, and the Verified Publisher program, which provides trusted images from commercial partners.

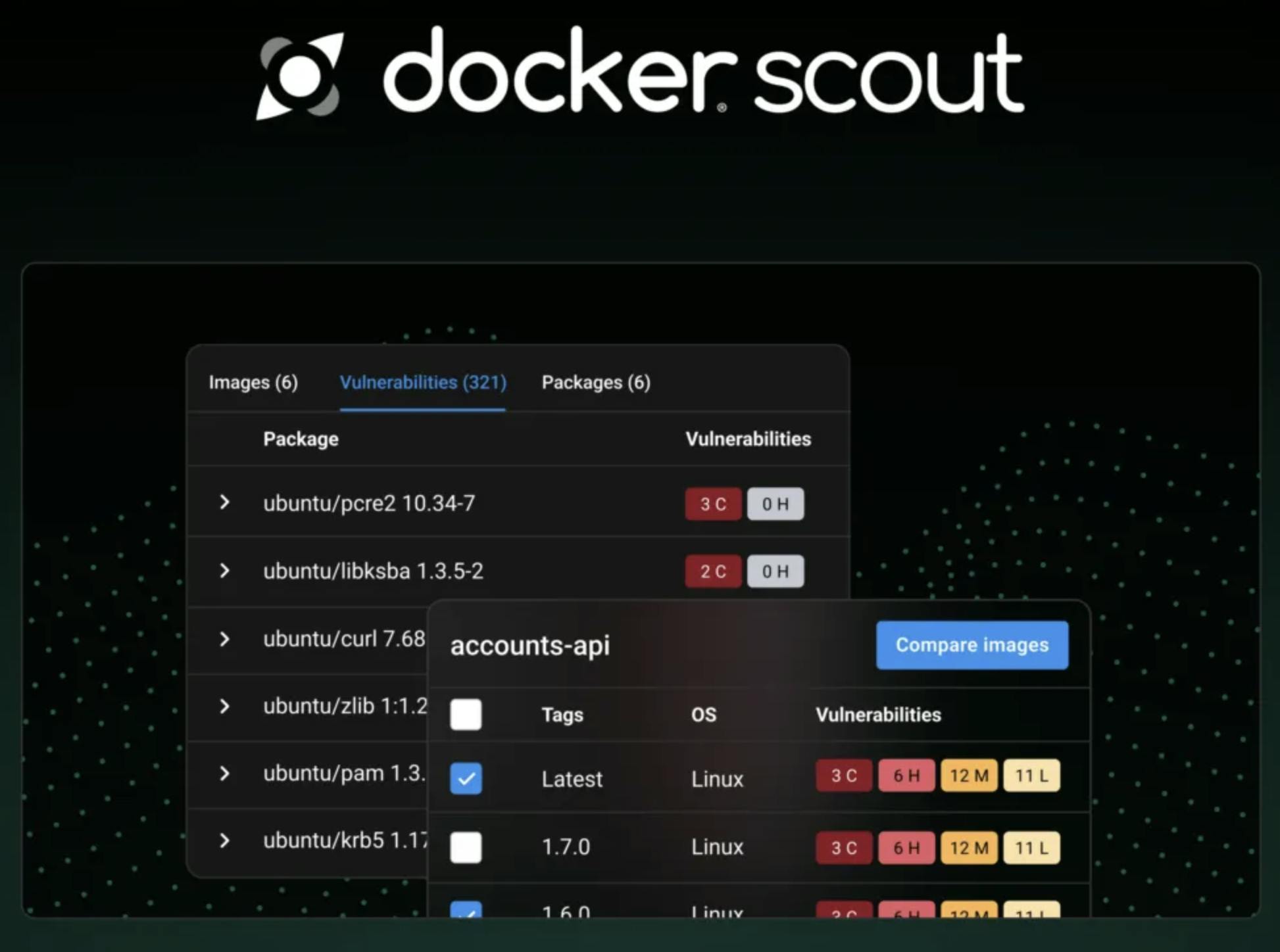

Docker Scout

Source: Docker

Docker Scout provides near real-time, actionable insights to address cloud-native application security issues before they hit production. It offers analysis and context into components, libraries, tools, and processes, guiding developers toward smarter development decisions and ensuring reliability and security.

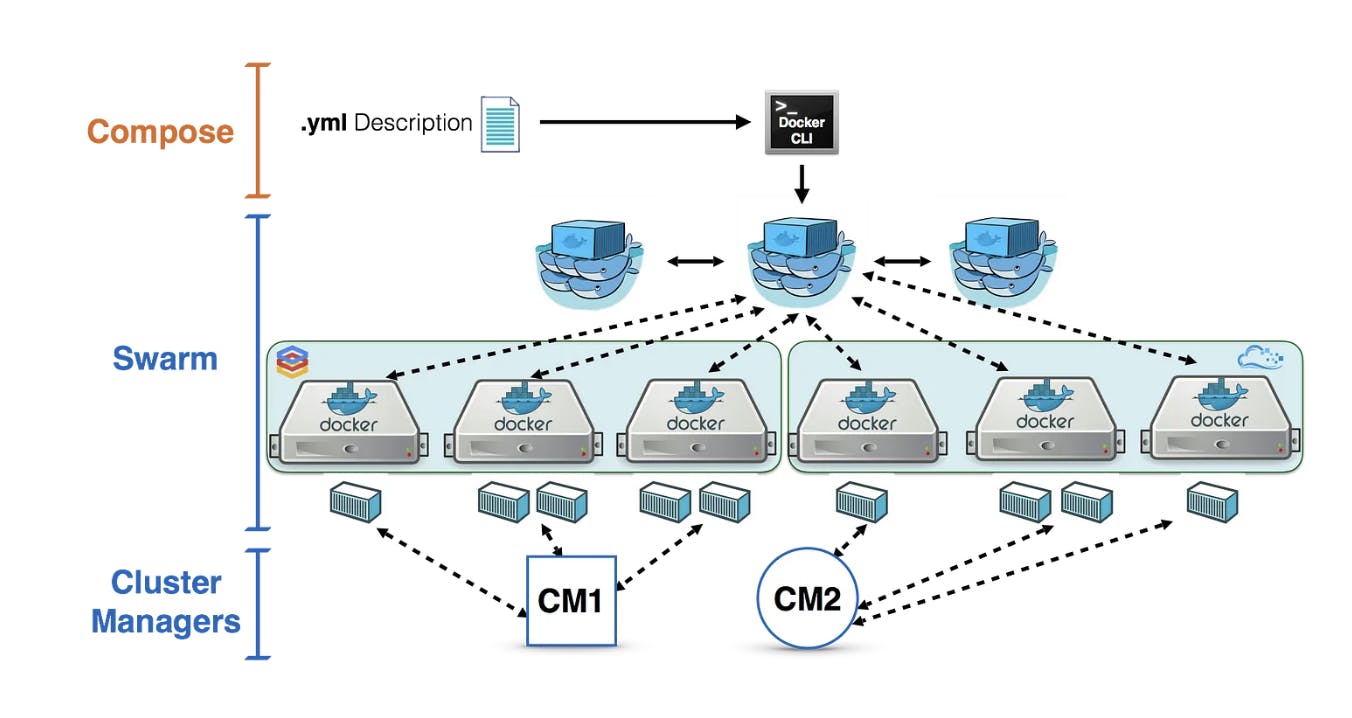

Docker Swarm

Source: Medium

Docker Swarm is a native clustering and scheduling tool for Docker containers, enabling orchestration and scaling. It allows users to create swarms, distribute applications across the cluster, and manage the swarm's behavior without needing additional orchestration software. Docker Swarm is based on a manager-worker architecture, in which manager nodes distribute tasks to worker nodes. An application deployed to a swarm is described in a YAML file that defines its services, networks, and volumes.
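The idea of a manager node distributing tasks to worker nodes can be illustrated with a toy model. The sketch below is not Swarm's actual scheduler; it is a simplified stand-in that places each replica of a service on whichever worker currently has the fewest tasks, with hypothetical node and service names.

```python
def spread_tasks(service: str, replicas: int, workers: list[str]) -> dict[str, list[str]]:
    """Assign each replica to the least-loaded worker; ties go to the earlier worker."""
    if not workers:
        raise ValueError("at least one worker node is required")
    assignment: dict[str, list[str]] = {worker: [] for worker in workers}
    for slot in range(1, replicas + 1):
        # min() returns the first worker among those with the fewest tasks.
        target = min(workers, key=lambda worker: len(assignment[worker]))
        assignment[target].append(f"{service}.{slot}")
    return assignment


if __name__ == "__main__":
    plan = spread_tasks("web", replicas=5, workers=["worker-1", "worker-2", "worker-3"])
    for node, tasks in plan.items():
        print(node, tasks)
```

In a real swarm, a cluster is created with `docker swarm init` and a replicated service is requested with `docker service create --replicas`; the managers then decide placement and reschedule tasks when nodes fail, which this toy model does not attempt to capture.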

Market

Customer

The target customer profile for Docker is a software developer, DevOps engineer, or IT professional seeking to streamline application development and deployment through containerization. Docker users are typically involved in building, shipping, and running applications consistently across different computing environments, ensuring that software behaves similarly in development, testing, and production. Docker’s target audience values efficiency, scalability, and portability, often embracing modern practices like microservice architecture and cloud-native applications.

Docker also appeals to organizations ranging from startups to large enterprises aiming to accelerate their software development lifecycle. Companies like PayPal, Visa, and Spotify leverage Docker to improve infrastructure efficiency, manage microservices at scale, and speed up deployment cycles. Educational institutions and training programs can also utilize Docker to teach contemporary software development methodologies, preparing the next generation of tech professionals.

Companies such as PayPal and Spotify have reported notable results after adopting Docker. For instance, PayPal achieved a 90% reduction in deployment times by using Docker orchestration, and Spotify saw deployment times drop by as much as 90%, alongside a 75% reduction in server costs. Additionally, organizations automating Docker deployments have noted improvements in release frequency, with some companies deploying code up to seven times more frequently than with traditional methods. These outcomes are consistent with Docker’s stated mission to “provide a collaborative app development platform of tools, content, services, and community for dev teams and their manager.”

Docker saw an eightfold increase in usage by ML engineers and data scientists from 2022 to 2023, with the company noting that Docker is already a pivotal part of the AI/ML development ecosystem. Additionally, Docker's integration with AI tools like GitHub Copilot and the introduction of the AI agent Gordon demonstrate the company's focus on serving developers working with AI-integrated technologies.

Market Size

As of 2025, Docker operates in the rapidly expanding global application containerization market, which has experienced significant growth due to the rising adoption of cloud-native applications, microservices architecture, and DevOps practices. The application container market was valued at approximately $5.8 billion in 2024 and is projected to reach $31.5 billion by 2030, growing at a CAGR of about 33.5%.
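As a quick sanity check on these figures, the compound annual growth rate implied by two endpoint values can be computed directly. The sketch below assumes a six-year span from 2024 to 2030; under that assumption the implied rate comes out slightly below the quoted 33.5%, a gap that may simply reflect rounding or a different base year in the underlying report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, an end value, and a span in years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    implied = cagr(5.8, 31.5, years=6)            # figures in billions of USD
    print(f"Implied CAGR 2024-2030: {implied:.1%}")
    projected = project(5.8, 0.335, years=6)      # compounding at the quoted rate
    print(f"$5.8B compounded at 33.5% for 6 years: ${projected:.1f}B")
```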

The acceleration of the market is projected to be fueled by the need for solutions that enhance portability and streamline the software development lifecycle. Docker plays a key role in this market by providing accessible tools that enable developers and IT professionals to leverage containerization effectively. The integration of Docker with container orchestration platforms like Kubernetes has further amplified its adoption across various industries, such as finance, healthcare, technology, and e-commerce.

Competition

Docker's main competitors fall into two categories that map to distinct parts of the Docker product suite: containerization platforms (focused on building and running containers) and container orchestration solutions (focused on managing containers at scale).

Containerization Platforms

Podman: Developed by Red Hat, Podman is an open-source, daemon-less container engine that allows users to run, manage, and deploy containers without needing a central daemon like Docker Engine. Podman emphasizes security by enabling rootless containers, allowing non-privileged users to run containers without elevated permissions. It is compatible with Docker images and commands, making it an attractive alternative for those seeking enhanced security and simplicity in container management.

containerd: containerd is a container runtime that focuses on simplicity, robustness, and portability. Originally part of Docker, containerd was donated to the Cloud Native Computing Foundation and has become a core component in Kubernetes environments. It provides a minimal set of functionalities for executing containers and is used by other container platforms as an underlying runtime.

LXC/LXD: LXC (Linux Containers) is a lightweight virtualization technology that allows multiple isolated Linux systems (containers) to run on a single host. LXD builds on LXC to offer a system container manager that provides a user experience similar to virtual machines but with container performance and density benefits. While LXC predates Docker and both tools target slightly different use cases, they are alternatives for users needing full-system containers.

Container Orchestration Solutions

Kubernetes: Developed by Google and launched in 2014, Kubernetes is an open-source platform designed for automating the deployment, scaling, and management of containerized applications. While Docker excels at building, packaging, and running containers on individual machines, a team of Google engineers realized in 2013 that managing large-scale deployments of containers across multiple machines required a more sophisticated tool, and Kubernetes was created to fill this need.

Docker allows developers to package applications and their dependencies into containers, like putting everything required into a box. But when managing many such "boxes" across a fleet of machines, you need a system to ensure they're deployed, scaled, and maintained efficiently, which is where Kubernetes comes in. Named after the Greek word for "pilot," Kubernetes ensures the smooth journey of containers by orchestrating their safe and efficient deployment across a distributed system.

Kubernetes is typically considered to offer more advanced features than Docker’s native Docker Swarm. It has been widely adopted by companies of all sizes to manage cloud-native applications. According to one report, more than 90% of the world’s organizations will be running containerized applications in production by 2027, up from fewer than 40% in 2021.

Red Hat OpenShift: OpenShift, developed by Red Hat, is a Kubernetes-based platform that simplifies the building, deployment, and management of containerized applications. It extends Kubernetes' built-in functionality by tightly integrating with DevOps workflows, offering out-of-the-box solutions for continuous integration and continuous deployment (CI/CD), which makes deploying and scaling applications faster and more secure.

In 2019, IBM closed a $34 billion acquisition of Red Hat, indicating a desire to scale its hybrid-cloud offerings. Some 50% of Fortune 100 companies were reportedly using OpenShift in 2019.

Amazon Web Services (ECS and EKS): Amazon Web Services offers two primary container orchestration services. Amazon Elastic Container Service (ECS) is AWS's own fully managed container orchestration service, and Amazon Elastic Kubernetes Service (EKS) is a managed service for running Kubernetes on AWS without operating the Kubernetes control plane directly.

OrbStack: OrbStack is a macOS-exclusive alternative to Docker Desktop designed to be faster and more lightweight. OrbStack is specifically engineered for macOS users who frequently work with containers, offering better performance and resource efficiency compared to Docker Desktop on Mac systems.

Rancher Desktop: Rancher Desktop is a free, open-source platform designed for developers working with Kubernetes. Rancher Desktop provides native Kubernetes support and compatibility with both Docker and containerd runtimes. It offers a visual interface for managing containers and Kubernetes clusters, making it an attractive alternative to Docker Desktop, especially for teams focused on Kubernetes development.

Business Model

Source: Docker

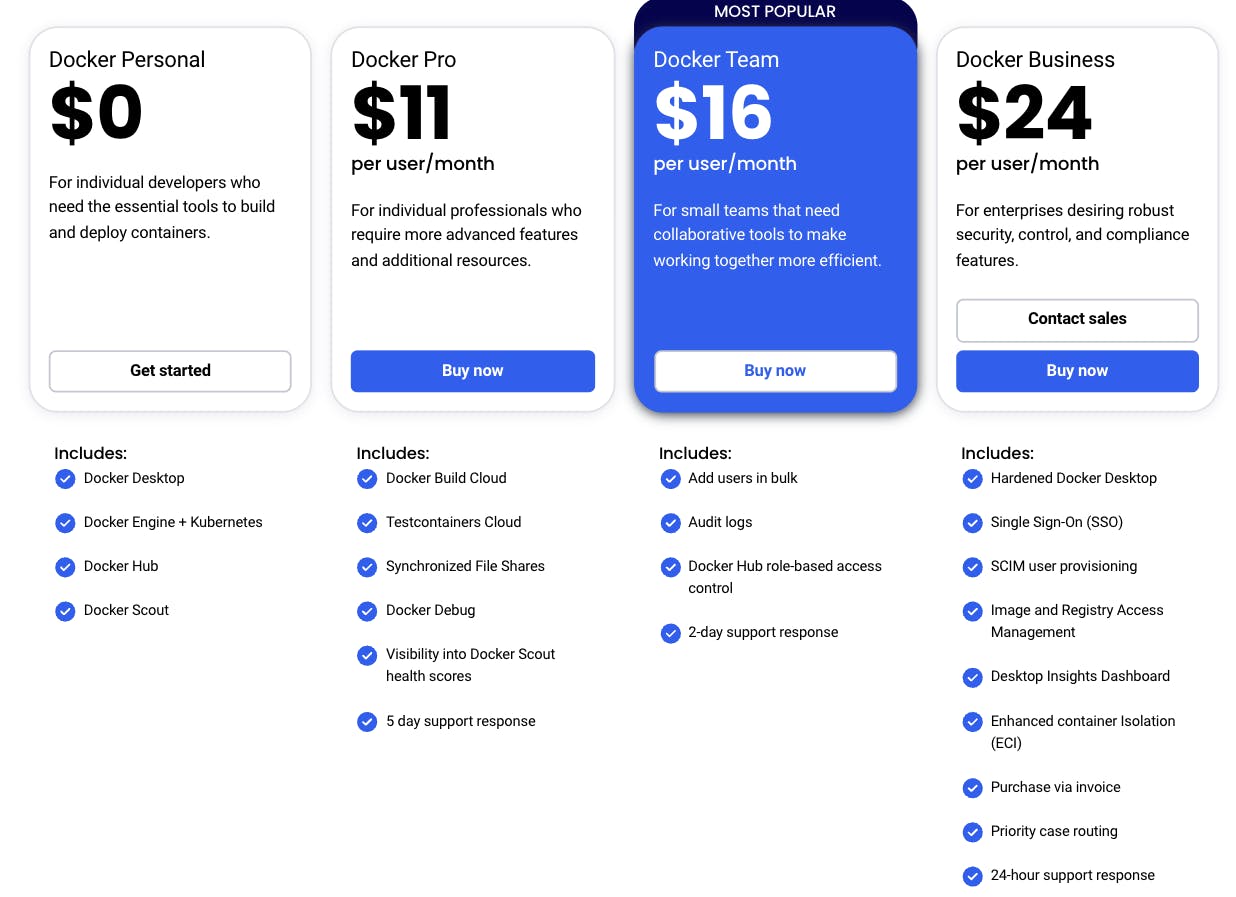

Docker utilizes a freemium business model, offering its core containerization platform for free while providing paid subscriptions with enhanced features and support for professional and enterprise users. The foundational tools, including the Docker Engine and Docker CLI, are open-source, enabling developers to build, share, and run containerized applications without initial costs. This approach has cultivated a fairly active community, promoting adoption of Docker’s technology. It offers four tiers:

Docker Personal: Free for personal use, education, open-source projects, and small businesses with fewer than 250 employees and less than $10 million in annual revenue.

In August 2021, Docker announced updates to its subscription model, introducing Docker Business and modifying the licensing terms for Docker Desktop. Under the new terms, commercial use of Docker Desktop requires a paid subscription for companies exceeding the specified thresholds of employees or revenue.

Traction

As of March 2024, Docker reported over 17 million developers using its platform globally and over 79K customers. Docker Hub, considered by some to be the world’s most extensive library of container images, had 26 million monthly active IPs accessing 15 million repos and pulling them 25 billion times per month as of March 2024. Docker’s Moby project had 71.2K stars, 18.9K forks, and more than 2.2K contributors as of December 2025; Docker Compose, meanwhile, had 36.6K stars and 5.7K forks as of December 2025. In August 2024, Docker was ranked the most-used tool (in the "other tools" category) by professional developers, with 59% using it in their work, according to Stack Overflow’s 2024 Developer Survey.

Docker reportedly grew ARR past $50 million in 2021, up from $11 million in 2020. Unverified estimates indicate that, over the course of 2024, the company grew ARR by ~125% to $207 million.

Valuation

Docker has raised a total of $435 million since its first pre-seed funding in 2010. In March 2022, Docker raised a $105 million Series C led by Bain Capital Ventures. The March 2022 funding round left Docker with a valuation of $2.1 billion.

According to the press release for this funding round, this brought the company’s total funding to $163 million as of March 2022. The discrepancy between the $435 million and $163 million totals is likely due to the sale of Docker’s enterprise platform and customers to Mirantis in November 2019, which appears to have involved a recapitalization of the business that reset the company’s cap table. After that recapitalization, Docker raised $35 million in Series A financing in 2019, led by Benchmark Capital, and a $23 million Series B from Tribe Capital in March 2021, prior to its March 2022 Series C.
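The reconciliation above can be checked with simple arithmetic. The sketch below sums the three post-recapitalization rounds named in the text and compares the result with the $163 million press-release figure. The variable names are illustrative, and the "implied earlier funding" value is only the difference between the two reported totals, an inference rather than a figure Docker has reported.

```python
# Funding figures in millions of USD, taken from the text above.
post_recap_rounds = {
    "Series A (2019)": 35,
    "Series B (March 2021)": 23,
    "Series C (March 2022)": 105,
}
reported_post_recap_total = 163  # per the Series C press release
reported_lifetime_total = 435    # total raised since 2010

# Sum of the rounds raised after the 2019 recapitalization.
post_recap_sum = sum(post_recap_rounds.values())

# Difference between the two reported totals. This is an inference
# from the figures above, not a number reported by Docker.
implied_earlier_funding = reported_lifetime_total - post_recap_sum

print("Post-recap rounds match press release:",
      post_recap_sum == reported_post_recap_total)
print("Implied funding before the recapitalization ($M):",
      implied_earlier_funding)
```

The three rounds sum exactly to the press-release total, which is consistent with the recapitalization explanation, though it does not confirm it.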

Key Opportunities

Improving Productivity for Faster Time-to-Market

By containerizing applications, Docker ensures consistency across different environments, meaning developers can build, test, and deploy applications quickly without spending as much time worrying about dependency hell or “it works on my machine”-type issues. This uniformity reduces the time spent troubleshooting environment-specific issues, allowing teams to focus more upfront on feature development and innovation.

Moreover, Docker’s efficient resource utilization and lightweight containers enable rapid scaling and deployment. Development teams can quickly iterate on features, deploy updates, and roll back changes with minimal downtime, accelerating the software development cycle.

Companies leveraging Docker often report significant improvements in collaboration and agility. For instance, The Warehouse Group, a leading New Zealand retailer, adopted Docker to simplify application building and deployment. The company reported an annualized saving of approximately 52K developer hours and faster time-to-market for features, with some deployments moving from code to production in less than an hour. Likewise, BBC News used Docker to automate its deployment pipeline, enabling it to scale up rapidly during high-traffic events and ensure millions of viewers could stream content without interruptions.

Facilitating Microservices Architecture

As applications have grown in size and complexity, many companies are transitioning to microservice architecture to enhance flexibility and maintainability. Docker has played a pivotal role in this shift by providing a robust containerization platform that simplifies microservice development, deployment, and management. By encapsulating each service within its own container, Docker ensures that microservices are independent, portable, and easily scalable.

One of the standout features of Docker in a microservice architecture is its ability to scale services independently. Each containerized service can be scaled up or down based on demand without affecting other services. This flexibility improves resource utilization and allows businesses to respond swiftly to changing workloads. As an illustration, during peak shopping periods, an e-commerce platform could independently scale its payment processing microservice to handle increased transaction volume.
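A minimal sketch of the independent-scaling idea, assuming a Docker Compose project with a hypothetical `payments` service. The function names, the traffic numbers, and the per-replica capacity are all illustrative assumptions; the only real interface relied on is the `docker compose up --scale SERVICE=NUM` option. The command is built as an argument list and not executed here.

```python
import math


def replicas_needed(requests_per_second, capacity_per_replica, min_replicas=1):
    """Return how many replicas are needed to serve the given load.

    capacity_per_replica is an assumed throughput figure for one container.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_second / capacity_per_replica)
    return max(min_replicas, needed)


def compose_scale_command(service, replicas):
    """Build (but do not run) a Compose command that scales one service."""
    return ["docker", "compose", "up", "--detach",
            "--scale", f"{service}={replicas}"]


# Hypothetical peak load: 1,200 requests/s, each replica handling 250.
replicas = replicas_needed(1200, 250)
command = compose_scale_command("payments", replicas)
print(" ".join(command))
```

Only the `payments` service receives a new replica count; other services in the same Compose project keep their configured scale, which is the independence the paragraph above describes.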

Source: Atlassian

One of Docker’s key cost-efficiency advantages is its ability to enhance scalability. Traditional virtual machines often lead to underutilized server capacity due to substantial overhead. Docker containers, however, share the host system’s kernel, allowing multiple containers to run concurrently on the same hardware with minimal overhead. This efficient use of resources means companies can deploy more applications on fewer servers, directly reducing infrastructure costs.

Additionally, Docker supports resource optimization by allowing precise control over system resources such as CPU and memory. Specific limits can be set for each container to match its needs, minimizing waste and keeping application performance predictable.
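As a rough illustration of per-container resource control, the sketch below assembles a `docker run` command that uses the CLI's `--cpus` and `--memory` options. The helper name, the container name `web`, and the chosen limits are assumptions made for this example; the command is returned as an argument list rather than executed.

```python
def docker_run_with_limits(image, cpus, memory, name=None):
    """Build (but do not run) a `docker run` command with resource limits.

    cpus   -- number of CPUs the container may use, e.g. 1.5
    memory -- memory limit with a unit suffix, e.g. "512m"
    """
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    command = ["docker", "run", "--detach",
               f"--cpus={cpus}", f"--memory={memory}"]
    if name:
        command += ["--name", name]
    command.append(image)
    return command


# Illustrative values: cap a web container at 1.5 CPUs and 512 MB of memory.
command = docker_run_with_limits("nginx:latest", 1.5, "512m", name="web")
print(" ".join(command))
```

Setting explicit limits like these is what lets many containers share one host without a single workload consuming all of its CPU or memory.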

Edge Computing and IoT Integration

IoT Analytics estimated there were 18.8 billion internet of things (IoT) devices as of September 2024, up 13% from 16.6 billion at the end of 2023, with the number of edge-enabled IoT devices expected to climb to 40 billion by 2030. Developers have demonstrated the potential for massive IoT deployments at DockerCon, with examples showing how containers can enable deployments of more than 100K IoT devices using platforms like Raspberry Pi. This growth represents a significant opportunity for Docker to capture value in industrial IoT, smart manufacturing, and distributed computing applications where containerized edge deployments can provide competitive advantages.

Integration with Emerging Technologies

Growing integrations with edge computing and AI/ML position Docker to benefit from the growth of both trends. Edge computing provides faster real-time insights by bringing enterprise applications closer to their data sources, leading to quicker response times and better bandwidth availability. Docker’s containerization technology allows computing resources to be distributed efficiently across various edge devices.

Gartner estimates that by 2026, more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications. Docker's 2024 AI Trends Report shows marked growth in ML engineering and data science within the Docker ecosystem: ML engineers and data scientists rose from approximately 1% of respondents in its 2022 survey to 8% in 2023, making them a rapidly expanding user base. Docker also helps facilitate the deployment of AI/ML models as services. Once containerized, these services can be deployed on any system that supports Docker, allowing for easy scaling and management.

In March 2024, Docker announced a partnership with NVIDIA to support building and running AI/ML applications. The partnership integrates the NVIDIA AI Enterprise software platform with Docker to enable developers to build, test, and deploy AI applications efficiently.

Docker has significantly expanded its AI capabilities with the introduction of the GenAI Stack, announced at DockerCon 2023 with partners Neo4j, LangChain, and Ollama. The stack provides pre-configured LLMs, such as Llama 2, GPT-3.5, and GPT-4, to jumpstart AI projects, with Ollama simplifying the local management of open-source LLMs and Neo4j serving as the default database with graph and native vector search capabilities.

In line with the above, Docker has framed Gordon, its AI agent, as the future of working with Docker. By 2025, Docker aims to expand the agent's capabilities with features like customizing experiences with more context from registries, enhanced GitHub Copilot integrations, and deeper presence across development tools.

Key Risks

Inability to Monetize Open-Source

Docker’s reliance on open-source technology poses a significant monetization challenge. The company’s business model, centered around providing support and value-added services, faces the inherent difficulty of generating revenue from freely available software.

One of the main hurdles Docker has faced is competition from other companies and cloud providers that have leveraged its open-source technology to offer their own services. Major cloud providers like Amazon Web Services, Microsoft Azure, and Google Cloud Platform have integrated Docker’s container technology into their offerings, often bundling it with additional services and support. This integration has often overshadowed Docker’s commercial products, making it more difficult for the company to capture market share in enterprise solutions.

Additionally, the emergence of Kubernetes, an open-source container orchestration system initially developed by Google, shifted the industry’s focus from containerization to container orchestration and management. Kubernetes has become the de facto standard for container orchestration, and while Docker has tried to compete with its own orchestration tools, like Docker Swarm, it has struggled to keep pace. This shift has further inhibited Docker’s efforts to monetize, as enterprises adopted Kubernetes alongside or even in place of Docker’s enterprise offerings.

Despite introducing enterprise-focused products and seeking to add value through security features, management tools, and customer support, Docker struggled to differentiate its paid offerings from the free, open-source alternatives. In 2019, these challenges culminated in Docker selling its enterprise business to Mirantis. This move allowed Docker to refocus on its core market of developers and the tools they use. Still, the fundamental challenge of building a sustainable revenue model around open-source software has remained a critical issue.

Security Risks of Container Technology

Containers running on the same host share its operating system kernel and underlying hardware, so an exploit in one container could break out of that container and affect the host and the other workloads on it. Most popular container runtimes have public repositories of pre-built container images. There is a security risk in using one of these public images, as they may contain exploits or be vulnerable to hijacking by malicious actors. Gartner has predicted a likely tripling of attacks on software supply chains by 2025. Additionally, Docker Hub has previously been shown to be vulnerable to developers pushing malware-laden images up for download and to long-standing bugs that hackers have exploited.

For example, in October 2024, hackers were found to be targeting Docker remote API servers in order to deploy crypto miners on compromised instances. In December 2024, a separate report found malware targeting publicly exposed Docker remote API servers. Even when not being attacked, Docker has had some issues with malware detection. For example, in January 2025, Docker Desktop was blocked on macOS “due to malware warnings after some files were signed with an incorrect code-signing certificate.” Docker addressed another critical vulnerability in August 2025, where a malicious container running on Docker Desktop could access the Docker Engine and launch additional containers without requiring the Docker socket to be mounted, potentially allowing unauthorized access to user files on the host system. Docker was able to offer some temporary workarounds, but incidents like these could further damage Docker’s reputation for security.

Summary

Docker has helped streamline software development cycles, first by setting a new standard for containers with Docker Engine, and later by supporting the advancement of microservice architecture. Over time, Docker has evolved in its approach, attempting to address both enterprise and developer use cases. Since the sale of its enterprise business in 2019, Docker has refocused on providing functionality for developers.

Docker has grown to support over 79K customers and generated $135 million in 2022 revenue. Despite that success, Docker still faces an uphill battle as it attempts to monetize open-source software while also competing with extensive frameworks, like Kubernetes, and differentiating itself from cloud providers, like AWS and Azure, which are forking Docker’s open-source functionality and offering it alongside their own additional services.