Thesis

Nearly every company relies on cloud providers for on-demand servers, storage, containers, and APIs, enabling faster deployment and easier scaling. As of 2022, the global cloud computing market was valued at $446.5 billion and is projected to surpass $1.6 trillion by 2030, growing at a CAGR of 17.4%. The industry's rapid growth is driven by microservices, container tools like Docker and Kubernetes, and API-first design, which break applications into modular components that are easier to develop, deploy, and maintain independently. As the cloud computing industry expands, so do some underlying challenges, namely operational complexity, cost management, and compute capacity.

Developers often encounter challenges when working with traditional cloud service providers due to complex configurations and management requirements. For instance, a lack of training or expertise has been reported to be a significant hurdle in cloud computing adoption, with a steep learning curve associated with these platforms. Many cloud computing platforms also have direct dependencies with other applications, requiring developers to have a deep understanding of both the cloud environment and the applications involved. These complexities introduce the need for developers to be well-versed in using such platforms and can also increase development time and potential errors.

The adoption of AI and generative AI tools, which require much larger datasets, computational power, and infrastructure, has significantly increased cloud expenditures. Enterprises experienced an average rise of 30% in cloud costs in 2024, and 72% of businesses find AI-driven cloud spending unmanageable. Further, as organizations increasingly integrate AI-powered workloads into their operations, they are looking for faster and more efficient computing infrastructure. Using AI requires a significant transformation of a company’s infrastructure, as existing systems are inadequate for handling the large amounts of data and computational power required by AI applications. For example, training OpenAI's GPT-3 model required approximately 355 GPU-years and cost around $4.6 million. Data centers, driven by AI workloads, are projected to account for up to 21% of global electricity demand by 2030, a large increase from 1-2% in 2024.

Modal was founded to provide a smoother developer experience, a cost-efficient pricing model, and fast compute. Modal’s infrastructure is built from scratch, allowing for container launches and computation that the company claims are significantly faster than those of the world’s fastest Graphics Processing Unit (GPU) provider. The infrastructure was also built to target key developer pain points, such as configuration and debugging, in order to improve developer experience and efficiency. Lastly, the usage-based pricing model charges customers solely for the compute resources they consume rather than a constant cost, which matters most when traffic is low.

Founding Story

Source: Redpoint Ventures

Modal was founded in January 2021 by Erik Bernhardsson (CEO). Akshat Bubna (CTO) joined as co-founder in August 2021.

Bernhardsson attended Danderyds Matematikgymnasium, a small program for top math-performing students in Sweden, from 2000 to 2003. He then continued his education at KTH Royal Institute of Technology from 2003 to 2008, where he received his Master’s in Physics. While at KTH, he spent a gap semester at Technische Universität Berlin in 2006. During school, he experimented with various developer products and gained two years of full-time work experience by the time he graduated.

Bubna pursued his undergraduate degree in math and computer science at MIT from 2014 to 2017. He won a bronze medal at the International Olympiad in Informatics (IOI) in 2013 and a gold medal in 2014, becoming the first Indian student to do so. Bubna was also an early engineer at Scale AI.

With prior software engineering internship experience, Bernhardsson started his career as an engineering manager at Spotify, leading the team responsible for data aggregation and analysis, data visualization, optimization, A/B testing, and related work. After two years at Spotify, he joined Graham Capital Management for six months, where he primarily wrote C++ scripts for high-frequency trading. In August 2011, he went back to being an engineering manager at Spotify, where he built up a team to develop a music recommender system from scratch using large-scale machine learning algorithms. In February 2015, he left Spotify again and joined Better.com, a fintech firm, as CTO. Joining a year after the startup was founded, Bernhardsson grew the team to 300, setting up workplace systems, presenting to investors, and developing a lot of the technology himself (especially on the AI/ML side).

Having spent most of his career on data, machine learning, and using existing cloud providers, Bernhardsson experienced the gap in the established offerings first-hand, inspiring him to found Modal. Specifically, he was focused on creating a more seamless developer experience. Bernhardsson started development during the COVID-19 pandemic. His goal, based on his own developer experience, was to make cloud development as good as local development. Quickly realizing he couldn’t use existing systems like Docker and Kubernetes, he spent the first two years developing foundational infrastructure, including things like his own file system, scheduler, and container.

Six months into development, he recruited Bubna to join the team. In Bernhardsson’s eyes, Bubna brought strong technical skills, familiarity with the pain points of data practitioners and developers, product intuition, and an understanding of how to use and deploy tools in unique ways. Together, Bernhardsson and Bubna had two goals when developing: first, faster computing, and second, a Python SDK that would make application development easy by turning normal functions into serverless functions. Bernhardsson used his experience as a developer to guide him as he constructed what he believed to be the “ideal” developer experience for Modal.

The two of them spent a year with no customers and another six months with no revenue, but they had a lot of confidence in what they were building. Developing during the COVID-19 pandemic made the fundraising process easier for Bernhardsson, and he was able to raise money without a solid prototype. Although Modal was started before generative AI took off, the company’s first real traction came when Stable Diffusion was released, enabling Modal to provide a serverless platform for generative AI.

Product

Product Overview

Source: Modal

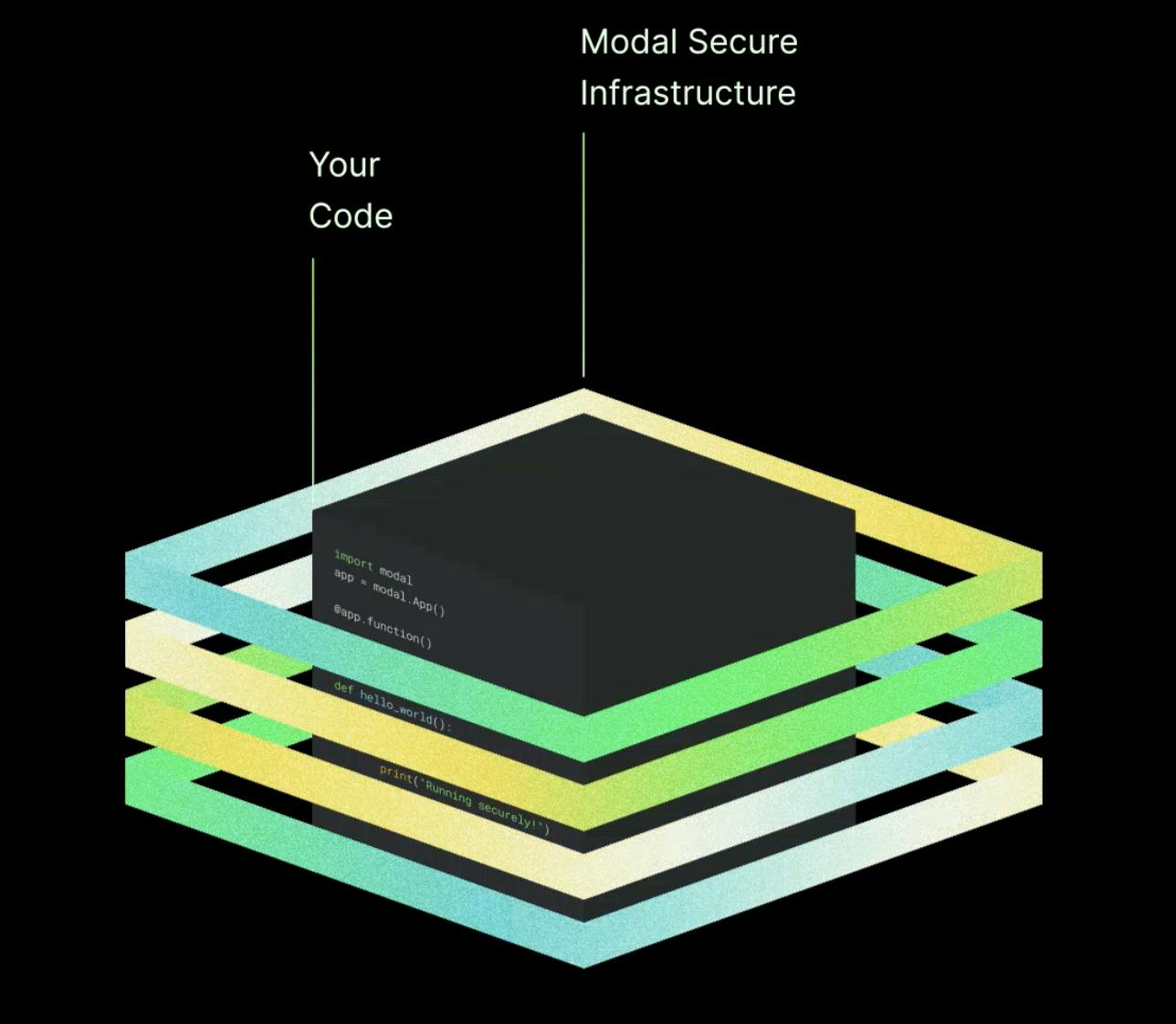

Modal provides a high-performance cloud environment with serverless GPU offerings. The platform is designed to help developers and researchers run compute-intensive workloads like machine learning inference, data processing, and batch jobs without having to configure or manage infrastructure, and offers what the company claims to be almost instantaneous deployment. The team initially built the product in Python to test viability and then rewrote the core infrastructure in Rust to optimize performance and reliability. The innovation in Modal’s product offering comes from its custom Rust-based container runtime, efficient image building, and a custom filesystem implemented with FUSE that relies on lazy loading, meaning file contents are fetched only when they are first read.
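To make the lazy loading idea concrete, here is a minimal Python sketch. It is not Modal's implementation: the `LazyImage` class, the content-hash index, and the in-memory `remote_store` are all hypothetical stand-ins used only to show the pattern of knowing file metadata up front and fetching bytes on first read.

```python
from typing import Callable, Dict


class LazyImage:
    """Toy model of a lazily loaded container image (hypothetical, not Modal's code)."""

    def __init__(self, index: Dict[str, str], fetch: Callable[[str], bytes]) -> None:
        self._index = index                 # path -> content hash, available at launch
        self._fetch = fetch                 # slow remote read, keyed by content hash
        self._cache: Dict[str, bytes] = {}  # content hash -> bytes already fetched
        self.fetch_count = 0                # number of remote reads performed

    def read(self, path: str) -> bytes:
        digest = self._index[path]
        if digest not in self._cache:
            # Contents are pulled only the first time a file is actually read.
            self._cache[digest] = self._fetch(digest)
            self.fetch_count += 1
        return self._cache[digest]


# Assumed remote store: two distinct blobs, one of them shared by two paths.
remote_store = {"h1": b"model weights", "h2": b"unused library"}
image = LazyImage(
    index={"/weights.bin": "h1", "/copy/weights.bin": "h1", "/lib/unused.so": "h2"},
    fetch=remote_store.__getitem__,
)

first = image.read("/weights.bin")
second = image.read("/copy/weights.bin")  # same content hash, served from cache
```

In this sketch the container can start as soon as the index is available, and `/lib/unused.so` is never transferred because nothing reads it.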

Modal handles containerization, autoscaling, and resource provisioning, allowing users to focus on building applications instead of configuring servers. It supports use cases such as generative AI, fine-tuning models, and large-scale batch processing, all with per-second billing and zero idle costs. Modal also offers features like web endpoints, job scheduling, and integrations with tools like Datadog and OpenTelemetry.
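The combination of autoscaling and zero idle costs can be illustrated with a small scale-to-zero rule. This is a simplified sketch under assumed parameters (`inputs_per_container` and `max_containers` are hypothetical names), not a description of Modal's actual scheduler.

```python
import math


def desired_containers(pending_inputs: int, inputs_per_container: int, max_containers: int) -> int:
    """Return how many containers to run for the current backlog."""
    if pending_inputs <= 0:
        return 0  # scale to zero: nothing running means nothing billed
    needed = math.ceil(pending_inputs / inputs_per_container)
    return min(max_containers, needed)


idle = desired_containers(0, inputs_per_container=4, max_containers=100)
light = desired_containers(10, inputs_per_container=4, max_containers=100)
burst = desired_containers(1000, inputs_per_container=4, max_containers=100)
```

Here an idle application runs no containers, ten queued inputs need three containers, and a burst of 1,000 inputs is capped at the assumed limit of 100.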

Use Cases

Model Inference APIs

Modal seeks to enable easier deployment and scaling of large language models (LLMs) like GPT variants without managing infrastructure. Developers can expose models as HTTPS endpoints with a single command, avoiding the need for container setup, server provisioning, or load balancer configuration. One advantage of Modal’s approach that the company points to is fast cold starts: containers load model weights in seconds using an optimized file system, which is much faster than traditional setups. Modal also supports dynamic batching through the @modal.batched() decorator, which automatically groups incoming requests to maximize GPU usage and reduce cost. Additionally, Modal handles automatic resource allocation, providing the required CPU, GPU, and memory for each workload without requiring developers to manage scaling logic or tune infrastructure. These features make Modal well-suited for high-performance LLM applications like chatbots, embeddings APIs, and custom inference services.
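Dynamic batching, as described above, can be sketched in plain Python without any Modal dependency. The function below is an illustration only: `form_batches`, `max_batch_size`, and `wait_ms` are names chosen for this sketch, and it assumes request arrival times are already sorted. A batch closes when it is full or when the wait window since its first request has elapsed.

```python
from typing import List, Sequence


def form_batches(arrivals_ms: Sequence[float], max_batch_size: int, wait_ms: float) -> List[List[int]]:
    """Group request indices into batches by size limit and wait window."""
    batches: List[List[int]] = []
    current: List[int] = []
    window_start = 0.0
    for i, t in enumerate(arrivals_ms):
        # Close the open batch if it is full or its wait window has expired.
        if current and (len(current) >= max_batch_size or t - window_start > wait_ms):
            batches.append(current)
            current = []
        if not current:
            window_start = t  # the first request of a batch starts its window
        current.append(i)
    if current:
        batches.append(current)
    return batches


# Four requests arrive close together, then two more after a quiet period.
batches = form_batches([0, 1, 2, 3, 50, 51], max_batch_size=3, wait_ms=10)
```

With these inputs the six requests are served in three GPU calls instead of six, which is the utilization gain batching is meant to deliver.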

Fine-Tuning

Modal also supports training and fine-tuning of machine learning models by giving developers instant access to powerful GPUs like A100s and H100s without needing to manage any infrastructure. Containers are created within seconds, and compute resources are automatically scaled based on the training job’s needs. Developers can run multiple experiments in parallel using Python without worrying about idle costs or cluster setup. Modal also supports mounting datasets and model weights from persistent cloud volumes, streamlining workflows across training runs. Modal offers live cloud debugging through an interactive shell and support for breakpoints, allowing developers to test and troubleshoot models directly in the cloud. This makes it easy to iterate quickly on training pipelines and focus on improving model performance.

Job Queues & Batch Processing

Modal simplifies large-scale batch workloads like video processing, data embedding, and scheduled analytics. Developers can write Python functions and run them as thousands of parallel jobs without managing servers or containers. Modal provides a built-in scheduler that lets users define cron jobs, retries, and batching behavior using Python decorators, removing the need for external orchestration tools like Airflow or Celery. Jobs scale automatically based on demand, with resources spun down when not in use, improving efficiency and cost. Debugging is cloud-native, with live shells and logs available during execution, and observability is built-in through integrations with Datadog and OpenTelemetry, making it easy to monitor performance and spot failures in real time. These features are especially useful for teams running recurring or high-volume backend jobs.
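The decorator-based style described above can be illustrated with a generic retry decorator. This is a minimal sketch in standard-library Python, not Modal's SDK: `with_retries`, `max_retries`, and `flaky_job` are hypothetical names, and a real platform would also handle scheduling and placement.

```python
import functools
import time


def with_retries(max_retries: int, delay_s: float = 0.0):
    """Re-run the wrapped function up to max_retries extra times on failure."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(max_retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt == max_retries:
                        raise  # retries exhausted, surface the last error
                    time.sleep(delay_s)
        return wrapper
    return decorator


attempts = {"count": 0}


@with_retries(max_retries=3)
def flaky_job(x: int) -> int:
    # Fails twice with a simulated transient error, then succeeds.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("transient failure")
    return x * 2


result = flaky_job(21)
```

The retry policy lives next to the function definition, so no separate orchestration configuration is needed for this behavior.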

Modal supports high-performance image, video, and 3D processing tasks without the complexity of GPU management. Developers can deploy models like Stable Diffusion for image generation, YOLO for object detection, and Tesseract OCR for document parsing with a few lines of code. These pipelines can run as APIs or batch jobs, scaling automatically based on demand. Modal eliminates the need for setting up dependencies or managing environments; everything runs in isolated containers with no configuration required. This makes it easy to build and deploy custom computer vision or document processing systems that are production-ready and cost-efficient. Modal takes care of infrastructure development, so teams can focus on their models and workflows.

Sandboxed Code Execution

Modal allows for the safe execution of untrusted or user-submitted code by running each task in isolated, temporary containers, which are ideal for online coding platforms, data science notebooks, educational tools, or any app requiring dynamic code evaluation. Each run, known as a sandbox, is fully isolated, with restricted permissions and resources, ensuring one job cannot interfere with another. Modal supports interactive sessions, such as Jupyter-like notebooks or live coding environments, and offers built-in debugging tools like live shells and logs, making it easy to trace issues during execution. With secure environments, quick spin-up, and low overhead, Modal simplifies building platforms that execute arbitrary code safely and reliably.
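The per-run pattern described above (a fresh environment for each submission, a resource limit, and captured output) can be sketched with the Python standard library. This is only an illustration: a child process is not a security boundary and offers none of the container isolation the text attributes to Modal, and `run_untrusted` is a hypothetical helper.

```python
import subprocess
import sys


def run_untrusted(code: str, timeout_s: float = 5.0) -> dict:
    """Run a code snippet in a separate interpreter with a wall-clock limit."""
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode, ignores user site and env vars
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # The child is killed once the limit is exceeded.
        return {"ok": False, "stdout": "", "error": "timeout"}
    return {"ok": proc.returncode == 0, "stdout": proc.stdout, "error": proc.stderr}


good = run_untrusted("print(1 + 1)")
bad = run_untrusted("raise SystemExit(3)")
stuck = run_untrusted("while True: pass", timeout_s=0.5)
```

Each call starts from a clean interpreter, so a failing or runaway submission does not affect the next one.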

Computational Bio

Modal supports compute-intensive workloads in bioinformatics and life sciences, such as protein folding, molecular modeling, and large-scale simulation tasks. Researchers can launch GPU-accelerated jobs on demand without setting up clusters or managing infrastructure. For example, the team behind Chai-1, an open-source protein folding model, uses Modal to run inference and training workflows at scale. Modal’s support for mounting cloud volumes makes it easy to work with large biological datasets across multiple jobs, while autoscaling ensures that resources are provided only when needed. The platform also provides built-in support for debugging and observability, helping researchers iterate quickly and catch issues during long-running experiments. With fast provisioning, GPU support, and parallel execution, Modal offers a flexible and efficient backend for computational biology projects.

Value Proposition

Modal differs from current incumbent providers (AWS Lambda, Kubernetes, Docker, etc.) in three ways: developer experience, infrastructure, and cost.

Developer Experience: Modal simplifies the developer experience by allowing APIs, web services, and functions to be deployed as HTTPS endpoints with a single command, with no containerization, server setup, or configuration required. It automatically allocates CPU, GPU, and memory based on workload demands, eliminating the need for complex autoscaling or infrastructure tuning. Developers can schedule tasks using Python decorators without relying on tools like Airflow or Celery, making cron jobs, retries, and batching easy to manage. Modal also streamlines debugging with an interactive cloud shell and breakpoint support, and integrates with observability tools like Datadog and OpenTelemetry for real-time logging and monitoring.

Infrastructure: Modal’s infrastructure is built entirely from scratch in Rust, enabling near-instant container launches, often under a second, compared to the multi-minute delays seen in other serverless systems. Developed over 1.5 years, Modal can scale to 100 GPUs almost instantly, a capability reportedly unmatched by other providers, and eliminates cold starts typical of Kubernetes or Docker. This speed powers efficient feedback loops for rapid iteration and debugging, and supports AI/ML workloads that require massive, on-demand compute. Around 90% of Modal’s usage is for AI/ML model deployment, where its automatic resource allocation removes the need for manual provisioning or cluster management, simplifying large-scale, high-performance compute.

Cost: Modal also implements a usage-based pricing model that charges customers solely for the compute resources they consume, measured by CPU cycles, seconds, and GPU usage. This approach means users incur costs only when the model is actively being used, as opposed to being charged even when the model has no traffic. In contrast, some competitors use a more static pricing structure, billing customers even during periods of low or no usage.

While Modal currently focuses on container execution, Bernhardsson’s vision is to build an entire stack to support the end-to-end process of creating and deploying an AI/ML model, including aspects like notebook support and training. Modal has already built its own file system and is now focusing on snapshotting GPU and CPU memory, allowing stored states to be saved to disk and restored from a memory dump. This approach eliminates the need for initialization by directly copying memory blocks into the CPU and GPU, further reducing startup times and improving performance.

Market

Customer

Modal primarily serves startups or enterprises that are heavily leveraging AI/ML across generative AI, biotech, and industries using 3D rendering. Modal users are data teams and engineers within the company, especially those working on AI. The focus on AI/ML comes from the large amounts of GPU power and fast processing required to run these programs. Modal’s usage-based pricing model can be more attractive to startups that are still building a customer base and have fluctuating or low model usage rates. Some enterprises may also have fluctuating usage rates. For example, a chatbot would have higher usage rates during the day but lower rates at night.

Modal offers a serverless platform, allowing developers to focus on code development rather than infrastructure management and scaling. It is also working to decrease the processing time of AI workloads. For example, Modal signed a partnership with AWS to combine AWS’ infrastructure with Modal’s serverless platform, making it easier and faster for companies to build and scale AI applications.

Source: Modal

Some of Modal’s biggest enterprise customers include Substack, Ramp, and Suno.

For example, Ramp utilized Modal to fine-tune LLMs and optimize its receipt processing workflow, automating the steps of capturing, extracting, validating, and categorizing receipt data for financial management. That process reduced the need for manual intervention by 34%. Modal's flexibility enabled Ramp to run multiple model experiments in parallel, which led to a significant improvement in the accuracy of its receipt management system. Modal's infrastructure support also allowed Ramp to scale more cost-effectively, saving approximately 79% compared to other major LLM providers. Ramp also used Modal to speed up batch processing tasks, such as stripping Personally Identifiable Information (PII) from 25K invoices, reducing a three-day task to just 20 minutes at a fraction of the cost.

OpenPipe is one of Modal’s smaller customers. OpenPipe is a Seattle-based startup founded in 2023 that specializes in fine-tuning LLMs to cater to specific client needs. Kyle Corbitt, the CTO, describes his experience with Modal as “the easiest way to experiment as we develop new fine-tuning techniques. We've been able to validate new features faster and beat competitors because of how quickly we can try new ideas.”

Market Size

Bernhardsson breaks down market needs into three tiers — API providers, container providers, and raw infrastructure providers:

API Providers: These providers offer pre-built AI APIs, making it easy for developers to integrate AI features without managing infrastructure (e.g., OpenAI, Anthropic, Hugging Face Inference API). These APIs are primarily used by developers who don’t want custom models and can adapt a general wrapper to their use case. The global API marketplace market was valued at approximately $18 billion in 2024 and is projected to expand at a CAGR of 18.9% from 2025 to 2030, reaching around $49.5 billion by 2030. The API management market was valued at $4.3 billion in 2023 and is expected to grow to $34.2 billion by 2032, reflecting a CAGR of 25.9% during the forecast timeframe. This growth is primarily driven by increasing adoption of cloud services, a need for businesses to connect separate systems, and a growing demand for flexible and secure API solutions that help companies improve and modernize their operations, typically with AI.

Container Providers: These platforms allow users to run arbitrary code in a containerized environment, offering more flexibility than APIs. Developers have more control over AI models and can customize models while staying in a managed execution environment such as Modal, Banana.dev, or Replicate. These platforms are primarily used by developers who want to customize existing models or create their own models without having to manage full infrastructure. The global application container market was estimated at $5.9 billion in 2024 and is anticipated to grow at a CAGR of 33.5% from 2025 to 2030, potentially reaching $31.5 billion by 2030. The Container as a Service (CaaS) market is projected to register a CAGR of 35% during the forecast period. This growth is driven by the need for scalable application deployment solutions, a shift to more flexible software designs, the need for better ways to manage development and operations, and the demand for adaptable and scalable cloud-based solutions.

Raw Infrastructure Providers: These are bare-metal GPU or VM providers that give full control over the hardware but require users to manage everything else (e.g., AWS EC2, Lambda Labs, RunPod, CoreWeave). They are primarily used by advanced teams that are building custom AI training/inference stacks, such as AI research labs or large-scale production teams, which gain complete flexibility over infrastructure at the cost of higher complexity. The global containerized data center market was valued at $13.6 billion in 2024 and is expected to grow at a CAGR of 27.4% from 2025 to 2030, aiming for a market size of $56 billion by 2030. The container market was valued at $120.7 billion in 2023 and is projected to reach $146.8 billion by 2031, reflecting a CAGR of 5% during the forecast period. Growth in this sector is mostly driven by the increasing demand for data storage, the expansion of global trade requiring efficient shipping solutions, and the need for scalable infrastructure to support technologies like AI and IoT.
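The market figures above rely on compound annual growth rates (CAGR). As a quick sanity check, the standard formula can be applied to two of the figures cited in this report: the API management market ($4.3 billion in 2023 to $34.2 billion in 2032) and the cloud computing market from the thesis ($446.5 billion in 2022 growing at 17.4% through 2030). The helper names `cagr` and `project` are chosen for this sketch.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, end value, and period."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at the given annual rate."""
    return start_value * (1 + rate) ** years


# API management market, in billions of dollars, 2023 to 2032.
api_mgmt_cagr = cagr(4.3, 34.2, 2032 - 2023)

# Cloud computing market, in billions of dollars, 2022 compounded to 2030.
cloud_2030 = project(446.5, 0.174, 2030 - 2022)
```

Both results line up with the cited numbers: the implied API management growth rate is about 25.9%, and the cloud projection lands just above $1.6 trillion.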

Competition

Modal could be seen as an alternative to a variety of competitors, including companies like Salesforce, AWS Lambda, Kinsta, Cloudways, and DigitalOcean. However, Modal is uniquely positioned at the second tier of “Container Providers” mentioned above. Modal differentiates itself from other companies at this tier with its developer experience, infrastructure, and cost.

Docker

Founded in 2008, Docker provides a platform for developers to build, share, and run applications using containerization technology. The containers package software and its dependencies, ensuring consistency across environments and simplifying deployment. Docker has raised a total of $435.9 million in funding as of May 2025, with investors including Bain Capital Ventures, Tribe Capital, Benchmark, and Insight Partners. In March 2022, the company raised a $105 million Series C, led by Bain Capital Ventures.

Docker and Modal both offer containerized environments to help developers deploy applications more easily. However, Modal focuses heavily on real-time editing of code thanks to fast computation, and its platform is designed to handle large-scale, enterprise-level AI workloads. Docker, on the other hand, is often used for personal projects or smaller-scale deployments, making it more suitable for local development or smaller teams, but it may lack the level of real-time computation and serverless infrastructure that Modal provides. Additionally, Docker’s pricing model is more static and does not offer the flexible, usage-based pricing that Modal uses, which can be more cost-efficient for businesses with fluctuating demands.

Kubernetes

First released in 2014, Kubernetes is an open-source platform for automating the deployment, scaling, and management of large containerized applications. It groups containers into logical units for easy management and discovery, enhancing the efficiency of application orchestration. The project is maintained under the Cloud Native Computing Foundation (CNCF).

Both Modal and Kubernetes focus on containerization to streamline application deployment. However, Modal differentiates itself with a developer-centric approach, offering real-time code editing enabled by fast computation, serverless infrastructure, and a usage-based pricing model.

Portainer

Founded in 2017, Portainer is an easy-to-use interface for managing containers, including Docker and Kubernetes environments. It focuses on simplifying container management tasks like deployment, networking, and monitoring. The company has raised $13.4 million in funding from investors such as Bessemer Venture Partners and Movac.

Like Modal, Portainer focuses on containerization but differs in that it primarily targets container management for small to medium teams, while Modal provides serverless infrastructure, real-time code editing, and a usage-based pricing model ideal for enterprise-level AI applications.

AWS Fargate

AWS Fargate was introduced in 2017 as a feature within Amazon Web Services (AWS). It is a serverless compute engine for Amazon ECS and EKS that lets users run containers without managing servers or EC2 instances. It abstracts the underlying infrastructure, enabling users to define and run container tasks with fine-grained resource settings, but requires deeper integration with AWS networking, IAM, and cluster configuration.

While AWS Fargate removes the need to manage servers, it still requires configuring ECS or EKS clusters, networking, IAM roles, and task definitions. Modal, in contrast, allows developers to deploy and scale code with a single Python decorator and no infrastructure setup. It also provides GPU-backed compute in under a second, which is ideal for AI/ML workloads that Fargate isn’t optimized to handle natively.

Azure Container Apps

Azure Container Apps was launched as a part of Microsoft Azure in May 2022. It is a fully managed serverless container service designed to run microservices and background tasks. It supports autoscaling based on events (HTTP requests, queues, etc.) and includes built-in features for Dapr, service discovery, and ingress, making it well-suited to independently deployed, modular services that communicate via APIs in the Azure ecosystem.

Azure Container Apps supports serverless microservices and autoscaling, but remains tightly integrated into the Azure ecosystem and often requires YAML configurations, Dapr setup, and external workflow tools. Modal simplifies this by offering a Python-native interface with built-in job scheduling, automatic scaling, and real-time debugging, without needing to manage containers or infrastructure. This makes Modal a better fit for fast-moving teams that are building and deploying computation-heavy applications.

Business Model

Source: Modal

Modal follows a usage-based pricing model, where users only pay for the resources they actually use, without incurring charges for idle compute time.

GPU Pricing: Pricing for GPUs varies based on the type of GPU, with rates starting at $0.000164 per second for Nvidia T4 GPUs and going up to $0.001097 per second for Nvidia H100 GPUs.

CPU Pricing: Costs for CPU usage start at $0.0000131 per core per second, with a minimum of 0.125 cores per container.

Memory Pricing: Memory is charged at $0.00000222 per GiB per second.
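The per-second rates above can be combined into a rough cost estimate for a single job. The sketch below assumes that GPU, CPU, and memory charges are simply added together for the seconds a container runs; that additive model and the `job_cost` helper are assumptions for illustration, while the rates are the ones listed above.

```python
GPU_RATE_PER_S = {"T4": 0.000164, "H100": 0.001097}  # dollars per GPU-second
CPU_RATE_PER_CORE_S = 0.0000131                      # dollars per core-second
MEM_RATE_PER_GIB_S = 0.00000222                      # dollars per GiB-second
MIN_CORES = 0.125                                    # minimum cores per container


def job_cost(seconds: float, cores: float, mem_gib: float, gpu: str = "") -> float:
    """Estimate the cost in dollars of one container running for `seconds`."""
    billed_cores = max(cores, MIN_CORES)
    per_second = billed_cores * CPU_RATE_PER_CORE_S + mem_gib * MEM_RATE_PER_GIB_S
    if gpu:
        per_second += GPU_RATE_PER_S[gpu]
    return seconds * per_second


# A 10-minute job on one H100 with 4 CPU cores and 16 GiB of memory.
h100_job = job_cost(600, cores=4, mem_gib=16, gpu="H100")

# Equivalent hourly GPU-only rates, for comparison with hourly-priced clouds.
t4_per_hour = GPU_RATE_PER_S["T4"] * 3600
h100_per_hour = GPU_RATE_PER_S["H100"] * 3600
```

Under these assumptions the 10-minute H100 job costs about $0.71, and the GPU-only rates work out to roughly $0.59 per hour for a T4 and $3.95 per hour for an H100.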

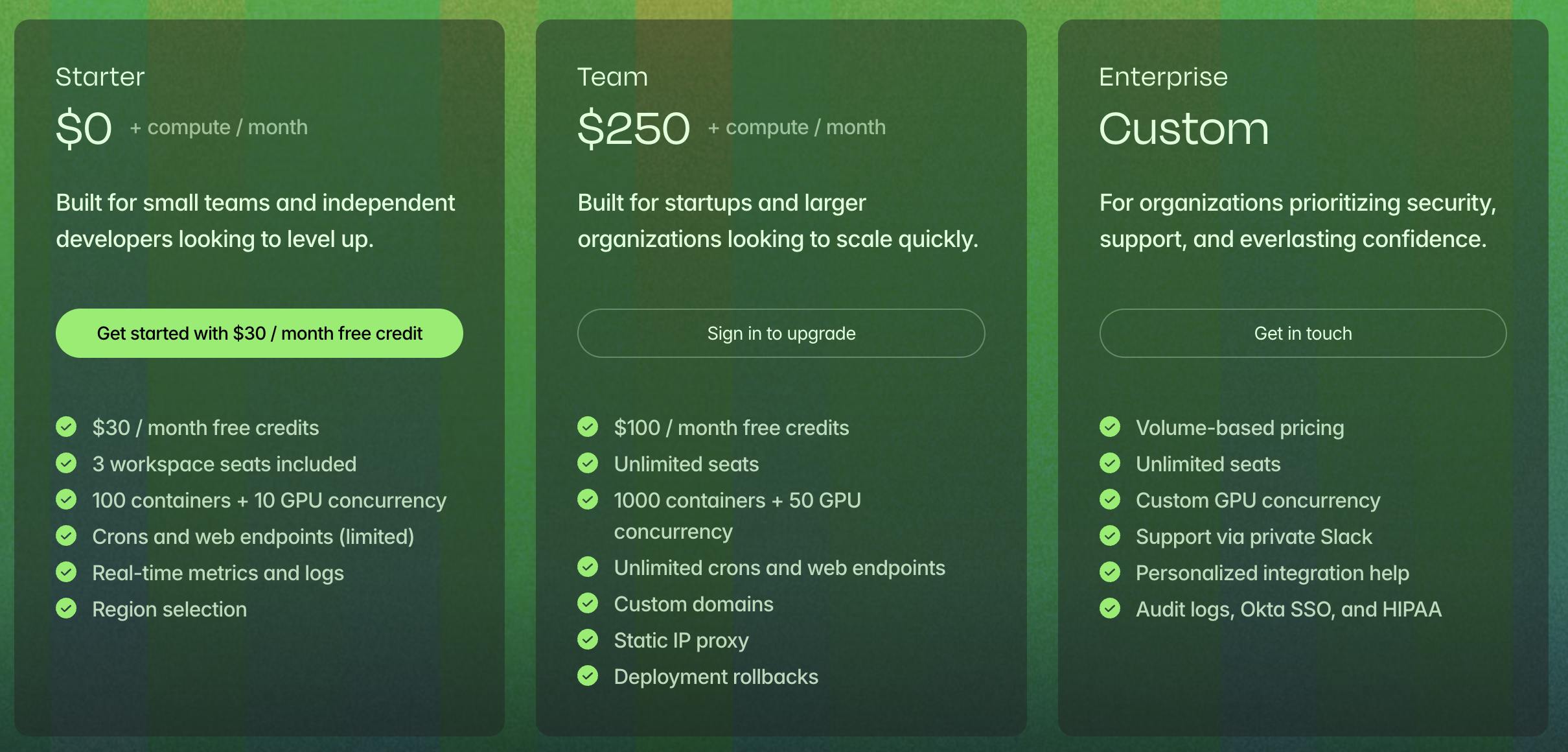

Modal offers three pricing plans:

Starter Plan: Free for up to $30/month in credits, 100 containers, 10 GPU concurrency, and limited features.

Team Plan: Priced at $250/month, includes unlimited seats, 1000 containers, 50 GPU concurrency, and more advanced features like custom domains and deployment rollbacks.

Enterprise Plan: Custom pricing for larger organizations, offering volume-based pricing, unlimited seats, custom GPU concurrency, and dedicated support.

Startups and researchers can also apply for up to $50K in free credits. Additionally, Modal supports AWS Marketplace usage for committed spend.

Traction

For the first few years after its founding in 2021, Modal focused on developing its core infrastructure. In October 2023, the company announced its official launch, and early adopters included Ramp, Substack, and SphinxBio. As of December 2023, one unverified estimate put Modal’s annual revenue at approximately $42K. By April 2024, Modal had reached eight figures in revenue, tripled its headcount, scaled its infrastructure to flexibly support thousands of GPUs, and expanded its customer base to include over 100 large enterprises.

Valuation

In October 2023, Modal raised a $16 million Series A at a pre-money valuation of $138 million from Amplify Partners and Redpoint. In April 2024, the company raised an additional $25 million from Left Lane Capital, with total funding raised of $32 million.

Key Opportunities

AI Infrastructure Demand

The global AI infrastructure market has been expanding rapidly due to increasing demand for AI applications across industries like finance, healthcare, retail, and entertainment. The market is projected to grow from $28.7 billion in 2022 to $96.6 billion by 2027. This growth creates the need for scalable, secure, and efficient infrastructure capable of supporting high-performance computing (HPC) workloads at scale.

Specifically, there has been a shift to inference computing (making real-time predictions using trained AI models), which is used in technology like autonomous vehicles and real-time recommendation systems. The AI inference market is projected to expand from $106.2 billion in 2025 to $255 billion by 2030. These applications require immediate data processing and energy-efficient computation, which Modal’s platform is well-suited for.

The cloud is the leading environment for AI and machine learning development, with nearly 9.7 million developers worldwide running their AI workloads with cloud infrastructure. More than 94% of organizations with more than 1K employees have a significant portion of their workloads in the cloud. Major tech companies are committing over $300 billion to AI infrastructure in 2025. Companies like Amazon, Alphabet, Microsoft, and Meta are ramping up investments in AI-specific hardware, data centers, and other infrastructure that supports machine learning and AI workloads. These investments reflect the growing recognition of the importance of AI and an opportunity for Modal to jump in.

Regulatory Changes

The government is putting increased emphasis on building out AI infrastructure and leading initiatives to create more data centers, chip plants, and energy infrastructure. Such policies can create a favorable environment for companies like Modal to expand their services. Additionally, strict data protection laws are being applied to AI applications globally, with regulations such as the EU's GDPR setting guidelines for automated decision-making and ensuring that AI systems comply with data privacy rights. Modal must navigate and comply with these regulations.

Ethics & AI

Consumers and businesses are increasingly concerned about the ethical implications of AI. In 2023, 52% of Americans expressed concern about AI and supported AI regulation. The government is also starting to implement orders on AI safety. Bernhardsson does not support increased regulation at this stage, stating that “I don’t think we should regulate anything until we see the damage it can cause.” Even so, Modal’s serverless architecture is well-positioned to support users navigating evolving compliance requirements. Features like containerized execution, ephemeral jobs, and isolated environments offer the technical foundations for auditability, governance, and permission controls, which are critical for companies operating in healthcare, finance, or other sensitive domains. Modal can differentiate itself as a trusted compute layer for AI developers who need both performance and control.

Key Risks

Dependence on NVIDIA GPUs

Modal's serverless platform is built to leverage GPU acceleration, primarily utilizing Nvidia’s hardware. While Nvidia has been a dominant provider in the AI training space, its position in the inference market is being challenged by both established companies and startups offering more cost-effective and specialized solutions.

Startups like Groq, Cerebras, and SambaNova are developing AI inference chips that promise higher efficiency and lower costs compared to traditional GPUs. These companies focus on optimizing inference workloads, which are becoming more prevalent as AI applications move from training to deployment. For instance, Groq's architecture is designed to deliver low-latency inference, making it suitable for real-time AI applications.

Established players like AMD and Intel are also entering the AI inference space with their own hardware solutions. AMD's MI300 series and Intel's Gaudi processors are tailored for AI workloads. In addition, cloud service providers are contributing to this shift by developing their own AI inference chips. AWS has introduced Inferentia, a custom chip designed to deliver high performance at a lower cost for AI inference tasks. AWS claims that Inferentia offers up to 2.3 times higher throughput and up to 70% lower cost per inference than comparable GPU instances.

The emergence of these alternatives presents a risk for Modal. If the industry shifts towards these new inference solutions, Modal may need to adapt its platform to support a broader range of hardware. Failure to do so could limit its appeal to customers seeking the most cost-effective and efficient AI inference options.

AI Ethics, Privacy, & Intellectual Property

Modal's users include AI/ML developers, and approximately 90% of its workloads are dedicated to machine learning applications such as LLMs, embeddings, and computer vision tasks. This focus exposes Modal to the escalating legal and reputational challenges associated with AI technologies.

The use of copyrighted materials in training AI models has become an ongoing legal issue. High-profile lawsuits have been filed against major AI developers like OpenAI and Meta, alleging unauthorized use of copyrighted content to train their generative AI models. For instance, authors and media organizations have accused these companies of infringing on their IP rights by using their works without proper licensing.

Additionally, AI systems often process vast amounts of personal data, raising concerns about compliance with data protection regulations such as GDPR, which sets strict requirements for data processing, storage, and consent. Non-compliance can result in significant penalties. AI companies must ensure that their data handling practices align with these regulations to avoid legal repercussions.

Lastly, the deployment of AI technologies has led to ethical dilemmas, including biases in AI decision-making, a lack of transparency, and potential misuse. Missteps in AI, such as creating deepfakes or biased algorithms, are among the top threats to corporate brand reputations. Companies associated with unethical AI practices risk losing public trust and facing backlash from stakeholders.

Given these challenges, Modal must proactively address legal and ethical considerations in its operations. Implementing robust compliance frameworks, ensuring transparency in AI processes, and engaging with customers on ethical AI use are essential steps to mitigate potential risks.

Containerization Security Concerns

Modal’s platform is built around containerization, which allows for rapid deployment and scalability of compute jobs. However, containerization raises data security and privacy concerns because workloads share underlying resources. Issues like insecure container images and misconfigured container runtimes could allow unauthorized access to sensitive data, leading to data breaches.

Because containers share the host OS kernel, a single weakness, such as a misconfigured runtime or an insecure image, can give an attacker a path to multiple workloads on the same host rather than just one. The exposure is widespread: in 2023, 87% of container images were found to have critical or high-severity vulnerabilities.

Modal must also ensure its containerized infrastructure aligns with global data protection laws like GDPR and CCPA. For example, ephemeral compute environments, one of Modal’s core strengths, may make it harder to provide audit trails or demonstrate data retention practices, which are required for compliance. Modal would need to provide enterprise customers with guarantees around data residency, logging, and isolation, especially in regulated industries like finance and healthcare.
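To make the audit-trail concern concrete, the sketch below shows one generic way an ephemeral job could leave a durable, tamper-evident record behind after its container is gone. It is an illustration under stated assumptions, not Modal's API or implementation: the field names (`job_id`, `image_digest`, `region`) and the helper functions are hypothetical, and an in-memory list stands in for whatever durable log store a real platform would use.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


@dataclass(frozen=True)
class AuditRecord:
    # All field names are hypothetical, chosen for illustration only.
    job_id: str        # identifier of one ephemeral job
    image_digest: str  # digest of the container image that ran
    region: str        # where the job executed (data residency evidence)
    started_at: str    # ISO 8601 timestamps supplied by the caller
    finished_at: str
    prev_hash: str     # hash of the preceding record, forming a chain


def record_hash(record: AuditRecord) -> str:
    """Return the SHA-256 hash of the record's canonical JSON form."""
    payload = json.dumps(asdict(record), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(log: list, **fields) -> AuditRecord:
    """Append a record that links to the hash of the current last record."""
    prev = record_hash(log[-1]) if log else GENESIS_HASH
    record = AuditRecord(prev_hash=prev, **fields)
    log.append(record)
    return record


def verify_chain(log: list) -> bool:
    """Check that every record links to the hash of the one before it.

    Editing any record other than the last breaks a later link. The hash
    of the last record would need to be anchored somewhere external to
    detect changes to it.
    """
    expected = GENESIS_HASH
    for record in log:
        if record.prev_hash != expected:
            return False
        expected = record_hash(record)
    return True
```

In this sketch, each job would call `append_record` just before its environment is torn down, and an auditor could later run `verify_chain` over the stored log. The design choice is that the evidence outlives the compute: the container can remain ephemeral while the record of what ran, where, and when is retained under whatever policy the customer's regulator requires.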

For larger customers, especially those in compliance-sensitive sectors, Modal’s success depends on its ability to prove that its containerized infrastructure meets enterprise-grade security and regulatory standards. Failure to do so could limit its adoption in exactly the markets where AI workloads are most likely to scale.

Summary

Modal offers a serverless compute platform aimed at addressing common pain points in AI infrastructure, including slow deployment times, high operational complexity, and inefficient cost structures. Built from scratch with a Rust-based backend, the platform supports rapid container launches, dynamic scaling, and GPU-intensive workloads.

Modal targets developers and data teams in AI-driven industries, providing tools for model inference, fine-tuning, batch processing, and sandboxed execution, with pricing based on actual resource consumption. The company has raised $32 million as of April 2024, reports usage across more than 100 enterprise customers, and positions itself within a growing market for container-based AI infrastructure projected to reach over $96 billion by 2027. Modal's opportunity going forward depends on its ability to meet increasing demand for AI applications while managing the associated compliance and security risks.