Thesis

Advances in multimodal AI are expanding human–machine interaction beyond text-based chatbots toward real-time voice systems that process not only words but also vocal characteristics such as tone, prosody, and affect. Prosody refers to the rhythm, stress, and intonation of speech, while affect describes the emotional signals conveyed through voice and expression. This shift is occurring alongside growth in the affective computing market, which includes voice and emotion recognition technologies and was valued at $62.5 billion in 2023. As of 2023, industry projections estimated the market could reach $388.3 billion by 2030, representing a reported compound annual growth rate of approximately 30.6% from 2024 to 2030.

Across sectors, organizations are increasingly noting that AI systems may need to account for emotional cues in addition to linguistic content to support more effective interactions and user satisfaction, as reflected in market analyses of emotion detection and recognition technologies. Academic research similarly suggests that sensitivity to emotional context can be important for safe and effective AI deployment, particularly in higher-risk settings such as healthcare, education, and customer support, where misunderstandings may carry greater consequences.

Despite growing interest in emotionally responsive systems, much of mainstream conversational AI remains largely transactional. As of June 2024, widely deployed assistants such as Siri and Alexa were reported to rely primarily on predefined responses and rule-based tone modulation, with ongoing limitations in areas such as turn-taking, long-term context retention, and recognition of emotional signals, even after more than a decade of development. User reports in 2024 and 2025 suggested that advanced large language models, including GPT-4–based voice systems, had difficulty interpreting emotional nuance or expressing affect through speech. While some companies, such as ElevenLabs and Play.ht, focus on expressive text-to-speech generation, and others offer standalone emotion detection APIs, relatively few attempt to combine these capabilities within a single system.

Hume AI focuses on integrating emotion recognition and emotion-aware speech generation within real-time voice models. Its systems are trained on multimodal datasets derived from prior affective science research. If effective at scale, this integrated approach could support more emotionally adaptive voice interactions, though its significance will depend on demonstrated performance and adoption relative to modular alternatives.

Founding Story

Hume AI was founded in March 2021 by Alan Cowen (CEO, Chief Scientist) to develop voice AI models designed to understand and convey emotional intelligence.

Cowen holds a PhD in Psychology from UC Berkeley and previously led the Affective Computing team at Google AI. Cowen’s academic work laid the foundations for semantic space theory, a computational approach to understanding how nuances of voice, face, body, and gesture are central to human connection and emotional experience. This framework forms the backbone of Hume AI’s models and approach to emotion understanding. The founding team also includes John Beadle (Founding Investor, CFO, Board Member), a co-founder and managing partner at Aegis Ventures, and Janet Ho (COO), formerly a managing partner at Aegis Ventures with operational and product expertise from Zynga and Rakuten.

Cowen founded Hume AI after becoming increasingly concerned that AI technologies, such as voice assistants and large language models, lacked sensitivity to human emotion and well-being. He left Google in 2021 to start Hume AI with the mission of building AI systems “optimized for human well-being.” The name “Hume AI” references one of David Hume’s ideas that emotions drive choice and well-being, framing the company’s focus on measuring, understanding, and improving emotional experience through AI. As Union Square Ventures’ Andy Weissman describes:

“The company aims to do for AI technology what Bob Dylan did for music: endow it with EQ and concern for human well-being. Dr. Alan Cowen, who leads Hume AI’s fantastic team of engineers, AI scientists, and psychologists, developed a novel approach to emotion science called semantic space theory. This theory is what’s behind the data-driven methods that Hume AI uses to capture and understand complex, subtle nuances of human expression and communication—tones of language, facial and bodily expressions, the tune, rhythm, and timbre of speech, ‘umms’ and ‘ahhs.’”

Product

Product Overview

Hume AI offers a suite of models and APIs, along with a developer platform, that enable companies and developers to build emotionally intelligent voice- and expression-based experiences. As of January 2026, the company’s three core products were the Empathic Voice Interface (speech-to-speech), Octave (text-to-speech), and the Expression Measurement API.

These products build on Cowen and the team’s extensive data-driven emotion research, which encompasses millions of human reactions to videos, music, and art. The team has also analyzed expressive patterns in historical sculpture and applied deep learning to global video datasets, uncovering more than 30 dimensions of emotional meaning. Its research spans semantic space theory, which demonstrates that emotions exist on gradients and blends across cultures, as well as the central role of vocal prosody in emotional communication. In its models, Hume AI employs a technique known as “reinforcement learning from human expression” (RLHE), which hones outputs based on the preferred qualities of any human voice.

The company had published over 40 articles with over 3K citations as of January 2026. Hume AI states that its research is conducted under the ethical framework of the Hume Initiative, which sets out principles such as beneficence, emotional primacy, transparency, inclusivity, and consent, with the aim of prioritizing emotional well-being and avoiding misuse in AI deployment. Together, these research foundations and ethical guardrails underpin the capabilities of Hume AI’s core products.

Empathic Voice Interface (EVI)

Source: Hume AI

EVI is Hume AI’s flagship speech-to-speech (STS) product, released in conjunction with their Series B funding announcement in March 2024. Trained on data from millions of human interactions, EVI accepts live audio input and returns both generated audio and transcripts augmented with measures of vocal expression. The system supports real-time streaming via WebSocket, analyzing tone, prosody, and language before producing a generated voice output. It uses Hume AI’s prosody model to evaluate the tune, rhythm, and timbre of speech, enabling more contextually and emotionally aware responses.

EVI 3, released in May 2025, allows users to speak to any of the more than 100K custom voices created on the platform, each with an inferred personality and its own natural tone of voice based on user prosody and language. EVI 3 delivers responses in under 300 ms, while its practical latency, defined as the interval between the end of user speech and the assistant’s reply, is 1.2 seconds, outperforming OpenAI’s GPT-4o and the Gemini Live API. The system can also stop immediately when a user interjects and resume with appropriate context. As of January 2026, Hume AI’s newest model, EVI 4-mini, adds multilingual support across English, Japanese, Korean, Spanish, French, Portuguese, Italian, German, Russian, Hindi, and Arabic.

EVI can be applied across a range of applications. Users can simulate interviews or coaching sessions with dynamic tone adjustment, build emotionally aware digital companions for seniors, kids, or wellness contexts, and create digital assistants that modulate tone. EVI is also integrated into Hume AI’s iOS offering, enabling real-time voice interaction on mobile devices.

Octave

Source: Hume AI

Octave is Hume AI’s omni-capable text-to-speech (TTS) and speech-language engine, described as the first TTS model that “understands what it’s saying.” Unlike traditional TTS systems, Octave interprets the meaning and context of text, allowing it to act out characters, shift emotional tone, and deliver expressive, nuanced speech. The model infers when to whisper, shout, or speak calmly based on how the script shapes the tune and rhythm of speech.

Octave 2, released in October 2025, was 40% faster and more efficient than the first Octave model from December 2024, generating high-quality audio with latencies as low as roughly 100 ms. Users can generate custom voices by prompt or by cloning a short sample, defining parameters such as accent, age, gender, and speaking style, and can control delivery through “acting instructions” (e.g., whisper, shout, slow, accent). Octave 2 is deployed on advanced inference hardware in collaboration with SambaNova, which worked with Hume AI to build a dedicated inference stack optimized for the model’s architecture.

Octave supports a range of applications, including narration for video, podcasting, and audiobooks, education content delivered with emotionally varied voices, and expressive digital avatars for games and virtual experiences. Hume AI actively evaluates Octave’s performance, claiming favorable results against competitors like ElevenLabs in internal benchmarks on quality and expressiveness. The company also runs a public evaluation platform known as the Expressive TTS Arena, where users can test models side by side.

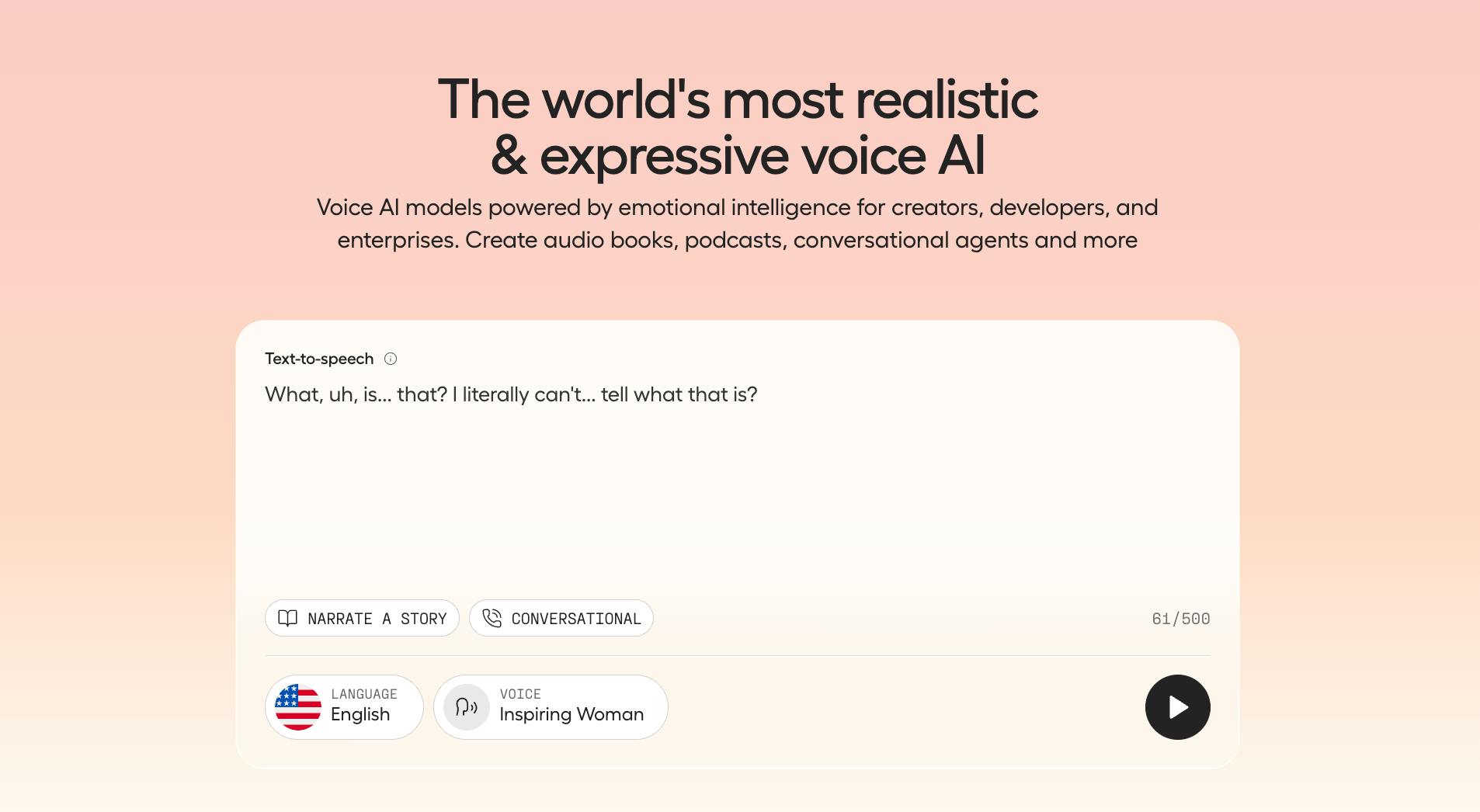

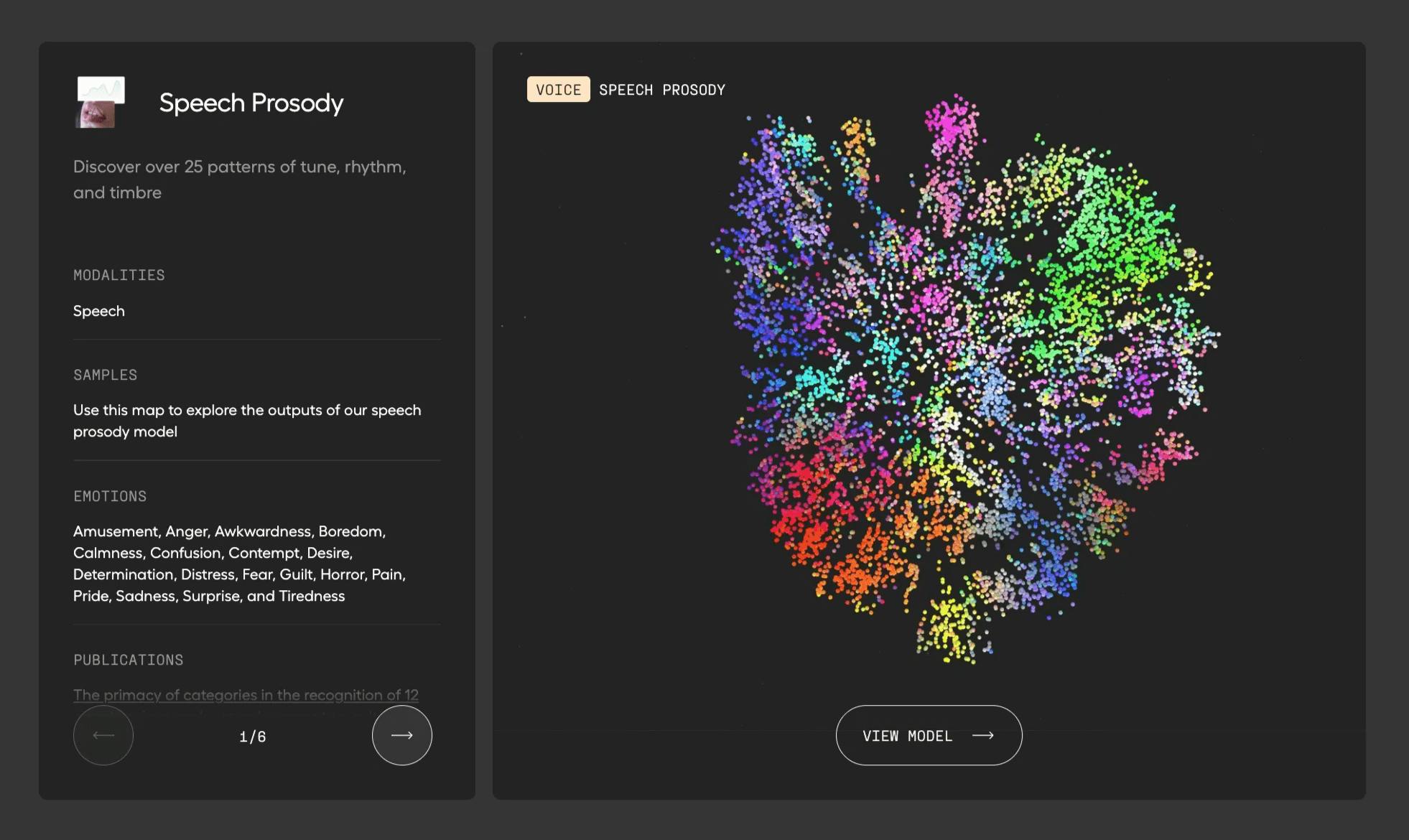

Expression Measurement

Source: Hume AI

Hume AI’s Expression Measurement API is built on its 10+ years of research and advances in semantic space theory. The API provides access to Hume AI’s foundational technology for analyzing human expressive behavior across audio, video, and images. The technology can analyze video or images to detect 48 distinct dimensions of facial movements associated with emotional signals like awe, confusion, joy, or pain. It also measures the non-linguistic elements of speech (e.g., tone, rhythm, timbre) and non-speech vocalizations (e.g., laughter, sighs, gasps, and cries), mapping each across 48 emotional dimensions. For text, the system can assess the emotional tone of transcripts across 53 dimensions, enabling multimodal understanding.

The API supports a range of use cases. The technology can assist in monitoring patient tone and emotion during therapy or check-ins, or detect caller frustration or distress in call center analytics. In research and product teams, users can use the API to analyze sentiment patterns in user interviews, usability studies, and customer experience (CX) feedback.

Market

Customer

Hume AI likely targets organizations and builders who recognize the value of emotional intelligence in improving user experience, engagement, safety, or specific outcomes within their applications. As of January 2026, the company’s customer base was diverse, with usage from sectors like customer support, health and well-being, education, automotive, robotics, and publishing. Hume AI reported over 100K developers and businesses using its APIs as of November 2025.

Its users include customer experience and contact-center platforms seeking to replace cold Interactive Voice Response systems or text bots with empathic, real-time conversational voice agents. Companies such as Vonova have integrated EVI to build emotionally aware support agents, resulting in 40% lower operational costs and 20% higher resolution rates. In health and wellness, companies like hpy, a software service for therapists, integrated EVI and the Expression Measurement API to detect patient mood and increase follow-through on therapeutic tasks by 70% as of March 2025. Automotive and hardware enterprises are also experimenting with emotionally adaptive voice interfaces, including a Fortune 100 automotive company partnering with Hume AI to prototype personality-driven car assistants.

Beyond enterprise deployments, Hume AI’s developer ecosystem includes startups building interactive companions, education bots, and AI-driven entertainment or gaming experiences that require expressive, customizable, or emotion-aware voices.

Market Size

Emotionally intelligent voice AI spans affective computing, voice and multimodal interfaces, and AI customer experience systems. The broader affective computing market, which covers emotion AI for voice, facial, and text-based analysis, was valued at approximately $62.5 billion in 2023. As of 2023, it was projected to reach about $388.3 billion by 2030, growing at a 30.6% CAGR from 2024 to 2030. Growth in affective computing is driven by rising demand for sentiment analysis and emotional intelligence in customer service and marketing, alongside broader adoption across sectors seeking more personalized user experiences. Additional momentum comes from increased deployment of service robots and advances in audio-visual sensor technologies, which enable affective computing to improve human–machine interaction in areas like healthcare, logistics, hospitality, and transportation.

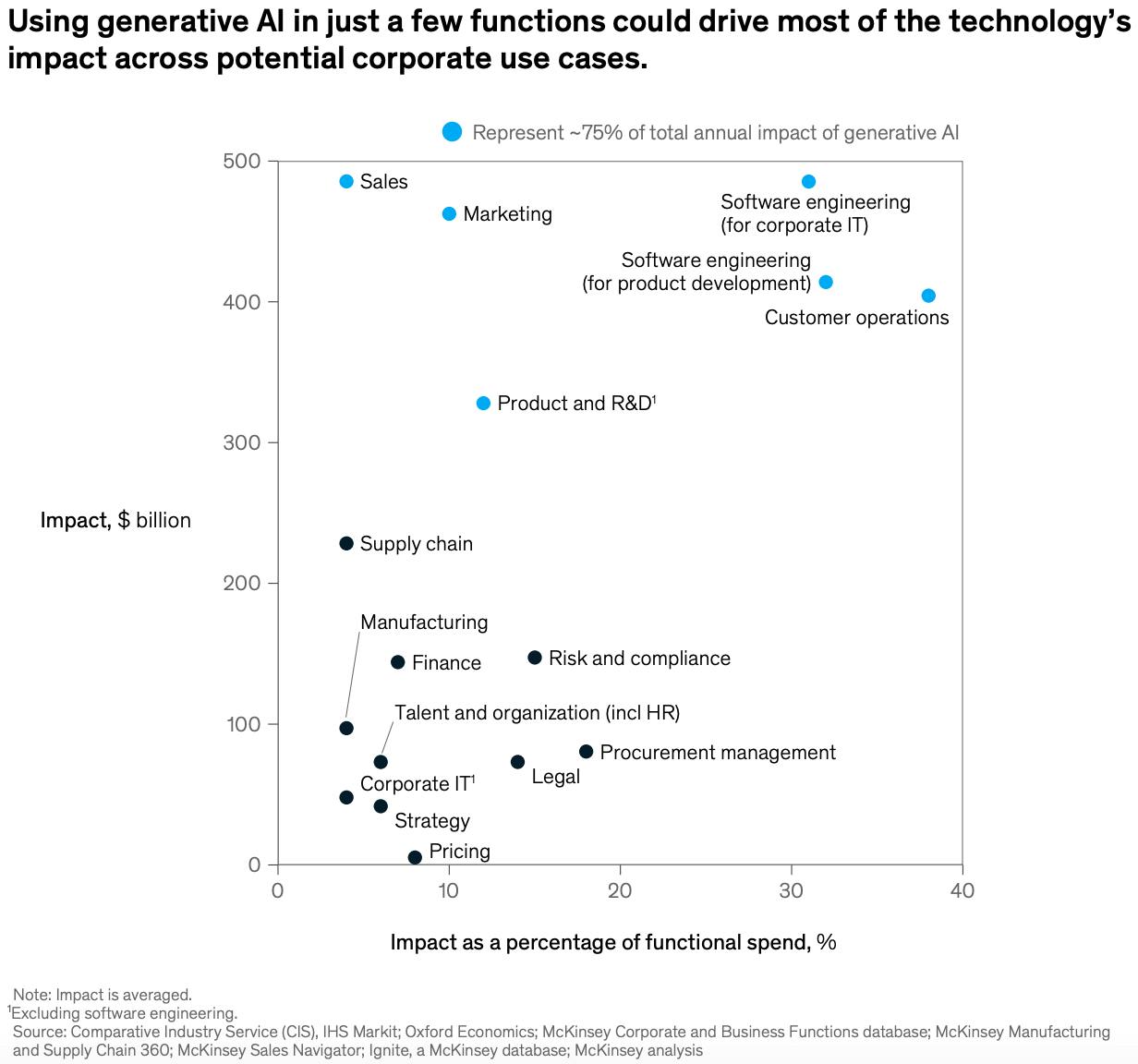

Within this landscape, the voice assistant market had an estimated value of $4.9 billion in 2024 and was projected to grow to $25.0 billion by 2035, as of 2025. Growth is driven by technological advancements and consumer demand. The conversational AI market had a market size of $11.6 billion in 2024 and was projected to reach $41.4 billion by 2030. McKinsey further highlights customer operations as one of the four functions capturing about 75% of gen-AI value, with customer care productivity gains of 30-45%, a key driver of enterprise investment in empathic voice agents and real-time emotion analytics.

Source: McKinsey

The adoption of voice interfaces further expands this opportunity. As of 2023, more than 1 in 4 people regularly used voice search on their mobile devices. By 2024, experts estimated the number of digital voice assistants to be 8.4 billion. As voice interfaces evolve from command-driven to empathic and emotionally adept, emotionally intelligent AI is likely to become a default interaction layer in assistants, customer-service bots, and embedded devices.
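
The growth figures in this section can be sanity-checked with the standard compound annual growth rate formula. The sketch below is illustrative only: the function names are made up for this example, the inputs are the voice assistant and conversational AI figures quoted above, and the period lengths (11 and 6 years) assume that each projection compounds annually from its 2024 base year to its target year, which may not match how the underlying reports count periods.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `rate` once per year for `years` years."""
    return start_value * (1 + rate) ** years


# Figures in billions of US dollars, as quoted in the text above.
# The year counts are an assumption (target year minus base year).
voice_assistant_cagr = cagr(4.9, 25.0, years=2035 - 2024)
conversational_ai_cagr = cagr(11.6, 41.4, years=2030 - 2024)

print(f"Voice assistants:  {voice_assistant_cagr:.1%} per year")
print(f"Conversational AI: {conversational_ai_cagr:.1%} per year")
```

Under these assumptions, the implied growth rates come out at roughly 16% per year for voice assistants and roughly 24% per year for conversational AI. The `project` helper runs the same calculation in the other direction, which is useful for checking whether a quoted base value, end value, and CAGR are mutually consistent.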

Competition

Competitive Landscape

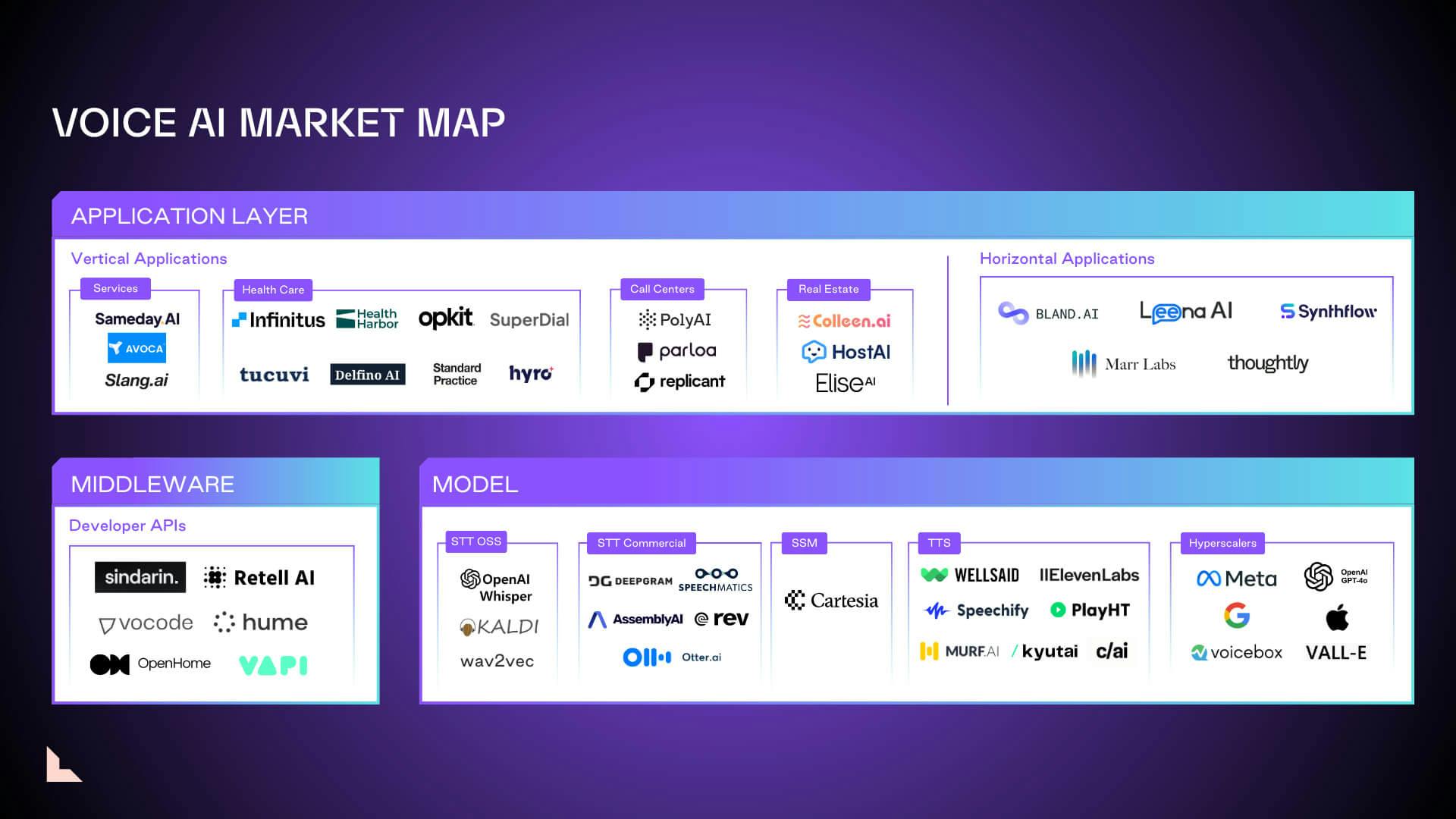

Source: VC Cafe

The emotionally intelligent voice AI market spans affective computing, speech and conversational AI, and voice generation. The market blends elements of both mature and emerging industries, with large cloud incumbents such as Microsoft (Azure AI Speech), Amazon (Amazon Polly), and IBM (Watson) dominating enterprise speech infrastructure, while startups like ElevenLabs and Cartesia compete on expressive voice generation tools.

Within this landscape, Hume AI occupies a distinct niche: it fuses voice synthesis and emotion recognition into a unified product, enabling dynamic, affect-aware interaction rather than focusing solely on content generation.

Competitors

Conversational & Emotional Intelligence

OpenAI: Founded in 2015, OpenAI develops advanced large language models, including ChatGPT and GPT-4o, which feature voice capabilities that allow users to have conversational interactions with natural speech patterns and can understand a user’s tone of voice. In March 2025, OpenAI secured $40 billion led by SoftBank at a $300 billion valuation, the largest private funding round as of January 2026. The company had raised $78 billion in total as of January 2026 from investors led by Andreessen Horowitz, NVIDIA, Sequoia Capital, TPG, and Citi. OpenAI competes directly with Hume AI in the emotionally aware conversational voice AI market, as its newest gpt-4o-mini-tts model, as of January 2026, can detect and respond to emotional tone in real-time conversations.

Affectiva/Smart Eye: Founded in 2009 and acquired in 2021, Affectiva uses computer vision and deep learning to analyze facial expressions and vocal intonations to detect human emotions. Affectiva raised $62.6 million across seven funding rounds led by the National Science Foundation, Kleiner Perkins, Motley Fool Ventures, E14 Fund, and Pegasus Tech Ventures before being acquired by Swedish company Smart Eye for $73.5 million in 2021. As of February 2025, the company analyzed more than 10 million face videos from 90 countries and collected more than 38K hours of data, with technology used by 90% of the world’s largest advertisers and 26% of Fortune Global 500.

Entropik: Founded in 2016, Entropik is an AI-powered integrated market research platform that uses multimodal emotion AI technologies, including facial coding, eye tracking, and voice AI, to measure cognitive and emotional responses of consumers to content and product experiences. Entropik raised $25 million in Series B funding in February 2023, led by Bessemer Venture Partners and SIG Venture Capital. As of March 2023, Entropik held 17 patents in Multi-Modal Emotion AI technologies, and as of January 2026, served more than 150 global brands, including P&G, Nestle, ICICI, and JP Morgan. The company operates primarily in the consumer research and market insights space, helping brands optimize advertising, product design, and customer experiences through emotion measurement. Entropik focuses on retrospective analysis for market research, appealing to enterprises that seek voice-based emotion detection capabilities.

Voice Generation

ElevenLabs: Founded in 2022, ElevenLabs creates AI audio technology that generates realistic synthetic voices used for text-to-speech, voice cloning, and dubbing services for audiobooks, advertisements, and media production. In January 2025, ElevenLabs raised $180 million in Series C led by a16z and ICONIQ Growth at a $3.3 billion valuation. The company has raised a total of $281 million across 6 rounds of funding led by Andreessen Horowitz, NVIDIA, Sequoia Capital, Valor Equity Partners, and Endeavor Catalyst. In just two years, ElevenLabs’ millions of users have generated 1K years of audio content, and the company’s tools have been adopted by employees at over 60% of Fortune 500 companies as of January 2026. The company focuses primarily on voice generation quality, which differentiates it as a content-generation tool for pre-recorded media.

Cartesia: Founded in 2023 by Stanford AI Lab researchers, Cartesia develops real-time generative AI models using state space models (SSMs) rather than traditional transformer architectures, creating emotionally expressive voice AI optimized for speed and edge deployment. In March 2025, Cartesia raised $64 million in Series A funding led by Kleiner Perkins, with $86 million raised in total as of January 2026. The company's flagship product, Sonic, cut latency from 90 ms to 45 ms as of March 2025, making it one of the fastest voice AI solutions available for customer support and digital avatars. The company claims to differentiate through architectural efficiency and low latency.

Murf AI: Founded in 2020, Murf AI is a text-to-speech platform that creates studio-quality voiceovers using AI voices for podcasts, videos, presentations, and commercial content without requiring recording equipment or voice actors. In September 2022, Murf AI raised $10 million in Series A led by Z47 and raised $11.5 million total across two rounds as of January 2026. The company reports synthesizing over 1 million voiceover projects with 22x ARR growth over 18 months and supporting 200+ AI voices across 20+ languages as of June 2025. Murf AI likely targets content creators, marketers, and educators needing high-quality voiceovers for pre-recorded content.

Platform Incumbents

Microsoft: Founded in 1975 with Azure AI Speech launched around 2016, Microsoft provides comprehensive speech-to-text, text-to-speech, speech translation, and speaker recognition services with support for over 140 languages and over 400 neural voices as part of its Azure cloud platform. Microsoft has a market cap of $3.6 trillion as of January 2026. In February 2025, Microsoft announced the release of 14 HD voices that can detect emotional cues in text and autonomously adjust the voice’s tone and style, with improved intonation, rhythm, and emotion.

Amazon Web Services (AWS): Founded in 2006, Amazon Web Services (AWS) operates as a subsidiary of Amazon, which had a market cap of $2.6 trillion as of January 2026. AWS provides text-to-speech services through Amazon Polly, which converts text into lifelike speech with natural-sounding voices for applications and content. In 2024, Amazon Polly launched a new generative engine using technology called BASE (Big Adaptive Streamable TTS with Emergent abilities) that creates humanlike, synthetically generated voices. Polly’s generative voices are described as emotionally engaged, assertive, and highly colloquial, and the service also offers Brand Voice for custom, exclusive voices.

IBM Watson: IBM was founded in 1911 and has a market cap of $282.9 billion as of January 2026. Through its Watson cloud platform, the company provides enterprise AI services, including speech-to-text, text-to-speech, and emotion analysis capabilities for industries like finance, healthcare, and customer service. Watson's Tone Analyzer can gauge emotions by the tone of voice, while Emotion Analysis determines the emotional state of conversation participants. IBM presents Watson as a comprehensive enterprise AI platform where voice and emotion capabilities serve as integrated components.

Business Model

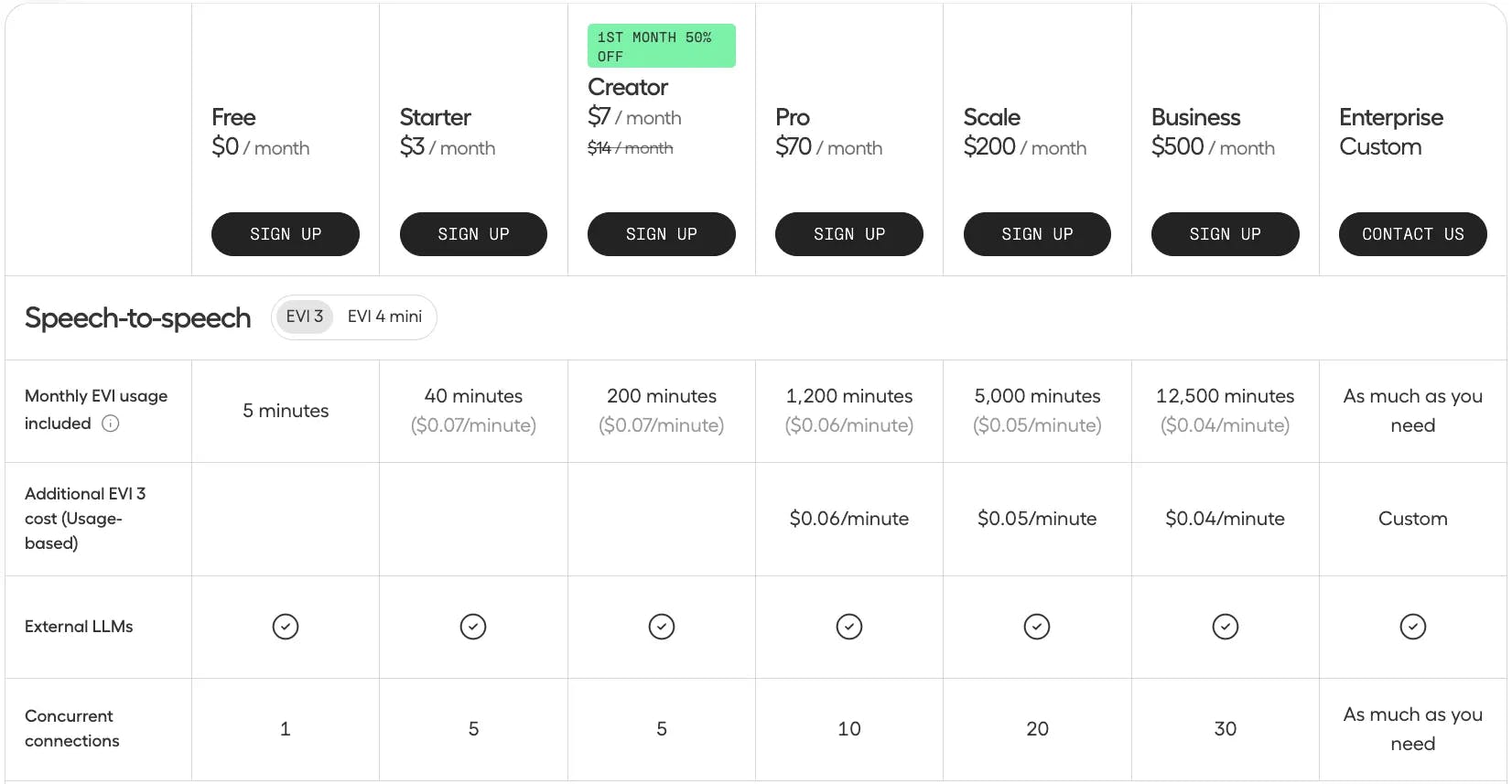

As of January 2026, Hume AI offered a freemium and tiered subscription pricing model combined with additional usage-based charges.

Empathic Voice Interface & Octave

Empathic Voice Interface and Octave both use a tiered monthly subscription model. This starts with a free tier offering limited character usage and custom voice creation. Paid tiers range from $3 to $500 per month and offer progressively higher character allowances, more projects, commercial licenses, and lower per-character overage charges. An Enterprise tier provides custom usage, pricing, and support.

Source: Hume AI

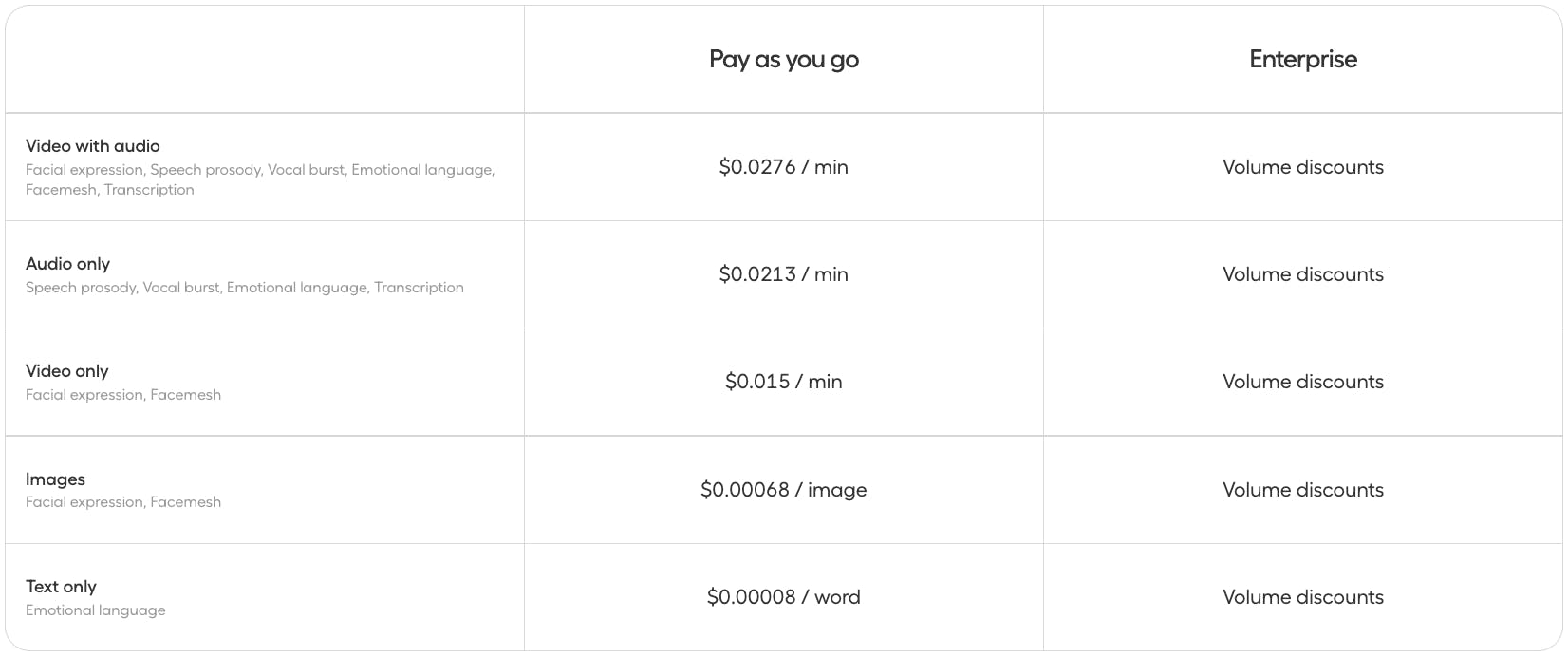

Expression Measurement API

Expression Measurement API features a pay-as-you-go structure based on the modality analyzed and the unit of consumption (per minute for audio/video, per image, per word for text). Rates vary, ranging from $0.00024/word to $0.08/min, depending on the service utilized. An Enterprise plan offers volume discounts across all service types.

Source: Hume AI
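
As a rough illustration of how pay-as-you-go billing of this kind adds up, the sketch below estimates a bill from word and minute counts. It is not Hume AI's billing logic: the function and constant names are hypothetical, only the two endpoint rates quoted above are used, and treating $0.08 as the per-minute audio/video rate is an assumption, since the text gives it only as the top of the rate range. Per-image pricing is left out because no per-image rate is stated.

```python
from decimal import Decimal

# Rates quoted in the text above, in US dollars.
# Assumption: $0.08/min is applied to all audio/video minutes.
TEXT_RATE_PER_WORD = Decimal("0.00024")
MEDIA_RATE_PER_MINUTE = Decimal("0.08")


def estimate_cost(words: int = 0, media_minutes: str = "0") -> Decimal:
    """Estimate a usage-based bill from text words and audio/video minutes.

    `media_minutes` is passed as a string so fractional minutes are parsed
    exactly by Decimal rather than through binary floating point.
    """
    text_cost = TEXT_RATE_PER_WORD * words
    media_cost = MEDIA_RATE_PER_MINUTE * Decimal(media_minutes)
    return text_cost + media_cost


# Hypothetical workload: 50,000 transcript words plus 600 minutes of call audio.
total = estimate_cost(words=50_000, media_minutes="600")
print(f"Estimated bill: ${total:.2f}")
```

For this hypothetical workload, the text portion comes to $12 and the audio portion to $48, for $60 in total. `Decimal` is used instead of floats so that very small per-word rates do not accumulate rounding error across large volumes.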

Traction

Since the release of its beta version of EVI in February 2023, Hume AI has seen significant traction, with the company rolling out its technology to its waitlist of over 2K companies and research organizations. Initial adoption focused on healthcare applications, where Hume AI built research partnerships with labs at Mt. Sinai, Harvard Medical School, and Boston University Medical Center to explore how subtle vocal and facial cues could support improved clinical outcomes. By early 2025, the company reported over 3.5K sign-ups for its API platform, with a growth rate exceeding 200 new sign-ups per week. As of November 2025, Hume AI stated that it worked with more than 100K customers, ranging from startups to larger enterprises.

Since its beta launch, the company has expanded to various verticals and highlighted several key collaborations that validate its technology. In enterprise customer experience, customers such as Vonova and Hamming AI use Hume AI’s models for customer support voice agents and analytics. In health, wellness, and behavioral science, partners include hpy and Northwell Health Holdings for digital therapy and mental health. Automotive and hardware interfaces include a Fortune 100 automotive company, Toyota, and Volkswagen. In publishing and audio production, customers include Spoken, Inception Point, and AudioStack. Developer infrastructure partners such as Vapi, Groq, SambaNova Systems, and Cerebras Systems embed Hume’s models into real-time inference stacks.

Hume AI also maintains strong ties to the scientific community with its continued publication record in high-impact journals (over 40 articles as of January 2026) and collaborations with academic institutions like the University of Zurich.

Valuation

In March 2024, Hume AI raised $50 million in Series B funding led by EQT Ventures, with participation from Union Square Ventures, Nat Friedman & Daniel Gross, Metaplanet, Northwell Holdings, Comcast Ventures, and LG Technology Ventures. The capital was allocated towards scaling Hume AI’s team, accelerating its research, and continuing the development of its EVI product. Previous rounds included a $12.7 million Series A funding round led by Union Square Ventures in January 2023, with additional investors including Comcast Ventures, LG Technology Ventures, Northwell Holdings, and Wisdom Ventures.

Key Opportunities

Cross-Sector Expansion

Emotional intelligence may become a cross-industry capability as AI-mediated interactions become more common. Hume AI has deployed its technology in sectors including healthcare, automotive, education, and wellness. In healthcare, workforce shortages and the expansion of telehealth, which was projected to reach $504.2 billion by 2030 as of December 2023, are increasing interest in tools that support clinicians by capturing and interpreting emotional signals in remote settings. In automotive, intensified competition from electric vehicles is shifting differentiation toward in-cabin experience, leading OEMs to integrate voice and emotion analytics into infotainment, driver monitoring, and safety systems.

The technology may also be applicable to retail and e-commerce assistants and to enterprise HR, training, and interactive learning platforms, where emotion-aware voice systems may influence engagement and information retention. Additional use cases can include gaming and virtual environments, where emotionally responsive avatars and non-playable characters are becoming more common, as well as smart-home and IoT systems, supported by the presence of voice assistants in approximately 72 million US households as of July 2025.

Integration into Agentic AI Ecosystems

The AI industry appears to be moving towards multimodal and agentic systems that can process visual, audio, and speech inputs in real time. Based on publicly described partnerships with Groq, SambaNova, and Cerebras, Hume AI’s technology could function as a voice and emotion component within high-performance inference hardware and model infrastructure. As AI platforms increasingly incorporate natural voice interfaces, the company may be able to scale through API-based distribution and model-layer integrations rather than direct end-user applications. This integration-oriented approach could position its technology within enterprise and cloud deployment workflows, depending on the extent and durability of these partnerships.

Data Moat

Emotionally intelligent AI typically depends on access to large, high-quality multimodal datasets, which can be difficult to assemble due to privacy constraints, labeling complexity, and cross-cultural variation in emotional expression. Hume AI’s founder, Cowen, has contributed to affective science research for more than a decade, including peer-reviewed publications in outlets such as Nature Human Behaviour and Science Advances. If incorporated into commercial systems, datasets derived from this research could support emotion perception and prosody modeling that may be challenging to reproduce using synthetic data alone.

In contrast to voice-generation providers such as ElevenLabs or Play.ht, which primarily emphasize audio quality, Hume AI appears to focus on mapping emotional signals to speech and on cross-cultural emotion labeling. Its body of peer-reviewed research may provide a degree of scientific grounding for emotion-aware models, although the extent to which this translates into sustained technical differentiation depends on ongoing data access, model performance, and competitive advances in the field.

Leading Ethical and Responsible Emotion AI

As regulators increase scrutiny of biometric and emotion-related data under frameworks such as the EU AI Act, FTC guidance, and proposed GDPR updates, enterprises may place greater emphasis on vendor transparency and data governance practices. Hume AI maintains an ethics committee that has articulated six principles for evaluating empathic AI applications: beneficence, emotional primacy, scientific legitimacy, inclusivity, transparency, and consent. The company states that its models are trained on consensual, anonymized datasets collected under academic research ethics standards, which, if accurately implemented, would distinguish its data practices from approaches relying on scraped or non-transparent data sources.

If regulatory enforcement intensifies, such practices could be relevant for enterprise adoption decisions, particularly in regulated sectors such as healthcare and education. More broadly, vendors that can demonstrate responsible data collection, governance, and bias mitigation may be better positioned to meet enterprise compliance requirements as emotion-aware AI systems become more widely deployed.

Key Risks

Competitive and Platform Dependency Risk

The voice and emotion AI market is consolidating around large multimodal incumbents such as OpenAI, Anthropic, Google, and Microsoft, all of which are rapidly integrating native affective and real-time voice capabilities into their models. OpenAI’s GPT-4o, for example, introduced real-time, emotionally expressive voice responses in May 2024, raising the competitive baseline for empathetic conversational AI.

In this environment, Hume AI depends for distribution on infrastructure partnerships with Groq, SambaNova, and Cerebras, as well as on integrations with major AI model ecosystems. While these give reach, they also create dependency risk: if partners build competing in-house voice or emotion models, Hume AI could lose its embedded position. With platform differentiation narrowing, Hume AI’s long-term defensibility depends on sustaining a scientific edge that cannot be easily replicated or commoditized by scale players.

Commercial Scalability

Despite its scientific credibility, Hume AI’s commercial model introduces several execution risks. The company relies on API-based monetization and tiered subscriptions oriented toward developers, individuals, and small businesses, which can result in uneven and usage-dependent revenue. In API-driven products, developers often experiment with integrations without committing to sustained usage, which can limit revenue predictability. Industry benchmarks suggest that freemium SaaS and API products typically convert only a small share of users to paid plans, often cited in the 1–10% range, which may constrain growth absent strong retention and expansion dynamics.

More broadly, emotion AI remains an emerging product category, and some enterprises may treat it as a nonessential or experimental capability rather than a core system of record, leading to longer evaluation cycles and modest initial spend. At the same time, larger platforms such as OpenAI and ElevenLabs benefit from more established developer ecosystems and distribution channels. In this environment, Hume AI’s ability to scale adoption may depend on demonstrating clear, repeatable value from emotion-aware features and evolving its monetization and distribution strategy beyond early-stage developer use cases.

Data and Regulatory Uncertainty

Even with an established ethical framework, the use of emotional analytics carries risk if outputs are misinterpreted or applied inappropriately, particularly in regulated contexts such as healthcare, education, or employment. Emotion recognition performance can vary across cultures, genders, and languages, and uneven model behavior could expose enterprises to bias or discrimination concerns, potentially affecting trust in vendors that supply such systems. In addition, emotion and biometric data are subject to tightening regulatory oversight, which may increase compliance requirements for both vendors and customers. These constraints could raise implementation costs, extend procurement timelines, and limit adoption in highly regulated industries, depending on how regulatory standards evolve and are enforced.

Summary

Founded in 2021, Hume AI builds emotionally intelligent voice models that combine affective science with multimodal AI to interpret and generate emotionally nuanced speech. It offers three core products: a speech-to-speech product, a text-to-speech product, and an API for analyzing human expressive behavior across various media types. In 2024, it raised $50 million in Series B funding for use towards team expansion, AI research, and development of its speech-to-speech offering. Overall, the company’s outlook reflects a balance between technical capability and execution risk, shaped by market adoption dynamics, competitive pressures, and evolving regulatory expectations around emotion-aware AI systems.