Thesis

The rapid acceleration of AI is the result of a powerful feedback loop where advances in algorithmic breakthroughs, improvements in hardware infrastructure, increased data availability, and funding reinforce one another. New model architectures and higher-performing GPUs don’t just enhance model training performance; they attract more investment, driving more technological R&D and fueling further breakthroughs. This virtuous cycle is the engine behind the pace of progress in AI and the advent of increasingly capable foundation models.

Symbolic AI

Before deep learning, progress in AI was driven largely by symbolic systems, or programs that manipulated explicit symbols and rules rather than learned representations. Early work in logic, search, and graph-based planning, along with expert systems in domains like medicine and chemistry, showed that carefully engineered rules and knowledge graphs could solve constrained problems remarkably well. These successes helped fuel the first big wave of optimism around AI in the 1960s–80s, even as perceptrons and early neural networks fell out of favor.

Yet symbolic AI eventually hit a ceiling. Its symbols were defined only in terms of other symbols, not in terms of perception or action in the real world. This “symbol grounding” problem meant that systems remained brittle, so slight changes in wording or environment could cause catastrophic failures because the models had no autonomous way to connect their internal representations to reality.

As tasks grew more complex, the cost of hand-coding rules and ontologies grew, progress slowed, and AI entered its first “winter.” Much of the later interest in learned world models can be read as a response to this unresolved tension. It is an attempt to learn structured representations of the world from data rather than defining them by hand. Deep learning, and later transformer-based architectures, let engineers bypass hand-engineering symbolic structures and instead learn high-dimensional representations directly from data at scale.

Algorithmic Advancements

The rapid advancement of artificial intelligence in recent years was made possible by several key algorithmic breakthroughs. These developments shaped how AI systems were trained and scaled, leading to dramatic improvements in their capabilities and costs.

Beginning in 2017, OpenAI demonstrated that reinforcement learning systems could scale far beyond prior expectations through projects like OpenAI Five, which trained using Proximal Policy Optimization across 256 GPUs and 128K CPU cores and learned the equivalent of 180 years of Dota 2 self-play per day, entirely from scratch and without human data. This work showed that long-horizon, partially observed, multi-agent environments could be mastered by existing algorithms when run at massive scale, producing coordinated strategies that surprised professional players.

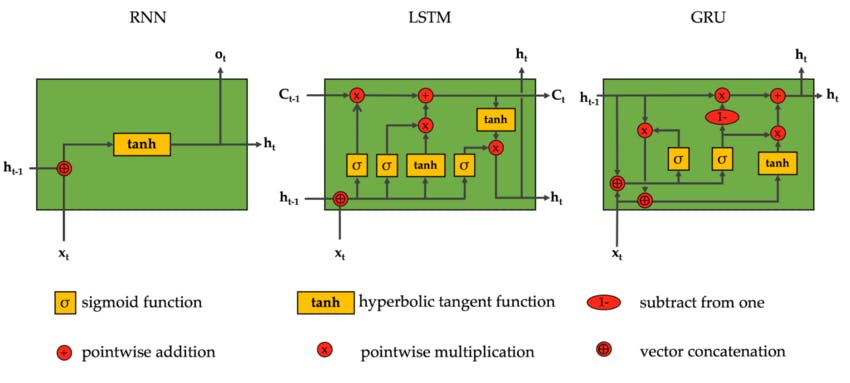

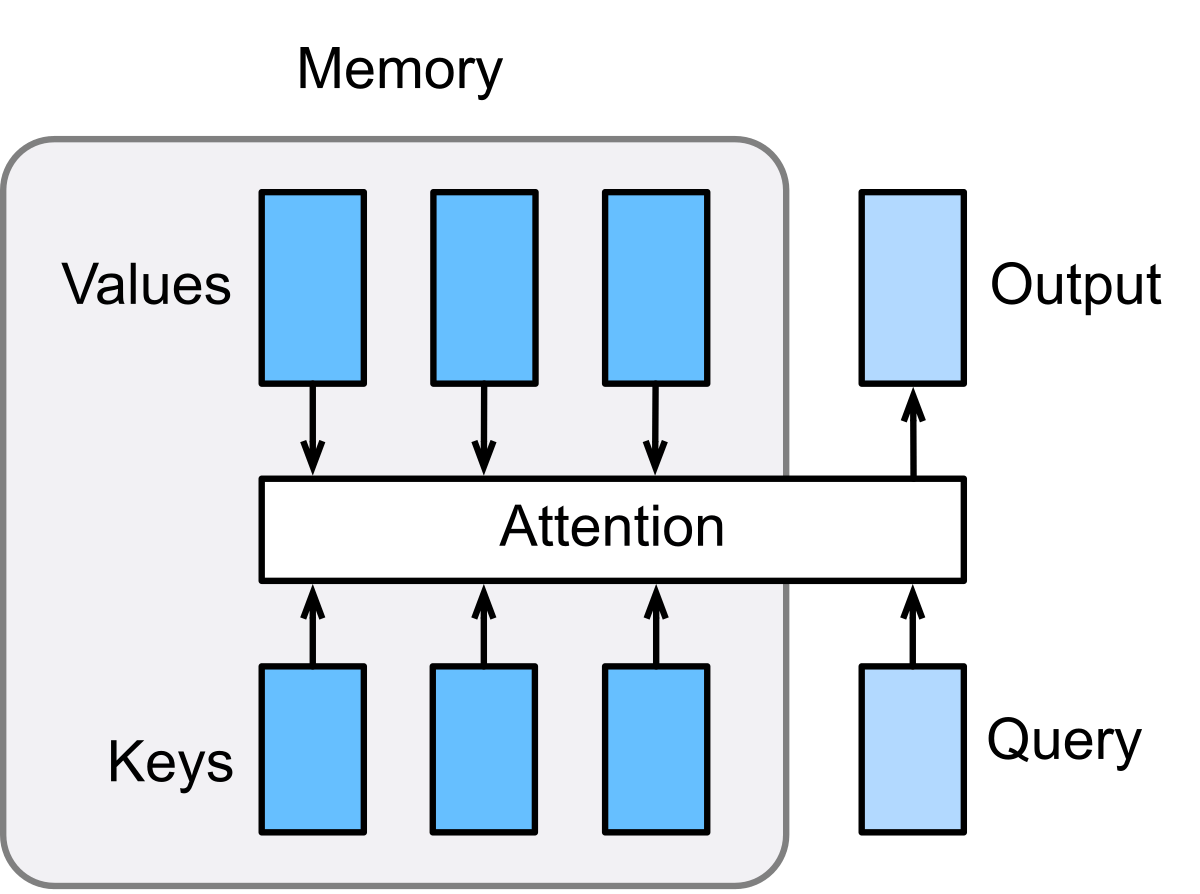

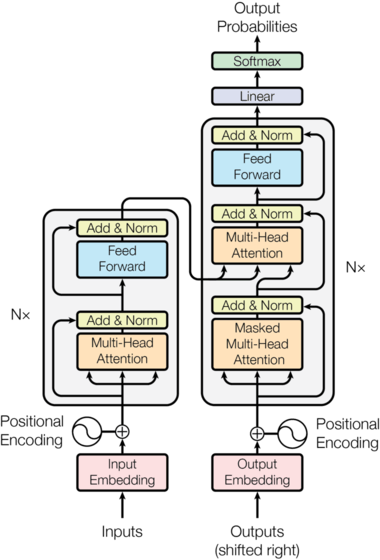

The invention of transformer architectures was one of the most significant breakthroughs in modern AI. In 2017, a team of Google researchers, led by Ashish Vaswani and Noam Shazeer, released the paper Attention Is All You Need, introducing the self-attention mechanism as a replacement for recurrent and convolutional sequence models.

The core innovation was the ability of self-attention to let a model directly relate every token in a sequence to every other token, enabling it to capture long-range dependencies and globally relevant context without relying on step-by-step recurrence. This architecture avoided the local representation traps that RNNs and CNNs often fall into, where predictions become overly influenced by recent tokens or local patterns, and allowed the model to flexibly prioritize information across the entire sequence. By removing recurrence, transformers also made it possible to train on sequences in parallel, a practical benefit that later proved essential for scaling.

This ability transformed language processing because it sped up the processing time for a sequence of text and enabled a deeper understanding of context independent of the distance between words in a phrase. In June 2018, OpenAI used this architecture to build its first Generative Pre-trained Transformer (GPT) model and demonstrated how transformers could be scaled and adapted for generation.

Before GPT-1, natural language models mostly relied on supervised learning from large corpora of manually labeled data, making it prohibitively expensive and time-consuming to train large models. However, this all changed with the introduction of scaling laws. Scaling laws for language models, which describe how increases in model size, dataset size, and the computation used for model training can predictably lead to language model performance improvements, were first proposed in 2020 by an OpenAI team led by researcher Jared Kaplan.

Although previous findings from Google’s 2019 BERT paper and OpenAI’s 2019 GPT-2 paper hinted at the existence of scaling behavior, Kaplan’s 2020 paper was the first to systematically study and propose the existence of such a phenomenon for language models. The discovery of scaling laws provided evidence and formal descriptions of the relationship between LLM performance and the quantity of compute used for model training, which helped guide the development of future models like GPT-3, GPT-4, and GPT-4o.

Techniques such as reinforcement learning from human feedback (RLHF), transfer learning, and mixture of experts (MoE) contributed to the emergence and optimization of foundation models, which are machine learning models trained on vast datasets that can be applied across a broad set of use cases.

To explain each of these techniques in brief, RLHF incorporates human feedback as reward signals to train AI models to align better with human preferences. Transfer learning describes the process of pre-training a model on a large dataset for a general task before fine-tuning the model for a specific task using a smaller dataset to achieve better performance with limited data and leverage knowledge from similar domains. MoE is an architecture where a neural network is segmented into individual components specializing in different aspects of a task. A gating network decides which expert should handle inputs into the model and combines the outputs of the selected experts.

Software and Hardware Infrastructure

Algorithmic developments revolutionized AI model architectures and training approaches, but these innovations could not have been realized without concurrent progress in both software and hardware infrastructure. OpenAI’s ability to scale model training in particular has been shaped by investments in software infrastructure. In 2018, the company pushed Kubernetes beyond typical industry limits, scaling clusters to 2.5K nodes to support large training jobs and reinforcement-learning experiments. This work required fixing systems-level bottlenecks across storage, networking, and scheduling that only emerged at large scale.

OpenAI also built internal tooling, such as Rapid, a distributed RL training system that supported projects like OpenAI Five, which relied on hundreds of GPUs and tens of thousands of CPU cores to coordinate self-play at very high throughput. These early investments created a foundation where researchers could reliably run large-scale experiments without rewriting code or fighting infrastructure constraints.

By 2021, OpenAI had expanded these capabilities to 7.5K-node Kubernetes clusters, allowing a single training run to span hundreds of GPUs and supporting workloads for GPT-3, CLIP, DALL·E, and scaling-laws research. To achieve this, OpenAI reworked its networking stack, improved cluster-wide reliability, and built new systems for resource management, scheduling, and monitoring. The result was an infrastructure layer that let researchers scale experiments quickly and consistently, making software orchestration a central enabler of frontier-level model development.

The computational demands of training increasingly complex models on larger datasets required specialized hardware solutions to make large-scale AI development both technically and economically feasible. Advancements in computer chip architectures, such as Nvidia's GPUs, Google’s TPUs, and Intel’s IPUs, enabled modern AI development by providing the parallel processing capabilities required for training large machine learning neural networks. Originally intended to assist in the rendering of 3D graphics and video, these chips became more flexible and programmable over time, supporting the computational demands of machine learning training.

For instance, in November 2023, Nvidia announced the Nvidia H200 Tensor Core GPU to “handle massive amounts of data for generative AI and high-performance computing workloads.” The H200 GPU was capable of delivering memory at nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the Nvidia A100, which was released three years prior in May 2020.

Similarly, in December 2024, Google released its Trillium Tensor Processing Unit (TPU). Compared to the previous generation of Google’s Cloud TPU v5p, which was released one year prior in December 2023, Trillium TPUs deliver 4x faster training for dense LLMs like Llama-2-70b and up to 2.5x better performance per dollar. Nvidia's development and deployment of networking technologies, most notably through NVLink and InfiniBand, also removed critical bottlenecks in orchestrating multi-GPU training systems.

Moreover, advancements in networking resolved bottlenecks in orchestrating multi-GPU training systems. Early deep learning models could be trained on a single machine with one or a few GPUs, but as model sizes and datasets expanded, researchers shifted towards distributing training workloads across multiple servers with multiple GPUs. This shift exposed networking as a critical component of performance, as fast interconnects were necessary to handle frequent gradient exchanges and parameter updates without slowing down training.

In addition, key technologies like Nvidia NVLink and the adoption of InfiniBand evolved networking technologies in AI from basic point-to-point connections to sophisticated high-bandwidth network fabrics. Introduced in March 2014, Nvidia NVLink is a high-bandwidth, low-latency interconnect to facilitate data exchange in multi-GPU systems. Unlike its predecessor, PCI Express (PCIe), devices using NVLinks can use mesh networking to communicate instead of a central hub, addressing data exchange demands that traditional PCIe buses could not keep up with.

For instance, in 2024, the most advanced PCIe standard offers 128 GB/s of bandwidth, while a system consisting of eight H100 GPUs connected to four NVLink switch chips can achieve GPU interconnect speeds of up to 900 GB/s. Furthermore, each new NVLink generation increased intranode bandwidth. NVLink 3.0 provided approximately 600 GB/s of bidirectional GPU bandwidth, while the latest NVLink 4.0, introduced in March 2022, provided up to 900 GB/s bandwidth per GPU.

While NVLink improved communication between GPUs on a server, the development of Infiniband improved communication between servers, allowing for low latency and efficient data transfer. Infiniband is a networking technology that enables direct communication between servers and storage systems, bypassing the overhead of CPU systems to deliver high-bandwidth and low-latency data transfer. In March 2019, Nvidia acquired Mellanox, the last independent supplier of InfiniBand products. In November 2020, Nvidia introduced its Mellanox NDR 400 InfiniBand platform, which offered communication speeds of 400 Gb/s. In March 2024, Nvidia announced the Nvidia Quantum-X800 Infiniband platform, capable of end-to-end 800 Gb/s throughput.

In 2025, the largest AI models, with hundreds of billions or trillions of parameters, are all trained on AI supercomputers, which consist of clusters of servers where networking between GPUs is often the bottleneck to scaling. Networking technologies like NVLink and Infiniband allowed for near-linear scaling of training jobs to hundreds of servers by keeping the communication overhead between servers and GPUs low. Consequently, advancements in networking and improvements in throughput and latency were instrumental in advancing the scale and efficacy of training AI models.

Data Availability and Management

Another tailwind driving the progress of AI has been the increasing availability of data. The internet’s vast repository of text, video, and image data has proven to be an invaluable resource for training large language models.

Importantly, this data has become legible to AI. Specialized datasets like Common Crawl, RefinedWeb, and The Pile enabled models to easily access diverse data and develop broad knowledge across different domains. Web scraping technologies and data processing pipelines also evolved to more efficiently collect, clean, and prepare training data at large scales. 60% of OpenAI’s GPT-3 pre-training dataset came from a filtered version of Common Crawl, with the rest coming from datasets such as WebText2, Books1, Books2, and Wikipedia.

Not only is the repository of data that LLMs have access to vast, but it is also quickly growing. In 2010, approximately 2 zettabytes of data were generated globally in total. By 2024, this figure was estimated to be around 147 zettabytes, representing a 74-fold increase in 14 years. In 2024, more than 400 million terabytes of data were created daily. In 2024, an estimated 90% of the world’s data was generated between 2022 and 2024. This illustrates how dramatically data creation is accelerating. The total amount of data created, captured, and consumed globally is projected to grow from 147 zettabytes in 2024 to 394 zettabytes in 2028, more than doubling the total amount of global data within five years.

Financial Investment

Funding is another component of the flywheel driving AI progress. Financial investment in AI globally has increased exponentially between 2014 and 2024, fueling accelerated growth in model capability as well as the aforementioned growth factors. In 2024, US investments in AI totaled $67 billion, and between 2013 and 2022, global investment in AI reached nearly $934 billion. Funding for AI startups grew from $670 million in 2011 to $36 billion in 2020.

Moreover, cloud computing providers and technology hyperscalers, such as Google, Amazon, and Microsoft, have made massive investments in AI infrastructure, creating computational resources that benefit the entire AI ecosystem - ranging from data centers to software tools, frameworks, and developer tools that make AI development more efficient.

For example, Google announced that it plans to invest $75 billion in cloud servers and data centers in 2025 alone. Amazon, meanwhile, plans to spend over $100 billion in capital expenditures in 2025, with the vast majority going towards AI infrastructure for Amazon Web Services. In January 2025, Microsoft announced a partnership with OpenAI to invest over $100 billion to build Stargate, a new AI supercomputer. A 2022 report conducted by McKinsey revealed that over 50% of respondents planned to commit at least 5% of their digital budgets to AI.

OpenAI Company Overview

Founded in December 2015, OpenAI is an artificial intelligence research and development company seeking to advance artificial general intelligence in a way that is “safe and benefits all of humanity”. OpenAI pursues cutting-edge research in AI, with a particular focus on deep learning and foundational large language models (LLMs). It builds and deploys AI products and services for both consumer and enterprise use, collaborating with various organizations, such as Microsoft and PwC, to bring AI capabilities to a broader market.

OpenAI’s work can be broadly divided into three categories: research, product development and deployment, and policy and safety.

Research: OpenAI’s research teams conduct fundamental and applied research in AI across fields like robotics, compute scaling, and simulated environments, with a focus on advancing AI system capabilities while mitigating harmful risks.

Product: OpenAI’s product development and deployment offerings are responsible for creating and implementing AI products and APIs for developers and companies to build AI-enabled applications.

Safety: OpenAI’s policy and safety teams conduct safety research, develop benchmarks to evaluate ethical AI use, and advocate for responsible AI development.

OpenAI’s product offerings can be categorized into four main areas: 1) Large Language Models, 2) Code Generation and Understanding, 3) Image and Video Generation, and 4) APIs and Services. LLMs include the GPT family of models (GPT-4o, GPT-5, etc.) as well as ChatGPT (Chatbot, API, Enterprise). Code Generation and Understanding refers to OpenAI Codex, a specialized model derived from the GPT family trained on code from public sources. OpenAI Codex is the model that powers GitHub Copilot to provide code suggestions and completions. Products under Image and Video Generation include DALL-E and Sora.

OpenAI also provides several APIs and services, such as its API platform that enables developers to access GPT models and fine-tuning capabilities through a suite of endpoints, embedding libraries to create numerical representations of text, and Playground, a web-based interface where users can experiment with different OpenAI models and tweak parameters.

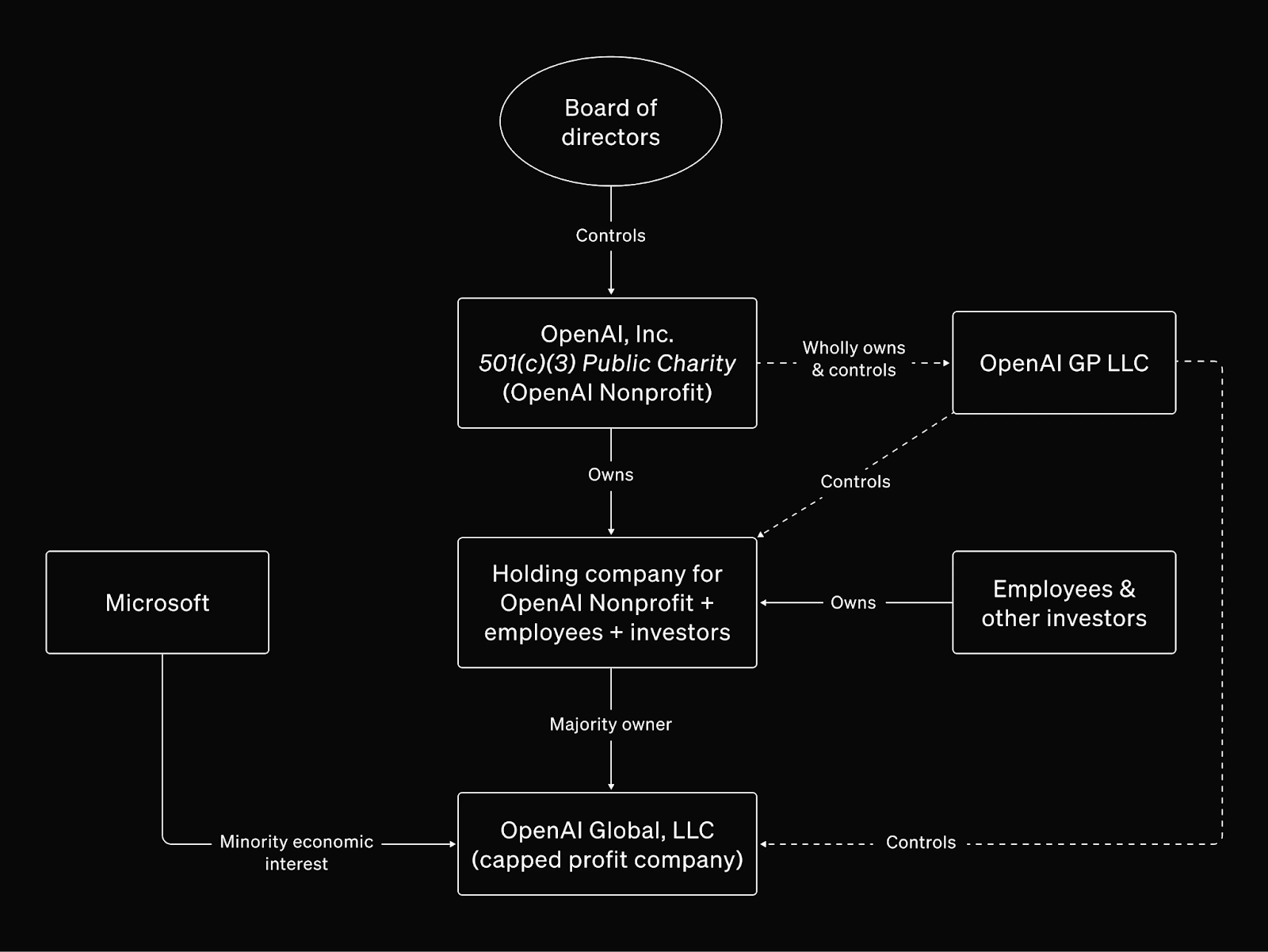

While initially established as a nonprofit, OpenAI introduced a “capped-profit” for-profit subsidiary in 2019 under the oversight of the nonprofit parent to balance the capital intensity of building foundation models with its overarching mission of ensuring AI development benefits humanity. In 2024–2025, the organization began transitioning away from that capped-profit structure, and in October 2025, OpenAI announced a new governance model consisting of the OpenAI Foundation (the nonprofit parent) and OpenAI Group PBC (a public benefit corporation). As a PBC, OpenAI Group is legally required to advance its stated mission and consider the broader interests of all stakeholders, not solely shareholders.

The OpenAI Foundation continues to control OpenAI Group and now holds conventional equity, with all stockholders participating proportionally in any increase in value, with the goal of aligning long-term incentives across the organization. This updated structure is designed to preserve nonprofit mission control while enabling the capital formation necessary for frontier-scale model development. OpenAI’s organizational design appears intended to reflect a dual aim: achieving large-scale global impact through commercially successful products, and maintaining its mission at the core of its governance and operations.

Founding Story

Post-War Beginnings

“Can machines think?”

This question, contained in the first line of Alan Turing’s seminal 1950 paper, “Computing Machinery and Intelligence”, captured a radical idea that was starting to take shape in the minds of mathematicians and computer scientists alike in the mid-20th century.

In the aftermath of World War II, a small circle of mathematicians, logicians, and codebreakers began contemplating what computers might ultimately become. During the war, Turing worked on cryptanalysis to crack the German Enigma cipher and developed the Bombe machine, an early computational device that automated decryption, proving that machines could perform tasks requiring complex reasoning. In 1948, three years after WWII, Turing published his Intelligent Machinery report, regarded as the first Artificial Intelligence manifesto, proposing that machines might eventually imitate human learning and problem-solving.

At the same time, other prominent thinkers contributed theoretical elements that would go on to lay the groundwork for intelligence. In 1944, John von Neumann introduced his concept of game theory, and in 1948, Norbert Wiener described cybernetics, the science of communication between machines and living things. Computer scientists Claude Shannon and John McCarthy developed foundational research in electronic circuits, Boolean logic, and information processing that would provide the technical foundations for early breakthroughs in artificial intelligence.

In 1950, Turing proposed the Turing Test, a criterion for machine intelligence based on a human’s ability to distinguish outputs from the machine and another human. This test sparked significant public interest in artificial intelligence and public debate about whether machines could have the capacity to think.

In the 1950s, a combination of WWII computing breakthroughs, such as ENIAC and EDVAC, transitioning from military projects to research and business applications, Wiener’s cybernetics movement, growing interest in Shannon’s work in information theory, academic discussion about artificial intelligence sparked by Turing’s ideas, and post-war government funding for scientific research culminated in the 1956 Dartmouth Workshop.

This was an approximately eight-week-long event that gathered eleven mathematicians and scientists for an extended brainstorm on artificial intelligence. Organized by John McCarthy, Claude Shannon, Marvin Minsky, and Nathaniel Rochester, the Dartmouth Workshop coined the term “Artificial Intelligence” and was considered to be the founding event of artificial intelligence as a field.

Early demonstrations of AI soon followed. In the early 1950s, the first working AI programs ran on room-sized computers, capable of playing checkers and chess, indicating that machines, indeed, could execute logical strategies.

In 1958, Frank Rosenblatt invented the perceptron, the first conceptual demonstration of an artificial neural network capable of learning through repeated experimentation. Inspired by the structure of human neurons, Rosenblatt created a mathematical device that could recognize basic patterns through repeated feedback. In 1959, IBM’s Arthur Samuel coined the term “Machine Learning” in a paper describing how computers could beat humans at checkers.

Building on the foundations set in the 1950s, AI research blossomed in the 1960s with introductions in practical applications that seemed to validate bold predictions about AI’s imminent ability to surpass human intellect.

In 1965, the creation of DENDRAL at Stanford marked the introduction of the first expert system, designed to infer molecular structures from chemical data to complete work that previously required human expertise. In 1967, Joseph Weizenbaum created ELIZA, an early natural language processing program that could participate in simple conversations by finding patterns in dialogue. ELIZA rephrased users' inputs as questions, but many people became emotionally convinced that it could understand them.

Several advances in problem-solving algorithms and language understanding also occurred in the 1960s. Researchers developed programs that could solve algebra word problems or manipulate blocks with natural language. AI labs were created at universities across the United States, like MIT, Stanford, and Carnegie Mellon. In 1969, the first International Joint Conference on AI convened, marking the growth of a scientific community around AI.

The First AI Winter

While these advancements sparked great optimism about AI’s potential, AI research began to hit a wall at the turn of the 1960s. Early AI efforts were still mostly rule-based and symbolic, reflecting an assumption that human reasoning could be encoded explicitly. In 1969, Marvin Minsky and Seymour Papert published Perceptrons, a book analyzing neural networks and proving the limitations of single-layer perceptron networks, leading many to abandon neural network research for the next decade, solidifying the AI paradigm around symbolic logic.

In the 1970s, the optimism surrounding AI’s potential clashed with reality, and the field experienced its first “winter” as funding dried up and research stalled. The symbolic and expert systems developed in the 1950s and 1960s struggled with the complexities of the real world and failed to scale beyond demos and narrow puzzles. In 1974, British Mathematician Sir James Lighthill published the Lighthill report, claiming that AI had overpromised and underdelivered in areas like language translation and robotics. This report became the basis for the British government’s decision to cut AI funding for AI research in most British universities during that period.

Similar skepticism for AI arose across the world. In 1969, the US Defense Advanced Research Projects Agency (DARPA), previously a major AI sponsor, passed the Mansfield Amendment, which required the organization to find mission-oriented projects with measurable milestones instead of “basic undirected research.” Researchers were required to show that their work would produce useful military technology for funding. Stagnation in AI research and the Lighthill report suggested that most AI research was unlikely to produce useful technology, so DARPA’s funds were redirected towards other projects like autonomous tanks and battle management systems.

Expert Systems

The AI Winter forced scientists and researchers to confront hard problems and redefine their approaches. In the 1980s, researchers focused on “expert systems” instead of trying to build general intelligence. By embracing rule-based and symbolic logic systems, scientists encoded facts and rules to achieve usefulness in narrow domains. For instance, the XCON system, built in 1978 at Digital Equipment Corp., helped configure computer orders, and systems like MYCIN, built in 1972, could suggest a diagnosis from symptoms by identifying the bacteria causing illnesses.

At this moment, AI finally seemed to be delivering practical value for businesses. By the mid-1980s, nearly two-thirds of Fortune 500 companies were using expert systems in daily operations for use cases like evaluating loans or monitoring industrial equipment. Nevertheless, by the end of the decade, expert systems had largely fallen out of vogue due to a combination of their limitations to narrow use cases, and because of a failure to meet overhyped expectations.

The Machine Learning Renewal

After the rise and fall of expert systems, AI researchers began revisiting neural networks. In 1986, Geoffrey Hinton, David Rumelhart, and Ronald Williams rediscovered the backpropagation learning algorithm, which had been described by Paul Werbos a decade earlier. This breakthrough enabled them to train multi-layer neural networks efficiently, overcoming the limitations of a single-layer perceptron, and led to a small revival in neural network research in the back half of the decade.

In 1989, Yann LeCun utilized backpropagation to train a series of convolutional neural networks (CNNs), coined as LeNet, and demonstrated that they could recognize handwritten digits. This new model architecture illustrated that machine learning algorithms could solve practical computer vision problems and laid the foundations for modern deep learning later on. LeNet showed that neural networks weren’t just theoretical concepts but could solve genuine real-world problems; however, LeCun’s solution still wasn’t practically viable because limited computing power constrained network size, and large labeled datasets were scarce.

In the 1990s, AI slowly emerged from its winter as the field pivoted towards machine learning algorithms that could iteratively improve through experience. Instead of relying on human-coded rules and symbols, researchers increasingly relied on statistics to create AI systems that could learn from data.

New algorithms such as decision trees, support vector methods, and ensemble methods were utilized in AI systems for tasks like pattern recognition. Additionally, the increased availability of online datasets and the propagation of powerful personal computers made training models more accessible.

The watershed moment of this era came in 1997 when IBM’s Deep Blue defeated world chess champion Garry Kasparov. Deep Blue was a supercomputer that used brute-force search and programmed heuristics to evaluate 200 million chess moves per second. Deep Blue’s victory demonstrated that machines could surpass human intellect in certain domains and amplified public and academic interest in machine learning.

Another key development that year was the invention of Long Short-Term Memory (LSTM) by Hochreiter and Schmidhuber. LSTMs are a specialized type of recurrent neural network architecture designed to handle the difficulties of learning from sequential data, like paragraphs. The key innovation of LSTMs was that their memory cell structures allowed them to remember information for long periods and update their memory with new, relevant information to enable sequential data processing. This technology would go on to lead breakthroughs in Natural language processing, speech recognition, and machine translation.

Scale: Big Data and Deep Learning

As the internet expanded in the early 2000s, technology companies like Google and Amazon began applying machine learning to unprecedented quantities of data. Concurrently, Moore’s Law yielded faster CPUs and the rise of GPUs, providing the computing power needed to train more complex AI models. The combination of increasing amounts of data from the internet and sensors with better computing enabled deep neural networks to flourish for the first time.

The architecture that LeCun proposed with LeNet was finally feasible to exploit on a large scale. In 2006, Hinton demonstrated that multi-layer neural networks could be efficiently pre-trained, which suggested that AI could learn representations on its own. In 2009, AI researcher Fei-Fei Li created ImageNet, a labeled dataset of millions of images in more than 1K categories, which became an annual contest for AI researchers and a catalyst for computer vision advances.

In 2012, the AI field experienced a seismic shift with the invention of AlexNet by Alex Krizhevsky, Ilya Sutskever (who later co-founded OpenAI), and Geoffrey Hinton. Sutskever was a PhD student working in Hinton’s lab at the time. He built a deep convolutional neural network and won the ImageNet competition by a large margin, reducing error rates by over ten percentage points. Compared to previous models, AlexNet incorporated a deeper architecture with more layers than previous CNNs, used Rectified Linear Units (ReLu) as its activation function to address the vanishing gradient problem, and trained on two Nvidia GTX 580 GPUs to allow for training on a much larger dataset and implement dropout during training to improve generalizability.

AlexNet’s dramatic performance improvements in the 2012 ImageNet competition were the breakthrough moment that launched the modern deep learning era because it demonstrated that sufficient data, computing power, and architectural innovations could drive neural networks to outperform traditional approaches by significant margins. Almost overnight, researchers abandoned decades of work on hand-selecting features in machine learning models and rushed to implement deep neural networks that could learn generalized knowledge representations over large corpora of data.

One individual AlexNet inspired in particular was Sam Altman, the future co-founder and CEO of OpenAI. In 2012, when AlexNet came out, Altman was watching its progress. In an interview with Bloomberg, Altman recalls that although he had studied AI as an undergrad, he had been distracted by it for a while, and seeing AlexNet was the moment when he realized that:

“Man, deep learning seems real. Also, it seems like it scales. That’s a big, big deal. Someone should do something.”

OpenAI’s Company History

In 2012, AlexNet demonstrated incredible performance on image recognition tasks. AlexNet dramatically lowered the top-5 error rate, from 26.2% achieved by the runner-up models down to just 15.3%, and did so on a massive scale using the ImageNet dataset, which comprised over 14 million annotated images across more than 20K categories.

The performance was impressive, but more importantly, AlexNet’s success represented the culmination of three fundamental inflection points. As discussed in the prior section, for decades, neural networks had been relegated to the backwaters of AI research. Early excitement in the 1970s and 1980s was eventually dampened by significant challenges like vanishing gradients, limited data availability, and computational constraints.

These obstacles led many researchers to favor alternative methods such as support vector machines. Considered the state of the art in the 2000s, support vector machines were more predictable and easier to train on the modest datasets available at the time. Meanwhile, neural network research was largely overshadowed, leaving deep learning as a niche interest pursued by a few researchers like Hinton.

The second factor was the breakthrough in computational power brought about by Nvidia GPUs. In the 2000s, scientists predominantly relied on CPUs for training their models. CPUs process tasks sequentially, which makes the training of large, deep neural networks prohibitively slow. The introduction of Nvidia's CUDA platform in 2007 marked a turning point by enabling widespread access to parallel processing. AlexNet itself was trained on a pair of GTX 580s, which accelerated the training process by hundreds of times compared to traditional CPU-based methods. This breakthrough in hardware made it feasible for researchers to distribute the immense computational workload of neural network training across hundreds of GPU cores

The third factor was the assembly of ImageNet as the de facto dataset for computer vision. As described briefly in the prior section, spearheaded by computer science professor Fei-Fei Li, ImageNet was built on the lessons learned from her earlier, smaller datasets like Caltech 101 (~9K images). Li envisioned a dataset that reflected the complexity of the real world, drawing inspiration from the linguistic database WordNet to define roughly 22K countable object categories comprising over 14 million labeled images.

This massive dataset provided the raw material needed to train deep neural networks effectively and served as the foundation for the ImageNet Large Scale Visual Recognition Challenge. Prior to AlexNet’s entry in the third year of the challenge, the contest was dominated by models based on support vector machines, which only delivered incremental improvements. AlexNet’s neural network submission, leveraging both Nvidia's GPU acceleration and the expansive ImageNet dataset, shattered previous accuracy records and solidified neural networks as the future of AI research in the 2010s.

AlexNet showed that neural networks, when scaled with sufficient data and computational power, could dramatically outperform existing methods in highly complex accuracy-based tasks. For Sam Altman, this was the moment when the potential of AI became undeniable. Altman believed that the scalability of deep learning offered a tangible pathway to artificial general intelligence (AGI), machine intelligence that could intellectually match and eventually surpass humans in all aspects. These early signals from the research community spurred Altman to focus his energies on AI development, setting the stage for the founding of OpenAI.

Sam Altman

Sam Altman, a tech entrepreneur and investor, has been a central figure in OpenAI’s story since its inception. Before OpenAI, Altman was president of Y Combinator, where he showed an interest in fueling research into big future technologies (including AI). Altman became an advocate of AI safety and long‑term research. He was influenced by thinkers concerned with existential risk and pushed the idea that AGI should be developed cautiously for the benefit of all. In OpenAI’s early days, Altman helped articulate the organization’s altruistic mission and co‑authored its charter and strategy, emphasizing broad distribution of AI benefits.

However, as CEO of OpenAI in later years, Altman’s approach evolved toward aggressive deployment and commercialization of AI technology. He became the public face of OpenAI’s products, frequently touting the potential of systems like ChatGPT to transform industries. Altman believed that “the best way to make an AI system safe is by iteratively and gradually releasing it into the world” to learn from real feedback. This philosophy led OpenAI to release models and prototypes at a relatively fast pace, a stance that put it at odds with more cautious voices, both internally at OpenAI and externally from public critics.

By 2023, critics and some internal team members felt Altman was prioritizing growth and profit over safety. Tensions climaxed in November 2023 when the board suddenly fired Altman, citing concerns about his candor and the company’s direction. Many interpreted this as a clash over Altman’s aggressive approach. Ilya Sutskever (Chief Scientist and fellow co-founder) reportedly felt Altman was pushing OpenAI’s software too quickly into users’ hands, writing that “[OpenAI doesn’t] have a solution for steering or controlling a potentially superintelligent AI.” The attempted ouster was short-lived; employee revolt and investor pressure forced the board to reinstate Altman as CEO within a week. By the end of the attempted board “coup” and his reinstatement, Altman had become synonymous with OpenAI’s identity and vision.

After returning, Altman has continued to lead OpenAI’s push toward AGI, but with heightened scrutiny. Overall, Altman has steered OpenAI from a cautious research lab to an AI industry leader that is willing to commercialize cutting‑edge models to fund further research, a trajectory that he deems necessary to achieve AGI, even if it means accepting greater short‑term risk. His transformation from an AI safety‑focused advocate to a pragmatic, product‑driven CEO embodies the balancing act at the center of OpenAI.

Ilya Sutskever

Ilya Sutskever is often described as the “brain” behind OpenAI’s original core research and AGI strategy. As described earlier, as a PhD and postdoctoral researcher under Geoffrey Hinton, Sutskever was instrumental in early deep learning breakthroughs. He brought this expertise to OpenAI as co-founder and Chief Scientist.

Sutskever’s contributions at OpenAI were instrumental to the company’s initial success. He helped develop the fundamental architectures and techniques that underlie foundation models. He was a key author on influential research papers and guided teams working on unsupervised learning, reinforcement learning, and super alignment (alignment of superintelligent AI). Philosophically, Sutskever had always been deeply interested in AGI. He had been one of the strongest internal voices insisting that AGI is achievable with enough scale and the right algorithms, and that OpenAI’s purpose is to lead that effort responsibly.

In the early years of OpenAI, Sutskever supported open research and collaboration, but as OpenAI shifted strategy, he agreed with limiting certain model releases for safety (for instance, the deliberate staged release of GPT‑2 in 2019 had his support, to study misuse risks). Sutskever also became increasingly focused on AI safety as models grew more powerful. In 2023, he co‑led OpenAI’s Superalignment project, a dedicated effort to solve the technical alignment problem for superintelligent AI within four years.

Despite his commitment to safety research, Sutskever found himself at odds with Sam Altman’s pace of deployment. As a board member, Sutskever was one of the chief architects of Altman’s surprise firing in November 2023, motivated by fears that OpenAI was moving too fast without fully solving how to “steer or control a potentially superintelligent AI.” He and others on the board believed a pause or leadership change was necessary to refocus on risk mitigation. However, when the ouster backfired, Sutskever reversed course within days, and he signed the open letter urging Altman’s return, publicly apologizing and acknowledging the depth of employee support for Altman.

Though Altman and Sutskever remained amicable in public, the crisis significantly decreased Sutskever’s role at the company. By May 2024, Ilya Sutskever stepped down as Chief Scientist and departed OpenAI. He went on to found a new startup called Safe Superintelligence (SSI) in September 2024, aiming to pursue safe AGI research with a smaller, more cautious team. Sutskever, once the technical heart of OpenAI, chose to start afresh rather than continue amid OpenAI’s more commercial, fast‑paced environment.

Post OpenAI, Sutskever’s influence on AGI approaches remains extensive. Sutskever championed the importance of fundamental research and alignment at OpenAI, and appears to be doubling down on safety‑driven AGI development at SSI. Sutskever’s experiences show the tension that some believe exists between ambition and caution in developing AGI: Sutskever seems to have had the view that commercialization may be necessary to secure further funding for AGI, but that AGI safety should remain a higher priority.

Elon Musk

Elon Musk played a crucial role in OpenAI’s founding and early direction, though he departed relatively early and later became one of its most prominent critics.

Musk’s interest in AI was driven by fascination and fear. Musk has repeatedly warned over the 2010s and 2020s that superintelligent AI could become an existential threat if not properly controlled. In the mid‑2010s, Musk was alarmed by the rapid progress at DeepMind (which Google acquired in 2014) and by conversations with insiders like Larry Page who, in Musk’s view, seemed too cavalier about AI surpassing human intelligence.

Musk co-founded OpenAI in 2015 as a direct response to these fears. Musk envisioned OpenAI as a non‑profit “counterweight” to big tech companies, one that would focus on safe AGI and share its research openly. As a co‑chair of OpenAI in its first years, Musk provided not just funding but also a strong philosophical grounding in existential risk minimization. He often spoke about avoiding an “AI arms race” and advocated for cooperative, careful development of AI.

Musk pushed for bold goals (he encouraged the team to announce a $1 billion fund commitment at launch to show seriousness) but also urged the organization to be very mindful of safety. However, by early 2018, Musk’s relationship with OpenAI became strained. He felt OpenAI was not progressing fast enough to “catch up” to Google and reportedly offered to take charge of the organization. When other founders did not accept his proposal for greater control, Musk decided to leave OpenAI’s board in February 2018 to pursue AGI development through his own companies.

Upon leaving, he stated that he would focus on AI development at Tesla and that OpenAI would be better off charting its own path. Musk also withdrew a large planned donation, creating a funding gap that was later filled by Reid Hoffman (founder of LinkedIn and Inflection AI). After his departure, Elon Musk grew increasingly vocal in his criticism of OpenAI’s trajectory. In 2019, when OpenAI pivoted to a capped‑profit model and partnered with Microsoft, Musk expressed disappointment, suggesting that OpenAI had deviated from its open, non‑profit ethos.

By 2023, Musk openly criticized the company, saying “OpenAI was created as an open source (which is why I named it ‘Open’ AI), non-profit company to serve as a counterweight to Google, but now it has become a closed source, maximum-profit company effectively controlled by Microsoft…Not what I intended at all.” Musk even took to calling Sam Altman “Scam Altman”, indicating a personal rift.

In 2023, Musk founded xAI, his own AI venture, stating the goal to build a truth‑seeking AI and explicitly positioning it as an “open-source” alternative to OpenAI. He also became involved in legal disputes with OpenAI; in early 2023, he threatened legal action, claiming OpenAI’s pivot to for‑profit was against the original founding agreements, and in late 2024, he led a consortium that attempted a $98 billion bid to take control of OpenAI’s parent entity. Despite his early exit, Elon Musk’s influence on OpenAI’s AGI approach remains important. In the broader AI discourse, Musk remains a prominent voice warning against unchecked AI development and AGI existential risk, even as he heads xAI, a key OpenAI competitor in the foundational model space.

Putting Together a Team to Build AGI

The Founding Dinners

The creation of OpenAI was preceded by a series of private gatherings and discussions in 2015 that brought together tech entrepreneurs and AI researchers who shared both excitement about AI’s potential and anxiety about its risks. These founding dinners, reportedly attended by Sam Altman, Elon Musk, and a small circle of confidants, were where the core vision of OpenAI began to take shape. Though AGI was the listed goal, the co-founders were less sure about how to get there. When Dario Amodei (then at Google Brain, former OpenAI researcher, and future founder of Anthropic) asked what the goal of OpenAI was in lieu of “build[ing] a friendly A.I. and then release its source code into the world,” CTO Greg Brockman responded that “[OpenAI’s] goal right now… is to do the best thing there is to do. It’s a little vague.”

Key Team Members

OpenAI’s founding team was a blend of Silicon Valley tech entrepreneurs and some of the world’s leading AI researchers, reflecting the interdisciplinary nature of the challenge. At launch, the organization named Sam Altman and Elon Musk as co-chairs of the board, signaling both a commitment to its mission and star power to draw talent and funds. Ilya Sutskever came on as OpenAI’s first Research Director, with responsibility for setting the research vision for OpenAI and attracting top research talent to OpenAI. Greg Brockman, who left his role as CTO of Stripe to join, became OpenAI’s CTO and was responsible for building out the engineering team. The early technical team featured Wojciech Zaremba and John Schulman, researchers known for contributions to deep learning and robotics, as well as Trevor Blackwell, Vicki Cheung, Andrej Karpathy, Durk Kingma, and Pamela Vagata.

This mix included experts in various subfields. Karpathy was known for work in computer vision, later became Tesla’s AI director, and founder of Eureka Labs; Kingma co-developed important generative modeling techniques (VAE); Schulman was an expert in reinforcement learning. OpenAI also assembled a notable group of advisors; luminaries like Pieter Abbeel (co-founder of Covariant, developer of AI models for robots), Yoshua Bengio (“Godfather of AI” and 2018 Turing Award laureate alongside Geoffrey Hinton and Yann LeCun), Alan Kay (2003 Turing Award Laureate), Sergey Levine (co-founder of Physical Intelligence, developer of AI models for robots), and Nick Bostrom (AGI alignment and existential risk philosopher) were listed as advisors or supporters early on. The founding team’s breadth indicated OpenAI’s intent to tackle everything from game-playing AI to robotics to language models.

On the business and funding side, in addition to Musk and Altman, Reid Hoffman (LinkedIn co-founder), Jessica Livingston (Y Combinator co-founder), Peter Thiel (Founders Fund and PayPal co-founder), Amazon Web Services, and Infosys were announced as initial funders. Many of these figures attended early meetings or were involved in shaping the strategy (Thiel, for example, had an interest in long-term tech breakthroughs, and Hoffman was interested in the societal impacts of AI). Each founding member had a defined role: Altman as the facilitator and public face, Musk as the visionary benefactor, Brockman running day-to-day engineering, and Sutskever leading research. This core team established OpenAI’s reputation from the start as a heavyweight effort uniting talent from both academia and industry.

Early AGI View

At its founding, OpenAI explicitly positioned itself as an organization focused on the path to AGI, meaning AI that can match or exceed human capabilities across a broad range of tasks. The founders conceptualized AGI as a long-term, but not infinite, project. Timelines would manifest on the order of decades, but the team remained agnostic about exactly when it might arrive, emphasizing instead that preparation and guiding principles were needed immediately.

The OpenAI team believed that AGI would most likely emerge from an extension of then-current techniques (deep learning and reinforcement learning), scaled up and combined with new ideas. They did not see a need to invent a wholly new paradigm for intelligence, but rather to incrementally whittle away at AI’s limitations, task by task. This philosophy was evident in their research agenda, which tackled a variety of domains (vision, language, robotics, games), the idea being that progress in many narrow domains would eventually yield general intelligence.

An internal concept that guided OpenAI’s thinking was something akin to an “AI Kardashev scale” or levels of AI capability. Though not public at the time, OpenAI researchers thought in terms of milestones. For example, achieving human-level performance in a video game, then achieving human-level dialogue, then human-level learning, and so on, each increasing the “general” nature of the AI. Eventually, these stages would lead to an AI that can do anything a human can, and more.

From the outset, OpenAI’s philosophy was that AGI could be an overwhelmingly positive development for humanity, ending scarcity, curing diseases, improving the quality of life globally, but only if its rollout was managed carefully. Thus, the founding team stressed cooperative principles. The OpenAI Charter later encapsulated this by stating that if any one “value-aligned, safety-conscious” lab (including OpenAI) was close to AGI, “we commit to stop competing with and start assisting this project.” OpenAI’s founding AGI philosophy was simultaneously optimistic yet cautious. The company assumed AGI is achievable and would work actively toward it, but simultaneously build in ethical guardrails and a mindset that the outcome (benefiting humanity) is far more important than just being the first to reach AGI.

Existential Risk

Given that several founders were motivated by the dangers of uncontrolled AI, OpenAI’s founding documents and discussions gave significant attention to safety measures. The team was well aware of existential risk scenarios popularized by thinkers like Nick Bostrom and Eliezer Yudkowsky, where a superintelligent AI would pursue goals misaligned with human values directly or indirectly, with catastrophic results.

To address this, OpenAI committed to a focus on AI safety research from day one. This included technical research on alignment (how to ensure AI systems do what humans intend) and policy research on how to deploy powerful AI systems responsibly. OpenAI’s initial press release noted the difficulty of predicting timelines for human-level AI and the dual-use nature of such technology (it could “benefit society” or “damage society if built or used incorrectly”). By setting up as a non-profit and pledging to collaborate and publish openly, the founders believed they were structurally mitigating some risk as the lab would not be under pressure to take reckless shortcuts for profit or hide progress.

In practice, early OpenAI sought to address long-term risk by shaping the research culture by hiring researchers interested not just in raw capability but also in ethics and safety, and they funded work on AI alignment strategies (such as inverse reinforcement learning and, later, debate and reward modeling for alignment). They also engaged with the wider community on safety. For example, OpenAI attended and supported the Beneficial AI conference at Asilomar in 2017, which discussed AI principles to avoid existential hazards.

Another way the founding team addressed risk was by instituting a board that had an unusual level of power; the board of the non-profit could even override the management of the for-profit arm if it believed an OpenAI project was leading to undue risk. This governance structure was meant as a bulwark against the scenario of a CEO unilaterally pushing to deploy a dangerous AI system; the board (bound by the non-profit mission) was supposed to keep the mission on track. Concern for existential risk was built into OpenAI, influencing everything from research priorities (with alignment work running in parallel to capability work) to institutional design.

OpenAI Name Origin

The name “OpenAI” was chosen in deliberate contrast to the state of AI research at the time. In 2015, cutting-edge AI was being developed behind closed doors in large companies (Google, Facebook) or secretive government projects. By choosing the name OpenAI, the team signaled that their organization would be “open” in two possible interpretations.

The first interpretation, as defined by Musk (who originally proposed the name OpenAI), meant that OpenAI would collaborate openly and publish its findings for the world. The founding statement promised to “publish… papers, blog posts, or code” and to share any patents with the public. This was meant to engender a spirit of transparency and trust, inviting the global scientific community to join in advancing AI rather than treating it as proprietary IP.

The second interpretation referred to the outcome. OpenAI was dedicated to ensuring the benefits of AI were open to all, not locked behind one corporation or government. As Altman argued, AI’s advantages should be broadly distributed, rather than creating a scenario where only a select few reaped the rewards.

Internally, the leadership acknowledged that “open” did not necessarily mean releasing every detail of every project immediately. Early on in the company’s development, Altman stressed that contrary to popular expectations, OpenAI would not “release all of our source code.” Sutskever also made it clear in internal emails that “open” meant that the benefits of AI would accrue to the public, but OpenAI would not build in public after a certain scale:

“As we get closer to building AI, it will make sense to start being less open. The Open in OpenAI means that everyone should benefit from the fruits of AI after it's built, but it's totally OK to not share the science (even though sharing everything is definitely the right strategy in the short and possibly medium term for recruitment purposes).”

Tensions between the first and second interpretations began to grow when OpenAI chose not to open-source the full version of GPT-2 in early 2019, citing misuse concerns. Nonetheless, the name has been a guiding ideal. The rationale was that an open approach would build trust and safety through cooperation and prevent any single actor from gaining a decisive advantage in AI.

Over the years, critics pointed out that OpenAI became less “open” (as it partnered with Microsoft and kept certain models closed), but the organization has maintained that its end goal remains the same: to openly share AGI’s benefits with humanity. At least in the beginning, the name “OpenAI” was chosen to stake a philosophical position on the journey to AGI, safety, transparency, and common good above all, even as commercial pressures began to erode some of OpenAI’s founding commitments.

OpenAI’s AGI Philosophy

The AI Kardashev Scale

As OpenAI progressed, it internally developed a framework to map the stages on the road to AGI. This was essentially an AI capability scale, analogous to the Kardashev scale used to classify civilizations. OpenAI defined a series of levels to classify AI systems, which gradually evolved as their research advanced. By mid-2024, this had crystallized into a five-level scale that OpenAI shared with its employees to benchmark progress toward AGI.

Level 1: “Chatbots”, capable of natural language conversation (ChatGPT is a prime example of this first rung).

Level 2: “Reasoners”, AI that can perform human-level problem-solving in specialized domains, essentially matching human intelligence at certain tasks.

Level 3: “Agents” are AI systems that can not only reason but also take actions in the real or digital world autonomously, handling tasks that might normally require a human with a doctoral-level education.

Level 4: “Innovators” AI that can help drive scientific or creative innovation, meaning they contribute original ideas or solutions beyond existing human knowledge.

Level 5: “Organizations”, referring to AI that can perform the work of an entire organization, a full general intelligence capable of orchestrating complex, multi-step projects and managing resources like a group of humans would.

OpenAI executives in 2024 told staff that they believed they were at Level 1 (Chatbot stage) but on the cusp of Level 2. This roadmap is OpenAI’s answer to “How close are we to AGI?”, with Level 5 corresponding to a true artificial general intelligence that can outperform humans at most tasks. OpenAI aimed to measure progress more concretely and communicate both internally and eventually externally about how advanced their AI systems are. It also reflects OpenAI’s philosophy that AGI is not a single leap, but a continuum of increasingly capable systems.

AI Safety vs “Move Fast and Break Things”

One of the core tensions throughout OpenAI’s history has been the debate between prioritizing AI safety and taking a more aggressive, Silicon Valley-style “move fast and break things” approach to development and deployment. Internally, this manifested as a split between team members (and board members) who wanted to thoroughly test and prove the safety of advanced AI systems before releasing them, and those who felt that iterative deployment to the public was the best way to learn and improve.

By late 2023, this philosophical rift came to a head. Sam Altman represented the camp that favored rapid deployment. He argued that exposing models like ChatGPT to millions of users helps uncover problems and yields valuable feedback to refine model development. Altman believed that cautious, purely in-lab development could not anticipate real-world issues as effectively, and that a gradual ramp-up of capabilities in public, alongside product commercialization to supercharge funding growth, would actually make the technology safer in the long run.

On the other side, Ilya Sutskever urged more caution. Sutskever pointed out that as AI systems approach human-level reasoning (and beyond), unpredictable behaviors could emerge, and alignment (ensuring AI goals mesh with human values) becomes critically hard to guarantee in the wild. This faction preferred intensive testing, formal verification, and even holding back certain model releases until better theoretical solutions for control were found.

The clash of these viewpoints played out in public in November 2023, when OpenAI’s board (led by Sutskever and several safety-conscious members) abruptly fired Altman. Implicitly, the firing was due to concerns that he was driving OpenAI to take unacceptable risks as a result of its rapid product commercialization.

In an OpenAI blog post just months before Altman’s firing, Sutskever had written, “We don’t have a solution for…controlling a potentially superintelligent AI” yet, making his concern that the technology was outpacing internal safeguards public. Meanwhile, Altman countered that delaying releases could let competitors get ahead or could slow down the very learning process needed to make AI safe. After Altman’s brief ouster and dramatic return, it became clear that the “move fast” side had won out; employee sentiment and investor backing favored continuing OpenAI’s rapid progress.

Even as OpenAI promised better internal communication and more transparency with its board on safety issues, factions within OpenAI, or “tribes”, as Altman called them, were dismantled systematically. Several OpenAI co-founders, including Sutskever and Mira Murati (then OpenAI's CTO), alongside other key OpenAI employees, would leave over the next year, and the entire board was reconstituted.

OpenAI’s challenge since then has been to not appear reckless while still pushing forward. The company often states that it believes in deploying AI carefully and has improved its models’ safety. Nonetheless, critics remain who see OpenAI as having shifted toward a “break things” mentality by releasing powerful models (GPT-4, etc.) without fully solving known problems like bias, misinformation, or the theoretical alignment problem.

Commercialization

OpenAI’s structure and strategy underwent a significant evolution from pure research to commercialization, especially from 2019 onward. Initially established as a non-profit, OpenAI had no pressure to generate revenue and was funded by donations. However, as the scale of experiments (and the corresponding costs) grew, OpenAI embraced commercialization as a means to sustain its mission. The first major step was the 2019 restructuring into a capped-profit model, which allowed it to accept venture investments.

The direct catalyst was the high cost of training state-of-the-art models; compute and talent were expensive, and OpenAI’s leadership openly acknowledged that without new capital, they’d hit a wall. Once the for-profit OpenAI LP was created, OpenAI quickly entered into a partnership with Microsoft. Microsoft’s $1 billion investment in 2019 provided not just funds but also access to a massive cloud computing infrastructure (Azure) tailored for OpenAI’s needs. In return, Microsoft gained a preferred position to commercially utilize OpenAI’s technologies (for example, the exclusive license to GPT-3 for integration into its products and Azure services).

This partnership marked the beginning of OpenAI’s monetization of its research. By 2020, OpenAI had launched a cloud API offering, allowing developers to pay to use GPT-3 and other models, a significant shift from earlier years when code and models were released openly. The revenue from the API and later consumer-facing products (like the ChatGPT Plus subscription launched in 2023) began to fund further research. OpenAI’s commercialization accelerated with the unprecedented success of ChatGPT.

ChatGPT, which launched in November 2022, reached 100 million monthly active users within just two months, a record at the time. Suddenly, OpenAI was a household name, and enterprises were lining up to collaborate or invest. In early 2023, Microsoft invested an additional $10 billion into OpenAI, in a deal that further deepened commercial ties (integrating OpenAI models into Microsoft’s Office, Bing, and Azure offerings). OpenAI also started forming partnerships across various industries, for instance, working with GitHub on Copilot (an AI coding assistant based on OpenAI’s Codex model) and with companies like Stripe and Khan Academy to pilot its GPT-4 model.

While these moves provided much-needed capital and data (from real-world usage) to OpenAI, they also meant that OpenAI had effectively become a business with customers and product deadlines. Some critics argue that this created a conflict of interest. The more OpenAI chased revenue, the more incentive it had to release powerful AI quickly, possibly at odds with safety concerns. The leadership tried to counter this by framing commercialization as a means to an end.

Sam Altman frequently said that OpenAI’s profits (capped for investors) would all go into funding the expensive mission of building AGI, and that they would not sacrifice safety for profit, though skeptics remained unconvinced. By 2024, OpenAI’s commercialization reached another inflection point. After the board saga, OpenAI held discussions about removing the profit cap and transitioning into a traditional for-profit corporation. In October 2024, OpenAI raised $6 billion in a new funding round at a valuation of $157 billion, with the condition that it would convert into a for-profit company within two years, indicating that even more traditional venture capital dynamics are coming into play. In March 2025, OpenAI raised an additional $40 billion in an investment round led by SoftBank, bringing the company to a $300 billion valuation. Seven months later, it reached a $500 billion valuation after a $6.6 billion share sale.

Under research, competitive, and internal/external pressure, OpenAI evolved from a grant-funded AI safety lab to a global startup with significant revenue streams and products used by millions. This commercial pivot not only provided the resources to train frontier models, but it also fundamentally changed the organization’s structure and external image. By 2025, OpenAI was often mentioned in the same breath as the largest public tech companies, and its decisions are scrutinized not only for scientific merit but also for business implications, even as OpenAI tries to maintain alignment with its founding mission.

Board Drama, Key Departures, and Corporate Restructuring

Sam Altman’s Ousting and Reinstatement

The events of November 2023 at OpenAI marked one of the most dramatic corporate leadership crises in recent tech history. As described briefly earlier, in a surprise announcement on November 17, 2023, OpenAI’s board of directors fired CEO Sam Altman, citing that he had not been “consistently candid” with the board. Along with Altman, President and co-founder Greg Brockman also departed (he resigned in protest after Altman’s firing). This move was shocking because OpenAI had been enjoying a string of successes (ChatGPT, GPT-4) and Altman was widely seen as the driving force behind the company. The fallout revealed a schism at the board level, primarily over issues of trust and the pace of AI development.

The board (which at the time included Ilya Sutskever, Quora CEO Adam D’Angelo, tech entrepreneur Tasha McCauley, and academic Helen Toner) was more concerned about long-term safety and felt Altman was pushing too fast without adequate governance. In the immediate aftermath, OpenAI’s CTO Mira Murati was appointed interim CEO, and the board began searching for a permanent replacement, even as Altman’s ouster sparked public controversy.

Over that weekend, nearly the entire OpenAI staff of researchers and engineers revolted. Altman and Brockman were courted by Microsoft, which offered them positions to continue their work; over 700 OpenAI employees (out of 770) signed an open letter saying they would follow Altman to Microsoft unless the board reversed its decision. This unprecedented show of employee support, alongside pressure from investors, forced a rapid reconsideration.

By November 21, 2023, OpenAI announced a deal to reinstate Sam Altman as CEO and replace the board with a new slate of directors. The new interim board consisted of more industry‑friendly figures (including former Salesforce co‑CEO Bret Taylor and former U.S. Treasury Secretary Larry Summers), with the old board members (except Sutskever) departing. Ilya Sutskever, who had been part of the decision to fire Altman, publicly apologized for the turmoil and was retained as Chief Scientist initially, but was removed from the board. In the end, Altman returned to OpenAI’s helm just days after being ousted, and Greg Brockman also returned to the company (though not to the board).

Unsealed court documents, which were released in 2025, have since added much more texture to why the board moved so abruptly. In a deposition taken as part of Elon Musk’s lawsuit against OpenAI, co-founder and former chief scientist Ilya Sutskever testified that he believed Altman “lied habitually” and described a “consistent pattern” of pitting senior leaders against one another, including then-CTO Mira Murati and executive Daniela Amodei (who later left to co-found Anthropic).

Sutskever said he had spent more than a year quietly documenting concerns about Altman’s conduct and ultimately prepared a 52-page memo at the request of directors Adam D’Angelo, Helen Toner, and Tasha McCauley that catalogued instances where, in his view, Altman misled the board, obscured internal safety processes, and manipulated internal dynamics. Other reporting on the deposition indicates that former board members also weighed accounts that Altman had failed to inform them in advance about major product launches (such as ChatGPT) and had not clearly disclosed his control of OpenAI’s startup fund, deepening their sense that they could not rely on him to be transparent with a mission-driven nonprofit board.

The court filings also reveal that, in the chaotic days after Altman’s firing, OpenAI briefly explored a potential merger with rival Anthropic as a contingency plan if the company could not be stabilized under new leadership. According to Sutskever’s testimony and other accounts, some directors saw a merger or even an orderly wind-down of OpenAI as consistent with their duty to minimize the risks of misaligned AGI, rather than allow the organization to continue under a CEO they no longer trusted. These details reinforce that the crisis was not simply a clash over “moving too fast” on AI, but the culmination of a prolonged breakdown in trust between Altman and key members of the nonprofit board, one that only reversed when employee and investor pressure made his removal untenable in practice.

This governance crisis made three things clear to public observers. First, it highlighted the immense value placed on Altman’s leadership by the OpenAI team and tech giant partners; essentially, the board could not execute OpenAI’s mission without the support of its employees and Microsoft. Second, it exposed how OpenAI’s unusual governance (a non‑profit board controlling a for‑profit) led to instability, as board directors focused on long‑term risks might clash with management’s near‑term plans. Finally, it brought to light communications issues; the phrase “breakdown of communications” was cited around the firing, indicating Altman and the board had not been on the same page about OpenAI’s trajectory.

After his reinstatement, Altman affirmed his commitment to OpenAI and moved quickly to stabilize the organization. The board was expanded in early 2024 to include new members with technical expertise and credibility to avoid such insular decision-making in the future.

The Altman firing incident has since become a case study in AI company governance. For OpenAI, the immediate impact was an even stronger alignment between Altman and OpenAI’s employees and investors, effectively green‑lighting Altman’s “move fast” strategy going forward, but with an acute awareness that AI safety concerns had fallen to the wayside.

2024 Exodus of Founding Team and Talent

In the aftermath of the late‑2023 leadership saga, OpenAI saw a notable exodus of some founding members and other key talent during 2024. The most high‑profile departure was Ilya Sutskever, co-founder and Chief Scientist. As mentioned, after Altman’s return, Sutskever’s role was diminished; he was removed from the board and, in May 2024, left OpenAI entirely. Sutskever’s exit was symbolic: one of the original architects of OpenAI’s research was leaving the organization he helped build. His departure was coupled with the dismantling of the Superalignment team he had led.

Several other researchers (including Superalignment co-lead Jan Leike) who were focused on long‑term safety and alignment left around this time as well, either to join Sutskever’s new venture or other labs. Sutskever co‑founded Safe Superintelligence Inc. (SSI) in mid‑2024, a new research startup dedicated to building safe AGI with no other product development, taking with him at least one former OpenAI researcher (Daniel Levy) as a co‑founder. SSI managed to raise $1 billion in its first months, indicating strong investor faith in the ex-OpenAI team.

Mira Murati also left OpenAI to start her own AI venture. On September 25, 2024, Murati announced that she was stepping away from the company to “create the time and space to do my own exploration.” This announcement was followed hours later by resignations of OpenAI’s chief research officer, Bob McGrew, and a vice president of research, Barret Zoph. Murati later announced the founding of Thinking Machines Lab in February 2025. McGrew became a senior advisor to the lab, and Zoph became CTO of Thinking Machines.

Furthermore, a number of OpenAI’s safety and policy team members quit in early 2024, apparently out of disillusionment with the board episode and the direction of the company. For example, OpenAI’s former head of policy/regulatory affairs, Miles Brundage, resigned, and several alignment researchers publicly expressed pessimism about OpenAI’s commitment to safety after seeing how the board was treated. This brain drain in 2024 had both practical and cultural impacts.

Practically, OpenAI had to hire aggressively to fill the void. By 2024, the organization was recruiting top researchers and engineers from tech giants (helped by the allure of OpenAI’s high valuation and resources). Culturally, the departure of mission‑driven talent like Sutskever signaled a shift in OpenAI’s internal balance. The center of gravity moved more toward those focused on scaling, engineering, and product delivery. It also meant the loss of some historical memory and context from the founding era. On the other hand, many of the newer employees (who joined during the GPT‑3/ChatGPT boom) were very aligned with Altman’s vision, so OpenAI’s day‑to‑day operations continued relatively unabated.

The 2024 exodus served as an inflection point for the company; OpenAI transitioned from the founders‑run research lab to a next‑generation company less dependent on its original team. By 2025, apart from Sam Altman himself, most of the daily leadership did not include the early founder team. The emergence of competitor labs (Anthropic, which was founded in 2021 by Dario Amodei after leaving OpenAI, SSI by Sutskever in 2024, and Thinking Machines by Murati in 2024) means some of the talent that pioneered OpenAI’s breakthroughs is now working elsewhere on similar goals, potentially in ways more aligned with the original OpenAI mission than OpenAI’s later commercial incarnation. OpenAI has undoubtedly been strengthened in deployment engineering and scale by new talent and funding, but the loss of “the idealists” has permanently affected the company’s trajectory and AI safety attitude towards AGI.

Structure Changes

OpenAI’s organizational structure has undergone several transformations: from a non‑profit to a capped‑profit hybrid, and now toward an even more commercially driven model. Originally, OpenAI was a 501(c)(3) non‑profit entity. The rationale was to free the company from profit incentives so it could focus purely on the societal good. Early governance was straightforward — a board of directors (composed of scientists and donors) oversaw the institute, and day‑to‑day decisions were made by the research leadership. By 2019, after recognizing the funding imperative, OpenAI created the for‑profit subsidiary OpenAI LP with the unique capped‑profit model.

The non‑profit (OpenAI Inc.) remained as the controlling shareholder of the LP, and its board had ultimate control to ensure mission adherence. Investors and employees could hold equity in the LP, but with a capped return. This structure was novel, an attempt to marry the need for billions in capital with a mission‑driven backbone. In practice, it meant that by 2020–2021, OpenAI was operating much like a startup (with venture capital expectations) but still had an unusual oversight mechanism. This duality showed during the 2023 Altman ousting. The non‑profit board (with no equity, focused on mission) could fire the CEO of the company, a scenario that wouldn’t happen in a normal startup or public company. Many saw this governance experiment as problematic when the crisis hit, because the board didn’t include representatives of major stakeholders like Microsoft or the employees, creating a disconnect.

In early 2024, after Altman’s reinstatement, OpenAI immediately took steps to adjust its governance. It added board members with more industry experience. More radically, OpenAI started exploring abandoning the non‑profit control entirely. By late 2024, it became public that OpenAI had agreed (as part of a new funding round) to transition into a traditional for‑profit structure within two years. This likely means dissolving or minimizing the role of the non‑profit OpenAI Inc., and having a normal corporate board for OpenAI Global (potentially a public‑benefit corporation or even a standard C‑corp).

Sam Altman himself noted that raising capital as a nonprofit was impossible, and even the capped‑profit model, while well‑intentioned, was cumbersome when OpenAI needed to move quickly. Another structural shift was internal. OpenAI started to split into more product‑focused teams (for ChatGPT, for the API, for enterprise services), akin to a mature tech firm. The non‑profit still technically exists and engages in some activities (like funding AI safety research outside OpenAI and computing educational initiatives), but its direct control over the technology will diminish. In essence, OpenAI’s structure is converging with the tech industry norm with heavy investor influence, profit‑driven metrics, and conventional corporate governance, a far cry from the niche research lab in 2015.

This evolution has been controversial. OpenAI itself contends that its core mission is unchanged, and it still prioritizes safety alongside an organizational form that best accelerates its work. The coming years (2025 and beyond) will likely see OpenAI as a fully commercial entity, possibly even considering an IPO at up to a $1 trillion valuation according to an October 2025 report, yet one that is tasked (perhaps by its charter or internal policies) with keeping long‑term AGI safety as a priority. It’s an open question how well the mission can be preserved under a more profit‑oriented structure.

Product Evolution

Early AGI Experiments

In its quest for AGI, OpenAI carried out a number of early experiments and projects that were designed to test the waters of general intelligence and advance the state of the art. One of the first initiatives released was OpenAI Gym in 2016, a toolkit for reinforcement learning researchers. Gym provided a common set of environments (like games and tasks) where algorithms could be evaluated, fostering progress in RL. Gym’s creation stemmed from OpenAI’s philosophy of measuring progress, as the team wanted to easily track how a single algorithm might perform across many tasks as a proxy for generality.