Thesis

The market for AI-generated 3D content is growing rapidly as production costs for traditional 3D workflows remain extremely high. As of June 2023, traditional workflows cost game studios over $300 million per AAA title, with studios allocating 30-40% of budgets to labor-intensive 3D modeling and design. Beyond entertainment, robotics companies rely on massive volumes of synthetic 3D training data, with single datasets costing over $100K to generate. Across industries like gaming, simulation, architecture, and robotics, demand for scalable 3D generation is projected to grow at 24% annually from 2025 to 2032, outpacing manual production capacity.

As demand grows, production remains constrained by manual 3D workflows and the need for highly specialized labor. Legacy software systems require teams to manually model, texture, and assemble scenes. For example, Unreal Engine workflows often involve creating base meshes in external software, refining details and textures, and importing external assets for real-time rendering. Emerging AI-powered tools such as Kaedim (2D-to-3D mesh generation), Luma AI (3D asset generation), and Polycam (LiDAR and photogrammetry capture) accelerate specific tasks but only address isolated parts of the pipeline. The result is a fragmented ecosystem where asset-level automation exists while end-to-end world generation remains unsolved.

World Labs builds Large World Models (LWMs) that generate persistent, explorable 3D environments from single images or text prompts, enabling AI to construct entire virtual worlds rather than isolated assets. By automating the complex process of modeling, texturing, and assembling environments, the company provides the foundational infrastructure for scalable 3D content production. This approach empowers teams across gaming, visual effects (VFX), robotics simulation, and digital twin applications, addressing a market where manual 3D workflows can no longer match the speed and scale of demand. World Labs solves this scalability challenge by delivering AI-native infrastructure that automates world-building, translating creative intent directly into 3D realization.

Founding Story

Source: World Labs

In January 2024, Fei-Fei Li (Co-Founder, CEO), Justin Johnson (Co-Founder), Christoph Lassner (Co-Founder), and Ben Mildenhall (Co-Founder) founded World Labs to develop 3D environment generation, complete with real-time simulation.

Li, who serves as the company’s CEO, is widely recognized as the “Godmother of AI.” She is the Sequoia Professor of Computer Science at Stanford University and Co-Director of the Human-Centered AI Institute, with previous leadership roles as Director of Stanford’s AI Lab and Chief Scientist of AI/ML at Google Cloud. Known as the inventor of ImageNet, a large visual database that significantly advanced AI’s ability to interpret images, she is regarded as one of the driving forces behind the deep learning revolution.

Prior to World Labs’s founding, Li sought to tackle spatial intelligence, the next foundational challenge for artificial intelligence. She identifies this as a fundamental unsolved problem for AI, critical to achieving artificial general intelligence, since understanding and interacting with physical space is essential beyond language and text processing.

After studying under Li, Johnson began collaborating with her on methods for AI systems to generate and reason about 3D spaces. Drawing on his background in computer vision and generative modeling, Johnson brought technical depth from his academic research at the University of Michigan and his work at Facebook AI Research. In early 2023, their shared vision became clear during a dinner with Andreessen Horowitz partner Martin Casado, who encouraged the foundational ambition to transform AI’s capabilities by integrating vision, graphics, and interactive world modeling.

The team then recruited Lassner and Mildenhall based on their expertise in rendering and spatial reasoning, rounding out a founding team uniquely capable of merging generative AI with physical simulation and interactivity. Lassner previously developed Pulsar, an efficient sphere-based differentiable renderer that laid the groundwork for a 3D rendering technique known as Gaussian Splatting. He has extensive experience in 3D graphics and spatial computing from his work at Meta Reality Labs and Epic Games. Mildenhall brings his expertise in 3D neural rendering from Google Research. He is the co-creator of NeRF (Neural Radiance Fields), which established a method for generating photorealistic 3D scenes by encoding geometry and appearance within neural networks.

Product

Foundations of Modern 3D Representation

3D scenes have traditionally been represented as polygon meshes: collections of vertices, edges, and faces that define object surfaces. This approach requires artists to manually model geometry, unwrap UV coordinates, and paint textures, a process that can take weeks for a single environment. Neural Radiance Fields (NeRFs), co-developed by Mildenhall in 2020, replaced meshes with neural networks that map 3D coordinates and viewing directions to color and density (opacity) values.
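To make the idea concrete, the sketch below shows how a radiance field turns samples along one camera ray into a pixel color. It assumes the standard volume-rendering quadrature used in the NeRF literature. The `toy_field` function is a hypothetical stand-in for a trained network (a real NeRF also conditions color on viewing direction), and every name here is illustrative rather than taken from any World Labs or Google code.

```python
import numpy as np

def toy_field(points):
    """Hypothetical stand-in for a trained NeRF: maps 3D points to (rgb, density).

    The 'scene' is a red sphere of radius 0.5 centered at the origin.
    """
    inside = np.linalg.norm(points, axis=-1) < 0.5
    density = np.where(inside, 20.0, 0.0)               # dense inside, empty outside
    rgb = np.tile(np.array([1.0, 0.0, 0.0]), (len(points), 1))
    return rgb, density

def render_ray(origin, direction, near=0.0, far=4.0, n_samples=128):
    """Composite field samples along one ray into a pixel color and opacity."""
    t = np.linspace(near, far, n_samples)                # sample depths along the ray
    points = origin + t[:, None] * direction             # (n_samples, 3) positions
    rgb, density = toy_field(points)
    delta = np.append(np.diff(t), 1e10)                  # spacing between samples
    alpha = 1.0 - np.exp(-density * delta)               # opacity of each segment
    # Transmittance: fraction of light reaching sample i without being absorbed.
    trans = np.cumprod(np.append(1.0, 1.0 - alpha[:-1]))
    weights = trans * alpha
    return (weights[:, None] * rgb).sum(axis=0), weights.sum()

# One ray through the sphere and one that misses it entirely.
color_hit, opacity_hit = render_ray(np.array([0.0, 0.0, -2.0]), np.array([0.0, 0.0, 1.0]))
color_miss, opacity_miss = render_ray(np.array([0.0, 2.0, -2.0]), np.array([0.0, 0.0, 1.0]))
```

Note that every sample along the ray is evaluated, including those in empty space. That per-sample cost is one reason later explicit representations became attractive for real-time use.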

Mildenhall's work on NeRF established the foundation for neural 3D rendering, while Lassner's Pulsar explored ways to accelerate rendering using explicit geometric primitives. Together, their research bridged the gap between implicit neural rendering and explicit geometric methods, paving the way for the next major technique, 3D Gaussian Splatting.

Introduced in 2023, 3D Gaussian Splatting is a new way to represent a 3D scene. Instead of building worlds from heavy 3D meshes or complex neural networks, the scene is broken down into thousands to millions of tiny, semi-transparent clouds called “Gaussians.” Because these clouds exist only where the scene has content, they enable continuous volumetric rendering without spending computation on empty space.

Source: Hugging Face
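The blending step behind Gaussian Splatting can be sketched in a few lines. The example below assumes the Gaussians have already been projected onto the image plane (that projection is omitted) and uses isotropic footprints for brevity, whereas real splats are anisotropic. The `Splat` class and `shade_pixel` function are hypothetical names chosen for illustration.

```python
import numpy as np
from dataclasses import dataclass

@dataclass
class Splat:
    """One Gaussian after projection to the image plane (projection step omitted)."""
    center: tuple      # (x, y) in pixels
    sigma: float       # isotropic footprint radius in pixels
    color: tuple       # (r, g, b) in [0, 1]
    opacity: float     # peak opacity in [0, 1]
    depth: float       # distance from the camera

def shade_pixel(pixel, splats, background=(0.0, 0.0, 0.0)):
    """Blend depth-sorted splats front to back at one pixel."""
    color = np.zeros(3)
    transmittance = 1.0                                   # light not yet absorbed
    for s in sorted(splats, key=lambda item: item.depth):  # nearest first
        d2 = (pixel[0] - s.center[0]) ** 2 + (pixel[1] - s.center[1]) ** 2
        alpha = s.opacity * np.exp(-0.5 * d2 / s.sigma ** 2)  # Gaussian falloff
        color += transmittance * alpha * np.array(s.color)
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:                          # pixel is saturated; stop early
            break
    return color + transmittance * np.array(background)

splats = [
    Splat(center=(5, 5), sigma=2.0, color=(1.0, 0.0, 0.0), opacity=0.8, depth=1.0),
    Splat(center=(5, 5), sigma=2.0, color=(0.0, 0.0, 1.0), opacity=0.8, depth=2.0),
]
center_color = shade_pixel((5, 5), splats)    # mostly red, with some blue showing through
far_color = shade_pixel((50, 50), splats)     # no splat reaches this pixel
```

Production renderers avoid even visiting splats that do not overlap a pixel by binning them into screen tiles first. This sketch loops over all of them for clarity.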

World Labs’ models build on Gaussian Splatting to generate complete environments. Its techniques combine the efficiency of explicit geometry with the realism of neural rendering, enabling high-fidelity, photorealistic scenes that remain lightweight and interactive.

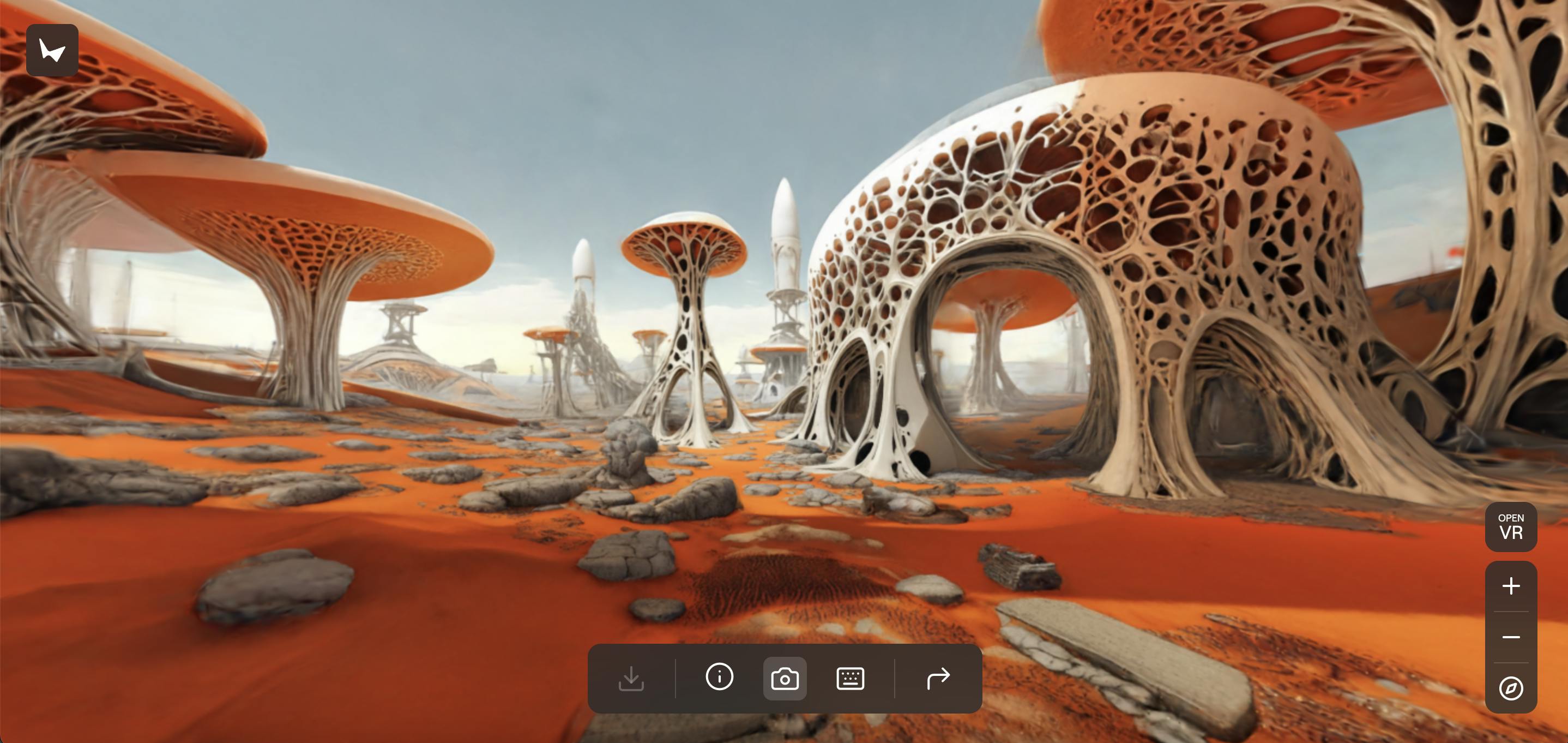

Marble

Building on this foundation, World Labs builds LWMs, AI systems that generate coherent, explorable environments from minimal inputs like a single image or text prompt. In September 2025, World Labs launched its first product, Marble, as a limited beta. All users can explore 3D worlds directly in the browser with six degrees of freedom navigation, while beta users can also generate new worlds from text or image prompts.

Source: World Labs

Users can build out expansive environments because Marble takes a single image and generates the parts of the environment that lie outside the frame. Compared to World Labs’s first release of its spatial intelligence tool in December 2024, Marble’s generated worlds are larger, more stylistically diverse, and have cleaner 3D geometry. In October 2025, World Labs integrated RTFM (Real-Time Frame Model) into Marble, enabling real-time world generation at interactive framerates on a single H100 GPU. RTFM uses posed frames as spatial memory, allowing users to explore endlessly without losing world detail or consistency. The model renders complex visual effects like reflections, glossy surfaces, and shadows learned from end-to-end video data.
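One way to picture "posed frames as spatial memory" is a store of previously generated frames, each tagged with the camera pose it was rendered from, which is queried for nearby views when a new frame is needed. The sketch below is an illustration under that assumption only and is not World Labs' implementation. The `PosedFrameMemory` class, its scoring rule, and the `angle_weight` parameter are all hypothetical.

```python
import numpy as np

class PosedFrameMemory:
    """Hypothetical store of generated frames keyed by camera pose."""

    def __init__(self):
        self.positions = []   # camera positions, each a length-3 array
        self.forwards = []    # unit view directions, each a length-3 array
        self.frames = []      # frame payloads (strings here; images in practice)

    def add(self, position, forward, frame):
        forward = np.asarray(forward, dtype=float)
        self.positions.append(np.asarray(position, dtype=float))
        self.forwards.append(forward / np.linalg.norm(forward))
        self.frames.append(frame)

    def nearest(self, position, forward, k=2, angle_weight=1.0):
        """Return the k stored frames whose poses are closest to the query pose."""
        position = np.asarray(position, dtype=float)
        forward = np.asarray(forward, dtype=float)
        forward = forward / np.linalg.norm(forward)
        dist = np.linalg.norm(np.stack(self.positions) - position, axis=1)
        # 0 when the views align, 2 when they point in opposite directions.
        view_gap = 1.0 - np.stack(self.forwards) @ forward
        score = dist + angle_weight * view_gap
        order = np.argsort(score)[:k]
        return [self.frames[i] for i in order]

memory = PosedFrameMemory()
memory.add([0, 0, 0], [0, 0, 1], "hallway")
memory.add([0, 0, 3], [0, 0, 1], "doorway")
memory.add([0, 0, 0], [0, 0, -1], "behind")

# Frames to condition on when rendering from one step into the hallway.
context = memory.nearest([0, 0, 1], [0, 0, 1], k=2)
```

Because the memory is indexed by pose rather than by time, returning to an earlier location retrieves the same frames, which is the intuition behind worlds that stay consistent during long explorations.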

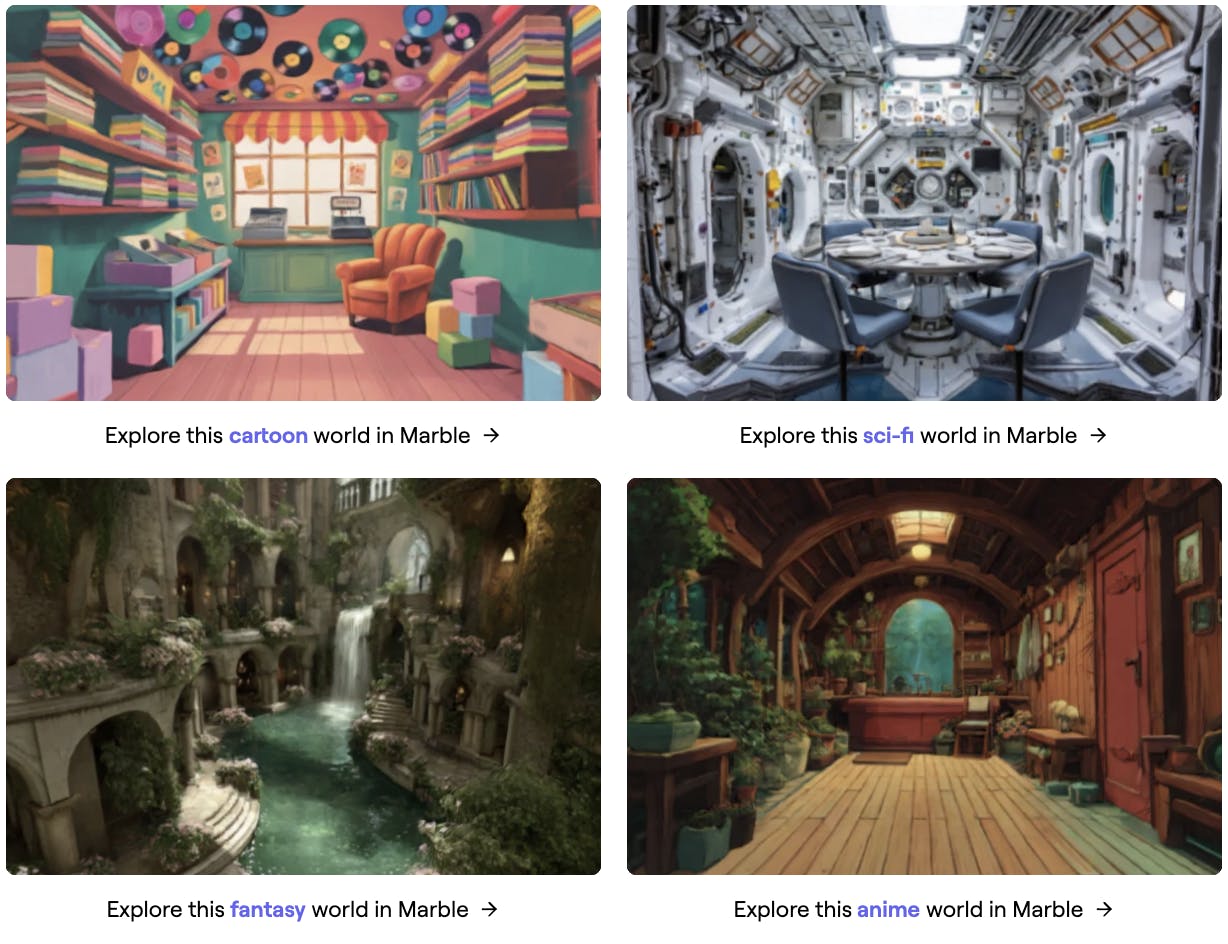

Source: World Labs

Marble's models can generate worlds in multiple visual styles, from flat designs to colorful cartoons and realistic, detailed imagery, adapting the same spatial understanding to different artistic treatments.

Source: World Labs

After generating worlds, builders can export their creations as Gaussian splats and use them in downstream projects. To support this workflow, World Labs developed Spark, an open-source rendering library that natively integrates Gaussian splatting into Three.js, enabling efficient rendering across desktops, laptops, mobile devices, and VR headsets. Marble also supports export to a variety of professional software, including Unreal, Unity, Blender, and Houdini. As of December 2025, World Labs plans to expand Marble to web platforms for easier sharing and distribution.

Market

Customer

World Labs’ target customers are organizations and creators that rely on high-fidelity 3D content but face significant costs or time constraints in manual scene production. This includes VFX studios, game and immersive-media developers (e.g., Industrial Light & Magic, Unity-based studios, independent VR creators), and industrial simulation and robotics teams building digital twins or training environments. Among these domains, World Labs’ earliest traction will likely come from content-creation and immersive-media markets, where faster world generation directly accelerates production.

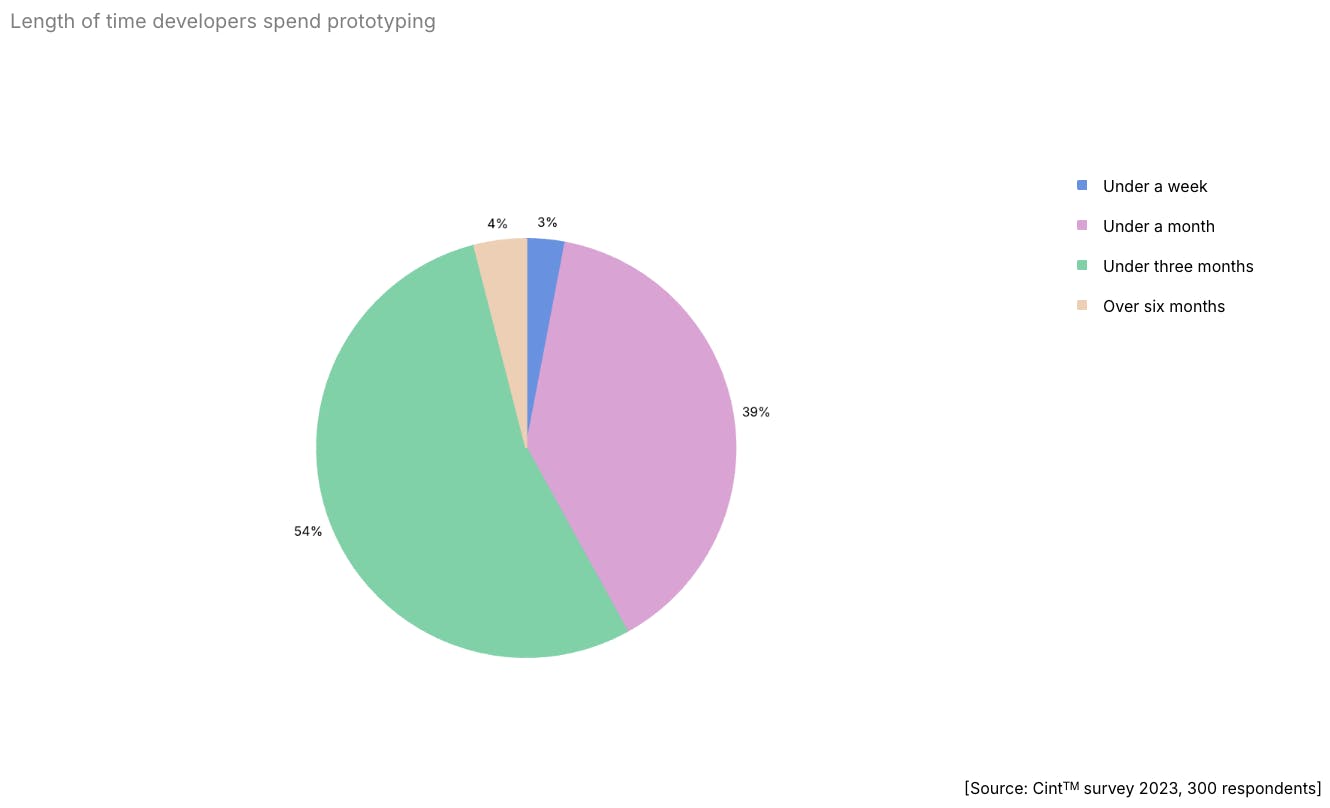

The largest near-term opportunity exists in 3D content creation for gaming, film, design, and animation. In 2024, 96% of game studios were completing prototypes in under three months, up from 85% in 2022, highlighting the industry’s accelerating production cycles.

Source: Unity

As of 2023, most game studios faced rising development costs, with 77% reporting increases and 88% seeking new tools to speed production. This pressure drives rapid adoption of generative workflows, and 56% of AI adopters in game development already use AI specifically for world-building. Similarly, AR/VR builders creating interactive worlds require environments that can be built quickly, navigated freely, and iterated on during concepting.

Given this shift toward AI-generated spatial content, World Labs extended its technology into education and training. In December 2024, the company announced integration with EON Reality to power AI-based spatial learning environments. This partnership aims to bring world-model generation to classrooms and corporate training platforms beginning in 2025, enabling instructors to build immersive learning simulations without 3D design experience.

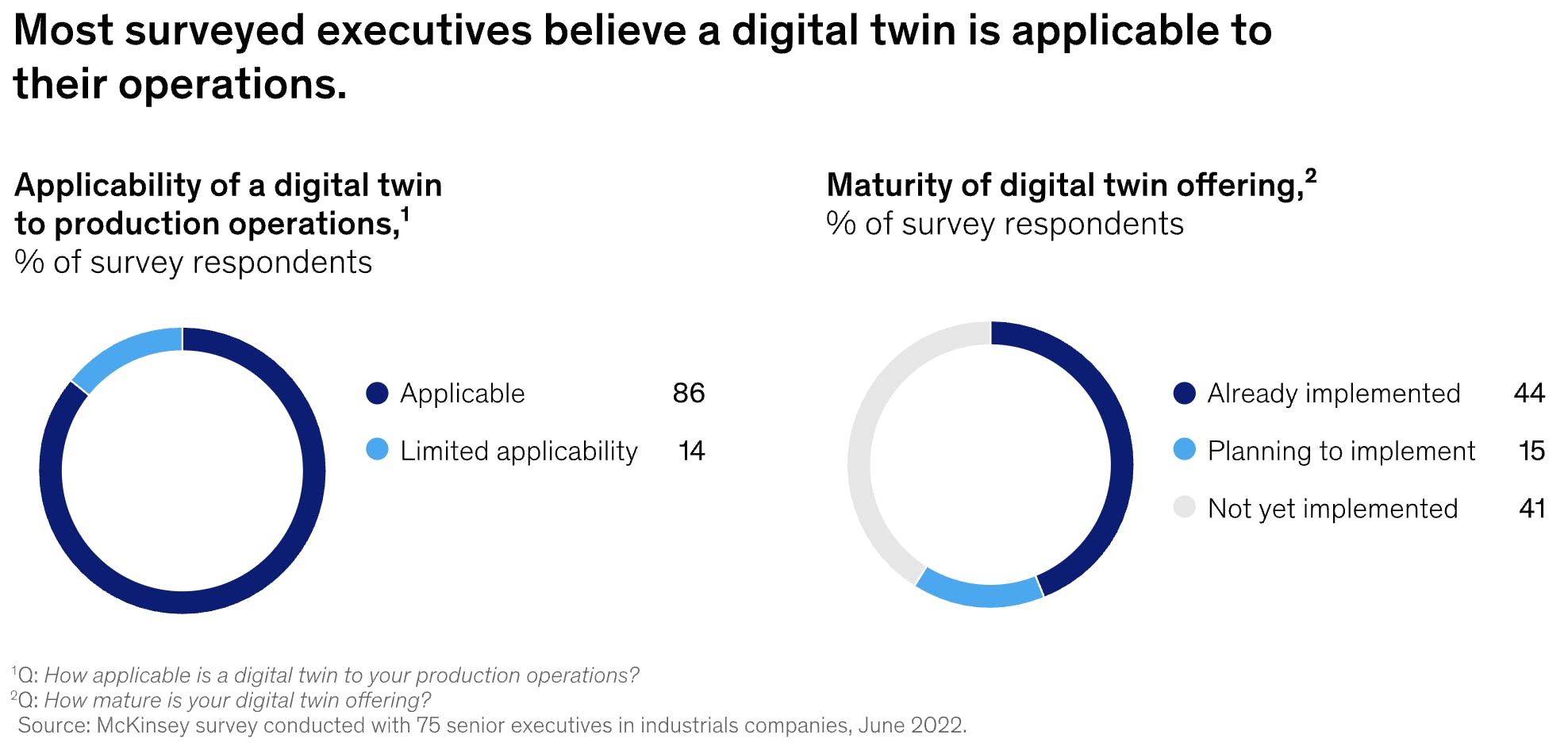

Additionally, World Labs’ technology appeals to industrial and enterprise customers (robotics, manufacturing, architecture, and automotive) that often need accurate 3D worlds for simulation, training, or digital twin purposes. While these teams currently face expensive manual scene build-outs, research suggests digital twins can cut development time by up to 50%, and 86% of manufacturing executives expect the technology to be used in their operations in the future.

Source: McKinsey

Market Size

The 3D generation market includes gaming, VFX, simulation, and education, along with other industries adopting AI-assisted creation tools to replace manual 3D production workflows. The broader spatial computing market was valued at $102.5 billion in 2022 and is projected to grow at a 20.4% annual rate, reaching $469.8 billion by 2030.
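The growth figures above can be sanity-checked with a short compounding calculation. The sketch below assumes the 20.4% rate compounds annually from the 2022 base, which may not match the source's forecast window. The function names are illustrative.

```python
def project(value, rate, years):
    """Compound a starting value forward at a fixed annual growth rate."""
    return value * (1.0 + rate) ** years

def implied_cagr(start, end, years):
    """Annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0

# Figures quoted above, in billions of US dollars.
start_2022, end_2030, stated_rate = 102.5, 469.8, 0.204
years = 2030 - 2022

projected_2030 = project(start_2022, stated_rate, years)
rate_needed = implied_cagr(start_2022, end_2030, years)

print(f"2030 value at 20.4% from the 2022 base: ${projected_2030:.1f}B")
print(f"Rate implied by the two endpoints: {rate_needed:.1%}")
```

Under this assumption, compounding from 2022 lands somewhat below $469.8 billion, and the endpoints imply a rate slightly above 20.4%. The gap most likely reflects a forecast window that starts after 2022, so the quoted numbers are best read as approximate.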

Gaming and media remain the largest demand drivers in spatial computing. The global gaming market is expected to reach $656.2 billion by 2033, while the animation industry is estimated to surpass $895.7 billion by 2034. These markets depend on scalable, high-fidelity 3D content pipelines and are increasingly turning to AI generation to meet demand for higher output without increasing production time or labor.

Enterprise and education applications are scaling in parallel. The simulation and computer-aided engineering software market is projected to reach $19.2 billion by 2030, growing at 8.5% annually, as enterprises shift to virtual testing, digital twins, and physics‑accurate simulation. At the same time, the education technology market, valued at $163.5 billion in 2024, is expected to expand rapidly as the AR/VR-in-education segment grows to $14.2 billion by 2028, driven by increasing K-12 and career-technical-education budgets.

McKinsey identifies spatial computing as a key trend of 2025, describing it as the ability to “use the perceived 3D environment to enable new forms of human–computer interaction.” Forbes further highlights that venture capital investors have put approximately $4 billion into spatial computing, anticipating major enterprise adoption by 2030. Within this broader shift, the AI-generated 3D asset market is projected to reach $9.2 billion by 2032 as enterprises integrate AI-powered 3D generation tools.

Competition

Competitive Landscape

The spatial computing and AI-driven 3D generation market includes major incumbents and a growing wave of specialized startups. Established companies like Nvidia, Google, and Meta dominate infrastructure through GPUs, cloud platforms, and simulation engines such as Omniverse, integrating tightly with their developer ecosystems. Their tools prioritize scalability and enterprise integration over domain-specific optimization.

Startups, by contrast, concentrate on narrow, high-value use cases, such as single-image 3D generation, robotic simulation, and synthetic-data pipelines. Players like Luma AI and Scenario are making 3D creation faster and cheaper, reducing the need for manual asset production.

World Labs targets the gap between these approaches by building LWMs capable of generating full, consistent 3D environments from minimal inputs. Rather than creating isolated objects, its models emphasize scene-level coherence and physical plausibility, enabling use cases in robotics, AR/VR, and simulation.

Competitors

3D Creation and Content Platforms

Luma AI: Founded in 2021, Luma AI develops multimodal AI systems focused on video and 3D understanding, with its flagship product Dream Machine enabling users to generate high-quality video clips from text or images. Beyond Dream Machine, Luma’s Genie platform enables text-to-3D model generation, and its interactive scenes feature allows users to create explorable 3D environments from text prompts or images, leveraging Neural Radiance Fields (NeRF) technology. Luma AI has over 25 million users as of November 2024, and the company has raised $173 million across five funding rounds, with its latest Series C round in December 2024 raising $90 million, led by Amazon and AMD, at a post-money valuation of $400 million. As of August 2025, Luma AI is also seeking to raise over $1.1 billion at a valuation of $3.2 billion. Luma AI focuses on visual realism, bridging text-to-video systems and embodied world models like DeepMind's Genie.

Polycam: Founded in 2020, Polycam is a platform for capturing 3D scans of environments via LiDAR or photogrammetry on mobile devices, and exporting captures such as meshes or point clouds for downstream use. It supports exporting its data into formats compatible with NeRF tooling and is also exploring approaches like Gaussian splatting for rendering formats. In February 2024, Polycam received $18 million in Series A funding from Left Lane Capital, Adobe Ventures, Hurley, and others, bringing its total funding to $22 million. Polycam operates at the capture layer, primarily serving users who need accurate digital replicas of real-world spaces for applications like real estate, architecture, and preservation.

Kaedim: Founded in 2020, Kaedim offers a platform that converts 2D images or sketches into 3D models using AI-driven algorithms. It integrates with tools like Unity, Unreal, and Blender to streamline game development pipelines. Kaedim closed its $15 million Series A funding, led by a16z Games, in March 2024 at a valuation of $50 million. With more than 200 game studio customers, Kaedim focuses on accelerating asset creation for game environments, and its tight integration with industry-standard design tools speeds up 2D-to-3D conversion.

Scenario: Founded in 2021, Scenario offers a generative AI platform that enables game developers and digital artists to train custom, style-consistent models and rapidly create large volumes of 2D and 3D art assets. The company raised $6 million in a seed round in January 2023, led by Play Ventures, bringing its total funding to $11.5 million. While Scenario’s core focus remains on asset-level generation rather than full environment creation, its workflow-centric approach appeals to game developers who need large volumes of style-consistent assets.

Simulation and Spatial Intelligence

Physna: Physna uses geometric deep learning to index and analyze 3D models for manufacturing and engineering applications. By creating a complete 3D model search engine and anomaly detection system, Physna helps enterprises improve product development and supply chain accuracy. Since its founding in 2015, it has raised $85 million from investors like Sequoia Capital, Tiger Global, and Drive Capital, with its most recent $56 million Series B round in July 2021 led by Tiger Global, Sequoia Capital, and Google Ventures. Physna focuses on search and comparison of 3D structures, giving it a competitive edge in enterprise adoption where accuracy and traceability of CAD models are critical.

Blackshark.ai: Founded in 2020, Blackshark.ai builds photorealistic 3D digital twins of the Earth by applying AI to satellite and aerial imagery. These high-fidelity digital twins serve industries such as gaming, mapping, and autonomous navigation for enterprise clients like Microsoft. The company raised $15 million in a Series A extension in November 2023, bringing its total funding to $35 million with investors like Point72 Ventures and M12 (Microsoft's venture fund). Blackshark.ai focuses on reconstructing the Earth from satellite imagery for geospatial intelligence, giving it a defensible edge through proprietary datasets and partnerships with mapping providers.

Bifrost: Founded in 2014, Bifrost provides AI-powered platforms to generate synthetic 3D datasets that accelerate AI and robotics training. Its technology enables the scalable creation of diverse and annotated 3D environments essential for simulating complex real-world scenarios, reducing reliance on costly manual dataset generation. It raised $8 million in October 2024 in a Series A led by Carbide Ventures and Airbus Ventures, bringing its total funding to approximately $13.7 million. Bifrost focuses on robotics-specific data pipelines, appealing to enterprises building perception AI systems.

Platform Incumbents

NVIDIA: Founded in 1993, NVIDIA is a public company with a market cap of $4.4 trillion as of December 2025, dominating AI compute infrastructure with an estimated 92% GPU market share as of Q1 2025. Beyond its GPU hardware, NVIDIA’s Instant NeRF is an inverse rendering technology that reconstructs 3D geometry and appearance from sets of 2D images, enabling rapid scene generation for artists and VR/AR applications. Furthermore, the company’s Omniverse ecosystem extends this capability into digital twins and physics-based simulation, built on the open Universal Scene Description (OpenUSD) standard originally developed by Pixar. NVIDIA leverages strong infrastructure, GPU hardware, and simulation stack synergies. NVIDIA and World Labs are largely complementary, since NVIDIA builds the hardware and infrastructure while World Labs focuses on the foundational models, but they could become competitors if NVIDIA moves further into generative 3D modeling itself.

Google DeepMind: Google is a public company with a market cap of $3.9 trillion as of December 2025. Founded in 2010 and acquired by Google in 2014, DeepMind operates under Alphabet, which has committed $85 billion in AI investments for 2025. DeepMind’s Genie, led by Tim Brooks, a former co-lead for OpenAI’s Sora project, is a foundation world model capable of generating an endless variety of action-controllable, playable 3D environments for training and evaluating embodied agents. Genie 3, announced in August 2025, represents a significant advancement with real-time generation at 720p and 24 frames per second, enabling interactive exploration of photorealistic or fictional worlds from text or image prompts.

Meta AI: Founded in 2004 (as Facebook), Meta is a publicly traded company with a market cap of approximately $1.6 trillion as of December 2025. Meta’s AI division invests heavily in large language, vision, and spatial models, powering applications across VR/AR and AI ecosystems. Meta's recent 3D generation product, Meta 3D Gen, allows users to create virtual worlds and 3D assets from text prompts, generating high-quality meshes with physically-based rendering materials in under a minute. Meta is expected to invest up to $65 billion to power AI goals in 2025, including a recent $14.3 billion stake in Scale AI. While both Meta and World Labs are building generative 3D world models, Meta’s approach is tightly integrated with its metaverse strategy and Reality Labs hardware ecosystem.

Adobe Firefly: Founded in 1982, Adobe is a public company with a market cap of $138.6 billion as of December 2025, dominating creative software with products like Photoshop, Illustrator, and Premiere Pro. Adobe’s generative AI suite, Adobe Firefly, launched in March 2023, is primarily known for text-to-image and text-to-video capabilities. Though not a full 3D world model, Firefly is increasingly embedding “scene-to-image” or 3D-referenced generation workflows, as users can build simple 3D scenes with shapes and feed them as prompts to generate imagery. Adobe also integrated Firefly into its Substance 3D apps for Text-to-Texture and generative background features. Because Firefly is already embedded in a widely used creative toolset, its incremental push into 3D pipelines acts as a competitive lever.

Business Model

As of December 2025, World Labs’s core platform Marble remains free to use for world navigation and limited-access beta creation. Its current focus is on building a developer and creator ecosystem rather than monetizing early users.

The company remains pre-revenue as of December 2025. However, the company’s collaboration with EON Reality, announced in December 2024, which integrates World Labs’ spatial generation capabilities into educational and enterprise training tools, hints at future B2B and platform-licensing opportunities.

Traction

World Labs' Marble beta and its growing developer community demonstrate early traction. Nearly 3,000 beta users participate in the official Discord server as of December 2025, using Marble to generate, export, and share custom 3D environments. Community-built tools further showcase developer engagement, with users releasing open-source projects such as cropping extensions and GPT-driven world-building companions.

In 2024, NVentures, NVIDIA’s venture arm, participated in World Labs’s Series A extension. The investment signals a closer relationship with NVIDIA’s AI ecosystem, and NVIDIA’s Omniverse platform provides a spatial computing ecosystem closely aligned with World Labs’s focus on large-scale virtual world generation. The company has also selected Google Cloud as its primary compute provider, allowing it to utilize existing enterprise relationships and marketplace distribution channels.

Valuation

As of December 2025, World Labs has raised a total of $230 million across two funding rounds. The most recent round was a $130 million Series A extension in September 2024 as the company emerged out of stealth. The round was led by Andreessen Horowitz, New Enterprise Associates (NEA), and Radical Ventures. Andreessen Horowitz general partner Martin Casado also assists with product development and research within World Labs, fostering a direct operational partnership.

Other notable investors include Marc Benioff (Salesforce CEO), Jeff Dean (Google), Geoffrey Hinton, Reid Hoffman (LinkedIn), and Eric Schmidt (former Google CEO), in addition to corporate investors like Databricks Ventures, NVentures, and Adobe Ventures.

World Labs’ September 2024 round valued it at over $1 billion, a 5x increase from its initial valuation of $200 million in April 2024.

Key Opportunities

Integration into Core Industry Workflows

Unity and Unreal Engine collectively control 51% of the game engine market as of 2024, serving as the dominant platforms for game development and increasingly for VFX, simulation, and spatial computing. For World Labs, native integration with incumbents like these is critical to adoption, as it reduces switching costs and enables immediate deployment within existing creative and simulation workflows.

By embedding directly into Unity, Unreal, and leading digital content creation platforms, World Labs establishes itself as infrastructure inside the production stack rather than a standalone tool. Industry and expert reports indicate that gaming and VFX leaders are ready to adopt AI-driven generation once solutions deliver real-time performance, predictable SLAs, and transparent costs. Delivering LWMs that meet these needs would allow World Labs to integrate into production ecosystems, accelerating adoption and establishing itself as the default layer for AI-driven 3D creation.

Enabling Sim-to-Real Transfer & Robotics Infrastructure

A persistent challenge in robotics is the “sim-to-real gap,” which is the difficulty of transferring control policies trained in virtual environments to physical systems. While 85% of robotics developers use simulation for testing, most still face major performance drop-offs when deploying models on hardware due to sensor noise, domain shifts, and modeling inaccuracies.

If World Labs’ approach can generate physically accurate training environments at scale, it could address core sim-to-real challenges around visual fidelity and physics modeling, areas where traditional simulators fall short. World Labs could then serve robotics companies’ need for synthetic training environments and digital twins.

Key Risks

Data Access Challenges

High-fidelity 3D physics-grounded data access represents a critical competitive moat in the world model space. Unlike 2D content, for which billions of images exist online, high-quality 3D datasets remain extremely limited and expensive to acquire.

The 3D data that does exist is fragmented across domains like simulation engines, robotics labs, and VFX studios. At the same time, organizations are increasingly protective of their data assets (both 3D data and text assets), with companies like Reddit and X shutting down API access to preserve competitive advantages.

World Labs faces a structural disadvantage in integrating this data compared to incumbents like NVIDIA, Google, or OpenAI, which already operate with large-scale compute and simulation infrastructure. NVIDIA is already using neural reconstruction and Gaussian-based rendering to convert sensor data from real-world drives into high-fidelity simulations for autonomous vehicle development. If competitors generate high-quality synthetic 3D data at scale, any real-world data advantage World Labs holds diminishes significantly. Without exclusive data partnerships, which are often costly, or the ability to build its own large-scale data engine, World Labs risks having its architectures replicated by larger competitors with superior access to compute and content.

Adoption Cycle Challenges

World Labs operates in a space where commercialization timelines vary widely across verticals. In robotics and industrial simulation, adoption cycles can run 5–7 years or longer, while investors may expect faster returns. If these slower-moving sectors do not generate meaningful revenue in the early years, the company may need to rely more heavily on creative and gaming markets to sustain growth. However, those markets typically offer lower margins, fragmented buyer behavior, and less predictable spending patterns.

In addition, the creative industry has previously shown resistance to AI tools that threaten traditional workflows, as seen with artist backlash against image generators. This skepticism could delay adoption or constrain World Labs to niche, experimental use cases rather than mainstream production pipelines. Furthermore, overreliance on such markets risks framing World Labs as a “content-generation platform” rather than a foundational AI infrastructure company, which could limit its long-term strategic value. Balancing short-term traction in creative tools with long-horizon investments in robotics, simulation, and digital twin applications will be essential to sustaining that value.

Summary

World Labs builds Large World Models (LWMs), AI systems that can generate and render interactive 3D environments in real time from text or image inputs. Its core product, Marble, integrates RTFM (Real-Time Frame Model) to enable persistent, photorealistic worlds that can be explored directly in the browser. The company aims to streamline 3D content creation and simulation workflows for industries such as AR/VR, content creation, and digital twins.