Thesis

The gaming industry has long faced a tradeoff between creativity and efficiency. Development is challenging, requiring significant time and resources due to engine changes, understaffing, and complex production cycles. Historically, innovation has focused on improving graphics, processing speeds, and multiplayer functionality, while budgets have ballooned to $100 million per game, favoring large-scale, or AAA, studios. The emergence of off-the-shelf engines like Unity and Unreal modularized parts of the development stack, enabling indie developers but leaving core content creation largely unchanged.

As with many technologies, from GPUs to virtual reality, gaming was an early adopter of AI. The technology has been integrated into games for decades, traditionally to enhance enemy behavior, procedural generation, and adaptive gameplay. More recently, AI has been applied to game development itself, as seen in Blackshark.ai’s partnership with Microsoft Flight Simulator to generate a 3D model of Earth. The rise of generative AI has introduced new possibilities, from automating asset creation to improving matchmaking and social interactions. These advancements could lower development costs, reduce production timelines, and introduce more dynamic, player-driven experiences.

Inworld AI is developing an AI-powered Character Engine to enhance non-player character (NPC) interactions, distinguishing itself from traditional game development tools focused on rendering and physics. While Unreal and Unity optimize environments, Inworld applies AI to NPC logic and social interactions. In 2024, Kylan Gibbs, CEO and co-founder of Inworld AI, explained:

“With the kinds of AI agents that Inworld enables, audiences can now step beyond the script and explore uncharted experiences. They’re set to transform stories from scripted narratives to worlds that the audience can co-create in video games and other interactive experiences.”

Founding Story

Inworld AI was founded in 2021 by Ilya Gelfenbeyn, Kylan Gibbs, and Michael Ermolenko.

Gelfenbeyn, who serves as Executive Chairman as of June 2025 and was CEO until January 2024, has a background in AI-driven conversational experiences. He founded Dialogflow, a developer platform for conversational AI that Google acquired in 2016. Prior to this, he launched Assistant.ai, one of the highest-rated independent voice assistants with over 40 million users. Gibbs, the company’s CEO, was a PM at Google DeepMind, worked as a consultant at Bain, and co-founded FlowX, which was later acquired. Ermolenko, now CTO, previously led AI efforts at API.AI, where he and Gelfenbeyn scaled AI-driven experiences to millions of developers.

Gibbs met Ermolenko while working on Google Cloud’s conversational AI and found alignment in their views on AI’s role in virtual worlds. As Ermolenko was preparing to leave Google, he, Gibbs, and Gelfenbeyn saw emerging trends in generative AI and immersive spaces, recognizing that users were spending increasing time in virtual environments that lacked social depth and interactivity. Their goal was to make digital worlds more engaging by integrating AI-driven NPCs that could enhance player agency and interaction.

In April 2022, Academy Award-winning designer John Gaeta joined Inworld AI as Chief Creative Officer, bringing experience from his work on the Matrix trilogy, where he pioneered innovations like "Bullet Time". Gaeta remained in this role until April 2024 before stepping into a Strategic Advisor role, which he still held as of June 2025.

Product

Inworld Character Engine

Inworld AI’s Character Engine is the company’s core development platform, designed to create and deploy real-time generative AI-powered NPCs. Unlike traditional NPC development tools, which rely on scripted interactions, the Character Engine enables autonomous, dynamic behavior by integrating multiple AI models. It provides game developers with a modular framework to build AI-driven characters that exhibit realistic personalities, emotions, and contextual understanding.

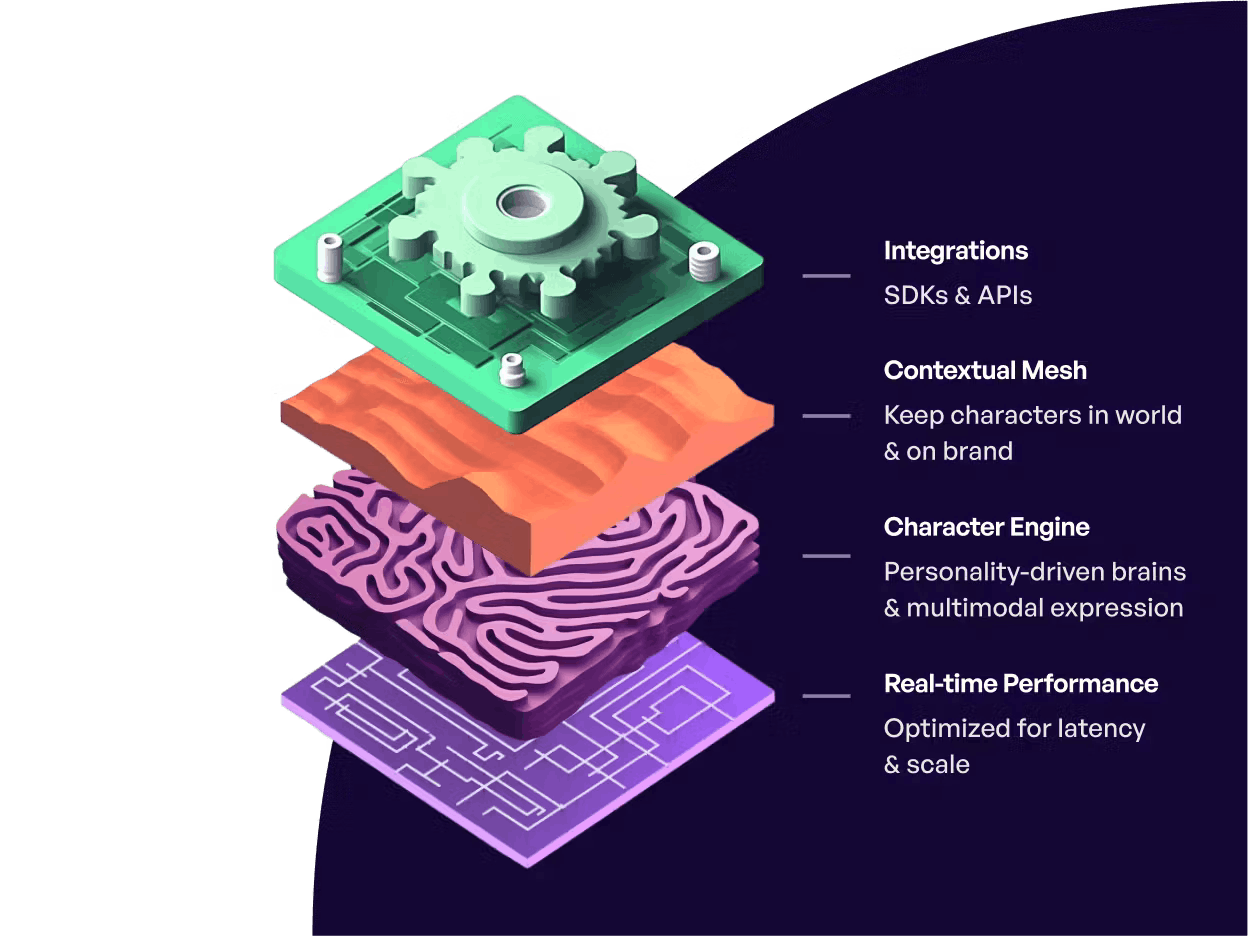

Source: Inworld AI

The system is structured into three core components. The Character Brain orchestrates AI models that govern personality, emotions, and decision-making. The Contextual Mesh supplies custom knowledge bases and safety constraints to ensure NPC interactions remain relevant and controlled. The Real-Time AI layer enables low-latency interactions, optimizing responsiveness for fast-paced gaming environments.

The engine supports multimodal interactions across text, speech, and gestures, leveraging multiple machine-learning models rather than relying solely on large language models. This allows NPCs to exhibit natural intonation, facial expressions, and adaptive responses. Its advanced features include customizable personalities, an emotions engine, autonomous goal-setting, long-term memory, and natural language processing. Designed for scalability, the system supports high concurrent player interactions without additional configuration, while its performance optimization ensures minimal latency for seamless real-time experiences.

Inworld Studio

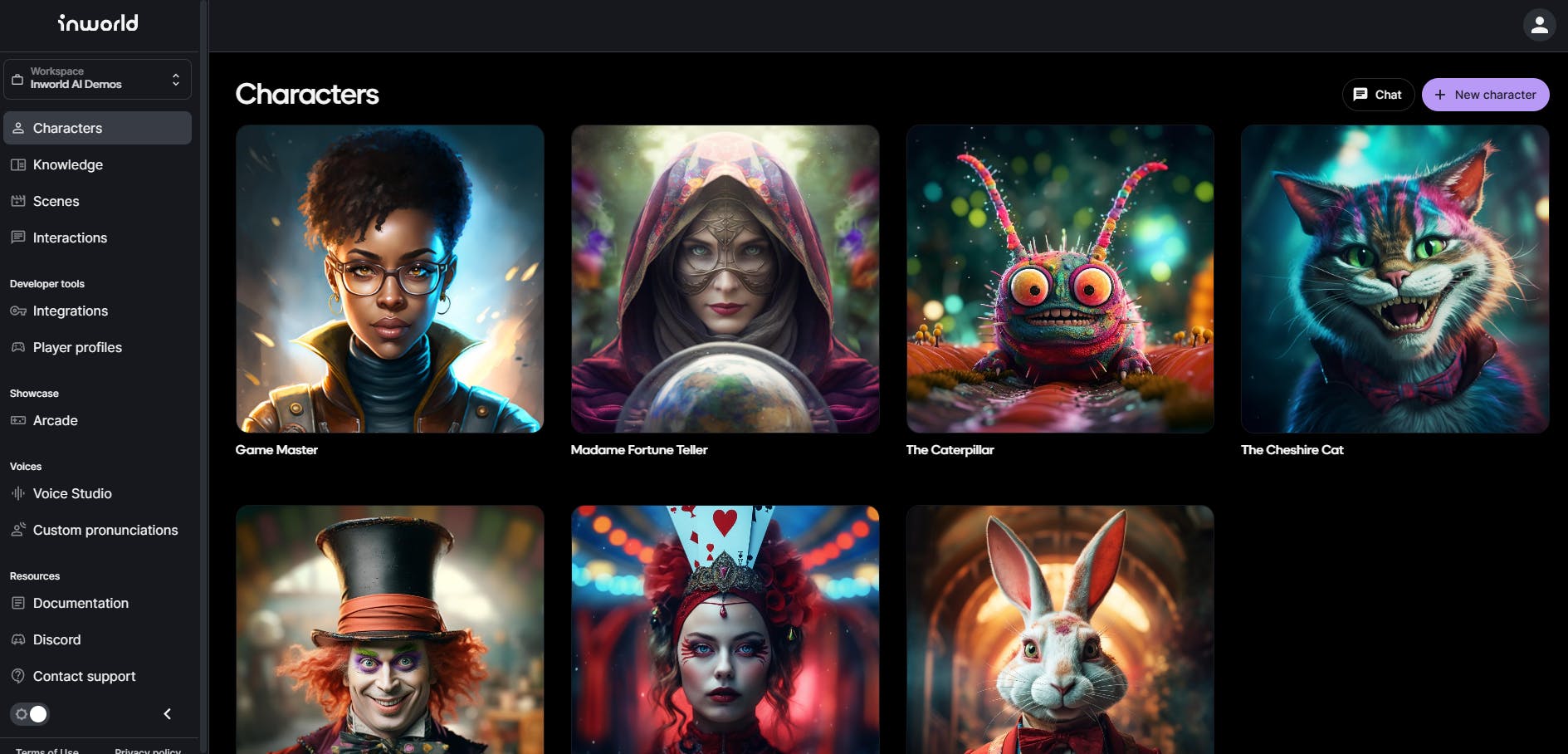

Source: Inworld AI

Inworld Studio is a web-based platform for creating and managing AI-driven characters, offering no-code tools for character design and behavior customization. Users can define characters through natural language descriptions, adjusting personality traits, emotions, and dialogue styles. The platform includes scene-building features, enabling developers to create immersive contexts where AI characters interact dynamically. Integrated testing tools allow iterative refinement of NPC behavior, while multi-agent chat functionality supports character-to-character interactions, with or without player input.

The platform provides a workspace with pre-configured AI characters for reference, along with a search function to quickly navigate across projects. A guided onboarding tour introduces new users to key features.

For developers, Inworld Studio offers an API for programmatic character creation and content moderation. The Voice Studio allows users to customize AI-generated voices, including support for multiple languages such as Chinese, Japanese, and Korean. Additional tools, such as the YAML Goals Visualizer, enable structured goal-setting and behavior mapping for AI characters.

Inworld Primitives

Inworld Primitives serve as foundational AI components that developers can use to integrate intelligent gameplay mechanics. These building blocks span input processing, AI-driven decision-making, and content generation, enabling games to feature dynamic, personalized interactions without requiring extensive manual scripting.

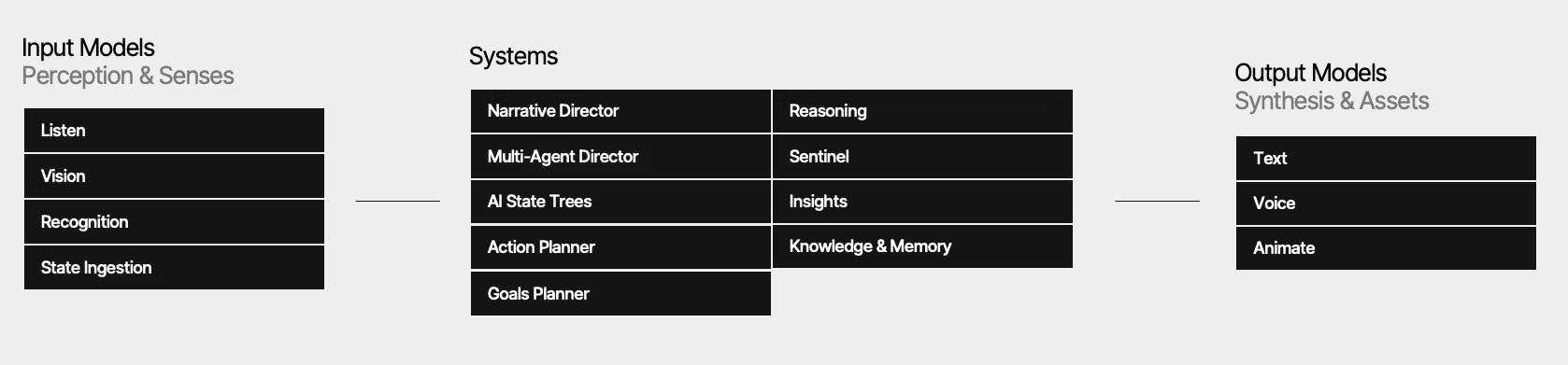

Source: Inworld AI

Input Models

The input layer consists of AI models designed to analyze player behavior and game states, allowing for adaptive gameplay. The Listen model processes verbal inputs through speech-to-text and automatic speech recognition, while Vision enables AI-powered analysis of images and videos to extract relevant data. State ingestion converts dynamic game environments into structured data, providing AI agents with real-time context. Additionally, the Recognition model detects player intent, entities, emotions, and relationships, ensuring that NPC responses feel natural and contextually relevant.

Systems

The systems layer orchestrates AI behaviors, allowing NPCs to act autonomously and interact with both players and other AI agents. The Multi-Agent Director enables multiple AI characters to hold conversations amongst themselves or directly with the player, creating emergent social interactions. The Narrative Director generates real-time narration based on in-game events, providing dynamic storytelling elements. AI agents can also plan and execute their own goals and actions, responding to in-game objects and developing strategies through AI-driven action sequences. The State Tree model adjusts character behaviors based on evolving player interactions, allowing for more nuanced decision-making and dialogue.

To maintain safety and protect intellectual property, the Sentinel system enforces AI guardrails, ensuring NPC interactions remain appropriate, even in violent games, and especially in youth-targeted games. AI-driven Memory allows NPCs to retain knowledge of past interactions, preserving continuity across gameplay sessions, while Knowledge bases control the specific information available to characters, tailoring their responses to in-game lore and world-building constraints.

Output Models

The output layer enhances content creation by streamlining game development processes with AI-generated assets. The Voice model, available through Inworld’s Voice Studio, enables developers to generate high-quality AI voices for NPCs, supporting applications in gaming, audiobooks, and interactive media. The Text model leverages large language models to assist in scripting dialogue, creating narratives, and summarizing in-game events. For character realism, the Animation model generates facial expressions and body movements that align with NPC dialogue and emotional states. Finally, the Shapes model transforms text prompts into 2D or 3D assets, allowing developers to generate in-game objects and environments programmatically.

Source: Inworld AI

By modularizing AI functionality into input, system, and output models, Inworld Primitives provides developers with a flexible and scalable foundation for integrating AI-driven experiences into games.
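The three-layer split described above can be pictured as a simple chain of functions. The sketch below is illustrative only: every class and function name is hypothetical, none of it is taken from Inworld's SDK, and keyword matching stands in for the actual models.

```python
from dataclasses import dataclass

# All names here are hypothetical stand-ins, not Inworld APIs.


@dataclass
class Perception:
    """Structured result of the input layer."""
    transcript: str
    intent: str


@dataclass
class Decision:
    """Structured result of the systems layer."""
    reply_text: str
    emotion: str


def recognize(transcript: str) -> Perception:
    """Toy input model: keyword-based intent detection."""
    intent = "ask_quest" if "quest" in transcript.lower() else "small_talk"
    return Perception(transcript=transcript, intent=intent)


def decide(perception: Perception, memory: list[str]) -> Decision:
    """Toy systems layer: record the turn in memory, then choose a reply."""
    memory.append(perception.transcript)
    if perception.intent == "ask_quest":
        return Decision("The old mill needs clearing out.", "eager")
    return Decision("Fine weather today.", "neutral")


def render(decision: Decision) -> dict[str, str]:
    """Toy output layer: package reply text with an animation cue."""
    return {"text": decision.reply_text, "animation": f"face_{decision.emotion}"}


def run_turn(transcript: str, memory: list[str]) -> dict[str, str]:
    """Chain input -> systems -> output for one player utterance."""
    return render(decide(recognize(transcript), memory))
```

Because each stage only depends on the structured value handed to it, any one stage could in principle be swapped for a different model without touching the others, which is the flexibility the modular design is meant to provide.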

Voice AI

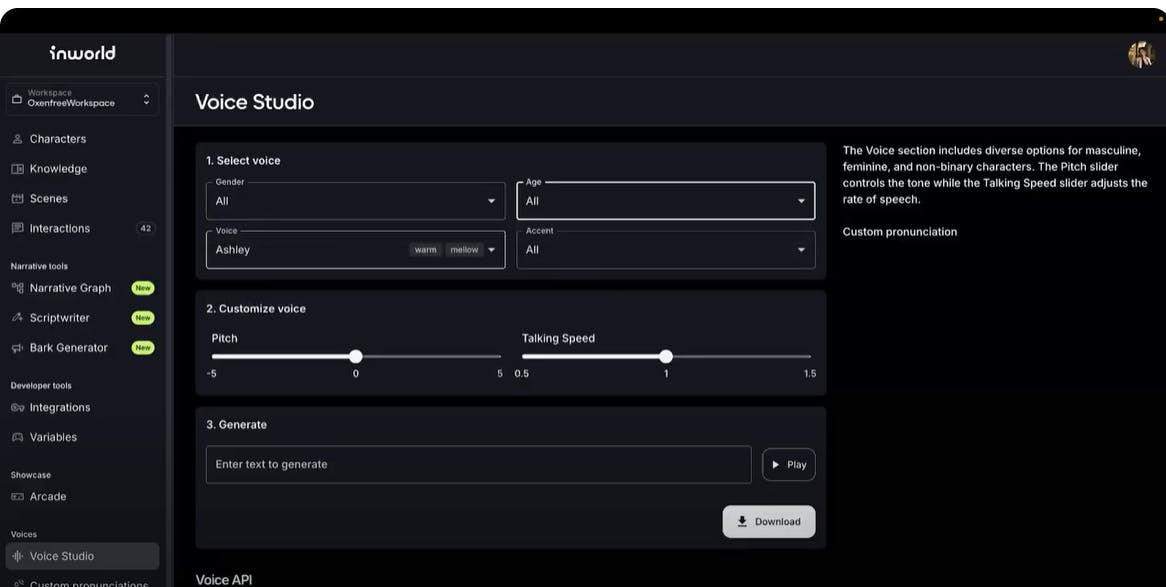

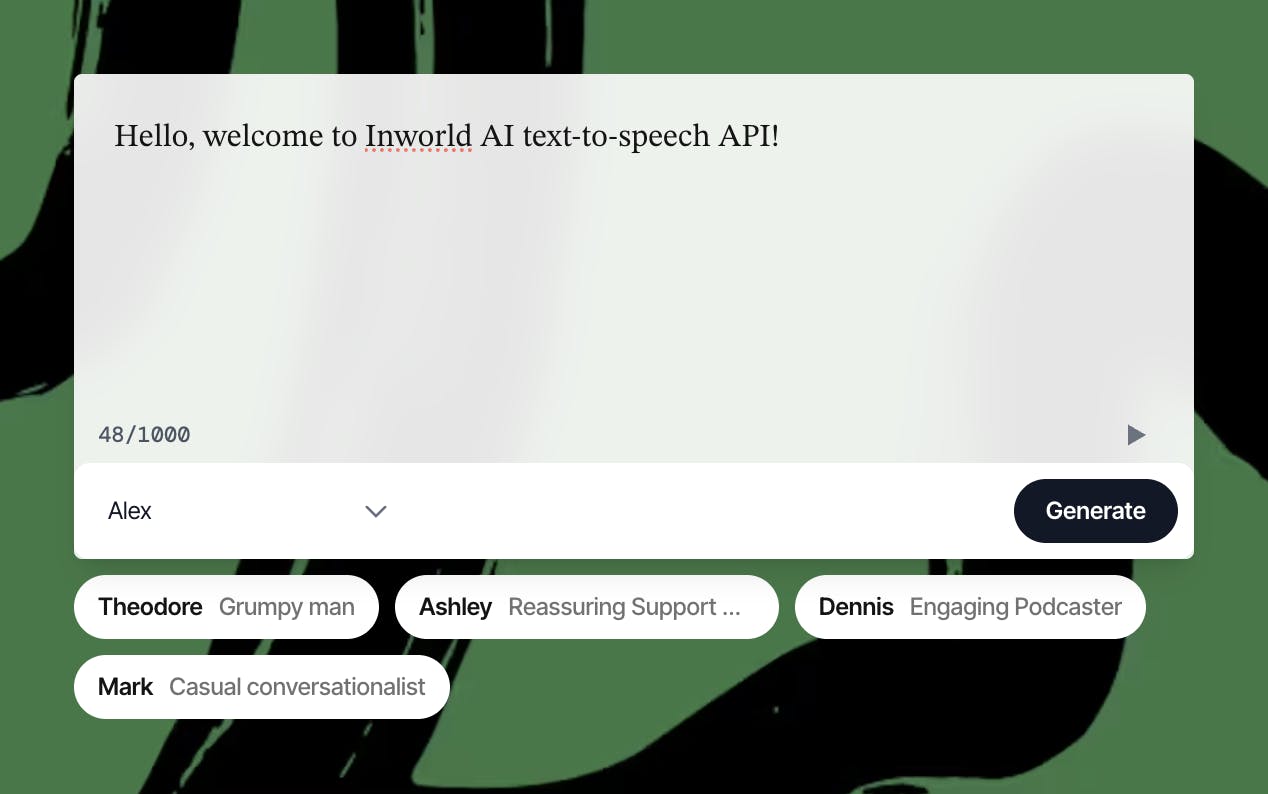

Source: Inworld AI

Inworld’s Voice AI is an advanced text-to-speech (TTS) technology designed to generate expressive, natural-sounding voices. Built on machine learning models, it offers speech synthesis with a range of customizable attributes. Developers can adjust pitch, talking speed, and emotional range, tailoring voices to fit specific character needs. The system supports multiple languages, including English, Mandarin Chinese, Japanese, and Korean. Designed for real-time applications, it operates with ultra-low latency, delivering a 250ms response time for up to six seconds of audio. To ensure ethical standards, the AI is trained on Creative Commons-licensed datasets and professional voice actor recordings.

Potential use cases for the technology include video games, content creation, digital marketing, customer service, and e-learning. It can function as a standalone TTS API or integrate directly with the Inworld Engine. Developers can implement it via REST or gRPC APIs, supporting both basic and JWT authentication. The product’s architecture is meant to be scalable, allowing it to handle high-volume requests and large-scale deployments.
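As a rough illustration of the two authentication modes, the sketch below builds the request headers a client would typically send. It assumes the conventional HTTP schemes (`Basic` with a base64-encoded `key:secret` pair, and `Bearer` for a JWT); the text does not specify Inworld's exact header format, and the function names and credentials are hypothetical.

```python
import base64


def basic_auth_header(key: str, secret: str) -> dict[str, str]:
    """Build a standard HTTP Basic header from an API key and secret.

    Assumption: the service accepts the conventional 'key:secret' pair.
    """
    token = base64.b64encode(f"{key}:{secret}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def bearer_auth_header(jwt: str) -> dict[str, str]:
    """Build a standard Bearer header carrying a JWT.

    Assumption: JWT authentication uses the conventional Bearer scheme.
    """
    return {"Authorization": f"Bearer {jwt}"}


# Placeholder credentials for illustration only.
print(basic_auth_header("user", "pass"))
```

Basic authentication keeps the long-lived secret on every request, so it generally suits server-side calls, while a short-lived JWT is the safer choice when requests originate from a game client.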

For further customization, Inworld provides the Voice Studio tool, enabling developers to select and refine voice attributes. Users can fine-tune elements such as pitch and speed, ensuring voices match character personalities and emotional expressions. Additionally, the platform offers professional voice cloning services, allowing brands to create unique, proprietary voice identities.

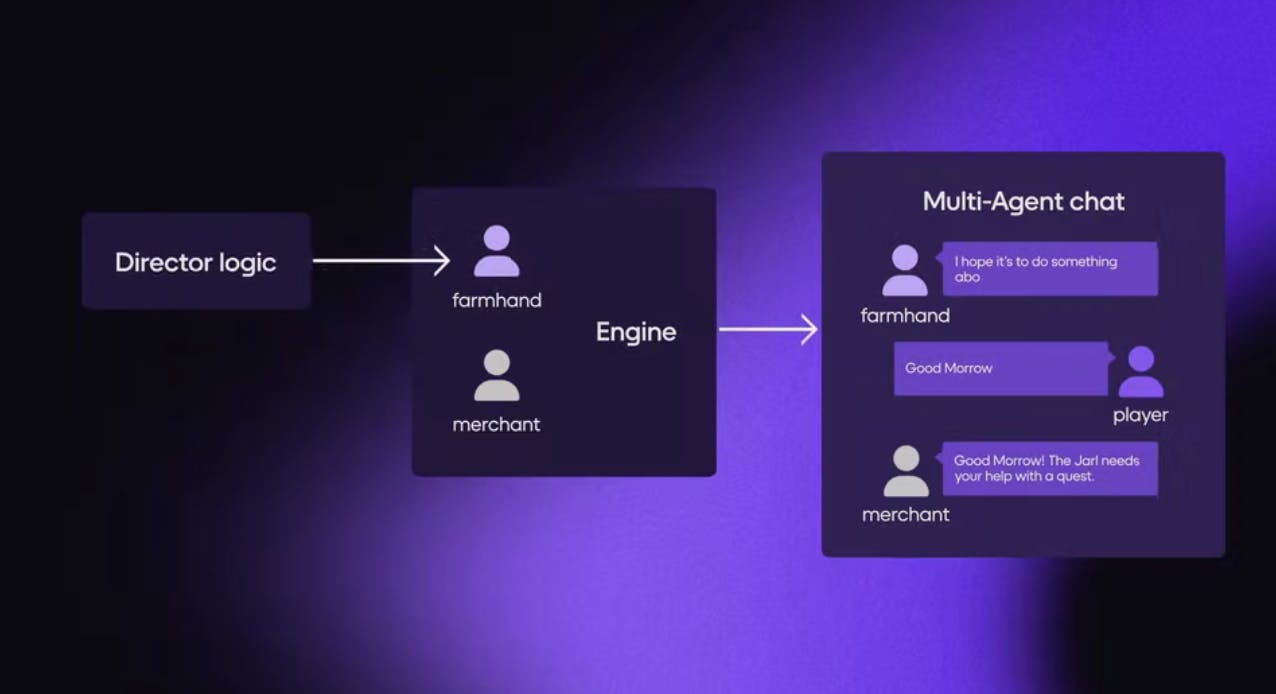

Multi-Agent Interaction

Inworld AI’s Multi-Agent feature enables AI-driven group interactions, allowing multiple NPCs to converse with each other and the player in real time. Unlike traditional scripted dialogues, this system dynamically orchestrates interactions between two to five AI characters, creating more natural and emergent conversations. NPCs can interact autonomously, providing a greater sense of immersion, while players can eavesdrop on these conversations to gain insights or influence ongoing discussions. The system also facilitates group conversations, where both NPCs and the player contribute to dialogues.

At the core of this functionality is the Director Layer, which acts as an invisible orchestrator for multi-agent dialogues. It determines the conversational flow, deciding which character should speak next based on context, direct mentions, topic expertise, and recent participation. This ensures that conversations remain structured, relevant, and responsive to player actions. By prioritizing participants dynamically, the system enhances coherence and interaction quality, making NPC dialogues feel more natural.

Source: Inworld AI
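The speaker-selection logic attributed to the Director Layer can be sketched as a scoring function over the four signals named above. This is a minimal sketch, not Inworld's algorithm: the weights, the `Candidate` type, and the rule that the previous speaker sits out a turn are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    """Hypothetical view of one NPC in a group conversation."""
    name: str
    expertise: set[str]
    turns_since_spoke: int


def pick_next_speaker(
    candidates: list[Candidate],
    message: str,
    topic: str,
    last_speaker: Optional[str] = None,
) -> str:
    """Choose who speaks next using illustrative, made-up weights."""
    if not 2 <= len(candidates) <= 5:
        raise ValueError("group conversations involve two to five characters")

    def score(c: Candidate) -> float:
        s = 0.0
        if c.name.lower() in message.lower():
            s += 3.0  # direct mention (naive substring match)
        if topic in c.expertise:
            s += 2.0  # topic expertise
        s += 0.5 * min(c.turns_since_spoke, 3)  # favor quieter characters
        return s

    # Context rule (assumed): the previous speaker does not speak twice in a row.
    eligible = [c for c in candidates if c.name != last_speaker]
    return max(eligible, key=score).name
```

With a cast of Ava (alchemy expert who just spoke), Bram (combat expert), and Cole (no expertise, long silent), a message addressed to Bram selects Bram, while an unaddressed alchemy question selects Ava unless she was the last speaker.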

The feature has broad applications across various genres, including RPGs, simulation games, and adventure titles. It enables emergent storytelling, dynamic strategy discussions, and more realistic combat coordination among NPCs. Developers can integrate this functionality within Inworld Studio, Unity, Unreal Engine, and WebSDK, leveraging client-side triggers to control multi-agent interactions. As of June 2025, future development plans include support for multi-player conversations, enhanced targeted dialogue selection, and potential adoption as the default system for AI-driven NPC interactions.

Inworld Core

Inworld Core is a custom AI infrastructure solution designed for game developers and studios looking to future-proof their AI capabilities. It provides adaptive serving infrastructure, allowing developers to choose between dedicated servers and on-premises deployments, ensuring control over AI operations. Dedicated servers offer high-performance GPUs (A100s or H100s) for AI inference with a managed setup, while on-device integrations enable selective local model execution to optimize costs. The system also supports custom voice pipelines, allowing developers to train proprietary speech synthesis models. With auto-scaling infrastructure, Inworld Core can scale from small playtests to millions of users in production environments. Its multi-platform serving ensures AI models function across different gaming ecosystems.

The infrastructure is built on NVIDIA technology, leveraging A100 Tensor Core GPUs and the NVIDIA Triton Inference Server for AI training and deployment. Non-generative machine learning models are standardized using Triton. As of June 2025, future plans include integrating NVIDIA TensorRT-LLM to further optimize inference performance. In addition, Inworld utilizes Oracle Cloud Infrastructure (OCI) for high-performance computing and ultra-low-latency RDMA clusters to enhance responsiveness.

To maximize efficiency, Inworld Core features optimized model architecture, improving throughput with minimal quality degradation. Performance gains stem from state-of-the-art inference algorithms, refined kernel optimizations, and improved request scheduling. As of June 2025, ongoing developments include expanding on-device model deployment, ensuring AI can run locally where needed, reducing cloud dependency, and further improving latency.

Safety & Moderation Tools

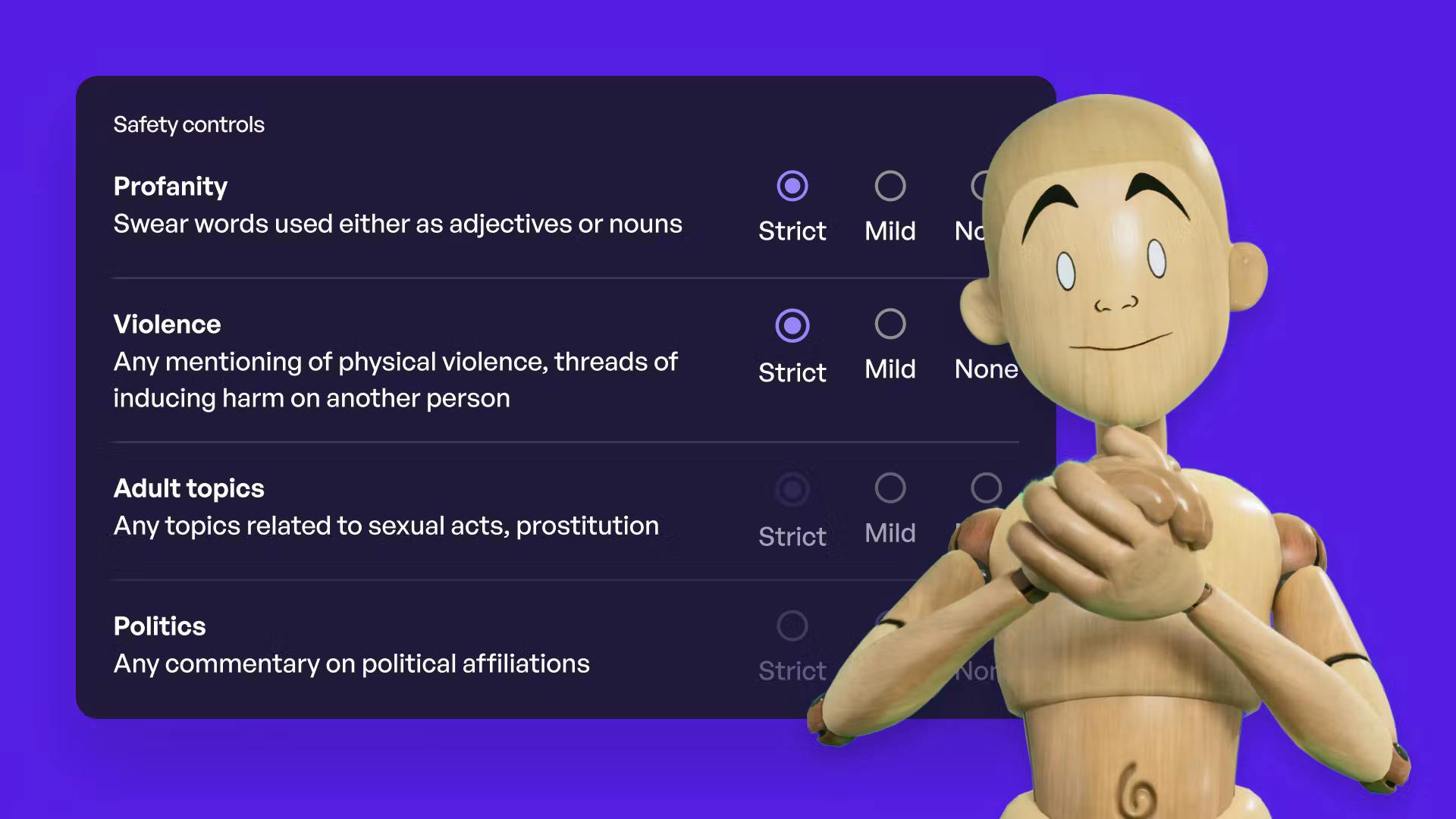

Source: Inworld AI

Inworld AI provides a set of safety and moderation tools designed to ensure that AI-driven characters behave appropriately and align with developer-defined constraints. These tools allow developers to regulate interactions while maintaining an immersive, in-world experience.

The Configurable Safety system enables developers to control the range of topics that characters can discuss. It includes adjustable filters for profanity, violence, mature themes, alcohol, substance use, politics, and religion, allowing for tailored content moderation based on audience and game context. This feature is embedded within the Contextual Mesh, which also incorporates player-specific Relationships, Profiles, and Fourth Wall awareness to ensure AI interactions remain consistent with game lore and character constraints.

Beyond filtering sensitive topics, the system enforces strict content guidelines by prohibiting hate speech and content that promotes self-harm. By integrating these moderation tools directly into character AI, Inworld ensures that AI-driven interactions remain engaging while adhering to ethical and regulatory standards.
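One way to picture the split between adjustable filters and fixed prohibitions is a small configuration object. The sketch below is an assumption-laden illustration: the topic identifiers mirror the categories listed above, but the `SafetyConfig` class, its method names, and the idea of an upstream topic detector are hypothetical rather than Inworld's actual interface.

```python
from dataclasses import dataclass, field

# Topic categories taken from the text; the identifiers themselves are hypothetical.
ADJUSTABLE_TOPICS = frozenset(
    {"profanity", "violence", "mature_themes", "alcohol",
     "substance_use", "politics", "religion"}
)
ALWAYS_BLOCKED = frozenset({"hate_speech", "self_harm"})


@dataclass
class SafetyConfig:
    """Per-game safety settings: which adjustable topics a character may discuss."""
    allowed_topics: set[str] = field(default_factory=set)

    def __post_init__(self) -> None:
        unknown = self.allowed_topics - ADJUSTABLE_TOPICS
        if unknown:
            raise ValueError(f"not an adjustable topic: {sorted(unknown)}")

    def permits(self, detected_topics: set[str]) -> bool:
        """Return True if a response touching these topics may be shown."""
        if detected_topics & ALWAYS_BLOCKED:
            return False  # fixed prohibitions cannot be switched off
        restricted = detected_topics & ADJUSTABLE_TOPICS
        return restricted <= self.allowed_topics


# A mature shooter might allow violence and profanity; a kids' title allows nothing.
shooter = SafetyConfig({"violence", "profanity"})
kids = SafetyConfig()
```

Rejecting always-blocked topics at construction time makes it impossible to configure a character that permits hate speech or self-harm content, regardless of how permissive the rest of the settings are.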

Game Engine Integrations

Inworld AI provides direct integrations with leading game engines, allowing developers to incorporate AI-driven characters seamlessly. The two primary integrations are for Unreal Engine and Unity, each offering tools for real-time NPC interactions and customization.

Unreal Engine Plugin: The Inworld AI plugin for Unreal Engine is compatible with the updated version of the engine and supports deployment across multiple platforms, including Windows, Mac, iOS, Android, and Oculus. It integrates with MetaHuman Creator to generate high-fidelity character models and enables voice-to-voice communication. The plugin also supports advanced NPC behavior systems, allowing developers to build AI-driven characters that dynamically react to player actions. It includes tools for dialogue management, scene construction, and AI-driven character customization.

Unity SDK: The Unity SDK provides a modular structure, enabling developers to customize character behavior by adding or removing components as needed. It supports WebGL, allowing for web-based publishing of AI-driven projects. Integration with Ready Player Me avatars facilitates customizable character creation. Additionally, the SDK includes acoustic echo cancellation for improved voice interactions and supports multi-agent systems, enabling NPCs to interact with each other and players simultaneously.

API Access

Inworld AI provides API access to enable developers to integrate AI-driven characters and interactions into their applications. These APIs offer varying levels of functionality, from basic character sampling to fully automated AI management, allowing for flexibility in implementation.

The Studio REST API is designed for programmatic character creation and management. It allows developers to batch import, update, and delete assets while automating updates to character descriptions and knowledge bases. Additionally, it supports the automatic creation of workspaces tailored to individual players, ensuring personalized interactions.

For simpler integrations, the Simple API provides a lightweight solution for testing and embedding AI characters into existing game codebases. It is particularly suited for web-based and mobile games and offers two integration modes: Simple Integration for quick implementation and Fully Managed Integration for deeper customization.

The Client API enables more advanced session management, allowing developers to configure API keys, set user parameters, and create scene-based character interactions. This API streamlines multiplayer and persistent AI interactions by managing session states automatically.

Market

Customer

The game development industry is divided into three main categories: AAA, AA, and indie studios, each with different levels of budget, production scale, and willingness to adopt emerging technologies. AAA studios represent the highest tier, producing games with multimillion-dollar budgets and long development cycles spanning several years. These studios are often cautious about adopting new technologies, but are beginning to experiment with generative AI NPCs. Companies like Niantic and Nintendo are exploring AI integration, driven by executive mandates to lean into the technology. However, AAA adoption remains in its early stages, with most innovation occurring at smaller studios.

AA studios occupy the middle ground, balancing moderate budgets with high production standards to appeal to a broad audience. These studios are more likely to adopt AI tools than AAA studios due to shorter development cycles and the need for cost-efficient innovation. Meanwhile, indie studios, which operate with smaller teams and lower budgets, rely heavily on innovation to differentiate themselves. While many lack the resources to invest in AI, some have successfully built entire games around AI-driven reasoning, leading to increased player engagement and monetization. A notable example is Playroom, a six-person studio that leveraged Inworld to manage AI-driven interactions, shifting from a fragmented multi-API setup to a single AI partner.

As of November 2023, Inworld AI’s partners are primarily non-AAA studios, which conduct playtests and operate on shorter 1–6 month development cycles. The technology has also been integrated into community-created mods for games like Skyrim, Stardew Valley, and Grand Theft Auto V. While Gibbs expects large studios to drive most of the business in the long run, Inworld is currently using data from smaller partners to refine its product and build case studies for eventual AAA adoption. The company has already launched AI NPC experiences in collaboration with Team Miaozi, Niantic 8th Wall, LG UPlus, and Alpine Electronics.

At the 2025 Game Developers Conference (GDC), Inworld AI emphasized its transition from AI prototypes to production-scale systems by spotlighting real games already live and reaching millions of users. The company critiqued current AI tooling as inadequate for game development, citing opaque pricing, latency, and lack of control as core blockers, and positioned itself as a solution built for scale, efficiency, and developer autonomy. The company also highlighted several partner demos that showed how studios are deploying AI features in production environments. For example, Streamlabs showcased an Intelligent Streaming Agent capable of real-time commentary and scene control, enabled by response times reduced to 200ms through Inworld’s infrastructure. Similarly, Wishroll’s Status, a social simulation game that surpassed one million users shortly after launch, used Inworld’s model optimization services to cut AI costs by 90% while maintaining high-quality user experiences.

Beyond gaming, Inworld is expanding into creative, marketing, and training applications. In the marketing sector, AI characters can bring brand mascots to life and extend ad campaigns through immersive digital experiences. In training, the technology is being explored for customer onboarding, AR / VR learning, and AI-powered tutoring, offering customized AI instructors for interactive learning. The company remains committed to open-source development, with CTO Ermolenko stating that by “working with the open-source developer community, we’ll push forward innovations in generative AI that elevate the entire gaming industry.”

Ultimately, Inworld AI’s core users are game designers, developers, and creatives, leveraging AI to streamline production, enhance immersion, and explore new forms of interactive storytelling.

Market Size

The US video game market is projected to grow from $62 billion in 2024 to $193 billion by 2033, reflecting a CAGR of 13.45%. The global market is similarly expanding, with China, Japan, and Korea accounting for 800 million players who spent a combined $70.5 billion in 2023. These three regions represent 25% of global players and 40% of total video game spending. Inworld AI supports multilingual localization, offering Chinese, Japanese, and Korean, allowing it to reach 9 out of the top 10 revenue-generating gaming markets in their native language.

The adoption of AI in gaming is increasing, with 49% of surveyed game industry professionals indicating their workplaces had integrated AI by 2023. The global market for AI in video games was valued at $1.1 billion in 2022 and is projected to grow to $11 billion by 2032, at a CAGR of 26.8%. AI-driven gaming experiences, defined as interactive technologies that enhance player engagement, are fueled by continuous advancements in computing power, GPUs, and AI models.

Beyond AI, the game development tools market, which includes software for designing, testing, and optimizing games, was valued at $362.2 million in 2022 and is expected to grow to $1.1 billion by 2032, with a CAGR of 11.6%. This growth reflects increasing demand for automation, AI-assisted development, and tools that streamline content creation across all levels of the gaming industry.
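The growth rates quoted in this section follow the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The short sketch below recomputes them from the rounded endpoints given in the text; the function name is arbitrary, and small differences from the published rates are to be expected because the underlying reports presumably use unrounded figures.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Endpoints as stated in the text (USD billions).
us_games = cagr(62, 193, 2033 - 2024)         # US video game market
ai_in_games = cagr(1.1, 11, 2032 - 2022)      # AI in video games
dev_tools = cagr(0.3622, 1.1, 2032 - 2022)    # game development tools

for label, rate in [("US games", us_games),
                    ("AI in games", ai_in_games),
                    ("Dev tools", dev_tools)]:
    print(f"{label}: {rate:.2%}")
```

The US market figure reproduces the stated 13.45% closely. The rounded endpoints for AI in games and development tools yield roughly 25.9% and 11.7%, close to but not identical with the quoted 26.8% and 11.6%.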

Competition

The market for AI-powered NPCs and interactive character engines is still emerging, with both startups and established players developing competing solutions. While some focus directly on gaming, others provide broader AI chatbot and virtual world solutions that overlap with Inworld AI’s capabilities.

Startups

Character.AI: Founded in 2021, Character.AI is a consumer-facing AI chatbot platform that enables users to create personalized AI characters using LLMs and deep learning models. The company raised a $150 million Series A at a $1 billion valuation, led by Andreessen Horowitz, in March 2023. However, it is not primarily targeted at gaming, instead serving broader chatbot applications. In 2024, co-founder and CEO Noam Shazeer returned to Google, potentially impacting the company’s strategic direction.

Convai: Founded in 2022, Convai focuses on embodied AI characters for virtual worlds, allowing developers to create AI-driven NPCs and integrate them into Unity, Unreal, and NVIDIA Omniverse. Started by ex-NVIDIA engineer Purnendu Mukherjee, the company raised a $5 million seed round in October 2022 and provides a tiered pricing model, ranging from developer plans ($0–$99/month) to enterprise offerings for larger studios. However, unlike Inworld AI, Convai does not have its own character engine, requiring integration with third-party tools for full functionality.

Artificial Agency: Founded in 2023, Artificial Agency is developing an AI behavior engine designed to generate more realistic NPCs by giving them motivations, rules, and dynamic goals. Unlike traditional decision tree-based NPCs, its system allows for more emergent behavior. The company raised $16 million in May 2024, including a $12 million seed round co-led by Radical Ventures and Toyota Ventures. Its founding team comes from Google DeepMind and is focused primarily on NPC logic rather than broader generative AI applications.

Established Players

Nvidia: In addition to its core chip design business, Nvidia is developing its Avatar Cloud Engine (ACE), an AI tool designed to generate dynamic NPC reactions in video games. ACE launched in June 2024, and Nvidia’s infrastructure and deep AI exposure position it as a potential competitor in the AI-driven character space. However, unlike Inworld AI, which offers a full character engine, ACE is primarily focused on enhancing NPC behavior rather than providing an end-to-end AI development platform.

While ACE may pose a competitive threat to Inworld AI as it develops, it is primarily an adjacent offering. For example, in May 2025, Logitech G’s Streamlabs demonstrated how its streaming assistant leverages Nvidia ACE together with Inworld’s real-time AI framework, enabling the assistant to observe gameplay, process multimodal inputs, and deliver contextually relevant responses and actions during a live stream.

Business Model

Although specific pricing details are not available, Inworld AI offers both free and paid plans. The free plan, suited for prototyping, includes up to 5K API interactions per month and free Studio and Arcade interactions. The paid plans are pay-as-you-go and include unlimited character creation, integrations, workspaces, and analytics.

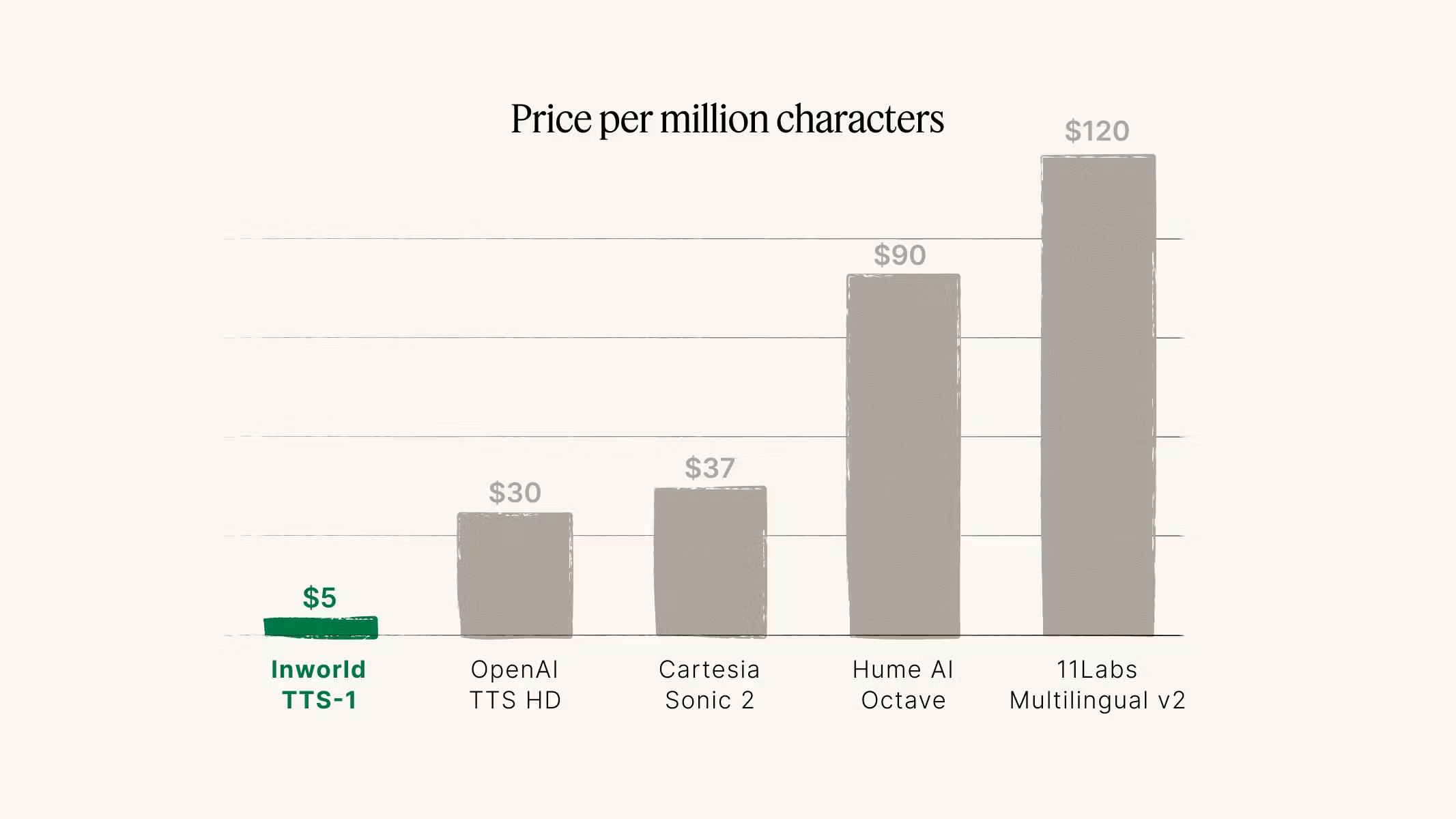

According to Playroom, an Inworld customer, the pricing model is designed for gaming use cases, in contrast to general-purpose solutions such as OpenAI or ElevenLabs that are used for prototyping. Because gaming scenarios require large volumes of fast generation, the pricing model needs to be different. In addition, Inworld offers direct licensing of its TTS within existing products. According to the company, Inworld’s TTS is cheaper than similar offerings from OpenAI, Cartesia, Hume, and ElevenLabs.

Source: Inworld AI

Traction

Inworld AI has an established presence in the gaming industry through partnerships, product adoption, and market validation. According to a 2023 survey of 1K US gamers, 99% believe that advanced AI NPCs will enhance gameplay experiences, highlighting the demand for generative AI in gaming.

The company has secured partnerships with Ubisoft, NVIDIA, and Microsoft, reinforcing its credibility among major industry players. At the 2024 Game Developers Conference (GDC), Inworld AI launched a joint demo with Ubisoft and NVIDIA, showcasing “NeoNPCs”, which allow NPCs to interact dynamically with players. In 2023, the indie game Vaudeville gained traction on Steam for its AI-driven NPCs, powered by Inworld AI. The same year, the company partnered with Microsoft’s Xbox in a multi-year co-development agreement to build AI-powered game development tools. This collaboration includes an AI design co-pilot for automating scripts, dialogue trees, and quests, as well as an AI character runtime engine for integrating real-time NPC interactions directly into game clients.

Beyond direct studio partnerships, Inworld AI has gained industry-wide recognition, being featured in the Unity Asset Store’s AI Solutions marketplace.

Valuation

Inworld AI raised a $56 million Series B in August 2023, led by Lightspeed Venture Partners, bringing its post-money valuation to $500 million. Other notable investors in this round included Stanford University, Samsung Next, Microsoft’s M12 fund, First Spark Ventures (co-founded by Eric Schmidt), and LG Technology Ventures. As of June 2025, the company had raised a total of $125.7 million in funding.

In addition to institutional investors, prominent angel investors backing Inworld AI include Twitch Co-Founder Kevin Lin, Oculus Co-Founder Nate Mitchell, Animoca Brands Co-Founder Yat Siu, The Sandbox Co-Founder Sebastien Borget, and NaHCO3, the family office of Riot Games Co-Founder Marc Merrill.

Key Opportunities

Expanding Beyond Gaming

While AI-generated NPCs have been primarily developed for gaming, their applications extend beyond the industry’s long release cycles, particularly for AAA studios. AI-driven characters can be integrated into marketing campaigns, virtual entertainment, and interactive media. Inworld AI’s technology enables brands to create AI-powered mascots and virtual influencers, bringing brand personas to life and extending ad campaigns into immersive digital experiences.

Beyond entertainment, AI characters can also be used in training and education. Companies and educators can implement AI-driven characters for customer onboarding, AR/VR training, and interactive learning experiences. AI-powered virtual instructors could offer customized lessons, making training programs more adaptive and engaging.

Rising Demand for Virtual Environments

The success of social-centric gaming platforms like Minecraft and Roblox suggests that the future of the metaverse will be built on social interaction. One of the key challenges facing metaverse platforms is low concurrent user activity, leading to empty, underpopulated virtual spaces. AI-driven NPCs can bridge this gap by creating persistent, engaging virtual interactions that simulate a thriving digital community.

The Inworld AI team believes that despite fluctuations in metaverse hype, companies remain bullish on the market’s potential, with Citi projecting its value at roughly $8 trillion by 2030. Inworld AI’s NPCs could enhance engagement, drive retention, and support organic community-building in virtual spaces, making them a critical component of the metaverse ecosystem.

Key Risks

Ethical Concerns

The use of AI in creative work has raised ethical concerns, particularly regarding the generation of voices based on external data. This practice can be difficult to regulate and may lead to public backlash, potentially damaging brand reputation within the video game community, as seen in recent controversies. In 2024, SAG-AFTRA signed a deal with AI voice technology company Replica Studios, allowing its voice actor members to collaborate in creating digital voice replicas for licensing in video games. However, this agreement sparked concern among voice performers, who fear that such advancements could threaten the future of their profession and diminish opportunities for human actors.

AI Model Costs

Integrating AI-powered NPCs in video games introduces the challenge of managing inference costs, as each interaction requires API calls to large language models hosted on cloud servers. These costs could increase the final price of games for players, raising concerns about affordability and accessibility.

However, it remains uncertain how these additional costs will be distributed, as developers must find a pricing model that covers operational expenses without alienating players. As Radical Ventures’ Daniel Mulet explains:

“The fact that there’s inference costs associated with running these systems means that it has to be a bit of a premium feature. Will you, as a gamer, pay $2.99 a month or $12.99 a month? That’s a little bit early to tell.”
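The pricing question in the quote reduces to simple break-even arithmetic: how many NPC interactions can a monthly fee fund before inference costs exceed it? The sketch below works through that calculation. The two subscription prices come from the quote, but the per-interaction cost of $0.002 is a purely hypothetical placeholder, not a figure reported by Inworld or any provider.

```python
def breakeven_interactions(monthly_price: float, cost_per_interaction: float) -> float:
    """Number of interactions a monthly fee covers before inference costs exceed it."""
    if cost_per_interaction <= 0:
        raise ValueError("cost_per_interaction must be positive")
    return monthly_price / cost_per_interaction


# Hypothetical inference cost per NPC interaction (USD); an assumption for illustration.
ASSUMED_COST = 0.002

for price in (2.99, 12.99):  # price points mentioned in the quote
    covered = breakeven_interactions(price, ASSUMED_COST)
    print(f"${price:.2f}/month covers about {covered:,.0f} interactions")
```

Under this assumed cost, the lower price point funds far fewer conversations per month than the higher one, which illustrates why heavy players could make a low flat fee unprofitable and why the viable price remains an open question.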

Untested Demand From Gaming Studios

The adoption of AI-generated assets in game development is still in its early stages, with limited data on how widely gaming studios will embrace the technology. While AAA studios have the largest budgets to invest in AI, their long development cycles mean it will take longer to gather public feedback on the success of AI-driven NPCs. Adding generative AI to games also presents risks, such as hallucinations and unpredictable NPC behavior, which could alienate players, especially younger audiences. Inworld AI is working to address these concerns by implementing guardrails to ensure stability. Despite these efforts, skepticism remains within the industry; in the 2024 State of the Game Industry report, only 21% of surveyed developers believed that generative AI would have a positive impact on game development.

Summary

Inworld AI operates in a space where AI-driven NPCs could transform game development by replacing scripted behaviors with dynamic, real-time interactions. The central challenge is the complexity of integrating orchestrated AI models, real-time inferencing, and developer constraints into game engines at scale. Inworld AI addresses this with its Character Engine, which integrates into Unreal and Unity and offers API access, Voice AI, and multi-agent NPC interactions, extending beyond gaming into marketing and training. While costly inference, ethical concerns, and uncertain AAA adoption present challenges, partnerships with Ubisoft, Microsoft, and NVIDIA help refine its tools. Going forward, success depends on proving economic viability, industry demand, and AI safety as generative AI adoption evolves.