Thesis

As of June 2025, the Fugaku Supercomputer was the world's seventh most powerful supercomputer. With almost 7.6 million processor cores (or about 1.9 million desktop computers), it had an estimated build price of $1 billion in 2020. This supercomputer can run an accurate simulation of a molecule of caffeine: at every computational step, the position and interactions of caffeine’s 24 atoms are calculated.

Already, this is a massive computational lift. Each of caffeine’s 24 atoms interacts with every other atom through quantum forces, so an accurate simulation must solve equations whose cost grows exponentially with system size. Even tiny shifts in atomic position can alter the entire molecule’s energy state, demanding calculations at femtosecond time steps and atomic-level precision. If just one more atom were added to this caffeine molecule, a full simulation would require two Fugakus. Unintuitively, the size of the problem doubles with just one additional atom.
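The doubling rule can be made concrete with a few lines of arithmetic. The sketch below takes the paragraph's own simplification at face value (one Fugaku for 24 atoms, cost doubling with each added atom); the helper name `fugakus_needed` is hypothetical, and real simulation costs depend heavily on the method used.

```python
def fugakus_needed(extra_atoms: int, baseline_machines: int = 1) -> int:
    """Machines required if every additional atom doubles the cost.

    Assumes the article's rule of thumb: `baseline_machines` Fugakus
    suffice for the 24-atom caffeine molecule.
    """
    if extra_atoms < 0:
        raise ValueError("extra_atoms must be non-negative")
    return baseline_machines * 2 ** extra_atoms


for extra in (0, 1, 2, 10):
    print(f"{24 + extra} atoms -> {fugakus_needed(extra):,} Fugaku-equivalents")
```

Under this assumption, ten extra atoms already call for more than a thousand machines, which is why adding hardware alone cannot keep pace.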

Caffeine, however, is a relatively small molecule. The typical active pharmaceutical ingredient in most therapeutics contains between 20 and 100 atoms. For a drug of roughly 100 atoms, another hundred or another thousand Fugaku supercomputers would not be enough; an accurate simulation would take, at a minimum, millions of them. At that scale, it would cost around nine times the world’s annual GDP.

Exponentially scaling problem spaces increasingly constrain humanity's most pressing challenges. Drug discovery, quantum mechanics, materials science, chemical simulations, optimization problems, and quantum-scale technologies like next-generation transistors, batteries, and photovoltaics demand computational resources that grow exponentially with problem complexity. The required buildout of classical computers for these problems is economically and technologically impossible to justify.

Quantum computing offers a solution to classical limitations, yet practical implementation has proven difficult since its theoretical foundations in the 1980s. Quantum systems are hard to engineer, harder to understand, and impossible to simulate in advance. As a result, almost forty years after its theoretical beginnings, the median quantum computer has just 32 qubits, the quantum analog of transistors. For comparison, a minimum of 100K qubits is required for a quantum computer to be useful.

PsiQuantum is a quantum computing company that is pursuing a path toward a million-qubit quantum computer. Instead of working on easy-to-manufacture but difficult-to-scale quantum technologies, PsiQuantum has done the opposite. The company prioritizes engineering quantum chips that are mass-manufacturable at existing semiconductor foundries using its novel photonic technologies. As of 2025, PsiQuantum was working to open its first quantum data center, enabling companies and researchers worldwide to address problems that have historically remained computationally locked to humanity.

Founding Story

Source: Forbes India

PsiQuantum is a quantum computing company founded in 2016 by Jeremy O'Brien (CEO), Terry Rudolph (Chief Architect), Pete Shadbolt (Chief Scientific Officer), and Mark Thompson (Chief Technologist). These four quantum physicists came together and brought decades of foundational research experience with the mission to build the world's first commercially useful quantum computer.

The story of PsiQuantum’s founding is unlike that of many other companies. It did not emerge from a sudden breakthrough, but from a slow, deliberate march of incremental research done by O’Brien and his colleagues. Leading the team is O’Brien, an Australian quantum physicist with over 25 years of research on quantum computing, 150 publications, and over 46K citations. Prior to PsiQuantum, O’Brien was a Professor of Physics and Electrical Engineering at Stanford University and the University of Bristol, and held various visiting professorships at Osaka University, Hokkaido University, and the University of Tokyo.

His obsession with quantum computing began in 1995, when, as a 19-year-old physics and mathematics undergraduate at the University of Western Australia, he read an article about quantum computing. After completing his undergraduate studies at the University of New South Wales, where he had transferred specifically to study honors physics, O’Brien remained at the university and began a PhD in the field in 1998.

Under the supervision of Bruce Kane, who himself had pioneered the Kane quantum computer that same year, O’Brien spent the next three years creating a quantum computer using single phosphorus atoms nanofabricated on silicon, along with additional research on the material physics of superconductors, organic conductors, and electrons.

Although his thesis was published in 2001 along with a patent, O’Brien grew pessimistic. The dominant thinking at the time about how to build quantum computers was the “noisy intermediate-scale quantum” (NISQ) view – that 1K qubits was all that was needed to build a usable quantum computer. Thus, most experimentalists built small systems designed for a few dozen to a hundred qubits and worked to scale such systems incrementally.

As O’Brien noted, progress on quantum computers at the time came mainly from “magnetic resonance and ion trap systems,” which could scale well enough to reach 1K qubits, but no more. O’Brien disagreed with the view that this was adequate. Contrary to the prevailing belief, he worked out that a practical quantum computer would require, at a minimum, a million qubits, which the quantum computing paradigms of the time could not deliver.

After his PhD, O’Brien read the work on photonic qubits coming out of Andrew White’s research lab at the University of Queensland and joined the group as a postdoc. By 2003, O’Brien had demonstrated the world’s first optical quantum-controlled NOT gate, a huge milestone towards building a fully functioning quantum computer. Analogous to silicon’s mass-manufacturable transistors, O’Brien had built a very large, three-meter quantum transistor using mirrors and optical principles. His work had two critical limitations: the setup had to be miniaturized, and production of the quantum building block had to be scaled. If these could be overcome, then building a scalable quantum computer would be possible. This would require three skills: quantum physics, silicon photonics, and the know-how to engineer the two together. This shifted O’Brien’s interest from traditional quantum to optical quantum.

O’Brien understood the quantum, but what he needed was people who understood silicon photonics and a place where he could start small-scale silicon manufacturing. In 2006, White recommended O’Brien to a larger and more funded lab with manufacturing capabilities at the University of Bristol. In under three years, O’Brien became the Founding Director of the Center for Quantum Photonics (CQP) and built a 100-person quantum research empire in Bristol. The center would eventually train hundreds of the world’s first quantum engineers.

Under the influence of O’Brien’s insights, the group viewed existing quantum computing approaches with skepticism. Instead of being enamored with the idea of building unscalable NISQ processors and attempting to scale performance afterwards, the CQP revisited the core engineering principles.

What were quantum computers for? To solve problems involving exponential state spaces that conventional computers cannot simulate, such as molecular simulations or factorization problems. Next, what scale of qubits did these problems require? From this, O’Brien concluded that doing anything useful would require a quantum computer with a million or more qubits.

This defined the research goals of the CQP: building a million-qubit quantum computer at the nanoscale is, at the end of the day, a manufacturing challenge, not a scientific one. Only one industry, then and now, can manufacture trillions of components at scale: the semiconductor industry. Thus, the CQP focused on translating large-scale photonic quantum setups in the lab onto nanoscale dimensions on silicon wafers that were compatible with existing silicon fabrication processes.

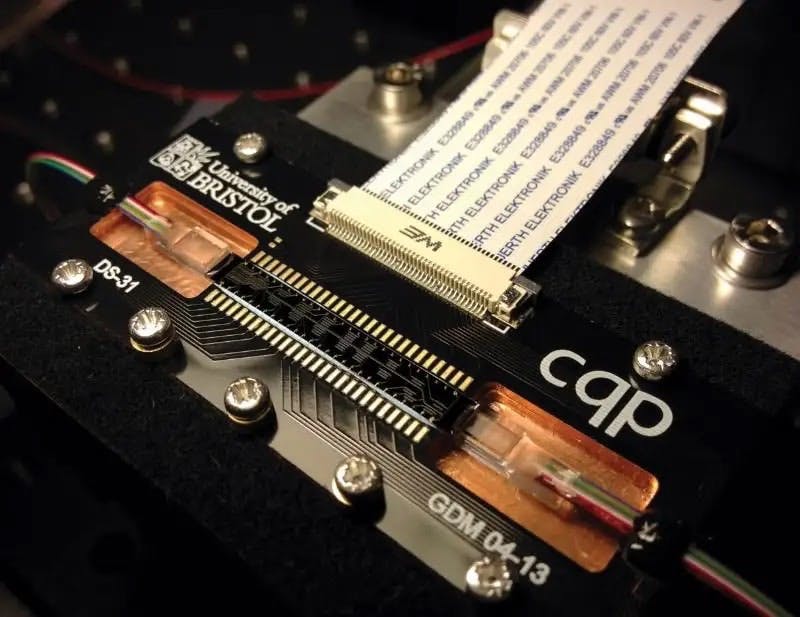

By 2008, five years after his initial optical quantum-controlled transistor, O’Brien’s team demonstrated that they could replicate the one-ton, three-meter design onto a single silicon chip you could hold in your hand, proving that one could take advantage of the established semiconductor industry, which collectively produces over a trillion chips a year, to directly design photonic (optical) quantum circuits onto a silicon wafer. Thus, the field of integrated quantum photonics was born, and the miniaturization challenge was solved.

In 2013, the team developed the world’s first two-qubit quantum processor (QPU) that was accessible online. Back in 2013, someone could connect to “Qcloud” with their laptop and access the QPU! This initial QPU is now in the British Science Museum.

Source: NewScientist

But it was the next two breakthroughs in 2014 that solidified O’Brien’s confidence in constructing a practical, million-qubit quantum computer.

Both breakthroughs came from future PsiQuantum co-founder, Shadbolt, who at the time was a PhD student under O’Brien. First, Shadbolt developed a new architecture that simplified the scaling of photonic quantum components on a silicon chip. Second, Shadbolt co-invented a “variational eigenvalue” solving algorithm that same year, which was used on the team’s photonic quantum processor. This allowed the team to solve a chemistry problem otherwise intractable for classical computers. From this, Shadbolt built the first public API to a quantum processor, allowing other researchers to access Bristol’s quantum hardware.

With a path forward towards scaling millions of photonic quantum components on a silicon wafer and a working software layer to utilize the quantum processors, O’Brien spun off the group as PsiQuantum in 2015 with the mission “to build and deliver the world's first large-scale, fault-tolerant, error-corrected quantum computer.” Importantly, it would skip a lab-scale quantum demonstration and jump straight into building a large-scale quantum computer that would outperform any supercomputer in the world.

After meeting Peter Barrett from Playground Global, O’Brien was convinced to relocate to Silicon Valley in April 2016 and raised a $13 million seed round. The original founding team of PsiQuantum, alongside O’Brien, included Rudolph, Shadbolt (who had left O’Brien’s group to obtain a post-doc at Imperial College London in theoretical quantum under Rudolph), and Thompson. The four co-founders had already spent years working together at the University of Bristol (O'Brien, Thompson, and Shadbolt) and Imperial College London (Rudolph and Shadbolt) and shared O’Brien’s philosophy of scalable-first quantum that he developed back in 2001.

Mark Thompson studied physics at the University of Sheffield before obtaining a PhD in Electrical Engineering at the University of Cambridge in 2006. During this time, Thompson was also a researcher and engineer at the UK-based Bookham Technology, a pioneering startup at the time that integrated photonic components onto silicon wafers. After graduating, Thompson initially joined Toshiba Corporation as a development engineer and established their silicon photonics research center. This became valuable for PsiQuantum afterwards, as Thompson had seen firsthand the immense technological and economic difficulty of scaling photonic components from lab to large-scale fabrication production. In 2010, Thompson left to join the University of Bristol as a Professor of Quantum Photonics, where he became closely acquainted with O’Brien’s CQP team and eventually joined PsiQuantum to lead its silicon photonics research in 2016.

Meanwhile, Terry Rudolph grew up in southeastern Africa before moving to Queensland at age 12. Rudolph completed his undergraduate degree in physics and mathematics at the University of Queensland in 1994 before obtaining a PhD in theoretical quantum optics at York University in 1998. Rudolph then joined Bell Labs in a research position in 2001 before taking a pay cut to join Imperial College London in 2003 on an Advanced Fellowship. Notably, this was around the same time O’Brien had moved to Bristol, and the two became friends. During his time at Imperial College London from 2004 to 2015, Rudolph published four influential papers that essentially created the Pusey-Barrett-Rudolph theorem, which was hailed as the most important foundation in quantum theory. Eventually, Rudolph joined O’Brien to co-found PsiQuantum in 2016 and invented the fusion-based quantum computing (FBQC) architecture that became the foundational groundwork for PsiQuantum’s technological approach to building quantum computers. Interestingly, he is the grandson of Erwin Schrödinger, the physicist for whom the famous Schrödinger’s cat thought experiment is named.

Fariba Danesh later joined PsiQuantum as its Chief Operating Officer in January 2021, bringing over 30 years of experience in novel semiconductor product development. Previously, Danesh served as the CEO of Glo AB, another photonics semiconductor company that developed microLED displays. Danesh was also the SVP of Broadcom (a semiconductor networking company) for three years, the VP of Global Operations for Maxtor (a data storage company) for two years, COO of Finisar Corporation (a fiber optic communication product company) for four years, CEO of Genoa Corporation (a III-V semiconductor optical amplifier company) for three years, and VP of Operations and Media at Seagate Technology for eight years.

Stratton Sclavos joined as PsiQuantum’s Chief Business Officer in April 2023, bringing over 35 years of experience as a previous founder and investor. Sclavos was previously the CEO of VeriSign for 12 years, which grew to over 4K employees and $2 billion in annual revenue. Sclavos had also held executive positions at Go Corporation, Megatest, and MIPS Computers, and served as a Board Member at Salesforce, Intuit, and Juniper Networks.

Finally, Susan Kim joined PsiQuantum as its Chief Financial Officer in 2024, bringing 25 years of financial and operations experience. Kim had previously served as the CFO of PacBio, DataAI, and Katerra for a combined seven years. She had also worked as a manufacturing process engineer at AMD for four years and in a technology investment banking role at Morgan Stanley for four years.

PsiQuantum’s contrarian philosophy differentiated it from existing companies that worked on NISQ devices. PsiQuantum's mission had already looked beyond the NISQ era and allowed the company to avoid compromises on manufacturability and scalability. Over the course of nine years, with O’Brien as its visionary, Rudolph as its theorist, Shadbolt as its experimentalist, and Thompson as its engineer, PsiQuantum has built thousands of wafers of quantum chips. Where other companies that operated within the NISQ-era philosophy still struggle to reach hundred-count qubit levels, PsiQuantum is actively constructing its first large-scale quantum datacenters on three separate continents.

Today, the NISQ paradigm has fallen away in favor of massively scaled systems, in line with O’Brien’s contrarian perspective. Even John Preskill, the physicist who coined the term NISQ, said that no useful applications had emerged from the NISQ era.

Product

Source: PsiQuantum

PsiQuantum’s mission and product can be summarized in a single, though technically dense statement: PsiQuantum leverages the mature semiconductor foundry ecosystem to fabricate wafers of monolithically integrated silicon photonic processors to construct a commercially viable, fault-tolerant quantum device, which can be combined to form massive million-qubit quantum datacenters.

Each term meaningfully constrains and drives the core engineering focus of PsiQuantum.

PsiQuantum’s first constraint is to take advantage of the existing semiconductor foundry ecosystem. The semiconductor industry has developed over 60 years with trillions of dollars in investment and produces trillions of chips every year. Recognizing this manufacturing scale, PsiQuantum has ensured that its quantum processors are designed to be mass-manufactured at existing foundries like TSMC, Intel Foundry, Samsung Foundries, or GlobalFoundries.

This solves two key challenges for PsiQuantum. First, it avoids the upfront capital necessary to build a dedicated quantum processor fabrication facility, which can cost up to $20 billion. Rather, by constraining its quantum photonic processors to be compatible with the standard processes at existing foundries, PsiQuantum can tap into the existing infrastructure without bearing the astronomical initial cost or the technological complexity of managing an in-house foundry’s equipment, yield, and necessary expertise. Second, as existing foundries are designed for high-volume production, PsiQuantum gains a pathway to scale, following the same scaling trajectory that has driven Moore’s Law for 50 years. PsiQuantum is positioning itself to mass-produce the quantum computer by standing on the shoulders of the giants of the classical computing industry.

PsiQuantum’s second constraint is a simple processor design that ensures compatibility with existing semiconductor foundries while reducing the costs of chip complexity. Silicon photonics is the technology that allows silicon to operate on light, acting as an optical medium to guide and manipulate light within a processor. The qualifier – monolithically integrated – signifies that all optical components must be able to be etched directly onto a single silicon wafer. The entire processor can be etched in a unified manner, which simplifies manufacturing at foundries.

PsiQuantum’s third constraint is to produce a practical, working quantum processor. This means that the quantum processors should be fault-tolerant and scalable. As a result, PsiQuantum has focused on photonic quantum technologies, as photons are intrinsically more fault-tolerant, and ensured that its mass-produced processors can be networked together to create a “quantum datacenter” that can collectively manipulate millions of qubits.

These three constraints – manufacturing, simplicity, and scalability – emerged from O’Brien’s rejection of the NISQ philosophy that had traditionally dominated the quantum computing space in 2001.

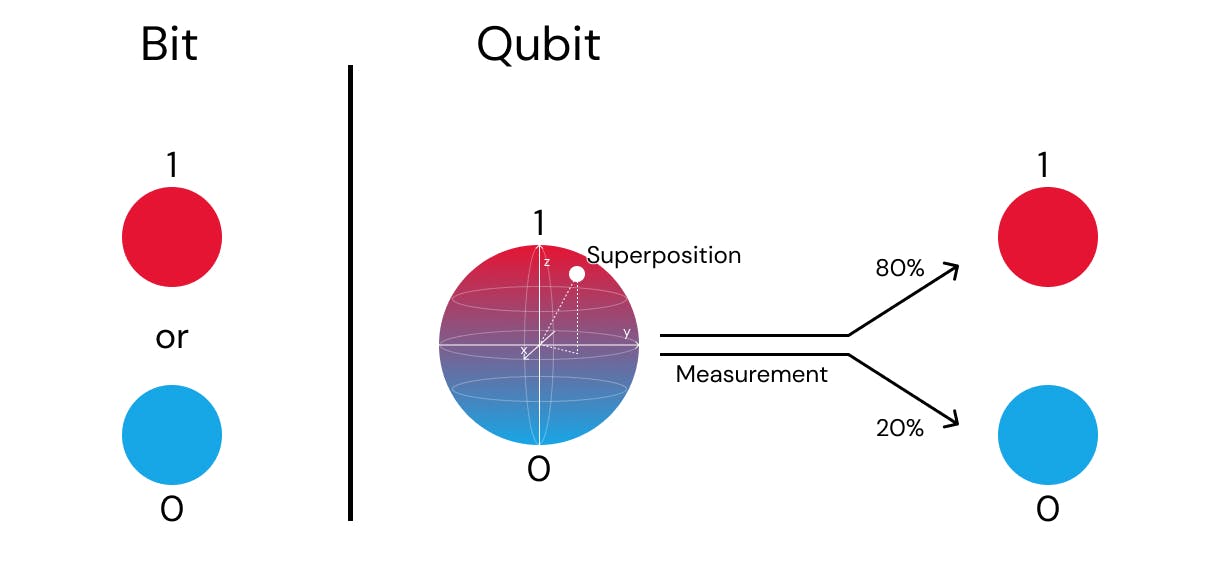

Quantum Computing 101

Classical computers are based on switching an electrical circuit on and off, representing information as discrete units of either 0 or 1. However, quantum computers perform calculations using quantum bits (qubits), which can represent a 0, 1, or any combination of the two in between. This is the quantum superposition principle.

Once prepared, PsiQuantum’s photon-based qubits can be both horizontally and vertically polarized, effectively representing both 0 and 1 at the same time, which is leveraged during computation. Importantly, the superposition can only exist while it is not observed or measured. When a measurement occurs, whether intentionally or accidentally, the photon qubit’s superposition collapses to a classical result of either horizontal (0) or vertical (1) polarization.

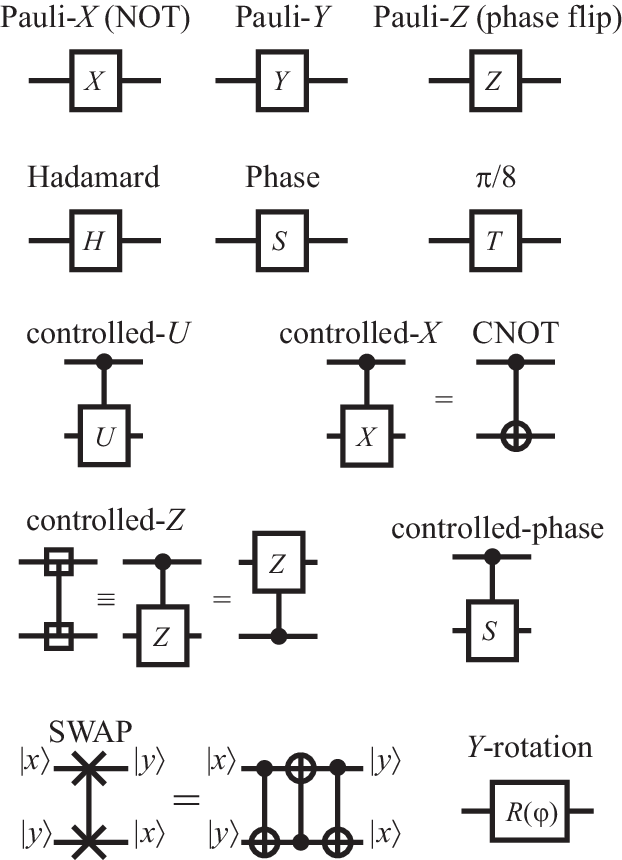

These qubits are manipulated using quantum gates, which are operators that act on a qubit’s state. For example, applying a Hadamard gate to a qubit in the 0 or 1 state leaves it with an equal probability of being measured as 0 or 1, thus creating a superposition. Applying a Pauli gate (about one of the X, Y, or Z axes) modifies the state of a qubit in a controlled way, which a researcher can use to steer a qubit toward collapsing into the 0 or 1 state. As in classical computers, applying these gate operations to specific qubits in a specific sequence encodes information that eventually yields a useful output.

Source: Lancaster PHYS483 Lecture Notes
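The gate operations described above can be sketched as small matrix multiplications. The example below is a textbook-style illustration in plain Python, not PsiQuantum's software; the helper names `apply` and `probabilities` are hypothetical. A qubit is modeled as a pair of complex amplitudes, and measurement probabilities follow from the squared magnitudes of those amplitudes.

```python
import math

# A single-qubit state is a pair of amplitudes [amp_0, amp_1].
ZERO = [1 + 0j, 0 + 0j]

S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]   # creates an equal superposition
PAULI_X = [[0, 1], [1, 0]]     # swaps the 0 and 1 amplitudes
PAULI_Z = [[1, 0], [0, -1]]    # flips the sign (phase) of the 1 amplitude


def apply(gate, state):
    """Multiply a 2x2 gate matrix by a 2-element state vector."""
    return [sum(gate[r][c] * state[c] for c in range(2)) for r in range(2)]


def probabilities(state):
    """Probability of measuring 0 or 1: the squared amplitude magnitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]


superposed = apply(HADAMARD, ZERO)
print(probabilities(superposed))                   # roughly 50/50
print(probabilities(apply(PAULI_X, ZERO)))         # all weight on 1
print(probabilities(apply(HADAMARD, superposed)))  # interference restores 0
```

Applying the Hadamard gate twice returns the qubit to 0: the two paths leading to 1 cancel, which is a small instance of the interference that quantum algorithms exploit.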

Using these gates, the control of quantum mechanics principles – superposition, entanglement, and interference – becomes possible. These phenomena allow quantum computers to explore many solutions in parallel, allowing near-exponential speedups for problems with very large search spaces like optimization, drug discovery, cryptography, or quantum physics itself.

These principles are well understood. However, the main challenges in quantum computing lie in decoherence, error correction, and scalability.

The advantage of quantum computing relies on the quantum mechanical principles of qubit superposition and entanglement. These states can be prepared reliably, but they are fragile and hard to maintain. Over time, the delicate quantum states degrade as the qubits interact with the surrounding environment, a process called decoherence. This includes any interaction with external thermal vibrations or electromagnetic interference, which will accidentally “measure” a qubit and cause it to collapse into a classical state. Therefore, most quantum computers are operated at temperatures colder than deep space (0.01 K compared to 2.7 K in space) to minimize the chance of thermal interactions, allowing qubits to maintain coherence for longer.

In a sense, environmental noise corrupts quantum information, causing one of two types of error in a qubit: bit-flips and phase-flips. For classical computers, the chance that a bit-flip error occurs, such as a 0 turning into a 1, is so small (0.0000000001% chance) that it is generally ignored for all practical purposes. This is unfortunately untrue for quantum computers: decoherence will cause errors to occur with significant frequency and, if uncorrected, they will propagate throughout the computation, rendering any final result useless.

Consequently, the longer a quantum algorithm runs and the more qubits it involves to represent additional states, the more opportunities there are for decoherence to introduce errors into the system. Error frequencies become significant within microseconds to milliseconds, well before a long-running algorithm can finish.
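A short calculation shows how quickly small per-operation errors compound. The sketch below assumes each operation fails independently with the same probability, which is a simplification; the 0.1% error rate is an illustrative value chosen for the example, not a figure from any particular machine, and `success_probability` is a hypothetical helper.

```python
def success_probability(error_per_op: float, num_ops: int) -> float:
    """Chance that num_ops independent operations all complete without error."""
    if not 0.0 <= error_per_op <= 1.0:
        raise ValueError("error_per_op must be between 0 and 1")
    return (1.0 - error_per_op) ** num_ops


ILLUSTRATIVE_ERROR_RATE = 1e-3  # assumed 0.1% per operation

for ops in (10, 1_000, 1_000_000):
    chance = success_probability(ILLUSTRATIVE_ERROR_RATE, ops)
    print(f"{ops:>9,} operations -> {chance:.3g} chance of an error-free run")
```

With these assumed numbers, a thousand operations already succeed only about a third of the time, and a million operations essentially never do.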

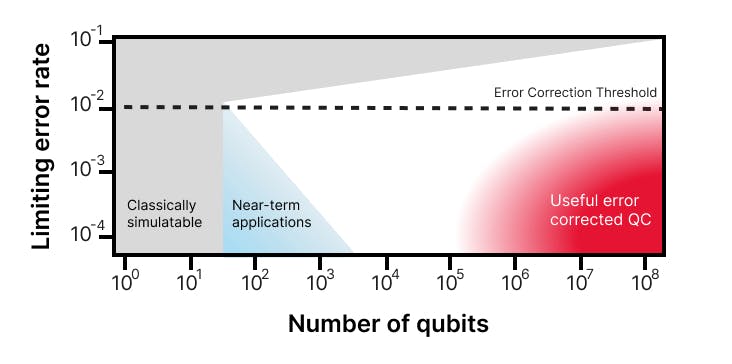

The conclusion that can be drawn is that for a functional quantum computer to exist, it must be a fault-tolerant quantum computer. From this, it is important to note that adding more qubits does not improve the performance of a quantum computer unless the error rate is also reduced.

Because decoherence is not an engineering problem that can be solved (one cannot simply build a “better” qubit), it can only be suppressed or mitigated. Thus, during computation, a fault-tolerant quantum computer must actively correct decoherence errors through quantum error correction (QEC).

This typically involves redundantly encoding a single ideal “logical qubit” across multiple imperfect “physical qubits.” Because errors occur even if a gate operation is not performed on an active qubit, every qubit must be encoded redundantly, with error correction occurring at regular intervals for all qubits. This makes it possible to suppress the error probability to a reasonable level, allowing useful quantum algorithms to be performed.
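The idea of redundant encoding can be illustrated with its simplest classical analog, a repetition code with majority voting. This is only a sketch: real quantum error correction cannot copy qubit states and must also handle phase-flips, so it relies on more elaborate codes and indirect measurements. The function names below are hypothetical.

```python
from itertools import product


def encode(bit: int, copies: int = 3) -> list:
    """Store one logical bit redundantly across several physical bits."""
    return [bit] * copies


def decode(bits: list) -> int:
    """Recover the logical bit by majority vote (use an odd number of copies)."""
    return int(sum(bits) > len(bits) / 2)


def logical_error_rate(p: float, copies: int = 3) -> float:
    """Exact chance that majority vote fails when each copy flips with probability p."""
    total = 0.0
    for flips in product([0, 1], repeat=copies):
        received = [b ^ f for b, f in zip(encode(0, copies), flips)]
        if decode(received) != 0:
            k = sum(flips)
            total += p ** k * (1 - p) ** (copies - k)
    return total


for p in (0.01, 0.6):
    print(f"physical error {p}: logical error with 3 copies = {logical_error_rate(p):.6f}")
```

When the physical error rate is low, redundancy suppresses the logical error rate sharply; when it is too high, adding copies makes matters worse, which is why qubit count and error rate have to improve together.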

This creates a scalability issue. Because the overhead of requiring multiple qubits to perform QEC scales polynomially, more points of failure are created across the system. Engineering a system to control and interconnect a large number of qubits while isolating each qubit from the environment is a challenge that has long been the barrier to achieving the scale necessary for useful quantum computers.

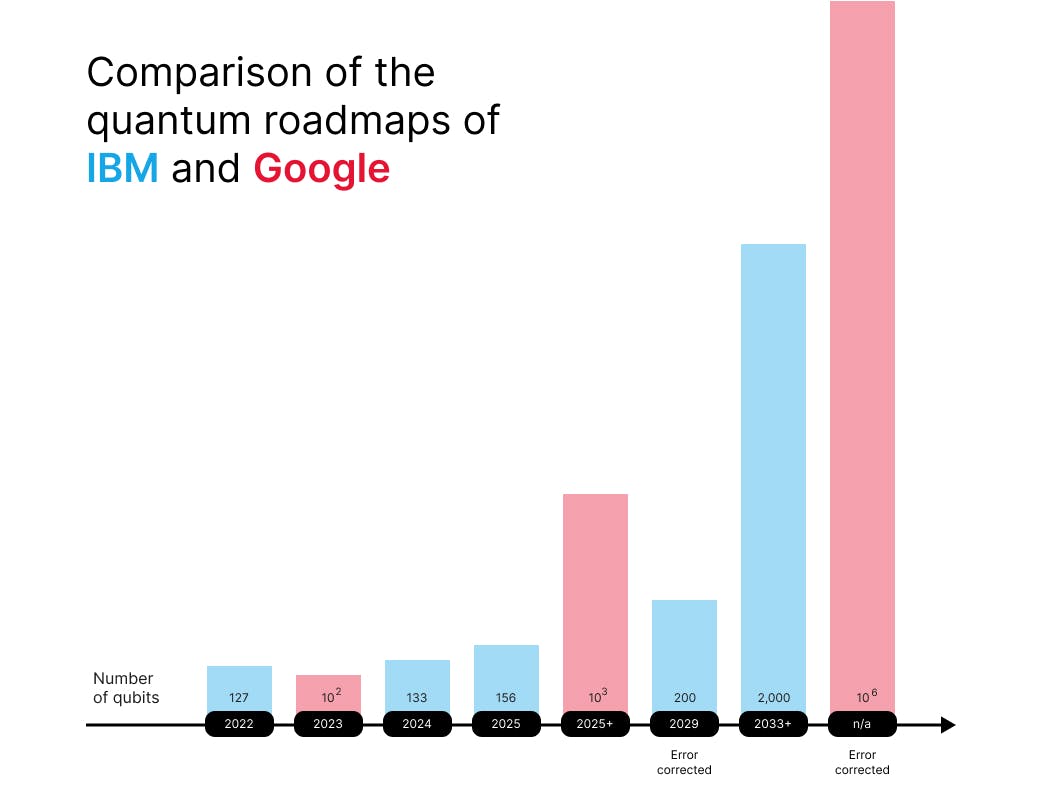

As of 2025, the world is still far from the estimated minimum of 100K qubits, at a sufficiently low error rate, that a quantum computer needs to produce useful output. For example, current state-of-the-art quantum processors from IBM and Google have 1.1K and 105 qubits, respectively, with other competitors hovering around 50-150 qubits on average.

Disregarding the two orders of magnitude gap in minimum qubit count, there remains a discrepancy of eight orders of magnitude in error rates. Even if 100K qubits are achieved, current QEC schemes will remain insufficient for applications like cracking real-world RSA codes or simulating chemical molecules.

Put together, even a basic implementation of Shor’s factoring algorithm remains out of reach for current quantum computers. The algorithm, used to break modern encryption schemes, minimally requires a fault-tolerant quantum computer with hundreds of thousands of high-fidelity qubits. Meanwhile, simulating large molecules, protein interactions, and atomic interactions in materials will require millions of low-error qubits.

Besides qubit count and error rates, there are several other factors that influence the performance of a quantum computer. In brief, they include:

Gate fidelity: the accuracy of quantum gate operations, often degraded by noise, control errors, and decoherence. This is analogous to the reliability of logic gates in GPUs (which have virtually perfect fidelities).

Code cycle time: how quickly the qubits in a system can be manipulated and information transferred between them. This is analogous to the latency of moving data between logic and memory in a GPU.

Qubit connectivity: the pattern of qubit interactions, that is, whether qubits are connected only to their nearest neighbors or in an all-to-all topology. This affects the efficiency of multi-qubit operations and is analogous to interconnect topologies in networked GPU chips.

Algorithmic efficiency: how well quantum algorithms are mapped to hardware, minimizing qubit usage and gate depth. This is analogous to optimizing MatMul operations through CUDA.
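To make the connectivity factor in the list above more concrete, the sketch below counts the extra SWAP operations needed before two qubits can interact. It assumes a simple one-dimensional chain in which only adjacent qubits can interact directly and compares it with an idealized all-to-all layout; both functions are hypothetical helpers and real devices use richer topologies.

```python
def swaps_needed_linear(i: int, j: int) -> int:
    """SWAPs required to bring qubits i and j next to each other on a 1D chain."""
    if i == j:
        raise ValueError("a two-qubit gate needs two distinct qubits")
    return abs(i - j) - 1


def swaps_needed_all_to_all(i: int, j: int) -> int:
    """With all-to-all connectivity any pair can interact directly."""
    if i == j:
        raise ValueError("a two-qubit gate needs two distinct qubits")
    return 0


for pair in ((0, 1), (0, 9), (0, 99)):
    print(pair, swaps_needed_linear(*pair), swaps_needed_all_to_all(*pair))
```

Every extra SWAP is another gate that can introduce an error, so limited connectivity raises both the run time and the error budget of an algorithm.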

The type of qubit modality, whether that be photons or trapped ions, will affect the factors above. The right question is which qubit modality offers inherent, durable advantages while suffering from problems that can be engineered away.

Classical computing offers a lesson here: the transistor unlocked the exponential growth described by Moore's Law once it became small, reliable, and, most importantly, mass-producible. Quantum computing is in a similar pre-transistor phase: researchers are still exploring various ways to build qubits, each with its tradeoffs regarding decoherence, error correction, and scalability.

The path toward achieving a functional quantum computer is unclear. What is clear is that quantum needs its transistor moment. And PsiQuantum believes that the answer lies in photonic qubits.

PsiQuantum’s Photonic Qubits

Rather than picking the qubit type that achieves the best performance on a single metric, PsiQuantum has chosen to work with photonic qubits because of their potential to achieve O’Brien’s non-negotiable end goal: a commercially viable, million-qubit, fault-tolerant system. This choice has dictated PsiQuantum’s roadmap for design, fabrication, and infrastructure.

By comparison, the superconducting circuits favored by Google and IBM are extremely sensitive to environmental noise and thus require operating temperatures of around 10 mK. For a million-qubit system, no viable cooling technology exists today that can maintain that temperature. Meanwhile, a trapped-ion system at that scale would need to control millions of individual ions with a complex array of lasers, presenting a scaling challenge of its own.

By encoding information in particles of light, the quantum system is inherently resilient to the thermal noise and decoherence that affect matter-based qubits. Thus, PsiQuantum’s system operates at a comparatively mild 4 K using liquid helium, a 1000x operational advantage. Economically, this means that bespoke dilution refrigerators can be scrapped for mature and scalable cryoplant technology that is already developed for large-scale physics experiments.

Source: PsiQuantum

Although decoherence is not a problem for photons, they bring issues of their own: it is difficult to consistently generate identical single photons, and photons do not naturally interact, making two-qubit gates difficult to implement. Photons can also be easily absorbed or scattered by materials or particles, causing information to be lost. However, PsiQuantum believes that these are solvable engineering problems with its commercially scalable Omega chipset hardware, fusion-based quantum computing algorithmic framework, and active compiler software.

Hardware: The Omega Chipset

Source: PsiQuantum

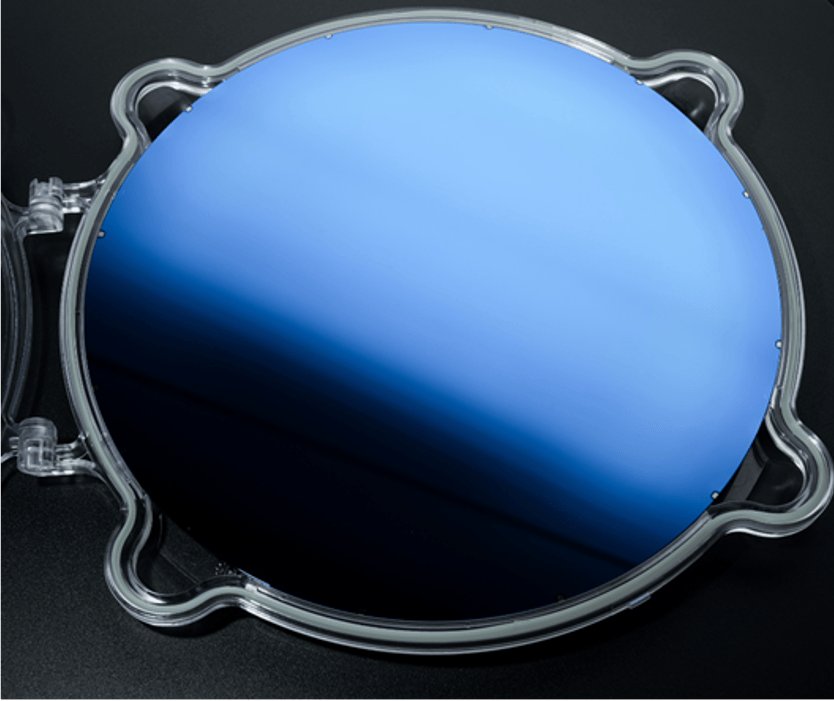

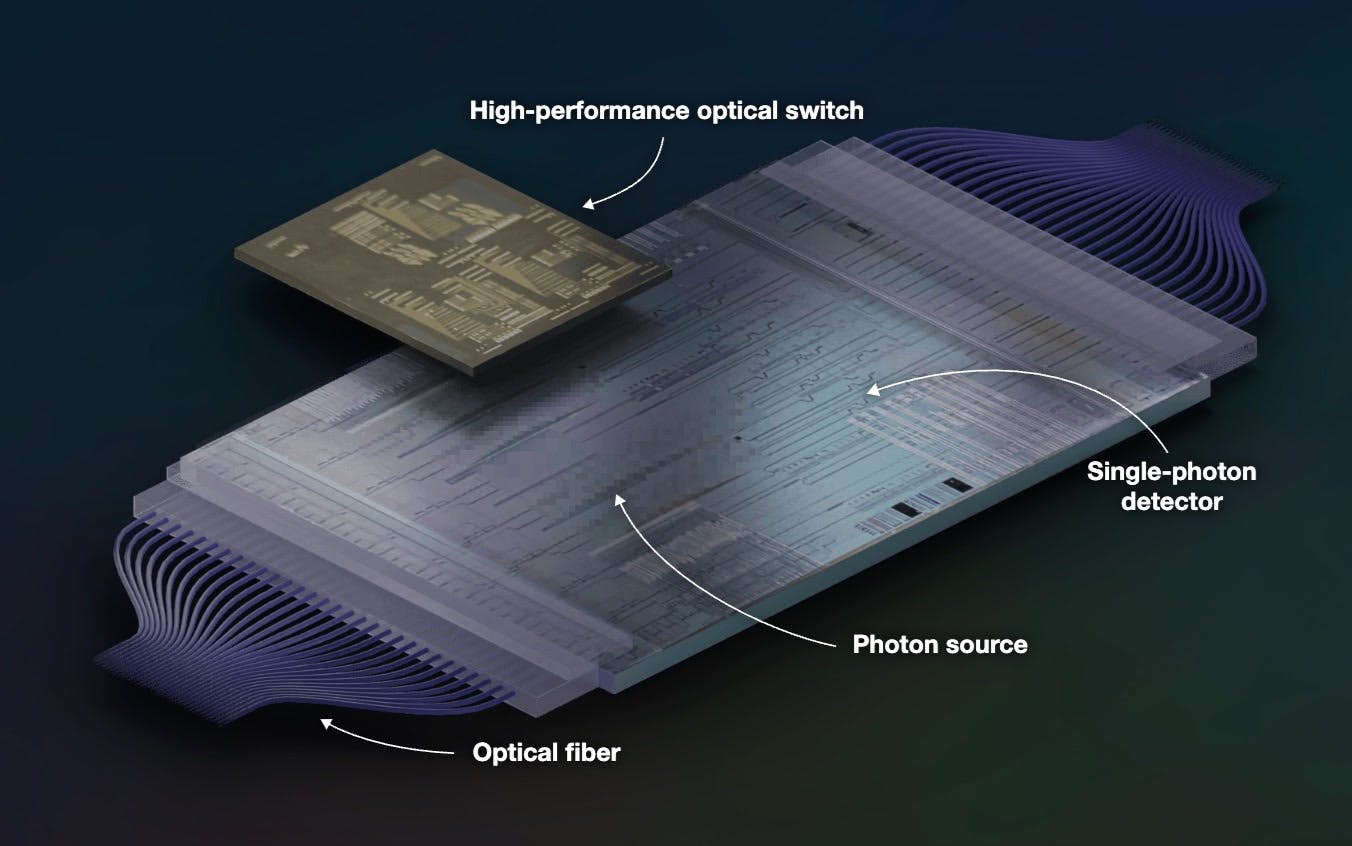

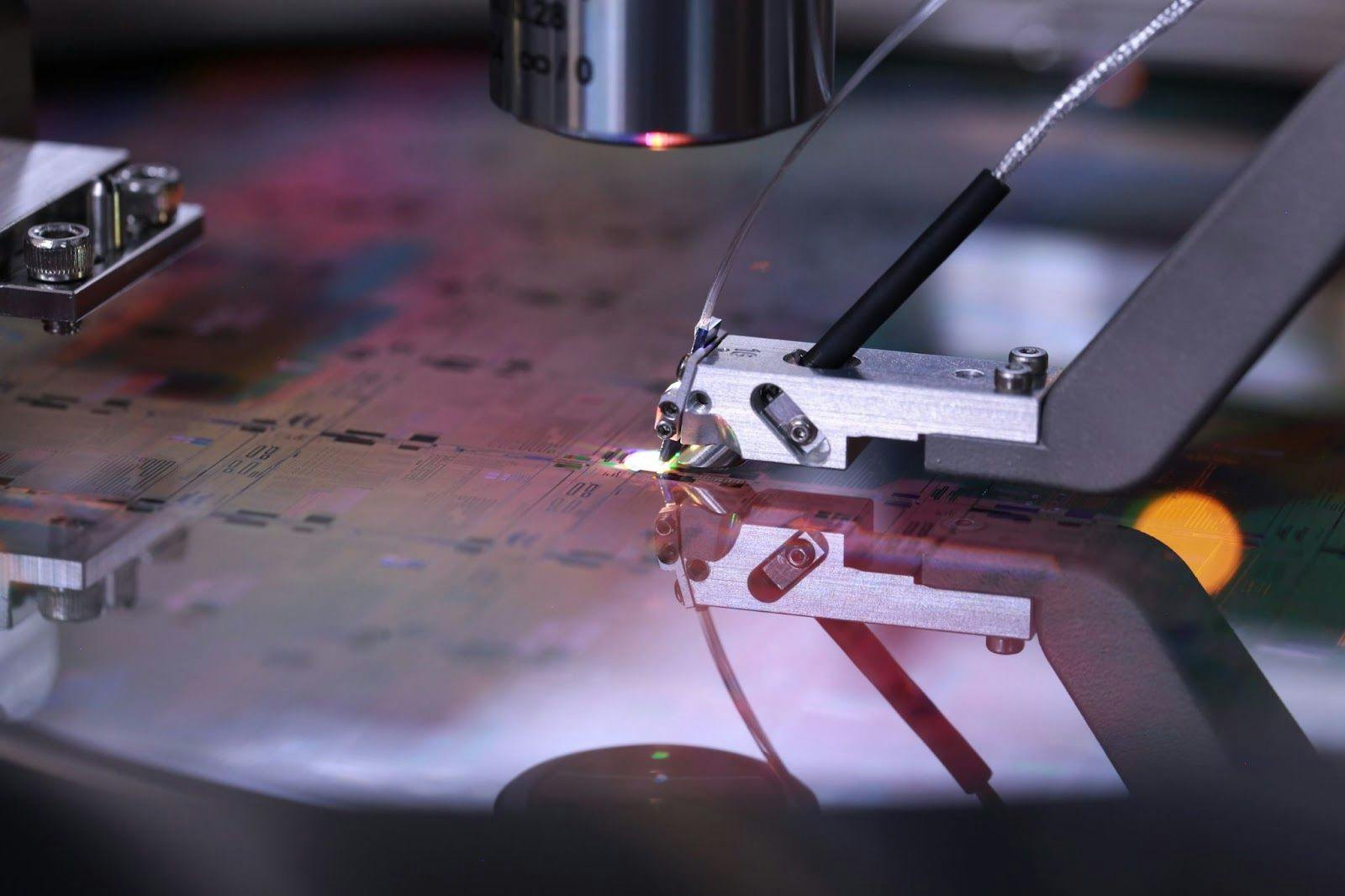

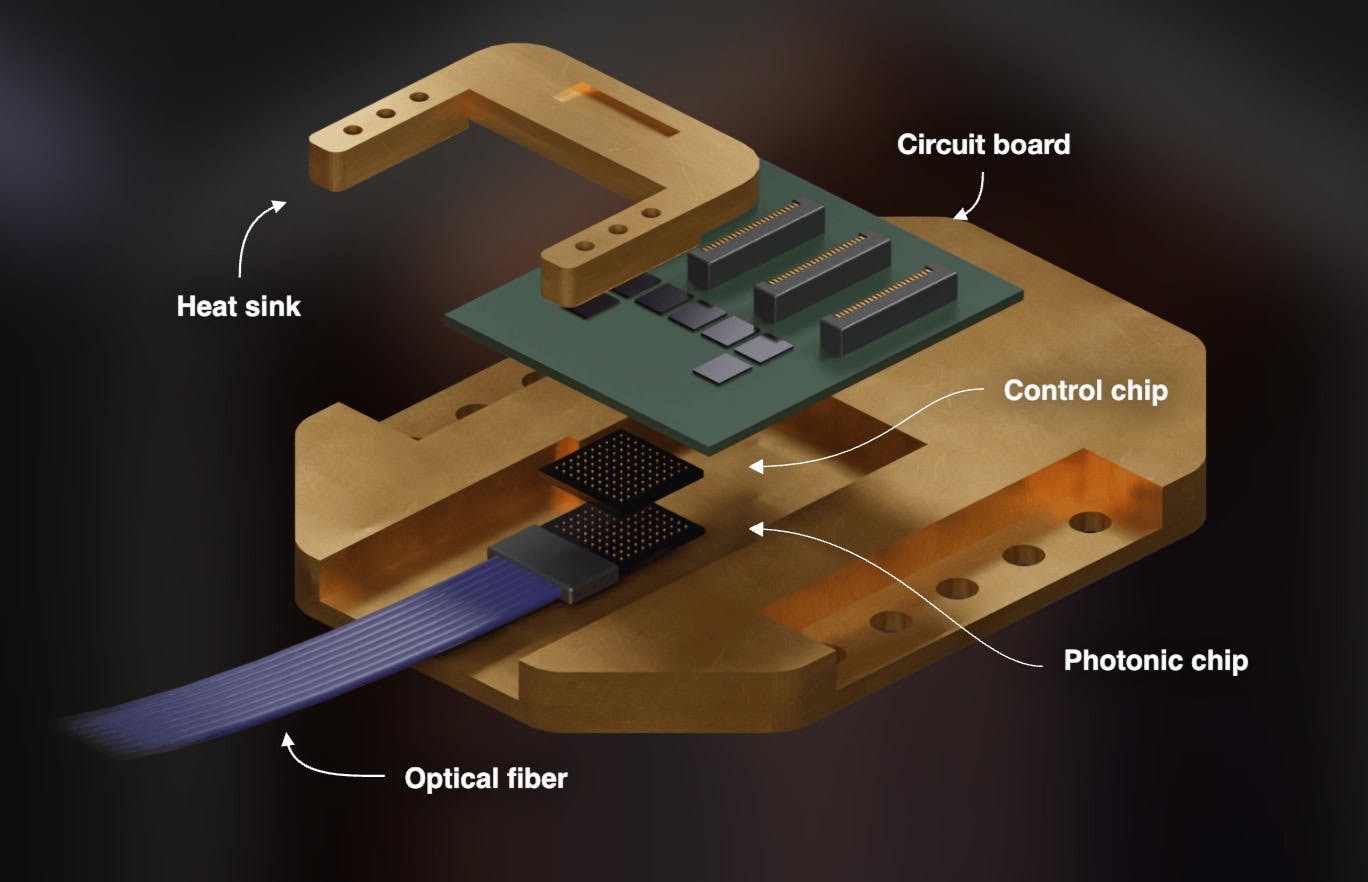

In February 2025, PsiQuantum unveiled its Omega chipset, a manufacturable platform for photonic quantum computing. Using the mature, high-volume manufacturing processes of GlobalFoundries, PsiQuantum is able to fabricate its quantum chips on 300 mm silicon wafers. This breakthrough allows PsiQuantum to take advantage of decades of accumulated knowledge, process control, and the economies of scale in the semiconductor industry.

Source: PsiQuantum

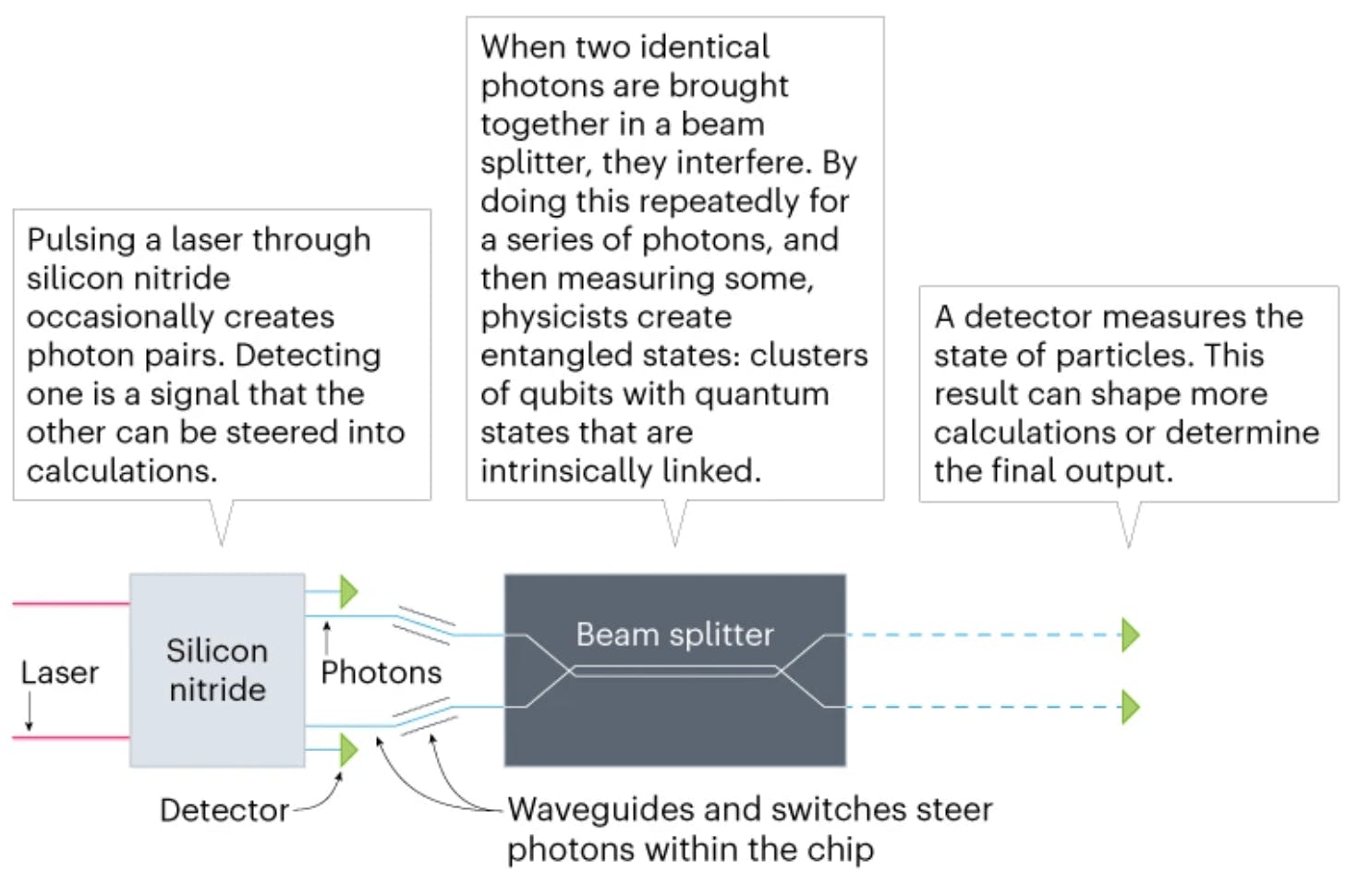

The silicon wafer contains single-photon sources based on spontaneous four-wave mixing (SFWM) in silicon nitride waveguides. By pumping laser light through these sources, PsiQuantum is able to produce pairs of photons roughly once in every 20 attempts. These photons then travel along the waveguides, which, along with components like interferometers and beamsplitters, are etched into the silicon wafer.

Source: Nature
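The "once in every 20 attempts" figure implies a probabilistic source, and a little arithmetic shows what that means in practice. The sketch below treats each attempt as an independent trial with a 1-in-20 success chance. The second function assumes several sources are fired in parallel and asks how likely it is that at least one succeeds; that parallel arrangement is an illustrative assumption and is not described in the text above. Both helper names are hypothetical.

```python
def expected_attempts(success_probability: float) -> float:
    """Average number of tries until the first success (geometric distribution)."""
    if not 0.0 < success_probability <= 1.0:
        raise ValueError("success_probability must be in (0, 1]")
    return 1.0 / success_probability


def at_least_one_success(success_probability: float, num_sources: int) -> float:
    """Chance that at least one of num_sources independent attempts succeeds."""
    return 1.0 - (1.0 - success_probability) ** num_sources


P_PAIR = 1 / 20  # from the text: roughly one photon pair per 20 attempts

print(f"expected attempts per pair: {expected_attempts(P_PAIR):.0f}")
for n in (1, 20, 90):
    print(f"{n:>2} parallel sources -> {at_least_one_success(P_PAIR, n):.3f}")
```

Under these assumptions a single source is unreliable on any given attempt, but many sources attempted together make a photon pair available with high probability, which is one reason fast on-chip routing matters.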

To control the traffic of photons, PsiQuantum has engineered a network of high-speed optical switches on the chip as well. Uniquely, PsiQuantum manufactures Barium Titanate (BTO), a novel electro-optic material, in-house as the base for its optical switches, which is more efficient than traditional materials in routing photons within the chip.

Source: PsiQuantum

At the final step, Superconducting Nanowire Single-Photon Detectors (SNSPDs), each consisting of a thin niobium nitride superconducting wire, perform the measurement. To operate, the wire is cooled below its superconducting transition temperature to around 4 K. When a single photon strikes the wire, it creates a resistive "hotspot," generating a detectable voltage pulse. These detectors are monolithically integrated onto the silicon wafers with a measurement fidelity of 99.98%.

Source: PsiQuantum

The integration of silicon nitride for waveguides, BTO for switches, and niobium nitride for detectors into a single, 26-layer manufacturing stack requires hundreds of process steps at a traditional semiconductor foundry. This makes PsiQuantum one of the few companies in the world able to mass-manufacture quantum chips.

Source: PsiQuantum

Architecture: Fusion-Based Quantum Computing

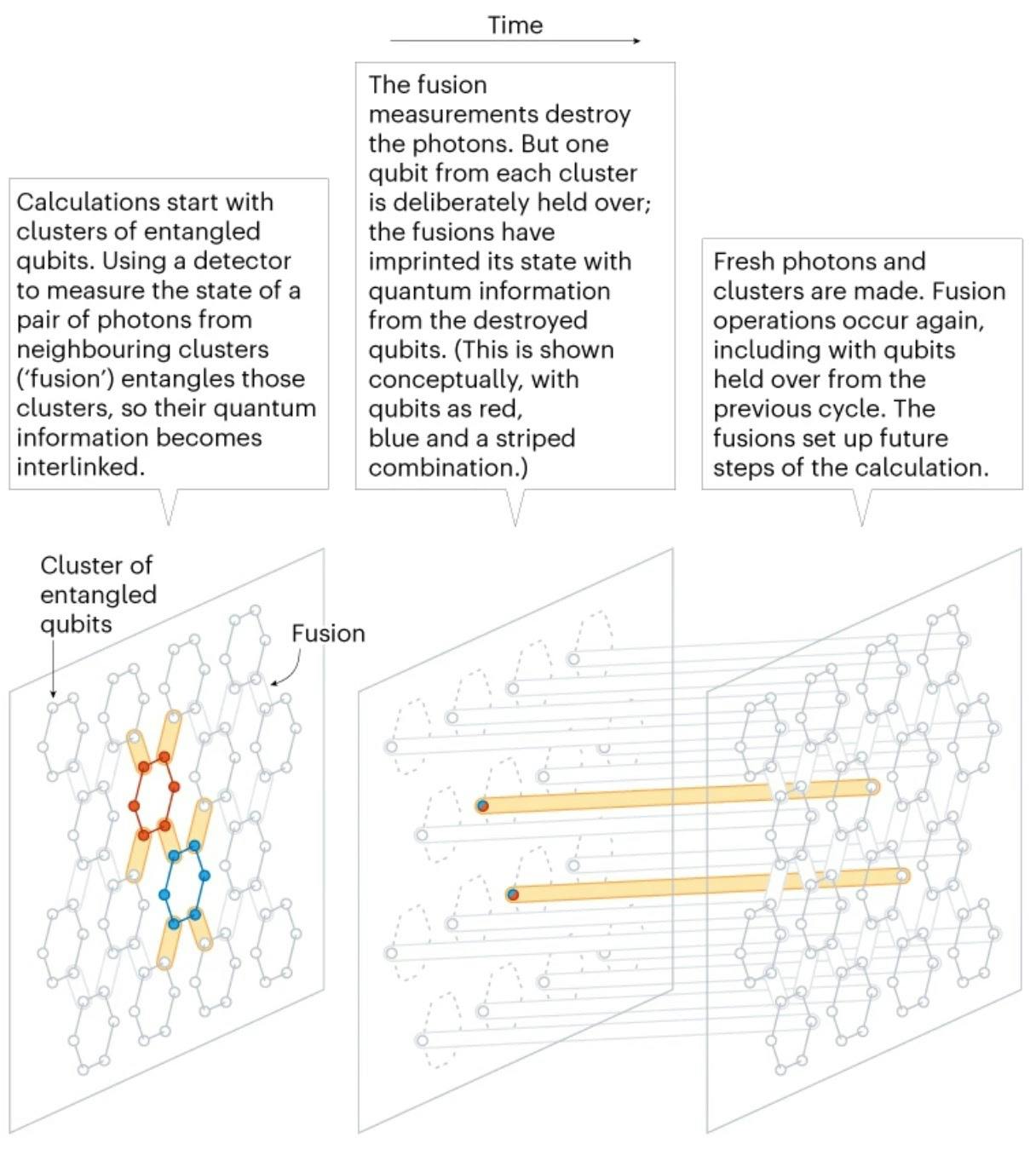

With PsiQuantum’s Omega chipset solving the manufacturability problem, the next primary bottleneck is managing qubit error propagation. For photonic quantum computing specifically, the dominant error mechanism is the loss of photons as they are absorbed or scattered while traveling through the optical components of the wafer. To solve this problem, Chief Architect Rudolph invented the Fusion-Based Quantum Computing (FBQC) architecture.

This differs from the existing Circuit-Based Quantum Computing (CBQC) architecture, which mirrors classical computing’s procedural flow as computation occurs across logical gates. Unfortunately, the CBQC model requires that most qubits maintain coherence for the entire duration of the algorithm, regardless of whether they are actively being used during computation. As the earlier Quantum Computing 101 section explains, maintaining coherence for an extended amount of time increases the chance of an error occurring in each qubit, which is catastrophic to a long-running computation.

For the sake of simplicity, FBQC can be summarized as a method to create small groups of entangled qubits just-in-time for computation rather than trying to keep all qubits “ready” for computation and maintaining global coherence. This decreases the error rate by limiting how long photons must remain coherent during computation. Meanwhile, PsiQuantum is able to entangle qubits from separate groups through fusion measurement, effectively merging the qubits’ quantum states into a larger connected system. As a result, the computation proceeds step-by-step as qubit groups are spun up from an idle state as needed.

Source: Nature

The main benefit of the FBQC architecture is that failures are localized. If a photon decoheres while it is in a computation state (rather than an idle state), the event is confined to a specific qubit group. PsiQuantum’s external classical system is then able to detect the failure, discard that attempt, and retry the computation in the next computational step with a newly spun-up qubit group. FBQC thereby converts a single, fatal photon decoherence event into a recoverable error by enabling multiple correction attempts. After experimentation, PsiQuantum found that the architecture was able to tolerate a photon loss probability of up to 10.4% per fusion measurement, roughly five times the tolerance of previous photonic architectures.
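The value of being able to discard a failed attempt and retry can be sketched with simple probability. The example below is a toy model and not PsiQuantum's error-correction analysis: it assumes each attempt fails independently with the same probability, and the 25% failure rate is hypothetical. The 10.4% figure above is a loss tolerance, not a per-attempt failure rate, and is not modeled here.

```python
def success_within_attempts(p_fail: float, attempts: int) -> float:
    """Chance that at least one of `attempts` independent tries succeeds.

    Assumption: every attempt fails with the same probability `p_fail`,
    independently of the others.
    """
    if not 0.0 <= p_fail <= 1.0:
        raise ValueError("p_fail must be in [0, 1]")
    if attempts < 0:
        raise ValueError("attempts must be non-negative")
    # All attempts fail with probability p_fail ** attempts.
    return 1.0 - p_fail ** attempts


if __name__ == "__main__":
    # Hypothetical per-attempt failure probability of 25%.
    for attempts in (1, 2, 3, 5):
        print(attempts, success_within_attempts(0.25, attempts))
```

In this model the residual failure probability shrinks geometrically with each permitted retry, which is the intuition behind turning a fatal error into a recoverable one.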

Software: Active Volume Compiler

The final bottleneck for PsiQuantum is the efficient mapping of quantum algorithms onto its hardware. Because computational cost in quantum algorithms grows superlinearly with qubit number (it scales with qubit count multiplied by gate count), it is paramount to make use of all qubits within a system. However, without a compiler that assigns qubits to computational tasks efficiently, the vast majority of qubits may sit idle while waiting for operations on other qubits to complete. In 2022, PsiQuantum unveiled its Active Volume Compiler (AVC), which minimizes this waste by parallelizing operations across distant qubits and pre-scheduling gates for computation, improving overall qubit utilization.
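The idle-qubit problem can be made concrete by measuring what fraction of qubit-steps in a schedule does useful work. The sketch below is an illustration only: the `utilization` function and both schedules are hypothetical and do not represent the AVC's actual scheduling algorithm.

```python
from typing import Sequence, Set


def utilization(schedule: Sequence[Set[int]], num_qubits: int) -> float:
    """Fraction of qubit-steps spent doing useful work.

    `schedule[t]` is the set of qubit indices that are active at step t.
    The total cost paid is num_qubits * len(schedule) qubit-steps.
    """
    if num_qubits <= 0 or not schedule:
        raise ValueError("need at least one qubit and one step")
    total_slots = num_qubits * len(schedule)
    active_slots = sum(len(step) for step in schedule)
    return active_slots / total_slots


if __name__ == "__main__":
    # Hypothetical 4-qubit schedules containing the same amount of work.
    serial = [{0, 1}, {1, 2}, {2, 3}, {0, 3}]   # two qubits busy per step
    parallel = [{0, 1, 2, 3}, {0, 1, 2, 3}]     # every qubit busy per step
    print("serial utilization:  ", utilization(serial, 4))
    print("parallel utilization:", utilization(parallel, 4))
```

Both schedules perform eight active qubit-steps, but the serial one occupies the machine for twice as long, so half of its capacity is spent idling.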

Most qubit systems are connected only to their nearest neighbors, so quantum information can be transmitted only between adjacent qubits. To some extent, this is because the mainstream qubit modalities (superconducting circuits, trapped ions, and neutral atoms) remain fixed in a grid. PsiQuantum designed the AVC to take advantage of the fact that photonic qubits can move within a chip and can be easily connected over long distances using optical fiber. By allowing some level of all-to-all qubit connectivity regardless of physical proximity, the compiler converts high-level algorithms into optimized sequences of gate operations that maximize hardware utilization.
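To see why nearest-neighbor connectivity is costly, consider how many SWAP operations are needed before two distant qubits on a square grid can interact. The sketch below is a simplified assumption: it counts only the SWAPs required to move one qubit next to the other along a shortest path, ignoring congestion and the cost of moving qubits back. The function name is hypothetical.

```python
from typing import Tuple


def swaps_needed_on_grid(a: Tuple[int, int], b: Tuple[int, int]) -> int:
    """SWAPs needed to make two grid qubits adjacent.

    Assumption: qubits sit on a square grid with nearest-neighbor
    coupling, and one qubit is moved along a shortest (Manhattan) path.
    With all-to-all connectivity this overhead would be zero.
    """
    distance = abs(a[0] - b[0]) + abs(a[1] - b[1])
    if distance == 0:
        raise ValueError("a and b must be two distinct qubits")
    # Each SWAP moves the qubit one step; stop when the pair is adjacent.
    return distance - 1


if __name__ == "__main__":
    print(swaps_needed_on_grid((0, 0), (0, 1)))  # already neighbors
    print(swaps_needed_on_grid((0, 0), (3, 4)))  # opposite corners of a patch
```

The overhead grows with physical distance on a fixed grid, whereas a modality that can route qubits freely avoids paying it for every long-range interaction.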

Source: PsiQuantum

The end result is a quantum computer where computational cost scales with logical operations rather than qubit and gate counts. This grows more important as problems grow more complex in scale. According to PsiQuantum, its AVC delivers approximately 50x runtime reduction for factoring algorithms, compressing the RSA-2048 breaking time from months to roughly 9 hours using identical logical qubit counts. Additional claims include a 700x reduction in computational resources for breaking elliptic curve cryptography keys.

Market

Customer

PsiQuantum's commercial approach largely ignores traditional customer acquisition playbooks in favor of deep partnerships. In most cases, the company's "customers" function as collaborators who work to identify high-value computational challenges suitable for PsiQuantum's machines. As of 2025, most high-value problems remain concentrated in materials science, pharmaceuticals, finance, automotive, and cryptography.

For example, PsiQuantum partnered with Mercedes-Benz in 2023 to simulate Li-ion battery chemistry for Mercedes-Benz's future electric vehicle development. In the study, PsiQuantum analyzed the molecular behavior of fluoroethylene carbonate, a simulation that is computationally intractable for classical supercomputers, and showed that it could be completed in just 24 hours.

PsiQuantum also partnered with Mitsubishi Chemical Group and Mitsubishi UFJ Financial Group in 2024 to simulate photochromic molecules for energy-efficient materials. If better understood, these molecules could lead to future applications in smart windows and next-generation solar panels. More significantly, PsiQuantum has established a major partnership with Boehringer Ingelheim for pharmaceutical applications, demonstrating quantum advantages in molecular dynamics simulations that could revolutionize drug discovery processes.

PsiQuantum also partners with entire governments. In the US, it carries out research at university campuses and holds defense contracts with DARPA. The company has already participated in DARPA's US2QC (Underexplored Systems for Utility-Scale Quantum Computing) program and collaborated with the Air Force. In countries outside of the US, such as the United Kingdom and Australia, PsiQuantum is building quantum data centers for educational or research-focused partnerships.

Market Size

The quantum computing market is valued at $885 million in 2025. Forecasts cite an estimated CAGR of 19%, with estimates ranging from a conservative $1.26 billion market size by 2030 to an aggressive $12.6 billion market size by 2032. The minimal market value today reflects the research-heavy models of most quantum computing companies. The thesis for most quantum opportunities lies instead in the future potential these breakthroughs could unlock. For example, one analysis from McKinsey projects cumulative economic value creation of $1.3 trillion by 2035.
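Because the forecasts span such a wide range, it can help to work out the growth rate each endpoint implies. The sketch below uses the standard compound annual growth rate formula with the figures quoted above. Treating them as endpoints of a single growth curve starting in 2025 is an assumption, since published forecasts often use different base years and market definitions.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoints."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding `cagr` annually for `years` years."""
    return start_value * (1.0 + cagr) ** years


if __name__ == "__main__":
    base_2025 = 885e6  # market size quoted for 2025

    # Endpoints quoted in the text (assumed here to share the 2025 base).
    conservative = implied_cagr(base_2025, 1.26e9, years=5)   # by 2030
    aggressive = implied_cagr(base_2025, 12.6e9, years=7)     # by 2032
    print(f"Implied CAGR, conservative case: {conservative:.1%}")
    print(f"Implied CAGR, aggressive case:   {aggressive:.1%}")

    # What the quoted 19% rate would give by 2030 from the same base.
    print(f"2030 value at 19% CAGR: ${project(base_2025, 0.19, 5) / 1e9:.2f}B")
```

Comparing the implied rates with the quoted 19% makes it easier to judge how much each projection depends on its own base year and market definition.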

Competition

The main difference between any two quantum computing companies is qubit modality. A qubit can technically be any system that displays quantum properties (such as superposition) with two distinct states. For example, PsiQuantum uses photons that can be horizontally or vertically polarized as qubits. These photons can be manipulated and measured using optical components such as beam splitters and waveguides. Meanwhile, IonQ uses individual trapped ions that can have different energy levels depending on the ion’s valence electrons, resulting in two distinct states. Other qubit types include topological anyons, superconducting circuits, neutral atoms, and quantum dot qubits.

The chosen modality drastically affects the roadmap of a quantum company, as each presents a unique set of advantages and challenges concerning decoherence, error correction, and scalability. PsiQuantum’s photonic qubits can inherently operate at higher temperatures than other qubit types, simplifying the cooling infrastructure required. However, photons also suffer from transmission loss, which is difficult to mitigate, and single photons are difficult to generate reliably.

As of 2025, the mainstream approach is the use of superconducting circuits, which underpin the technology used by IBM, Google, AWS, Intel, Rigetti, IQM, and several other companies. Using Josephson junctions, a mature technology that is easily manufactured, the circuits exhibit discrete energy levels that can represent two distinct qubit states. However, superconducting circuits suffer from short coherence times due to highly sensitive qubits, are difficult to scale to higher qubit counts, require cryogenic operating temperatures of around 0.01 K (10 mK), and carry considerable QEC overhead.

Alternative qubit approaches, such as trapped ions and neutral atoms, also introduce distinct tradeoffs across decoherence, error correction, and scalability, and are omitted here for conciseness.

Hyperscaler Quantum Solutions

Google Quantum AI: Google Quantum AI is a division within Google that develops quantum computers with superconducting circuits as its qubit modality of choice. In 2019, it claimed quantum supremacy with its 53-qubit Sycamore processor, performing a task in seconds that it estimated would take a supercomputer millennia. More recently, in 2023, Google achieved QEC below a key threshold, showing that increasing physical qubit redundancy led to a more reliable logical qubit. Its Willow chip has 105 qubits. Additionally, Google's ecosystem includes its Cirq quantum software framework and its Quantum AI cloud platform, positioning it as a well-funded, vertically integrated competitor to PsiQuantum with a defined roadmap to reach a fault-tolerant 100K qubit quantum computer.

IBM Quantum: IBM Quantum is a division within IBM that aims to deliver a system that accurately runs 100 million gates on 200 logical qubits by 2029. Using superconducting circuits, IBM announced a 1.1K qubit Condor processor in December 2023, demonstrating a significant achievement in raw qubit count. Meanwhile, its 156-qubit Heron processor, announced in April 2025, prioritizes lower error rates and serves as the foundation for its modular Quantum System Two. This modular approach mirrors PsiQuantum’s strategy, though it is applied to a different physical modality. IBM also maintains its robust Qiskit software ecosystem, which has a community of over 550K developers.

Microsoft Azure Quantum: Microsoft Azure Quantum is pursuing a novel topological qubit modality, which is a high-risk but high-reward approach. The core hypothesis is that Majorana Zero Modes (MZMs) can be used to create qubits with built-in, inherent resilience to local noise, bypassing the QEC schemes required by competitors. Microsoft unveiled its first Majorana 1 chip in February 2025; it was designed to maintain eight topological qubits and is intended to be scalable to a million qubits on a single chip. Although Microsoft Azure Quantum is years behind PsiQuantum’s technological progress, its approach, if successful, could render PsiQuantum’s qubit innovations obsolete. In the meantime, Microsoft develops its own Azure Quantum cloud platform, providing access to partner hardware (including competitors like Quantinuum) and its Q# programming language.

Amazon Web Services (AWS): Amazon partially competes with PsiQuantum in two areas: primarily as a cloud platform provider and secondarily as a hardware developer. Its AWS Braket service is hardware-agnostic, allowing any quantum computing platform to join, thereby commoditizing access to multiple quantum processors. Effectively, AWS Braket has become a distribution channel for the industry. However, AWS has also developed its Ocelot quantum chip that utilizes "cat qubits," a type of bosonic qubit that inherently suppresses bit-flip errors. This allows for a much more efficient QEC scheme to correct the remaining phase-flip errors, which AWS claims could reduce the resource overhead for fault tolerance by up to 90%. Amazon’s Ocelot chip could therefore demonstrate a gain in QEC efficiency that challenges PsiQuantum's fault-tolerant photonic qubit modality.

Intel: Intel’s quantum computing effort is designed around its expertise in silicon design and manufacturing. Intel has chosen to work on silicon spin qubits, which encode quantum information in the spin of a single trapped electron. Because these qubits are compatible with existing semiconductor foundries, Intel is able to use existing, highly advanced CMOS fabrication facilities, which largely mirrors PsiQuantum's focus on standard silicon photonics foundries. Intel's latest Tunnel Falls chip, released in June 2023, is a 12-qubit chip made available to the research community. Furthermore, Intel has demonstrated the ability for these qubits to operate at slightly higher temperatures (around 1.6 K) than superconducting qubits. Overall, Intel directly competes with PsiQuantum on the promise of manufacturability and scalability, though Intel’s ability to deliver on those promises is unclear.

Direct Quantum Competitors

IonQ: IonQ was founded in 2015 and went public through a SPAC in 2021. IonQ pursues a trapped-ion qubit modality using ytterbium ions, which benefit from long coherence times. Its systems include the 25-qubit Aria and 36-qubit Forte with fidelities at around 99.4%. The company's roadmap includes the upcoming 64-qubit Tempo system, which aims for 99.9% fidelity. With already available systems integrated into major cloud platforms like AWS Braket and Azure Quantum, IonQ has demonstrated real-world usage, unlike PsiQuantum. By offering high-quality, stable qubits through established cloud channels, IonQ has been able to capture early market share and user access, which may pose a challenge to PsiQuantum in the future.

Xanadu: Xanadu is a Canadian startup founded in 2016, also in the photonic quantum computing domain. In January 2025, it unveiled Aurora, a room-temperature system with 12 qubits. Interestingly, Xanadu has chosen to create an agnostic, open-source software library called PennyLane, which has become a popular tool for quantum machine learning. Xanadu raised a $100 million Series C round in November 2022, reaching a $1 billion valuation.

Quantinuum: Quantinuum was founded in 2021 as a result of a merger of Honeywell Quantum Solutions and Cambridge Quantum. Using a trapped-ion qubit modality, it employs a Quantum Charge-Coupled Device (QCCD) architecture, which can move ions between specialized zones on a chip for storage, gates, and readout, enabling all-to-all connectivity. Its System Model H2 processor reached 56 qubits, while its partnership with Microsoft resulted in a breakthrough in QEC. However, compared to PsiQuantum, it has no demonstrable progress towards a large-scale qubit count system.

Rigetti Computing: Rigetti Computing was founded in 2013 and went public through a SPAC in 2021. Focusing on superconducting qubits, Rigetti has developed its Ankaa-3 system and has a 336-qubit Lyra system on its roadmap. However, its commercially available quantum processor is the Novera, which is a small 9-qubit system. As with Quantinuum, there seems to be no demonstrable progress towards a large-scale qubit count system akin to what PsiQuantum is developing.

Pasqal: Pasqal is a French quantum computing company founded in 2019, with Nobel Prize winner Alain Aspect on the founding team. Utilizing neutral atoms manipulated by lasers, the company has developed the Orion Gamma with 140 qubits and is planning to release the Vela with 200 qubits in 2027. Additionally, the company claims that its quantum processors are able to operate at room temperature.

QuEra: QuEra was founded in 2018 and develops neutral atom quantum computers using optical tweezers for qubit manipulation. The company raised $230 million in a funding round led by Google in February 2025. Its flagship computer, Aquila, has 256 qubits and is available through Amazon Braket.

D-Wave Quantum: D-Wave was founded in 1999 and develops quantum annealing systems for optimization problems. Its flagship system, the Advantage2 prototype, has 1.2K qubits, one of the highest numbers of qubits in a single quantum system. The company differs from PsiQuantum by focusing exclusively on commercial optimization applications rather than pursuing universal quantum computing.

Indirect Alternative Compute Startups

Cerebras: Cerebras was founded in 2016 to build what the company claims to be “the world’s largest chip”. Its flagship product, the Cerebras Wafer-Scale Engine (WSE), is described by the company as “the fastest AI processor on Earth” which “surpasses all other processors in AI-optimized cores, memory speed, and on-chip fabric bandwidth.” This chip, the size of a dinner plate, is capable of exploring combinatorially large problem spaces, much like quantum computers aim to do, but through brute-force parallelism.

Lightmatter: Lightmatter was founded in 2017 to develop optical processing chips. Using lasers, Lightmatter has developed an efficient platform to accelerate compute. With its Passage interconnect, Lightmatter gives data centers a cheaper and easier way to connect multiple GPUs used in AI processes while reducing the bottleneck between connected GPUs. This could be used in existing classical computing clusters to enable data centers to scale GPU clusters into million‑chip domains, effectively mirroring the massive parallelism seen in quantum systems.

Business Model

Despite having over $1 billion in funding, PsiQuantum is still pre-revenue and contracts its quantum computers primarily for research and future development purposes. Thus, it runs its quantum computers in-house and exposes an endpoint for users to experiment with drug discovery or materials science. The company deploys dedicated quantum algorithm teams to help translate complex business problems into quantum algorithms, performing rigorous resource analysis to quantify exact hardware requirements and ROI timelines.

Traction

In 2025, PsiQuantum announced its Omega chipset, the world’s first mass-producible chipset for quantum computing. The chipset, manufactured on GlobalFoundries’ 300mm silicon wafers, contains all the advanced components required to build million-qubit-scale quantum computers. This will enable the company to build out “millions of chips” for its two datacenter-sized quantum compute centers in Brisbane, Australia, and Chicago, Illinois.

Source: PsiQuantum

In a test demonstration of its Omega chipset, PsiQuantum announced that a collaboration with the pharmaceutical giant Boehringer Ingelheim had resulted in a more than 200x improvement in simulating important drugs and materials. In 2024, another collaboration with the Mitsubishi UFJ Financial Group aimed to simulate industrial molecules that could be used to improve smart windows, data storage, and solar cells.

Meanwhile, PsiQuantum is actively deploying an open-source software stack to incentivize developers to start using its platform, with QREF providing an open-source data format to encode quantum algorithms and Bartiq guiding developers through resource estimation for quantum algorithms.

PsiQuantum has also received significant traction from the US government. In 2022, PsiQuantum was awarded a $10.8 million contract with the Air Force Research Laboratory. The company has also advanced to the final stage of DARPA’s US2QC program. Additionally, the company is working with the DOE on proving the viability of existing cryogenic cooling solutions in the SLAC National Accelerator Laboratory.

PsiQuantum also received $940 million AUD from the Australian government to build the world's first quantum data center in Brisbane. The Australian partnership shows PsiQuantum’s gradual penetration into government partnerships as countries prepare for a comprehensive national quantum strategy. This partnership would include technology transfer, local manufacturing capabilities, and workforce development programs. PsiQuantum has also begun the construction of a second quantum data center in the United Kingdom.

Valuation

In September 2025, PsiQuantum announced it had raised a $1 billion Series E at a $7 billion valuation “to build the world’s first commercially useful, fault-tolerant quantum computers.” The company said that the round of funding would be used to “break ground on utility-scale quantum computing sites in Brisbane and Chicago” and to deploy “large-scale prototype systems”. The round was led by BlackRock, Temasek, and Baillie Gifford with participation from Nvidia’s venture capital arm NVentures, Macquarie Capital, and Ribbit Capital.

This round of funding took place just six months after PsiQuantum raised a $750 million round of funding from BlackRock at a $6 billion valuation in March 2025. Prior to this, in July 2021, PsiQuantum raised a Series D at a $3.2 billion valuation. Overall, PsiQuantum has raised a total of $2.3 billion in funding as of September 2025.

Key Opportunities

Quantum Compute as a Service

The quantum computing as a service market reached $1.9 billion in 2023, with increasing enterprise adoption through cloud platforms including Amazon Braket, IBM Quantum Network, and Microsoft Azure Quantum. These platforms reduce the capital barriers that otherwise would restrict quantum computing access to research institutions and medium-sized corporations. With pay-per-use models, even startups working in the relevant fields, such as drug design, could experiment with quantum algorithms.

PsiQuantum's data center-scale systems allow the company to integrate its processors directly with existing cloud infrastructure without requiring specialized quantum facilities. For that reason, PsiQuantum is positioned to rapidly build out more data center systems, which will then offer better pricing for companies compared to small-scale systems developed by other competitors.

Transpiler & Algorithmic Codesign First Mover Advantage

Because quantum computing remains a niche expertise, enterprise adoption of quantum algorithms will remain difficult: developers lack expertise in quantum gate programming and hardware-specific optimization requirements. However, this skills gap creates an opportunity for quantum hardware companies to establish algorithmic partnerships during the pre-commercial phase, positioning themselves as the default platform when enterprises eventually transition from research and testing to production quantum applications.

PsiQuantum has been pursuing these early-stage algorithm co-development partnerships already. For example, the company has collaborations with pharmaceutical companies like Boehringer Ingelheim for drug discovery applications. These partnerships produce domain-specific quantum algorithms optimized for PsiQuantum's architecture while training enterprise researchers in FBQC programming paradigms rather than gate-based approaches used by superconducting competitors.

These early algorithm partnerships will eventually establish switching costs among enterprises looking to adopt quantum computing. As enterprise teams developing quantum applications through PsiQuantum collaborations gain specialized knowledge of its architecture, they will find it difficult to transfer these skills to alternative quantum architectures. Similar to Autodesk or Adobe’s partnerships with university courses, partnering early enables PsiQuantum to build lock-in effects where enterprises choose PsiQuantum not because of hardware performance, but because of human capital expertise.

Key Risks

Scaled Photonic Quantum

Even after nearly a decade of engineering, PsiQuantum still faces fundamental technical challenges in scaling photonic quantum systems to the million-qubit threshold required for commercial viability. Photons experience significant loss rates as they traverse optical components, including waveguides, switches, fibers, and detection systems. Achieving acceptable photon loss rates across millions of integrated components is still unproven by the company, even with its novel FBQC architecture.

Additionally, PsiQuantum's architecture depends on generating high-quality single photons with precise efficiency, purity, and indistinguishability specifications while detecting them with near-perfect fidelity and minimal noise. The company's Omega chipset reports detection fidelities of 99.98%, but consistently achieving these specifications across millions of superconducting nanowire detectors in a manufacturing environment is also unproven. The cryogenic cooling requirements for these detectors at 4 K also add operational complexity that could compound system-wide error rates, although 4 K is significantly easier to maintain than the 0.01 K needed by companies building superconducting qubits.

NISQ Sufficiency

PsiQuantum has adopted an "all-or-nothing" strategy by bypassing the NISQ philosophy to focus exclusively on large-scale fault-tolerant machines. However, there is a risk that NISQ computers become good enough for a range of commercially valuable problems, undermining the business case for its vastly more expensive and longer-term solution.

Hypothetically, suppose that by 2030 a competitor like IBM or Google has developed a 1K qubit NISQ machine with advanced QEC. If the competitor is able to demonstrate a passable quantum advantage for a specific task, like portfolio optimization, it would still generate millions in value, undermining the need for expensive PsiQuantum systems in favor of a cheap and usable NISQ computer. The risk here is that PsiQuantum finds itself building overly complex systems while competitors are profitably using simpler, albeit less powerful, quantum computers.

Overstated Quantum Supremacy

The quantum computing industry faces mounting skepticism regarding practical applications despite approximately $4 billion in hardware startup funding. According to Nikita Gourianov at Oxford University, the quantum computing sector has been misled by researchers who have overstated technological capabilities to secure venture capital funding and private-sector compensation for academic research. Gourianov notes that "despite years of effort, nobody has yet come close to building a quantum machine that is actually capable of solving practical problems."

The fundamental uncertainty surrounding quantum computing timelines compounds these concerns. For over 40 years, researchers have consistently projected quantum computing breakthroughs within a 10-year horizon, yet these predictions have repeatedly failed to materialize. Whether PsiQuantum's current 5-year commercialization timeline represents genuine technical feasibility or another iteration of perpetual optimism remains unclear. Many core quantum computing obstacles are scientific rather than engineering in nature, and the industry has not demonstrated that these fundamental scientific problems can be solved by engineering approaches. Additionally, no commercially useful quantum computing applications currently exist, with all performance benchmarks remaining largely theoretical rather than demonstrating real-world utility.

PsiQuantum's pre-revenue model currently depends on consulting services and research partnerships rather than actual quantum computational deployments, highlighting the industry-wide absence of commercially viable quantum applications. Furthermore, the company previously projected that commercial fault-tolerant systems would be operational by 2025, which has now been pushed back to 2029. If quantum computing fails to demonstrate clear practical utility within the next ten years, investor confidence could collapse across the sector, threatening another quantum winter.

Summary

PsiQuantum is one of the few quantum computing companies pursuing million-qubit fault-tolerant systems while competitors continue to operate under the NISQ philosophy. Through years of research, the company's photonic approach has managed to overcome scalability limitations through semiconductor foundry compatibility and produce a novel FBQC architecture that can tolerate high error rates during computation. With $2.3 billion in funding as of September 2025, PsiQuantum is the most funded quantum computing company in history and has secured partnerships with major companies like Mercedes-Benz and governments around the world. However, even after nearly a decade of engineering, the company's moonshot bet remains unproven in real-world tasks and has yet to deliver a readily available enterprise solution. PsiQuantum's success will come down to whether quantum computing's transistor moment lies in photonic qubits and whether the company can deliver on its timeline before competitors demonstrate commercially viable alternatives.