A few weeks ago, we unpacked the history and potential future of autonomous vehicles in our deep dive, The Trillion-Dollar Battle To Build a Robotaxi Empire. Now, the battle to become the leading robotaxi company is heating up. On June 22, 2025, Tesla launched its long-awaited robotaxi service with a fleet of 10 cars in downtown Austin. The launch took place 10 days after its originally scheduled launch date, which Elon Musk explained was due to Tesla “being super paranoid about safety.”

Elon was probably right to be concerned about safety, especially considering the strong safety record of Tesla’s chief rival in the robotaxi market, Waymo, which uses a suite of sensors, including cameras, lidar, and radar, to help its autonomous vehicles navigate. Tesla, on the other hand, is an outlier in the industry that relies on cameras alone. Elon has argued that lidar and radar are too expensive, not as scalable, and not necessary for safety, and Tesla is betting that with enough data and compute, cameras alone can solve self-driving. Achieving a similar safety standard to Waymo will be necessary for Tesla to win that bet.

Analysis of the future of autonomous vehicles (AVs) often focuses on the end state of the market and on which players will establish a dominant position in a trillion-dollar-plus market that is up for grabs. But the more immediate and pressing question is which approach will define that future: the approach used by Waymo and all the other robotaxi players, which relies on lidar, or Tesla’s approach of using cameras alone, aided by compute and data?

Waymo’s “Conventional” Approach to Self-Driving

Waymo's approach to self-driving is defined by three key characteristics:

Waymo’s sensor stack: a full suite of sensors, including cameras, lidar, radar, and microphones.

The Waymo driver model: a modularized, transformer-based model that uses data from its sensors to make decisions.

Waymo’s operational design domains: Waymo relies on mapping to operate in specific geo-fenced areas.

Understanding how each of these works paints a picture of Waymo’s distinctive approach to autonomy.

Waymo’s Sensor Stack

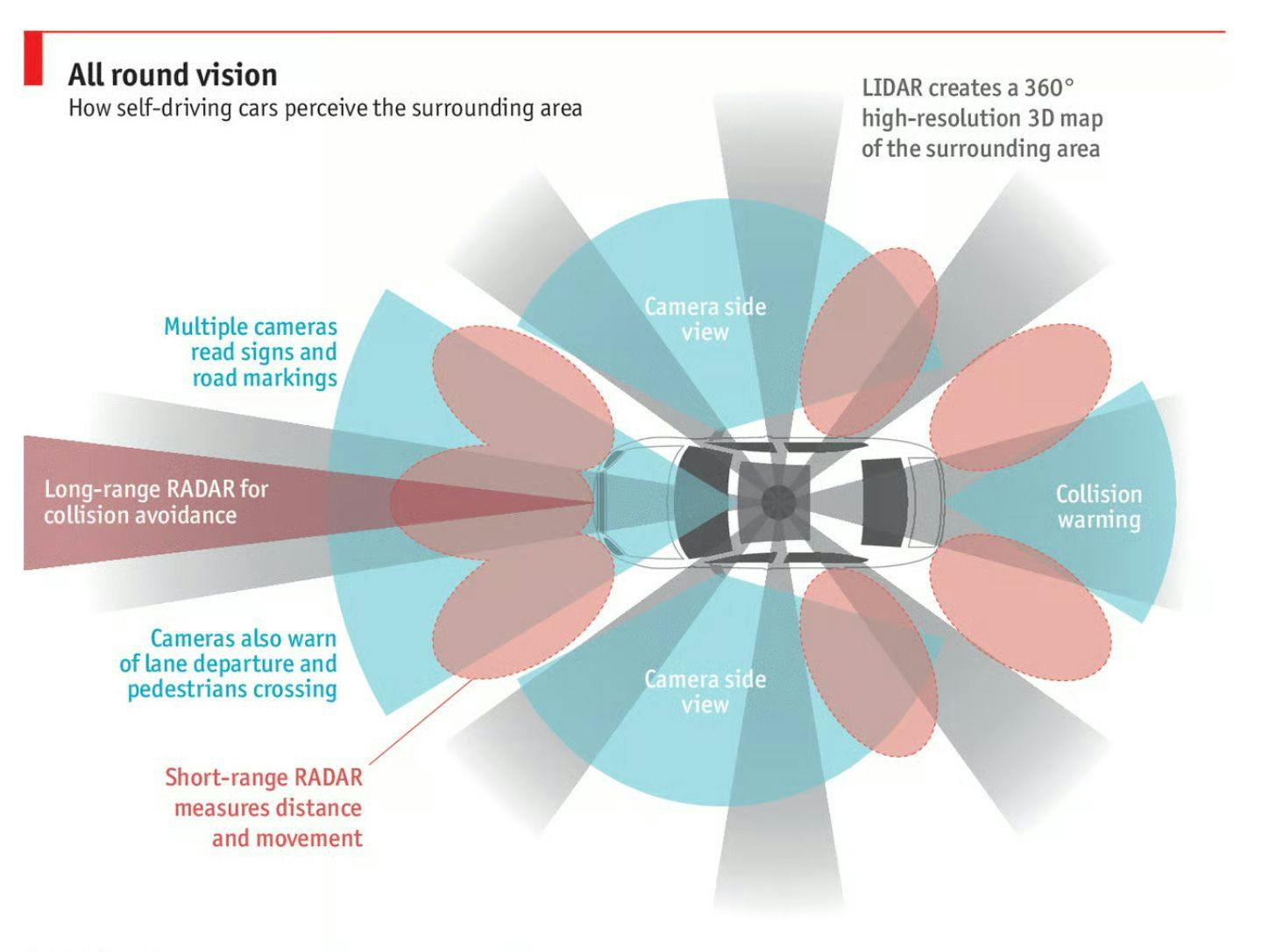

Source: The Economist

Waymo, similar to other robotaxi companies like Baidu, Zoox, and Pony.ai, utilizes a combination of sensors to perceive and navigate its environment while ensuring redundancy in case some sensors are not functioning or are blocked. These sensors include:

Cameras that capture and identify objects that are recognized in context (construction zones, traffic lights, and signs).

Radar and ultrasonic sensors that send electromagnetic and sound pulses to identify nearby objects, distances, and velocities.

Waymo external audio receivers (EARs), which are microphones placed around the vehicle to detect and triangulate important sounds, including emergency vehicle sirens.

Lidar, a remote sensor that sends laser pulses in all directions to create a constantly updated 3D map of surroundings based on the delay between emitted pulses and their registered reflections.
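The time-of-flight idea behind lidar can be illustrated with a few lines of arithmetic. The sketch below is a minimal illustration, not Waymo's implementation: the function name `range_from_round_trip` and the example delay are assumptions chosen for clarity. It converts the delay between an emitted pulse and its registered reflection into a distance, halving the result because the pulse travels to the object and back.

```python
# Speed of light in a vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(delay_s: float) -> float:
    """Return the distance (in metres) to a reflecting surface.

    delay_s is the time between emitting a laser pulse and registering
    its reflection. The pulse covers the distance twice, so we halve it.
    """
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * delay_s / 2.0


if __name__ == "__main__":
    # Hypothetical example: a reflection registered 400 nanoseconds after emission.
    delay = 400e-9
    print(f"{range_from_round_trip(delay):.2f} m")
```

A delay of 400 nanoseconds works out to roughly 60 meters, which shows why lidar timing has to be precise: a nanosecond of error corresponds to about 15 centimeters of range.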

One of the key advantages of a multi-sensor stack like Waymo’s is the ability to collect both two- and three-dimensional data, each of which is ultimately used to describe the three-dimensional world the vehicle is navigating. Waymo’s sensor suite theoretically allows it to operate more effectively in visibility-limited conditions where cameras alone may be insufficient, like snowy weather, though it hadn’t rolled out operations to any such locations as of June 2025.

The Waymo Driver Model

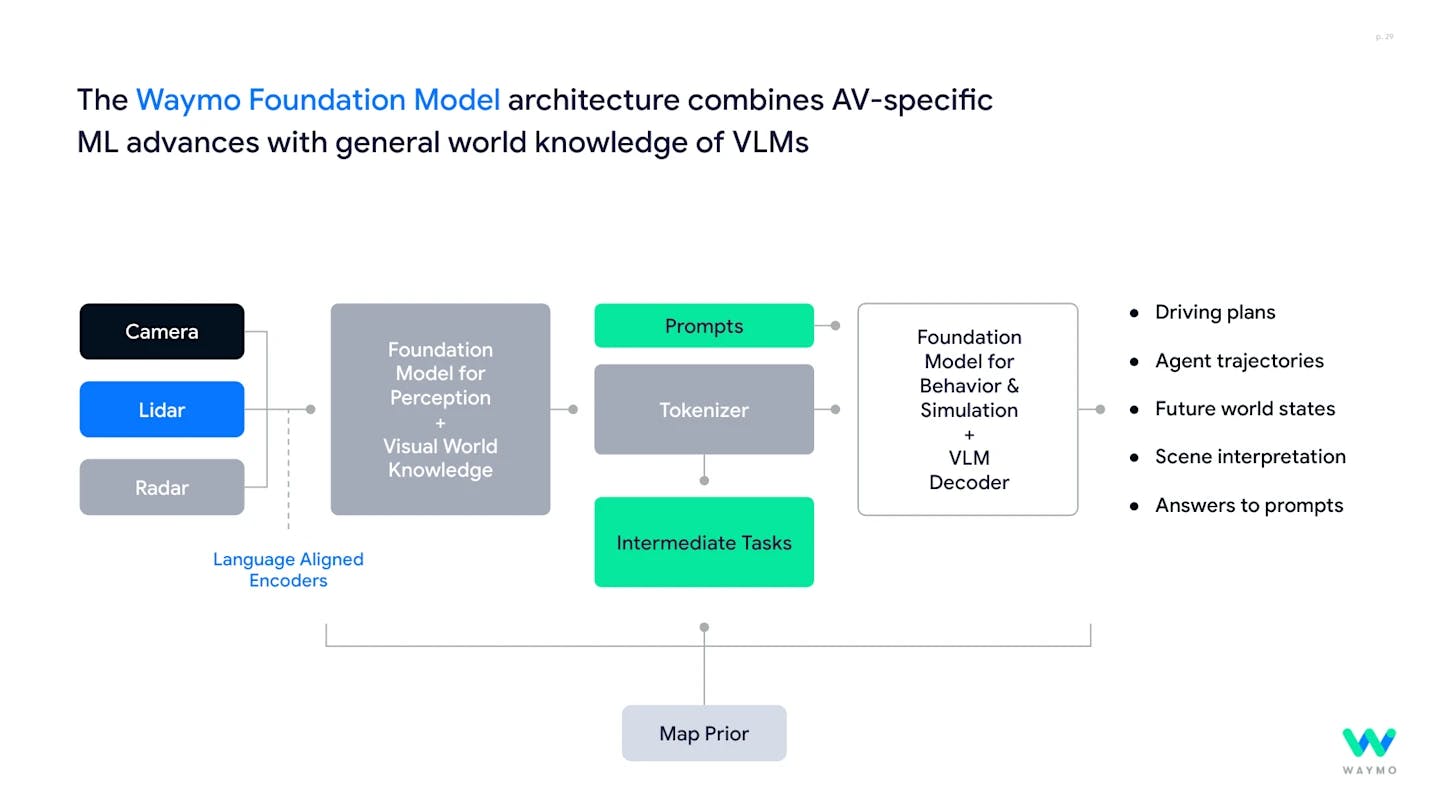

The AI model powering Waymo Driver is the Waymo Foundation Model, which is based on a combination of LLMs and VLMs (vision-language models). Waymo’s foundation model is a modular transformer-based neural network, meaning different models contribute to perception, prediction, decision-making, and control, with one model’s outputs acting as inputs to the next. While each constituent model in the complete Foundation Model is separately parametrized and calibrated, the decision-making process is flexible and not hard-coded, as some Waymo skeptics have claimed.

Source: Waymo

Waymo’s research team is also exploring an End-to-End Multimodal Model for Autonomous Driving, or EMMA, that uses a multi-modal large language model foundation to map directly from raw camera input without using lidar or radar. However, as of June 2025, Waymo hadn’t said if or when EMMA would be used in the Waymo Driver.

Mapping Dependencies

As of June 2025, Waymo vehicles operated only in geofenced regions of cities, or Operational Design Domains (ODDs), that have been 3D-mapped using lidar. Waymo vehicles can operate in locations that haven’t been mapped, and often test in such locations, but don’t operate commercially in non-mapped locations.

Waymo’s maps enable its cars to identify their location even if GPS services are interrupted and allow the onboard lidar sensors to continually triangulate the vehicle’s location. Waymo’s map generation process requires driving vehicles outfitted with lidar sensors down each street in the months ahead of launch in a new city, a process that some critics have suggested is expensive and time-consuming. However, one estimate put the cost at several million dollars per city, a relatively low up-front expense to unlock a new customer base.

Tesla’s Contrarian Bets

Tesla's strategy is different from Waymo’s in a few key ways:

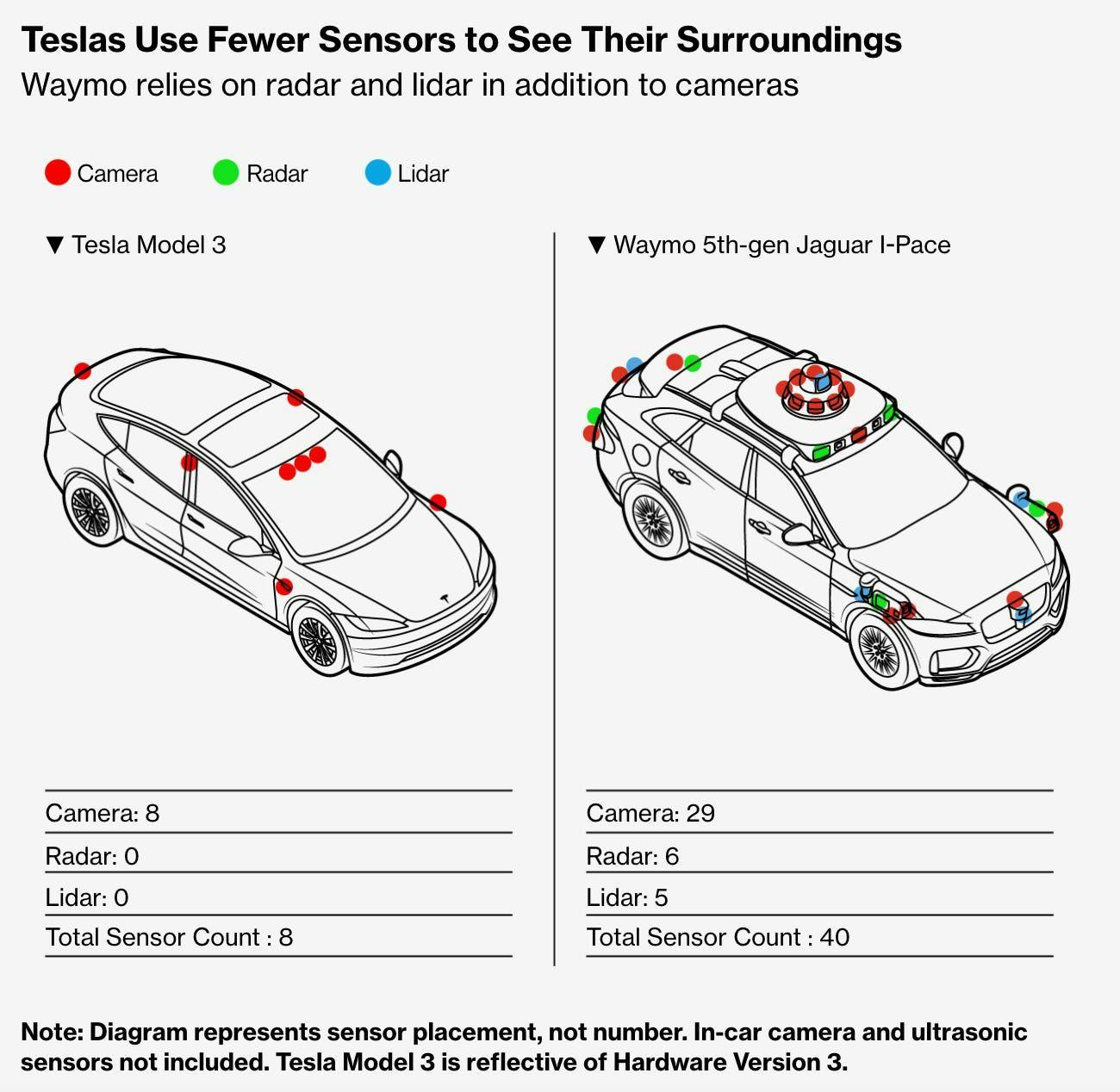

It only uses cameras, unlike Waymo’s camera, lidar, and radar suite.

It uses a single neural net model based on the camera data, avoiding the modular approach Waymo uses.

It relies on tens of billions of miles of camera data each year from its existing vehicles on the road, compared to Waymo’s millions of miles each year.

Why Tesla Only Uses Cameras

In lieu of using lidar or radar, Tesla’s autonomous technology relies entirely on a suite of eight cameras to make driving decisions. In contrast, Waymo’s fifth-generation car has 29 cameras, six radar sensors, and five lidar sensors.

Source: Bloomberg

Tesla has given a few reasons for not using lidar.

First, Tesla has argued that lidar is not necessary. As early as 2013, Elon expressed skepticism about the need for lidar in autonomous vehicles. Elon explained the reasoning for this in 2021:

“Humans drive with eyes & biological neural nets, so makes sense that cameras and silicon neural nets are only way to achieve generalized solution to self-driving.”

Elon remained skeptical of lidar in 2025. When pressed on an earnings call in January 2025 about whether he still sees lidar as a “crutch,” he reiterated his belief in the all-camera approach, saying that “Obviously, humans drive without shooting lasers out of their eyes.”

Second, Tesla has argued that lidar is too costly. Elon first called lidar too expensive in the early 2010s, when it cost ~$75K per unit. Costs have come down significantly, but using lidar (and radar) is still pricier than using cameras. Waymo’s fifth-generation sensor suite across cameras, lidar, and radar was estimated to cost $12.7K combined in 2024, compared to only $400 per vehicle for Tesla. That said, costs may continue to fall, given that some Chinese lidar makers were pricing units as low as $200 each as of March 2025.
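To put the per-vehicle figures in perspective, the short sketch below works through the arithmetic. The two cost constants come from the estimates quoted above; the fleet size of 1,000 vehicles and the helper names are purely hypothetical and are used only to show how the gap scales.

```python
# Per-vehicle sensor cost estimates quoted in the text (USD).
WAYMO_SENSOR_COST_USD = 12_700
TESLA_SENSOR_COST_USD = 400


def cost_ratio(higher: float, lower: float) -> float:
    """How many times more expensive one sensor suite is than the other."""
    return higher / lower


def fleet_sensor_cost(per_vehicle_usd: float, fleet_size: int) -> float:
    """Total sensor spend for a fleet of the given size."""
    return per_vehicle_usd * fleet_size


if __name__ == "__main__":
    ratio = cost_ratio(WAYMO_SENSOR_COST_USD, TESLA_SENSOR_COST_USD)
    print(f"Cost ratio: {ratio:.2f}x")

    # Hypothetical fleet size, chosen only for illustration.
    fleet_size = 1_000
    gap = (fleet_sensor_cost(WAYMO_SENSOR_COST_USD, fleet_size)
           - fleet_sensor_cost(TESLA_SENSOR_COST_USD, fleet_size))
    print(f"Extra sensor spend for {fleet_size} vehicles: ${gap:,.0f}")
```

On these estimates the multi-sensor suite costs about 32 times as much per vehicle, a gap that would narrow quickly if lidar prices keep falling toward the levels some Chinese suppliers have quoted.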

Third, Tesla believes cameras work well enough to avoid the need for prebuilt maps. Because Tesla trains its vision system based on real-time road perceptions, it skips the centimeter-level HD maps that Waymo spends significant time creating before launching in a city. Tesla’s system doesn’t have zero reliance on maps, but it's designed to be able to operate purely on vision if no route or map is available.

Tesla’s End-To-End Driving Model

The cameras Tesla uses power an underlying foundation model that also differs from Waymo’s. Like Waymo’s foundation model, Tesla's self-driving model is trained end-to-end. It differs, however, in being a single large model going from camera inputs directly to motion control outputs, compared to Waymo’s modular combination of intermediate neural networks.
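The structural difference between the two designs can be sketched in code. What follows is a toy illustration only, not either company's software: every function, the one-dimensional "frame," and the stand-in model are assumptions made up for this example. The modular version chains separate perception, prediction, planning, and control stages, each feeding the next; the end-to-end version hands the raw input to a single model that returns a control output directly.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Control:
    steering: float  # toy units
    throttle: float  # 0.0 = stop, 1.0 = full speed


# --- Modular design: separate stages, each with an inspectable output ---

def perceive(frame: List[float]) -> List[float]:
    """Toy perception: report the positions of bright 'pixels' as objects."""
    return [float(i) for i, value in enumerate(frame) if value > 0.5]


def predict(objects: List[float]) -> List[float]:
    """Toy prediction: assume every object moves one cell to the right."""
    return [position + 1.0 for position in objects]


def plan(predicted: List[float], lane_center: float) -> float:
    """Toy planner: stop if any predicted object is near our lane."""
    blocked = any(abs(p - lane_center) <= 2.0 for p in predicted)
    return 0.0 if blocked else 1.0


def control(target_speed: float) -> Control:
    """Toy controller: drive straight at the planned speed."""
    return Control(steering=0.0, throttle=target_speed)


def modular_drive(frame: List[float], lane_center: float = 4.0) -> Control:
    return control(plan(predict(perceive(frame)), lane_center))


# --- End-to-end design: one model maps raw input straight to controls ---

def end_to_end_drive(frame: List[float],
                     model: Callable[[List[float]], Control]) -> Control:
    return model(frame)


if __name__ == "__main__":
    frame = [0.0, 0.0, 0.0, 0.9, 0.0, 0.0, 0.0, 0.0]
    print(modular_drive(frame))

    # Stand-in for a learned network; a real one would be trained, not hand-written.
    toy_model = lambda f: Control(steering=0.0, throttle=1.0 - max(f))
    print(end_to_end_drive(frame, toy_model))
```

The trade-off the sketch hints at: the modular pipeline exposes intermediate results that engineers can inspect and tune, while the end-to-end model has no such seams and depends entirely on what it learned from data.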

Until 2024, Tesla’s Full Self-Driving (FSD) software was also modular. But in March 2024, Tesla’s FSD v12 scrapped the old hand-off between perception and planning/control and replaced it with a single video transformer that ingests footage from the car’s eight surround cameras. By moving to a single end-to-end neural network, Tesla cut “>300k lines of C++ control code by ~2 orders of magnitude.”

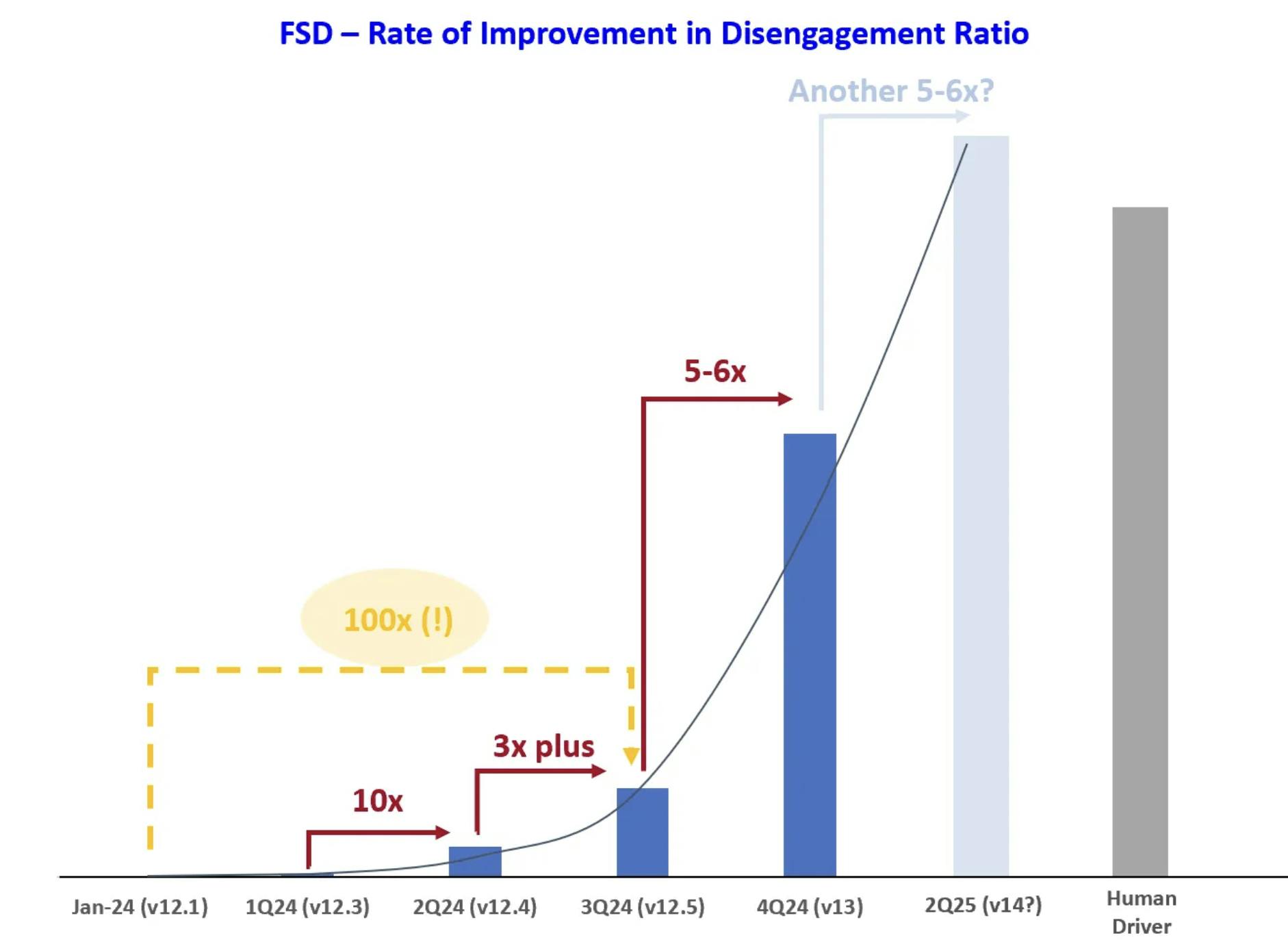

The new model seems to be working for Tesla. Since launching v12, Elon has claimed huge improvements in FSD’s disengagement ratio, or how often the human driver has to intervene and take back control. One unverified estimate in October 2024 put the improvement at 100x so far that year and projected a further improvement in the next quarter.

Source: Freda Duan

Tesla’s Data Edge

Tesla has also argued that it has an underlying data edge that will help its model keep progressing rapidly. All Teslas built since 2016 operate in “shadow mode,” where the system quietly predicts what it would have done at each moment and flags cases where its chosen action diverges from the human driver’s. That gives Tesla an estimated 50 billion miles each year to train on, compared to the 71 million rider-only miles Waymo has done in its history through March 2025. To train its models on all this data, Tesla has been investing heavily in “supercomputers” that it plans to have powered by the custom chips Tesla is making in-house.
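The shadow-mode idea, comparing what the model would have done against what the human actually did, can be sketched as follows. This is a hypothetical illustration rather than Tesla's implementation: the `Action` fields, the tolerance values, and the function names are all assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Action:
    steering_deg: float  # steering angle in degrees
    accel_mps2: float    # acceleration in m/s^2 (negative = braking)


def diverges(model: Action, human: Action,
             steer_tol_deg: float = 5.0,
             accel_tol_mps2: float = 1.0) -> bool:
    """True if the model's proposed action differs meaningfully from the human's.

    The tolerances are illustrative guesses, not published values.
    """
    return (abs(model.steering_deg - human.steering_deg) > steer_tol_deg
            or abs(model.accel_mps2 - human.accel_mps2) > accel_tol_mps2)


def flag_divergences(model_actions: List[Action],
                     human_actions: List[Action]) -> List[int]:
    """Return the time-step indices where model and human disagree."""
    return [i for i, (m, h) in enumerate(zip(model_actions, human_actions))
            if diverges(m, h)]


if __name__ == "__main__":
    model_log = [Action(0.0, 0.0), Action(2.0, 0.5), Action(0.0, -3.0)]
    human_log = [Action(0.0, 0.0), Action(10.0, 0.5), Action(0.0, -0.5)]
    print(flag_divergences(model_log, human_log))  # prints [1, 2]
```

In a scheme like this, only the flagged moments would need to be uploaded and reviewed, which is one plausible way a large fleet could surface rare, informative situations without storing every mile driven.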

Are Cameras Alone Sufficient?

Tesla’s approach to self-driving may be cheaper and easier to scale, but safety remains a key question. Waymo, for example, has expressed doubts that a camera-only approach can deliver the safety levels needed to successfully deploy autonomous vehicles at scale.

Driving mistakes like circling a parking lot or getting stuck in a drive-through are one thing, but safety incidents can represent an existential threat to an autonomous driving company. Just ask Cruise, which shut down in December 2024 in the aftermath of an October 2023 incident: a pedestrian was struck by a human-operated vehicle and landed in front of an autonomous Cruise vehicle, which then dragged her 20 feet before stopping on her leg, causing serious injury. In the competition between Tesla’s and Waymo’s approaches, safety may be the decisive factor in the outcome.

Waymo’s Safety Record

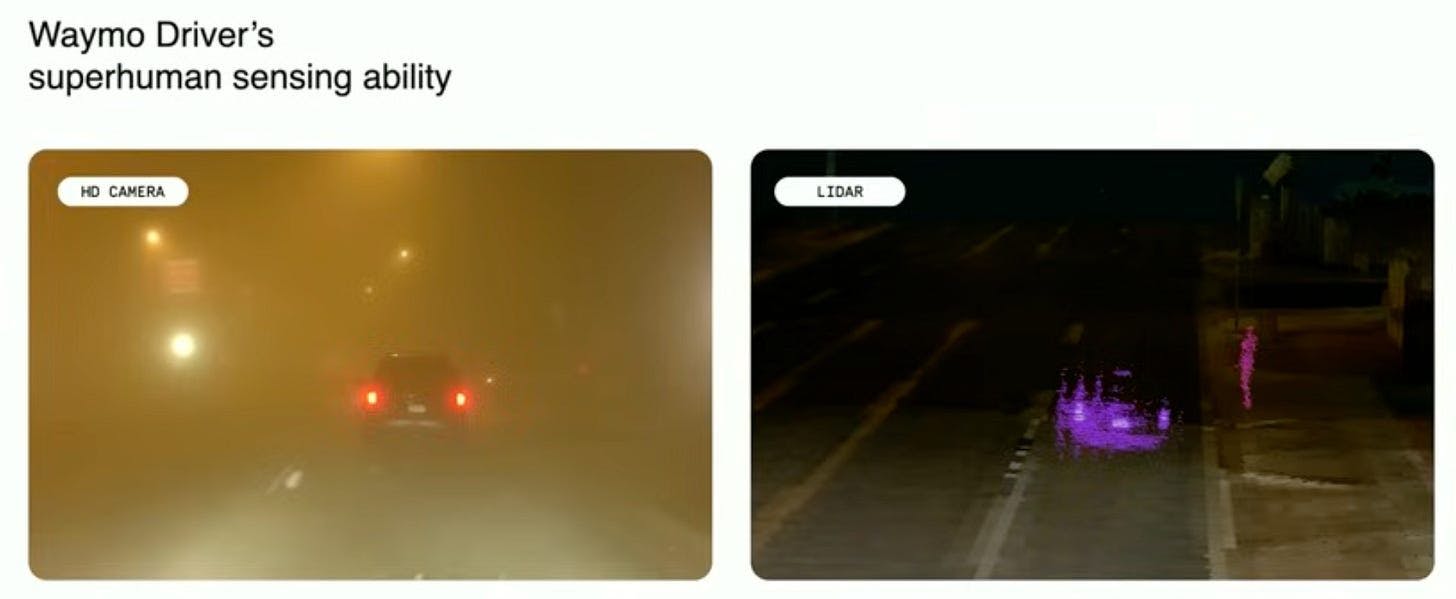

At Google I/O in May 2025, Waymo showed a few examples of its full suite of sensors successfully avoiding pedestrians in cases where it claimed a camera-only approach would have struggled. In one example, Waymo's lidar picked up the presence of a pedestrian in a Phoenix dust storm that was not visible on the camera.

Source: Google I/O

In another example, Waymo’s sensors were able to detect a pedestrian who was behind a bus and avoid a collision:

Source: Google I/O

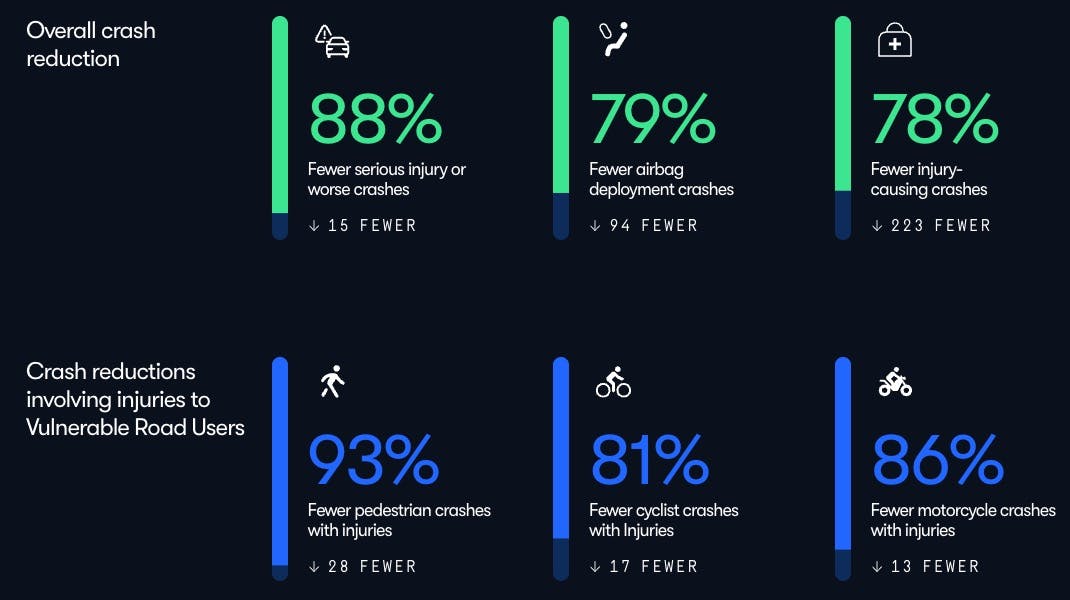

Beyond these anecdotes, Waymo has the safety record and data to support its claims that its sensor suite has led to enhanced safety. Waymo has published rider-only (RO) vehicle safety statistics relative to human drivers in the same cities where it operates, and has a safety record that is better than average human drivers across all metrics incorporated in the study. Additionally, a December 2024 study by Swiss Re showed Waymo had an 88% reduction in property damage claims and a 92% reduction in bodily injury claims when compared to human-driven vehicles.

Source: Waymo Safety

When an injury does occur involving a Waymo car, it's typically the fault of other drivers. A February 2023 safety report detailing Waymo’s first million miles of fully driverless operation noted that 55% of all incidents were human drivers colliding with stationary Waymo vehicles. Waymo has also had only one fatal accident in its history, caused (ironically) by a Tesla striking an unoccupied Waymo and other cars at a red light, killing one person.

Tesla’s Safety Record

Tesla does not yet have safety data for its autonomous fleet comparable to Waymo’s, but there have been incidents in recent years involving Tesla’s FSD capabilities, where some have claimed its lack of additional sensors contributed to accidents and required more frequent intervention.

For example, in 2023, a Tesla in FSD mode hit a 71-year-old woman at highway speed, killing her. Video of the crash shows sun glare appearing to blind the camera, and the National Highway Traffic Safety Administration (NHTSA) opened an investigation into Tesla in October 2024 for four total FSD collisions that occurred in low-visibility situations.

There have been reports of similar issues with Tesla’s older Autopilot system. In April 2024, for example, the NHTSA released an analysis of 956 crashes where Tesla’s Autopilot was thought to have been in use. It found that the Autopilot system had a “critical safety gap” which contributed to at least 467 collisions, 13 of which resulted in fatalities.

More recently, in June 2025, the public-safety group The Dawn Project reenacted a March 2023 crash where a Tesla struck a child after driving past a stopped school bus. It found that, two years later, the same issue remained unresolved: a Tesla with FSD enabled would still run down a child crossing the road while illegally going past a stopped school bus with its red lights flashing and stop sign extended.

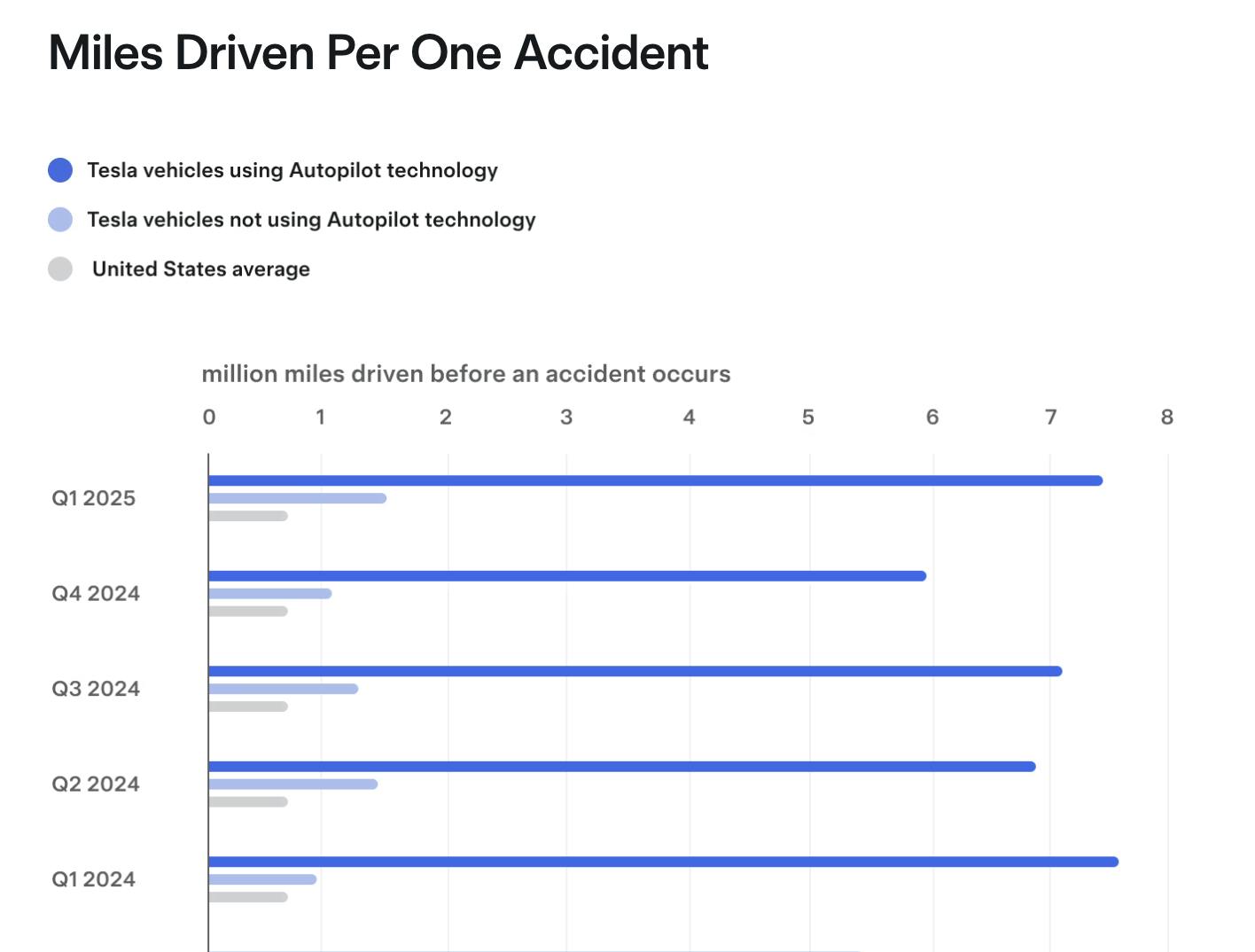

Elon has argued that all driving has risks and, on a relative basis, Tesla’s Autopilot technology is much less risky than a human driver. Tesla’s Vehicle Safety Report claims Autopilot drives over 7x more miles before an accident occurs compared to the US average.

Source: Tesla

But this comparison isn’t apples-to-apples with other self-driving technologies. Tesla’s report combines miles driven under Autopilot and Tesla FSD, both systems that require driver attention at all times. Tesla Autopilot data is also primarily from highway driving, where the system is most often used and where accidents are less frequent. The Tesla data also only includes events in which an airbag or seat-belt pretensioner is deployed, but leaves out fender-benders, curb strikes, and other Advanced Driver Assistance Systems (ADAS) incidents.

There’s unlikely to be a real apples-to-apples safety comparison between Tesla and Waymo until Tesla has scaled up its L4 robotaxi program in a comparable way. Tesla’s Austin robotaxi launch in June 2025 was only a small step in that direction, and early results have been mixed.

Tesla launched 10 vehicles in a geofenced area in Austin, and all vehicles had a safety driver in the passenger seat. The vehicles used in the launch were Model Ys, not the Cybercabs the company previewed in October 2024. While no major incidents occurred, videos showed vehicles driving on the wrong side of the road, several incidents of “phantom braking,” vehicles pulling over in the middle of an intersection, and safety drivers intervening.

In response to these incidents, the NHTSA has requested that Tesla submit responses to a number of questions surrounding FSD incident data, and Tesla has asked that the NHTSA not make its responses public.

Self-Driving and the “Bitter Lesson”

Even if Tesla is behind on its safety record today, the more important question is whether Tesla’s strategy is the right long-term bet. With Tesla’s FSD getting better each year, it matters less where Tesla is today and more where it will be in a few years.

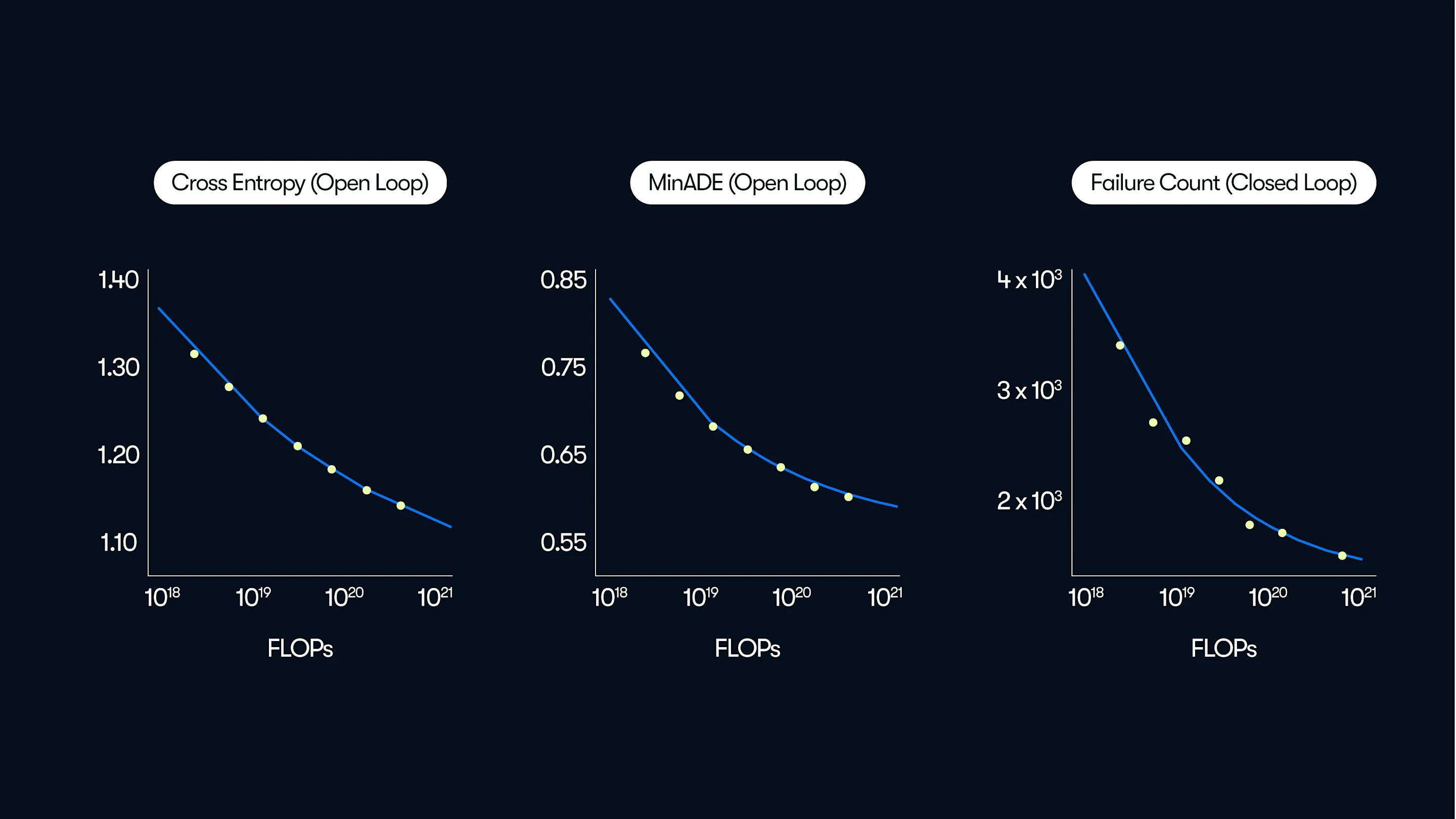

In a way, Elon’s strategy is a bet that the same scaling laws that created LLMs will lead to self-driving cars working too. As Richard Sutton argues in his piece The Bitter Lesson, general-purpose methods that exploit ever-growing computation and data usually outperform systems packed with hand-engineered, domain-specific knowledge. Recent research from Waymo confirms what has been known for a long time: scaling laws exist in self-driving, too, and more compute leads to better outcomes.

Source: Waymo

It’s also possible that the solution to self-driving won’t even require particularly specialized models at all. Sam Altman said in June 2025 that OpenAI has a “new technology that could just do self-driving for standard cars way better than any current approach has worked.” Altman didn’t provide any more details, but this is another datapoint in support of Tesla’s strategy that enough data and compute could solve self-driving.

Why Tesla’s Data Advantage Isn’t a Silver Bullet

But this framework might be too simple. AI reporter Timothy Lee has argued that Tesla’s "huge data advantage isn’t a silver bullet" for a few reasons.

First, the dimensionality, quality, and volume of data matter. Waymo uses lidar sensors to capture three-dimensional images, whereas Tesla cameras only capture the world in two dimensions and must map that data to a 3D world. Additionally, the FSD intervention or disengagement data Tesla has collected may be noisy. Only some interventions are due to FSD mistakes, so false positives may be generated when drivers, for example, pull over for a rest stop.

Second, there are limits to pure imitation learning, and watching humans drive might not be enough. Lee brought up one example where researchers tried to teach an AI model to play an open-source Mario Kart clone called SuperTuxKart by training it on human gameplay, which proved challenging:

“When Ross played the game, he mostly kept the car near the center of the track and pointed in the right direction. So as long as the AI stayed near the center of the track, it mostly made the right decisions. But once in a while, the AI would make a small mistake—say, swerving a bit too far to the right. Then it would be in a situation that was a bit different from its training data. That would make it more likely to make another error—like continuing even further to the right. This would take the vehicle even further away from the distribution of training examples. So errors tended to snowball until the vehicle veered off the road altogether.”

The paper describing this experiment was published in 2011. However, Lee argued in September 2024 that the same limitations apply to LLMs despite all the progress that has occurred in AI in the past 13 years, because LLMs still lack the type of reliability we’d expect for self-driving cars and still show the same “run-off-the-rails” behavior in long conversations.
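The snowballing effect Lee describes can be reproduced with a toy simulation. The sketch below is not the SuperTuxKart experiment; it is a deliberately simple one-dimensional model with made-up numbers. It assumes a cloned policy that matches the expert whenever the car is within the range of offsets seen in training (`train_radius`) and makes errors that grow the further outside that range it drifts (`error_gain`). Both parameters are hypothetical.

```python
from typing import Callable, List


def expert_steer(offset: float) -> float:
    """Expert policy: steer halfway back toward the track center each step."""
    return -0.5 * offset


def cloned_steer(offset: float,
                 train_radius: float = 0.5,
                 error_gain: float = 2.0) -> float:
    """Imitation policy: matches the expert inside the training range.

    Outside that range it adds an error proportional to how far it has
    strayed from anything it saw during training (an assumed error model).
    """
    excess = max(0.0, abs(offset) - train_radius)
    sign = 1.0 if offset >= 0 else -1.0
    return expert_steer(offset) + error_gain * excess * sign


def rollout(policy: Callable[[float], float],
            start_offset: float, steps: int) -> List[float]:
    """Simulate the lateral offset from the track center over time."""
    offsets = [start_offset]
    for _ in range(steps):
        offsets.append(offsets[-1] + policy(offsets[-1]))
    return offsets


if __name__ == "__main__":
    print("cloned, starts in-distribution:", rollout(cloned_steer, 0.4, 5))
    print("cloned, starts just outside:   ", rollout(cloned_steer, 0.7, 5))
    print("expert, same starting point:   ", rollout(expert_steer, 0.7, 5))
```

Starting inside the training range, the cloned policy recovers just as the expert does. Starting slightly outside it, each step carries the car further from familiar territory, so the error grows instead of shrinking, while the expert recovers from the same position without trouble.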

He also argues that, given these challenges, LLM builders have taken a strategy similar to Waymo’s in improving their models. Just as Waymo has historically relied on safety drivers to give careful notes on why they took over the car, LLM labs hire human beings to rate model outputs and improve them through reinforcement learning from human feedback (RLHF). LLM labs also rely heavily on synthetic training data to improve their models, just as Waymo does with its billions of simulated miles on top of its millions of real-world driven miles. So even if LLMs show the power of simply scaling data and compute, that isn’t necessarily a slam dunk for Tesla’s strategy.

So, Who Is Right?

Despite anecdotes in support of one approach or the other, the evidence still seems to indicate that it’s too early to say.

It’s clear from publicized incidents and Tesla’s robotaxi launch in Austin that there is still a big gap between its self-driving capabilities and Waymo’s. If the race ended today, Tesla would lose and Waymo would win.

But the race is far from over. If scaling laws are sufficient to make Tesla’s strategy work in the future, then it is in a uniquely advantageous position to surpass Waymo, given its vertically integrated approach, low cost of production, and lack of reliance on mapping. If they aren’t, then Tesla’s self-driving cars may plateau at a safety level that regulators and customers don’t ever get comfortable with.

What makes this competition so fascinating is that these strategies are mutually exclusive. Either Tesla is right that cameras can match human-level safety, or Waymo is right that multiple sensors are essential. There's no middle ground, and the stakes of being wrong are measured in both lives and trillions of dollars.