In popular culture, portrayals of personal AI assistants like J.A.R.V.I.S. from Iron Man and Samantha from Her have long captured the public's imagination. They depict a future where human beings are freed from busywork by digital assistants, allowing them to reclaim valuable time. In Iron Man, J.A.R.V.I.S. is an AI that controls Tony Stark’s body suit, runs his businesses, and acts as security for his mansions and towers. In Her, Samantha is the protagonist’s companion who edits his letters, manages his calendar and emails, and even takes him out on dates.

Today’s assistants are lackluster in comparison. From incumbents to startups, AI assistants on the market are not nearly as capable as J.A.R.V.I.S. or Samantha. Most critically, they lack long-term memory, are siloed across platforms, and show little emotional intelligence. Several important technical roadblocks have hindered the development of a personal AI assistant, including fragmented data access and the difficulty of user modeling. Until these core issues are overcome, the dream of having a truly intelligent, helpful, and emotionally aware assistant will remain out of reach.

Today’s "AI" Assistants

Today’s offerings range from legacy assistants driven by natural language processing (NLP) to newer generative AI assistants that can respond naturally and take on a variety of tasks. While there is a broad range of domain-specific chatbots, workflow platforms, and enterprise copilots, general-purpose assistants still face fundamental limitations.

NLP-Based Incumbents

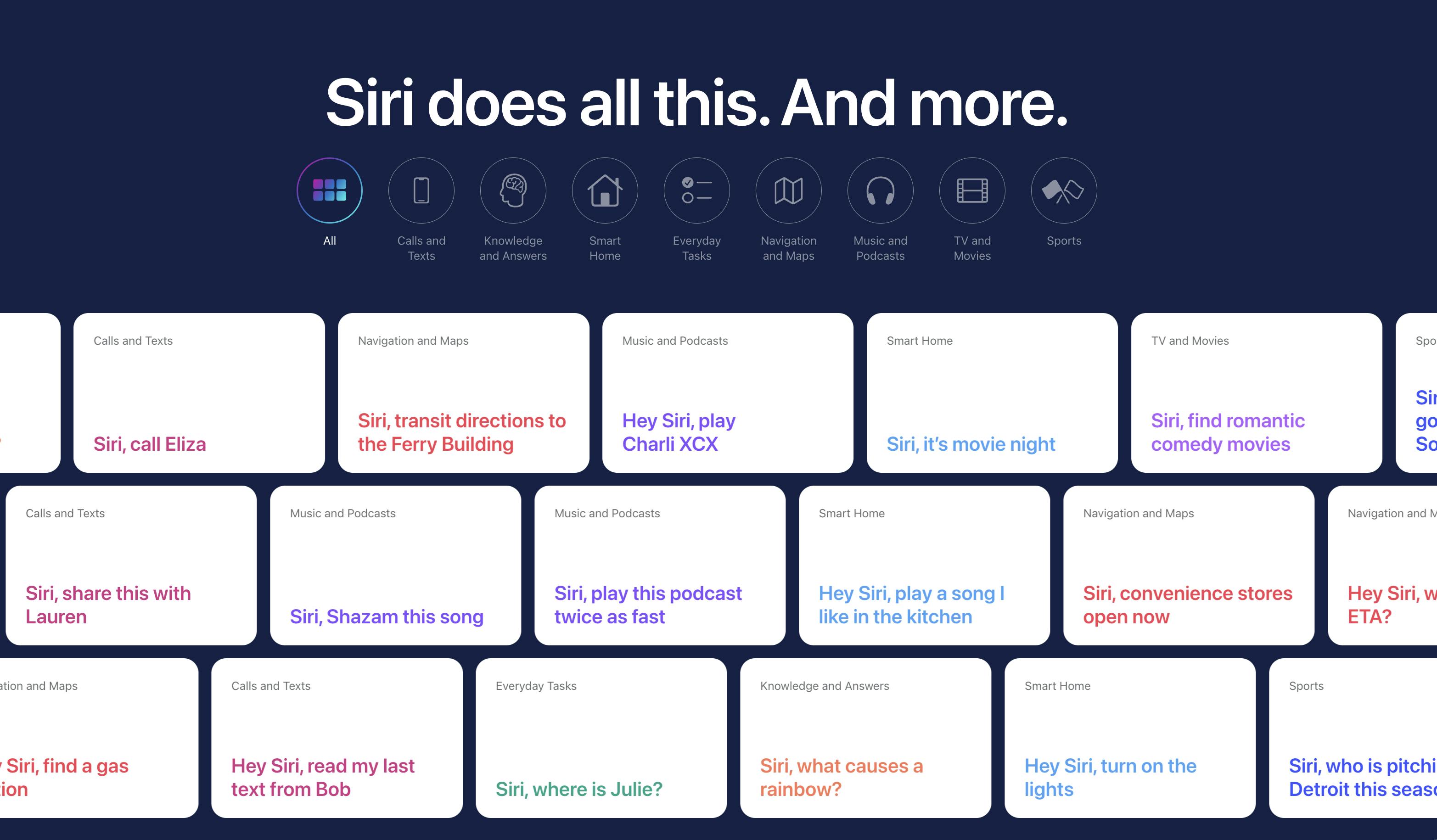

Apple’s Siri, Amazon’s Alexa, and Google Assistant represented the first generation of mainstream AI assistants. In their present iterations, they remain primarily command-driven systems, built on intent recognition and rules-based workflows rather than open-ended reasoning.

For instance, to make a request with Siri, the user has to say, “Hey Siri”, followed by action-trigger words like “remind me” or “set a timer”. These assistants are designed to accomplish structured tasks such as setting alarms, controlling smart home devices, or retrieving factual information, but they quickly reach their limits when users ask more complex or context-dependent questions. In many cases, their fallback strategy is to redirect the query; for example, Siri now routes difficult prompts to ChatGPT, and Google is actively migrating users from Assistant to its Gemini-powered experience.

Source: Apple

Despite years of development, these incumbents still operate with minimal user modeling. While they can store basic profile details like your name, home address, or designated family members, they lack any enduring memory of preferences, context, or relationships across conversations. This limitation is particularly notable given that these assistants often have local access to personal data such as messages and emails, yet have not been engineered to securely build a coherent, long-term understanding of the user from it.

Generative-Based New Entrants

In contrast to the incumbents, a wave of startups has emerged to push assistants beyond the rigid boundaries of NLP-era products, though each comes with its own constraints.

RPLY, developed by NOX and launched in February 2025, is an OpenAI-backed application that integrates directly into iMessage. The integration gives the AI a full contextual understanding of a user’s relationships with their contacts and the ability to draft or even automate replies based on the user’s texting patterns. Its promise lies in predicting conversational flow to prevent missed replies, but a slightly inaccurate prediction in an exchange can cause errors or, even worse, erode trust, making full autonomy inappropriate for high-stakes personal communication.

Source: Nox

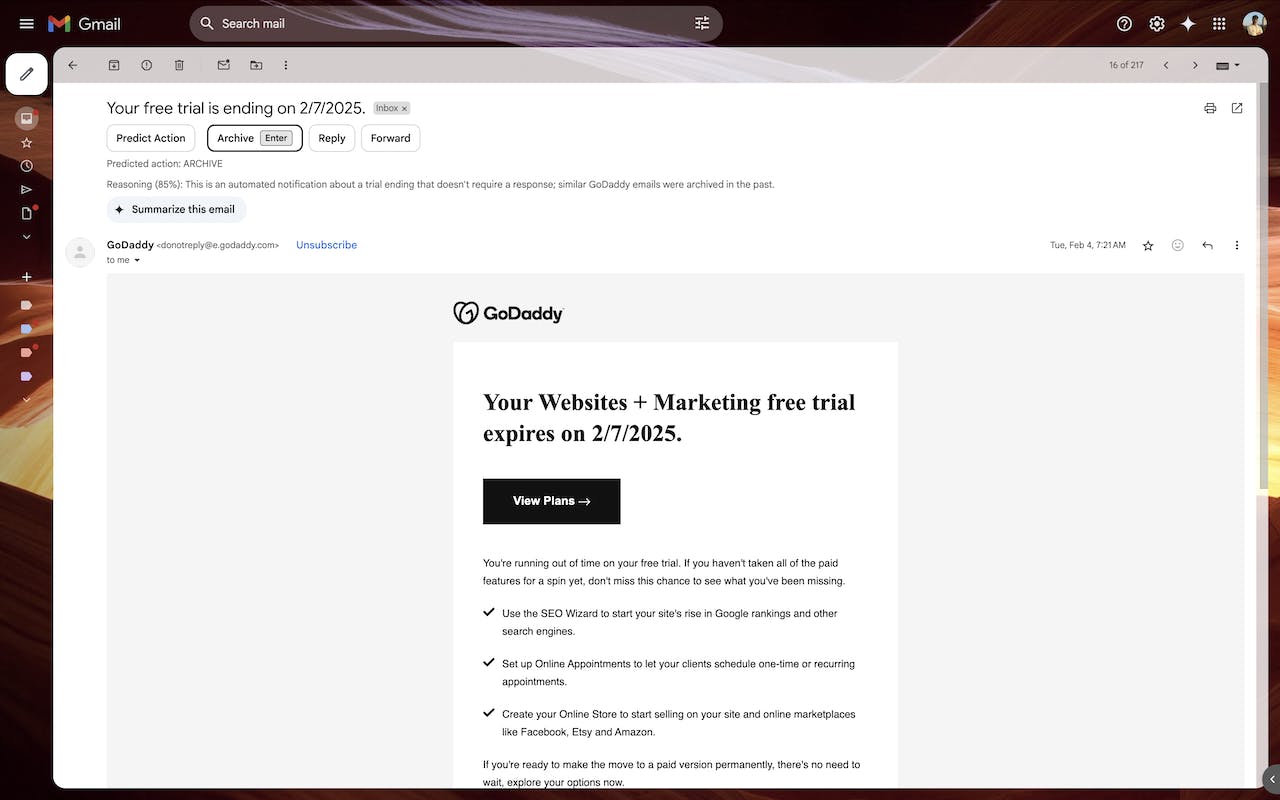

Launched in 2024, Friday is a YC-backed company that develops an AI assistant directly embedded within email. It identifies potential actions for unread messages and drafts suggested responses, alleviating the cognitive burden of inbox triage. Still, the user experience is largely unchanged from traditional email clients, lacking any truly intelligent system for prioritizing and highlighting the most important threads for the user in a way that is personal to them.

Source: Friday

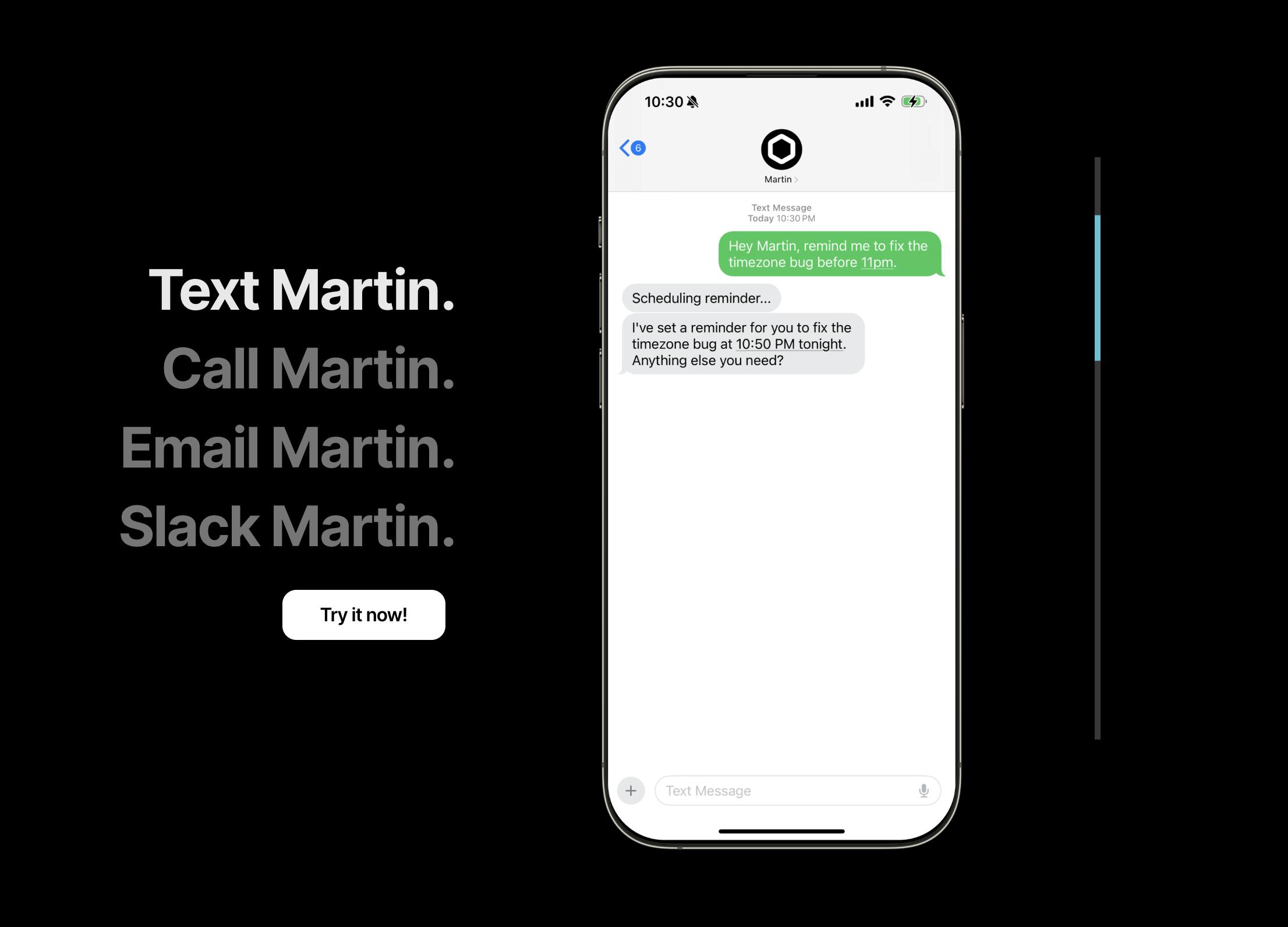

Martin AI, another YC-backed company launched in 2023, positions itself as a cross-platform assistant, available through voice, text, or email. It emphasizes accessibility and execution across these modalities, but it remains reactive. The assistant waits for instructions rather than anticipating user needs or independently surfacing opportunities. For example, if the user has a dinner reservation on their calendar, Martin will not proactively tell them about the travel time. Likewise, if an urgent email arrives, Martin does not prioritize alerting the user. In both scenarios, the user has to prompt it directly to get that information.

Source: Martin AI

Collectively, these startups illustrate both the progress and the bottlenecks of today’s generative assistants. Specifically, they have broadened capability, context awareness, and flexibility, yet still fall short of the proactive, relational intelligence imagined in cultural archetypes like J.A.R.V.I.S. or Samantha.

Defining A Great Assistant

To understand what closing this gap might look like, it helps to examine Google’s Project Astra as a case study in building an ever-present, contextually aware assistant.

At Google I/O 2024, Project Astra was introduced as Google’s vision for what a truly universal AI assistant might look like. Unlike the command-based models of the past, Astra was designed to operate more like a companion that can see, hear, and understand its surroundings. It integrates memory across video, audio, and text, enabling it to recall prior interactions and respond in ways that feel coherent and continuous over time. This approach goes beyond one-off commands or transactional queries, gesturing toward an assistant that can build a working model of the user’s life and needs.

One of Astra’s most notable qualities is its ability to use tools across modalities rather than relying solely on text input. In demos, it could recognize objects through a camera feed, retrieve relevant documentation, toggle device settings, or even initiate a call. This reflects a step-change in how assistants can act as intermediaries across different contexts and devices, blurring the line between digital and physical interaction. Instead of being limited to a single channel, Astra is positioned to become a central orchestrator of tasks, capable of shifting between tools and environments with fluidity.

Equally important is Astra’s proactive nature. Demis Hassabis, CEO of Google DeepMind, highlighted its ability to “read the room” by knowing when to intervene and when to remain silent. In practice, this means Astra could remind a user of a fasting schedule at the right moment or offer to surface a boarding pass when it sees someone heading to the airport. These interventions are not triggered by explicit commands but by situational awareness and contextual judgment, an area that distinguishes Astra from current assistants and hints at the possibility of assistants that act with timing and discretion rather than blunt automation.

Taken together, Astra offers a glimpse of what makes a great assistant. It combines expansive knowledge with memory that extends across interactions, the ability to act across a wide range of tools, a proactive orientation grounded in situational awareness, and an ambient presence that is always available but rarely overbearing.

Source: Google

Execution Challenges

Developing a truly intelligent AI assistant remains a formidable undertaking, hampered by several interrelated challenges. First, access to the data such an assistant needs is highly fragmented and governed by shifting politics and policies. Many of the most common communication channels are either end-to-end encrypted, as is the case with iMessage and WhatsApp, or guarded by restrictive APIs that platforms can revoke at any time. Even when data access is technically permitted, it is often constrained by rate limits, multi-factor authentication, rotating tokens, or strict mobile operating conditions such as background execution limits on iOS. These constraints make reliable, continuous data ingestion fragile and prone to interruption.

Even if access were seamless, resolving identity across data sources poses a complex puzzle. A single person may appear under multiple email addresses, phone numbers, messaging handles, or aliases, all of which may evolve as jobs change, numbers shift, or nicknames emerge. Identity matching is probabilistic by nature, and even a single misidentification can fragment one person’s history across several profiles and bias what the assistant infers about them. Even with both access and identity addressed, the data itself remains heterogeneous. For instance, messaging content spans multiple languages and modes like emojis, sarcastic texts, and contextual files, each requiring separate parsing and understanding.
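As a rough illustration of why identity matching is probabilistic, the sketch below scores whether two contact records refer to the same person. Everything in it is an assumption made for illustration: the `ContactRecord` fields, the weights, the last-10-digits phone normalization, and the 0.8 threshold are hypothetical and not drawn from any product discussed here.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class ContactRecord:
    source: str            # hypothetical source label, e.g. "email" or "sms"
    display_name: str
    email: str = ""
    phone: str = ""


def normalize_phone(phone: str) -> str:
    """Keep digits only and compare on the last 10 to ignore country-code formatting."""
    digits = "".join(ch for ch in phone if ch.isdigit())
    return digits[-10:]


def match_score(a: ContactRecord, b: ContactRecord) -> float:
    """Heuristic score in [0, 1] that two records describe the same person."""
    # A shared email address is treated as the strongest evidence.
    if a.email and a.email.lower() == b.email.lower():
        return 1.0
    # A shared phone number is strong, but numbers do get reassigned.
    phone_a = normalize_phone(a.phone)
    if phone_a and phone_a == normalize_phone(b.phone):
        return 0.95
    # Name similarity alone is weak evidence, so it is capped at 0.6.
    name_similarity = SequenceMatcher(
        None, a.display_name.lower(), b.display_name.lower()
    ).ratio()
    return 0.6 * name_similarity


def same_person(a: ContactRecord, b: ContactRecord, threshold: float = 0.8) -> bool:
    """Merge only when the score clears an (assumed) confidence threshold."""
    return match_score(a, b) >= threshold


# Hypothetical records from three different sources.
work = ContactRecord("email", "Jordan Lee", email="jordan@example.com")
sms = ContactRecord("sms", "Jordy", phone="+1 (555) 010-2030")
chat = ContactRecord("chat", "Jordan Lee", phone="555-010-2030")
```

Here `sms` and `chat` merge on the shared phone number despite different display names, while `work` and `chat` do not merge even though the names are identical, because a name match alone stays below the threshold. Where to set that threshold is the trade-off the paragraph describes: too low merges strangers, too high fragments one person into several.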

Translating this chaotic data into a coherent, evolving user model adds yet another layer of complexity. Human preferences are highly contextual and dynamic. A desire for morning phone calls might be valid during a busy recruiting period, but may evaporate in later phases. This kind of fluctuation is commonly called concept drift: shifting user behavior invalidates what earlier models learned. To manage it, an assistant must incorporate mechanisms like confidence scoring, decay functions, and provenance tracking. Without these safeguards, the system risks relying on outdated assumptions and taking inaccurate proactive actions.
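One way to picture confidence scoring, decay, and provenance working together is the minimal sketch below. It assumes exponential decay with a 30-day half-life, a fixed reinforcement boost, and a 0.5 action threshold; the `Preference` class and all of its parameters are hypothetical choices for illustration, not a description of how any existing assistant works.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Preference:
    statement: str
    confidence: float          # confidence at the time of last evidence, in [0, 1]
    observed_at: datetime      # when the supporting evidence was last seen
    provenance: str            # where that evidence came from
    half_life_days: float = 30.0

    def current_confidence(self, now: datetime) -> float:
        """Halve the stored confidence for every half-life that has elapsed."""
        age_days = max((now - self.observed_at).total_seconds() / 86400.0, 0.0)
        return self.confidence * 0.5 ** (age_days / self.half_life_days)

    def reinforce(self, now: datetime, provenance: str, boost: float = 0.2) -> None:
        """Fresh evidence tops up the decayed confidence and resets the clock."""
        self.confidence = min(1.0, self.current_confidence(now) + boost)
        self.observed_at = now
        self.provenance = provenance


def is_actionable(pref: Preference, now: datetime, threshold: float = 0.5) -> bool:
    """Act proactively only while the decayed confidence clears the threshold."""
    return pref.current_confidence(now) >= threshold


# Hypothetical example based on the morning-call preference above.
start = datetime(2025, 1, 1)
morning_calls = Preference(
    statement="prefers phone calls before 9am",
    confidence=0.9,
    observed_at=start,
    provenance="calendar: three recruiter calls booked at 8am",
)
one_month_later = start + timedelta(days=30)
```

With these assumed numbers, the preference is actionable on day zero but falls to half its confidence after one half-life, so the assistant would stop scheduling morning calls on its own unless new evidence calls `reinforce`. The `provenance` string is what lets a user, or an audit, trace why the assistant believed something in the first place.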

Finally, evaluating whether an assistant genuinely “knows” its user is largely unsolved. Traditional benchmarks focus on accuracy or fluency, but they do not capture long-term personalization, relational nuance, or anticipatory behavior. Surveys of large language model and agent evaluation emphasize that methodology remains fragmented, with no consensus on how to measure contextually intelligent and trusted assistant behavior.

Tomorrow’s AI Assistants

The gap between today’s assistants and the cultural archetypes depicted in sci-fi is not due to a failure of imagination but a reminder that reliable intelligence requires foundations that are still being built. Progress will come from treating assistants as systems, not chat windows. That means consented, durable access to a small set of core data sources; a typed personal graph that represents people, threads, events, and documents with clear sources; and memory that decays and re-validates rather than assuming preferences are static. It also means adjustable autonomy that starts with suggestions, graduates to draft actions, and earns the right to act for the user only in well-scoped domains with clear rollbacks and audit trails.
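The adjustable-autonomy idea can be sketched in a few lines. The example below assumes three levels (suggest, draft, act), a per-domain policy that defaults to the lowest level, and actions that carry their own rollback; the `Autonomy`, `Action`, and `Assistant` names are hypothetical and exist only to make the idea concrete.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import Callable


class Autonomy(IntEnum):
    SUGGEST = 1  # only describe what could be done
    DRAFT = 2    # prepare the action but wait for approval
    ACT = 3      # execute, keeping a rollback available


@dataclass
class Action:
    domain: str
    description: str
    execute: Callable[[], None]
    rollback: Callable[[], None]


@dataclass
class Assistant:
    policy: dict[str, Autonomy] = field(default_factory=dict)
    audit_log: list[str] = field(default_factory=list)
    undo_stack: list[Action] = field(default_factory=list)

    def handle(self, action: Action) -> str:
        # Domains without an explicit grant fall back to the lowest level.
        level = self.policy.get(action.domain, Autonomy.SUGGEST)
        if level == Autonomy.ACT:
            action.execute()
            self.undo_stack.append(action)
            outcome = "executed"
        elif level == Autonomy.DRAFT:
            outcome = "drafted"
        else:
            outcome = "suggested"
        # Every decision is logged, whether or not anything was executed.
        self.audit_log.append(f"{outcome}: [{action.domain}] {action.description}")
        return outcome

    def undo_last(self) -> bool:
        """Roll back the most recent executed action, if there is one."""
        if not self.undo_stack:
            return False
        action = self.undo_stack.pop()
        action.rollback()
        self.audit_log.append(f"rolled back: [{action.domain}] {action.description}")
        return True


# Hypothetical setup: the user has granted full autonomy for the calendar only.
calendar: list[str] = []
assistant = Assistant(policy={"calendar": Autonomy.ACT})
add_event = Action(
    domain="calendar",
    description="Add 'Dinner 7pm'",
    execute=lambda: calendar.append("Dinner 7pm"),
    rollback=lambda: calendar.remove("Dinner 7pm"),
)
send_email = Action(
    domain="email",
    description="Reply to landlord",
    execute=lambda: None,
    rollback=lambda: None,
)
```

In this setup, `assistant.handle(add_event)` executes and can be reversed with `assistant.undo_last()`, while `assistant.handle(send_email)` only produces a suggestion because no autonomy has been granted for email. Defaulting unknown domains to the lowest level is a deliberate choice in this sketch: the assistant has to earn each domain separately.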

Moving forward, platforms can help by stabilizing access policies and exposing safer, capability-scoped APIs. Researchers can focus on benchmarks that test proactivity, continuity, and value under change. And builders can ship transparent logs, reversible actions, and opinionated defaults for safety. If these pieces come together, assistants will feel less like novelty and more like infrastructure, gradually earning the competence and discretion that make J.A.R.V.I.S. and Samantha compelling.