Thesis

Online video ranks among the top content formats, with apps like TikTok, Instagram, Snapchat, and YouTube ranking among the 15 most downloaded apps globally in 2023. As of 2022, user-generated content represented 39% of all content consumption in the US and 56% among teens. In 2022, video also accounted for 82% of all internet traffic, and 96% of consumers had watched explainer videos about products and services. The demand for video content is driving companies to scale up media production, fueling the need for skilled video creators. One forecast predicts that by 2025, 30% of outbound marketing from large organizations will be generated synthetically using AI, up from less than 2% in 2022.

AI tools can automate complex tasks such as scene editing, color grading, and video enhancement, allowing creators to produce professional-quality content with less technical expertise. As of October 2024, AI-driven video tools were reported to cut production times by as much as 60%, freeing creators to focus on creative work rather than labor-intensive tasks. This shift toward AI-driven video creation could expand creative possibilities for both individual creators and large organizations while improving efficiency and reducing production costs.

Runway is a web-based generative video platform that aims to democratize professional video editing by offering a range of AI tools alongside real-time collaboration. The platform turns traditionally complex, labor-intensive creative workflows into tasks that can be executed faster. By streamlining processes like scene transitions, color grading, and special effects, Runway allows creators, from individual filmmakers to large enterprises, to produce high-quality video content without relying on expensive agencies or advanced technical skills. The company describes itself as “an applied research company building the next era of art, entertainment and human creativity” and applies advances from its research arm, Runway Research, to its products.

Founding Story

Source: Forbes

Runway was founded in 2018 by Cristóbal Valenzuela (CEO), Alejandro Matamala (chief design officer), and Anastasis Germanidis (CTO). Valenzuela met Matamala and Germanidis at NYU in 2016, while researching applications of ML models for image and video segmentation in creative domains.

Valenzuela, originally from Chile, developed a fascination with emerging computer vision technologies. In 2016, he moved to New York and took a research role at NYU. There, in June 2018, he began a deep dive into AI, studying how models like AlexNet and datasets like ImageNet could transform creative and artistic fields.

In the course of his research, he ran experiments such as integrating AI with Photoshop to streamline image editing tasks, work that gained traction among creatives. A pivotal moment came in 2018, when Adobe offered him a position on its AI team to continue this work. However, wanting control over what he built, Valenzuela declined the offer and founded Runway independently.

Runway's original vision was to make existing AI models simpler to use. In 2018, the company launched a model directory that enabled users to deploy and run ML models for a variety of use cases, abstracting the inference and training process behind a visual interface. As the model directory and user base grew, the team noticed a pattern: many users were using the product for video editing.

From the company's founding in 2018, video editors, filmmakers, artists, designers, and other creators approached Runway because they saw potential in using ML models to automate their manual workflows. As Runway built more deeply around video editing, drawing on what it had learned from its model directory, the team realized it was cutting the time and cost of making videos and democratizing ML-enabled video editing. That is when the team decided to focus on developing tools tailored to video creators.

Product

Runway is an AI creative platform that develops tools and models to empower artists, filmmakers, and content creators in their workflows. Known for its generative AI tools, Runway offers products like Gen-3 Alpha for text-to-video and video editing, alongside tools for image editing, animation, and other creative processes. It is recognized for advancing accessibility to AI technology in industries like film, advertising, and design.

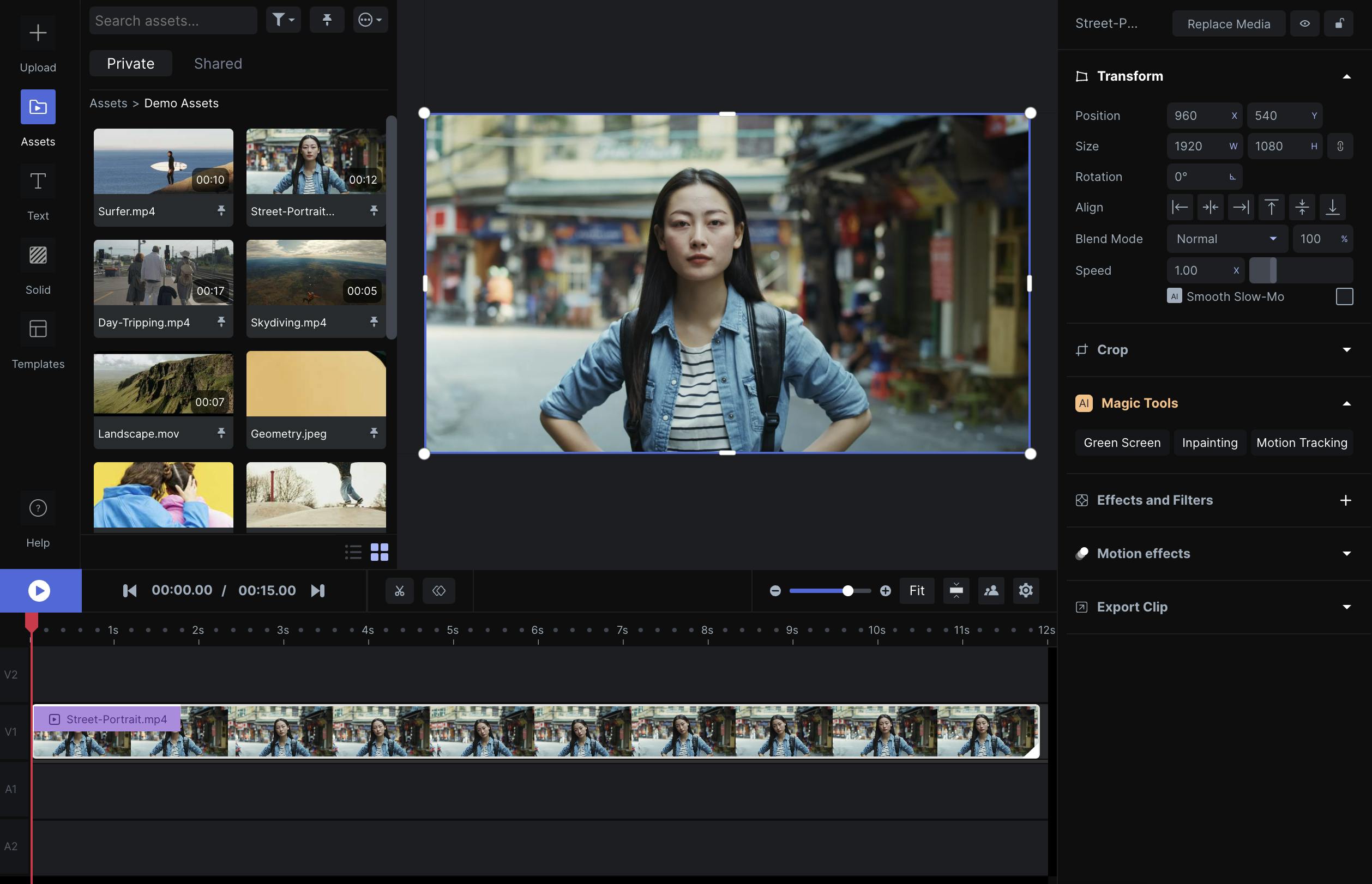

Video Editor

Source: Runway

Runway’s primary offering lets users edit multitrack video and audio on a single timeline. Alongside its suite of generative AI tools, the editor includes standard editing features such as effects, transitions, trimming, cutting, audio level adjustment, and title creation. Its familiar layout makes onboarding easy for editors.

The editor is browser-based and accessible through Chromium-based browsers. Each project can be shared with collaborators, who can edit live and simultaneously, similar to workflows in G Suite, Canva, or Figma. Finished projects can be exported at up to 720p on the free tier and up to 4K on paid tiers.

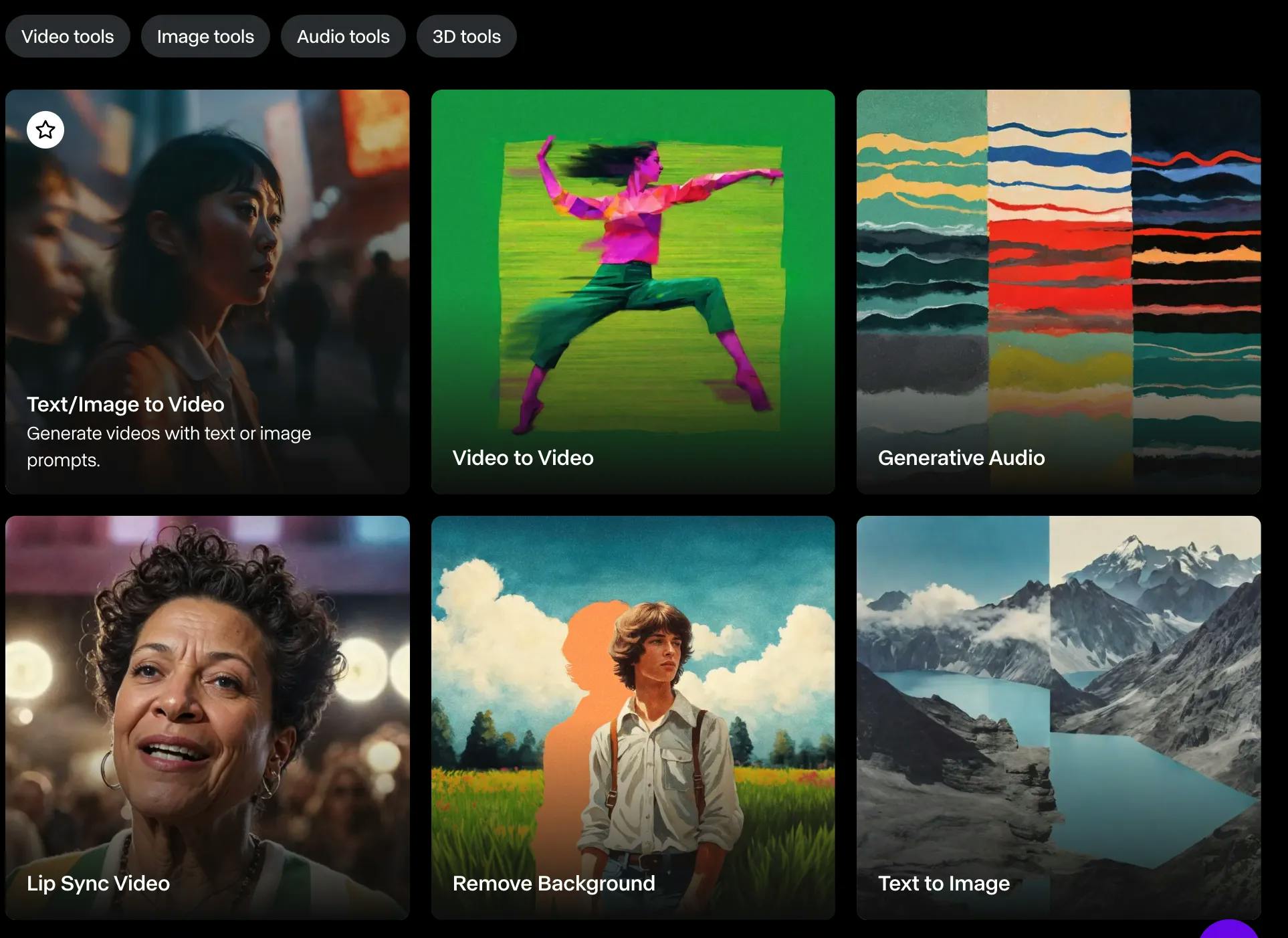

AI Tools

Source: Runway

Runway offers a suite of AI generation and editing tools, now powered by its Gen-3 Alpha base model. As of February 2023, it offered 32 tools, including text-to-video, image-to-video, audio generation, processing, and editing tools. The most notable tools include:

Text-to-video generation: This tool lets users generate cinematic-quality videos of up to 10 seconds from text prompts.

Generative Visual Effects (GVFX): GVFX allows users to turn still images into live-action footage.

Multi-motion brush: This editing tool lets users apply specific motion and direction to up to five subjects or areas of a scene.

Camera control: This tool lets users change the perspective of a shot by moving the camera and setting the direction and intensity of the movement.

Inpainting: Inpainting is an editing tool that lets users remove objects from videos by masking them in a single still frame; the AI then makes the mask follow the object throughout the scene.

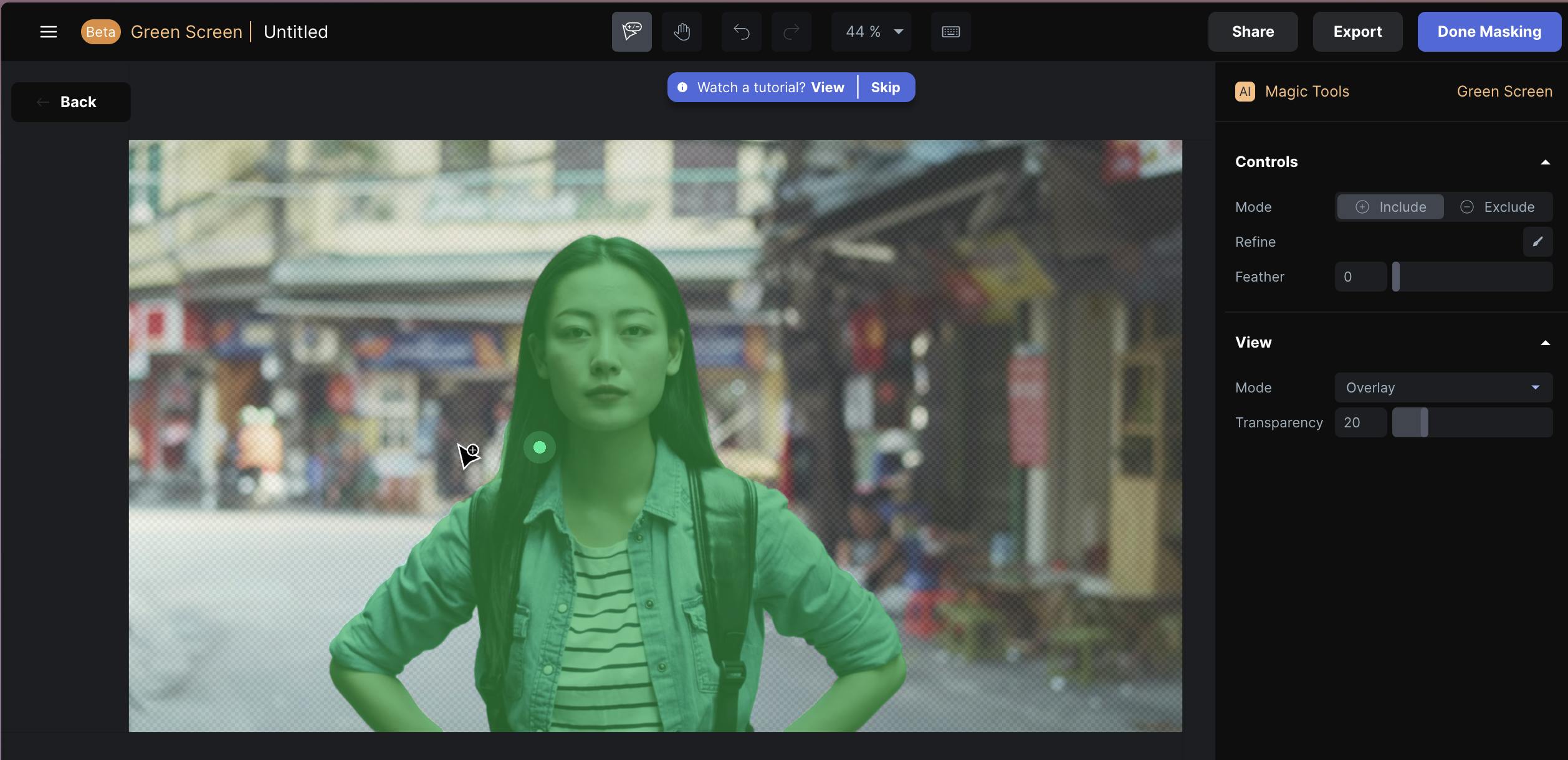

Source: Runway

Remove background: This tool lets users remove backgrounds from videos. Any shot can be masked with a few clicks around the subjects, so editors can swap out the background regardless of how the shot was filmed. It can be combined with image generation to create new backgrounds from text descriptions.

Source: Runway

Gen-3 Alpha

In June 2024, Runway released Gen-3 Alpha, a base model for video generation. Gen-3 Alpha “can create highly detailed videos with complex scene changes, a wide range of cinematic choices, and detailed art directions”.

Gen-3 Alpha is the underlying model powering Runway’s text-to-video, image-to-video, and text-to-image tools, as well as control tools like motion brush, advanced camera controls, and director mode, plus upcoming tools for more fine-grained control over structure, style, and motion. Compared to previous versions, Gen-3 Alpha supports more complex interactions and camera movements, giving users more creative control. As of June 2024, generated footage maxes out at 10 seconds; however, Runway promised more models with longer generation capacity.

Gen-3 Alpha was developed to generate expressive human characters with a wide range of actions, gestures, and emotions. In June 2024, Runway partnered with entertainment and media organizations to create custom versions of Gen-3 for more stylistically controlled and consistent characters. Runway explains that “this means that the characters, backgrounds, and elements generated can maintain a coherent appearance and behavior across various scenes.”

A key challenge for video-generation models is control and consistency: even simple tasks, like keeping a character’s clothing the same color across shots, can be difficult. Gen-3 Alpha aims to address this by letting users anchor AI-generated videos with specific imagery, creating a “narrative bridge” for more controlled outputs. The ability to bookend videos with specific imagery is valuable for commercial applications, ensuring brand consistency and improving predictability and artistic control throughout the generation process.

RGM

In December 2023, Runway announced a partnership with Getty Images to build the Runway Getty Images Model (RGM). RGM is a "commercially safe" version of Runway’s generative video models, trained on Getty Images’ licensed content library, with legal protections against copyright claims. RGM is tailored to enterprise customers, serving as a baseline that customers can fine-tune on their proprietary datasets. This gives customers greater control over outputs and helps ensure consistency and brand alignment.

Runway Research

Runway Research collaborates with leading universities and organizations to publish AI research and integrates its findings into Runway’s products. In August 2022, Runway helped develop Stable Diffusion, a text-to-image model initially released to researchers, in partnership with LMU Munich and Stability AI. The company has also published papers on motion tracking, sound-to-video matching, and high-resolution image synthesis.

Market

Customer

Creatives: Runway’s primary market is the long tail of creators who want to create and edit engaging video content but lack the budget for professional agencies or studios. The company's focus on collaborative editing and AI tools lowers technical barriers and broadens accessibility. Runway’s partnerships with schools also position its product to become a common tool that the next generation of creators learns to use.

Video is an increasingly critical avenue for discovery and engagement for small businesses and digital creators, but their budgets often cannot justify video agency fees. Runway’s simplicity and speed enable employees with limited editing skills to produce professional content.

Enterprise: For professional editors, Runway simplifies tedious processes such as rotoscoping. Interviews with professional editors highlighted that Runway does not replace their existing editing tools; instead, it complements them in specific tasks such as object removal or green screening and enables better team collaboration.

With the launch of Gen-3 Alpha, Runway is developing more features for professional creators. As of June 2024, the company was collaborating with entertainment and media organizations to create custom versions of Gen-3 for more stylistically controlled and consistent characters. In line with the thesis that every company is becoming a media company, Runway’s client base is diverse, ranging from advertising agencies to filmmakers, production companies, marketing departments, and educational institutions.

Runway enables customers to prototype and accelerate the video production process. Several advertising agencies use Runway’s image generation for sketches, ideation, and final productions. In film production, Runway allows for rapid prototyping of complex scenes or entire sequences without expensive sets or locations. Faster turnaround times are one of the key reasons creators like Quinn Murphy and Tool turn to Runway.

Runway’s key value proposition lies in complementing existing editing tools to streamline production. Tasks like creating B-roll footage, storyboarding, or green screen effects can be time-consuming for creators, and that is where Runway can be leveraged. Using Runway, the editing team of The Late Show with Stephen Colbert has cut tasks that used to take hours down to a few minutes. With the improved capabilities of Gen-3 Alpha, customers are planning to use Runway in more production processes. AI video tools also enable smaller teams to deliver high-quality productions: filmmaker Tyler Perry suspended a planned $800 million expansion of his production studio after seeing the capabilities of AI video technology.

Source: Runway

Educators: Runway’s customer base also includes educators and educational institutions. For educators, Runway can enhance creative capabilities and deliver personalized experiences for students. It lets students experiment with different tools faster and accelerates their understanding of spatial concepts and movie-making techniques.

Market Size

Runway’s product offerings position it in both the video editing software market and the visual effects (VFX) software market. The global video editing software market was valued at $3.1 billion in 2023 and is expected to reach $5.1 billion by 2032, growing at a CAGR of 5.8% from 2024 to 2032. Growth is expected to be driven by increasing demand for premium video content across platforms such as social media, streaming services, and corporate communications.

The global VFX market was valued at nearly $10 billion in 2023 and is expected to grow at a CAGR of 6.6% to reach $19.3 billion by 2033. The market is growing due to increasing demand for high-quality visual effects in films, TV shows, and video games. Technological advancements in computer graphics, rendering, and animation software have made effects more realistic and cost-effective. The rise of streaming platforms like Netflix and Amazon Prime has led to greater investment in VFX-driven original content. Additionally, VFX is being applied in industries like VR, AR, architecture, and automotive design, broadening its market.
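As a rough consistency check on these forecasts, the compound annual growth rate formula, (end / start)^(1 / years) - 1, can be applied to the reported endpoints. The sketch below is a minimal Python illustration, not the methodology behind the cited market reports; it assumes 2023 is the base year for both markets and treats "nearly $10 billion" as exactly $10 billion. The helper names `cagr` and `project` are hypothetical.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Endpoints from the text, in billions of USD. Assumption: 2023 is the base
# year for both forecasts, and "nearly $10 billion" is treated as 10.0.
video_editing_cagr = cagr(3.1, 5.1, 2032 - 2023)  # 9 compounding years
vfx_cagr = cagr(10.0, 19.3, 2033 - 2023)  # 10 compounding years

# Forward check: compound the 2023 video editing figure at the reported 5.8%.
video_editing_2032 = project(3.1, 0.058, 2032 - 2023)

print(f"Implied video editing CAGR: {video_editing_cagr:.1%}")
print(f"Implied VFX CAGR: {vfx_cagr:.1%}")
print(f"Video editing market in 2032 at 5.8%: ${video_editing_2032:.2f}B")
```

Under these assumptions the implied rates come out at roughly 5.7% and 6.8%, within a few tenths of a point of the reported figures; the gap is consistent with the reports using a different base year or rounded endpoints.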

Competition

Video Editors

CapCut: CapCut, owned by ByteDance and founded in 2020, is an AI-powered video editing platform that allows users to create, edit, and share videos across various social media platforms. It provides an intuitive interface with advanced editing features like background removal, video stabilization, AI-powered color correction, and script-to-video tools. CapCut is widely used by both casual creators and businesses to produce professional-quality videos quickly and efficiently, making it especially popular for TikTok content creation.

CapCut competes with Runway because both provide tools for video editing and creative content production. CapCut focuses on user-friendly, mobile-first video editing for social media creators, while Runway emphasizes advanced AI-powered tools for professional video editing and creative workflows.

Avid Technology: Avid, founded in 1987, makes technically demanding editing software that it considers the gold standard of professional editing, especially for film and broadcast, and it is an industry standard in Hollywood. According to the company, its software had been downloaded more than 3 million times as of February 2021. In November 2023, Avid was acquired by the private equity firm STG for approximately $1.4 billion.

Avid focuses on professional-grade video editing and production software like Media Composer, which is tailored for filmmakers and large studios. Runway, on the other hand, specializes in AI-powered creative tools, enabling tasks like generative video editing, green screen removal, and image synthesis, appealing to creators looking for advanced automation and efficiency. While Avid targets traditional workflows, Runway emphasizes innovation through AI-driven solutions, positioning itself as an alternative for creative professionals.

Apple: Apple’s Final Cut Pro has become a major player in the editing world. The product offers both full-featured editing and special effects for a $300 one-time purchase as of November 2024. Apple’s improvements to its free iMovie product also make it a popular choice among hobbyists who create videos. Apple was founded in 1976 and Final Cut Pro was released in 1999.

Apple's Final Cut Pro is a competitor to Runway, as both offer tools for video editing and production. While Final Cut Pro provides a comprehensive suite for professional video editing, Runway distinguishes itself by integrating AI features like generative video creation, text-to-video tools, and automated green screen editing, making it more focused on leveraging AI for creative workflows.

Video-Generating Models

OpenAI: In February 2024, OpenAI announced its text-to-video model Sora. The model was released for testing with “a number of visual artists, designers, and filmmakers to gain feedback on how to advance the model to be most helpful for creative professionals” as of November 2024. In June 2024, Toys R Us released the first video commercial generated with Sora, in collaboration with creative agency Native Foreign. OpenAI, founded in 2015, has raised a total of $21.9 billion from investors including Microsoft, Thrive Capital, and Founders Fund as of November 2024.

OpenAI's Sora could be considered a competitor to Runway as both platforms target video creators with advanced AI-powered tools. While Sora specializes in generating high-quality videos directly from text prompts with detailed realism and narrative coherence, Runway offers a broader suite of AI-based video editing tools, including video-to-video transformations and object removal, making it versatile for various stages of video production.

Google: In May 2024, Google launched Veo, Google DeepMind’s “new and most capable generative video model to date.” Veo can generate 1080p video clips longer than one minute. Filmmakers such as Donald Glover have used Veo to create cinematic shots. In the long term, Google plans to incorporate Veo directly into products such as YouTube Shorts. As of November 2024, Google's parent company, Alphabet, had a market cap of $2.1 trillion.

Both Veo and Runway provide AI-powered tools for video content creation. Veo focuses on generating and editing high-quality videos from text and image prompts, offering advanced cinematic controls and visual consistency. Similarly, Runway empowers creators with AI tools for video editing and generation, targeting creative professionals in filmmaking and content creation.

Pika: Pika was founded in 2023. As of November 2024, the company had raised a total of $135 million. Pika offers text-to-video, image-to-video, and video-to-video generation for clips of up to three seconds, as well as a selection of AI editing tools. Pika has reported “millions of users,” including the band Thirty Seconds to Mars, which has incorporated Pika-generated videos into its concerts.

Pika Labs can be considered a competitor to Runway, particularly in the realm of AI-driven video generation and animation. While both platforms focus on creative video content, Pika excels in turning text or image prompts into short, stylized, and artistic animations, targeting users who need quick, engaging videos for social media and creative experiments. In contrast, Runway offers broader functionalities, including cinematic human motion animations, longer video outputs, and dynamic camera movements, catering to a more diverse range of creators, from casual users to professionals.

Luma AI: Luma AI was founded in 2021. As of November 2024, the company had raised over $67.3 million in total. Luma is a 3D modeling company building several foundation models. Its Dream Machine model can generate videos up to five seconds long from text prompts and images. Dream Machine has gained popularity for its aptitude at animating memes. Notably, the generator lets users bookend videos with images as both the first and final frames, allowing for better creative control.

Luma AI focuses on creating photorealistic 3D models and videos using NeRF (Neural Radiance Field) technology, excelling in realism and precise 3D scene generation. In contrast, Runway's text-to-video tools, such as Gen-3, emphasize creative storytelling and artistic video generation. Both serve content creators, but with different strengths: Luma AI in photorealistic precision and Runway in creative flexibility and broad AI video editing capabilities.

Business Model

Source: Runway

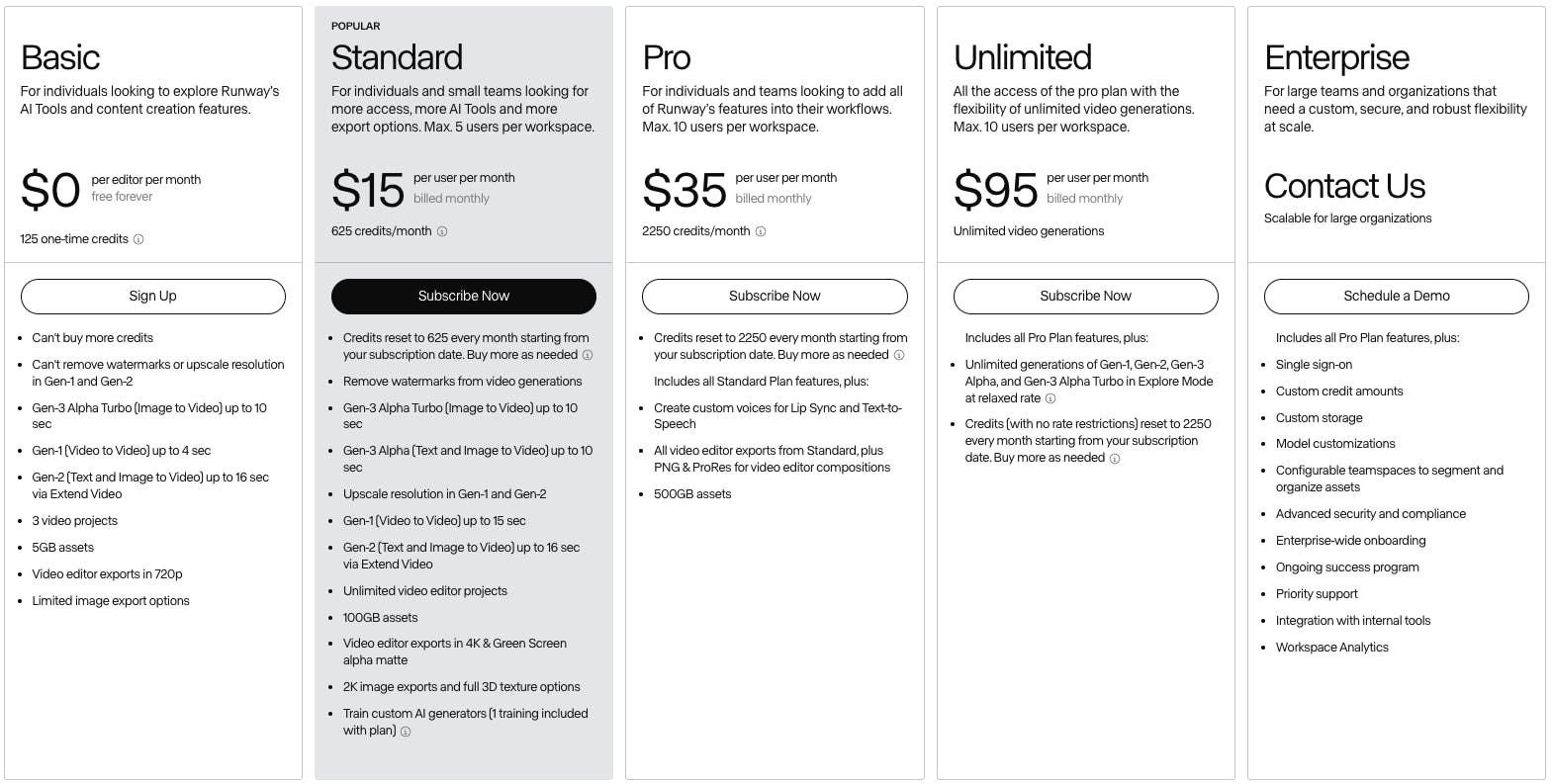

Runway operates on a SaaS model with five tiers:

Basic: The free Basic tier limits access to AI models, export resolution, cloud storage, and the number of projects a user can work on. It provides limited access to the AI Magic Tools through a fixed credit allowance.

Standard: The Standard tier starts at $15 per month and provides 625 credits per month with the option to purchase more, access to all models, 4K export, larger storage, and unlimited projects.

Pro: The Pro tier starts at $35 per month and unlocks more credits, more professional export options, and more customization options. The Standard and Pro tiers have a 20% discount for annual subscriptions.

Unlimited: The Unlimited tier, at $95 per month, provides unlimited generative AI video generations along with 2,250 credits per month.

Enterprise: Runway also offers a custom Enterprise tier for studios that require a high degree of security and customization. It provides greater assurance that assets cannot be leaked or accessed by others, a concern large studios would likely have about a cloud offering, and enables custom integrations with existing software suites.
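To make the 20% annual discount on the Standard and Pro tiers concrete, the minimal Python sketch below compares twelve monthly payments with one discounted annual payment. It assumes the listed $15 and $35 prices are month-to-month rates in USD and that the discount applies to the full year; Runway's actual billing terms may differ, and the names `annual_cost` and `MONTHLY_PRICES` are hypothetical.

```python
# 20% discount on annual Standard and Pro plans (from the text).
ANNUAL_DISCOUNT = 0.20

# Assumption: listed prices are month-to-month rates in USD.
MONTHLY_PRICES = {"Standard": 15.0, "Pro": 35.0}


def annual_cost(monthly_price: float, discount: float = ANNUAL_DISCOUNT) -> float:
    """Yearly cost of a plan billed annually at a discount to 12 monthly payments."""
    return round(monthly_price * 12 * (1 - discount), 2)


for tier, price in MONTHLY_PRICES.items():
    yearly = annual_cost(price)
    print(f"{tier}: ${price * 12:.2f}/yr billed monthly vs ${yearly:.2f}/yr billed annually "
          f"(${yearly / 12:.2f}/mo effective)")
```

Under these assumptions, annual billing brings the Standard tier to $144 per year ($12 per month) and the Pro tier to $336 per year ($28 per month).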

Traction

According to an unverified source, Runway’s revenue for 2024 reached $121.6 million from 100K customers as of November 2024. Its ARR had more than doubled in 2024, up from $48.7 million in 2023.

In addition to individual creators, Runway’s customers include many notable organizations. Its featured case studies include the editing team of The Late Show With Stephen Colbert, the creative teams at Media.Monks, the computational design team at New Balance, and Tool’s production of Under Armour’s ad campaign. Runway was also used in the production of the film “Everything Everywhere All At Once”, which won the 2023 Oscar for Best Film Editing.

Partnerships

Runway collaborated with the 2024 Tribeca Film Festival to highlight the use of emerging AI tools in filmmaking. Tribeca screened a series of AI short films in partnership with Runway, with submissions growing from 300 to 3K in one year. The series, titled “Human Powered,” showcased AI's role in filmmaking. Jamie Umpherson, head of creative at Runway, said:

“It was a really great example of how different artists are embracing the tools in different ways, and getting really unique outcomes that we hadn’t really seen before. We build the tools and we get them into the hands of creatives. But it’s often once we see it in the hands of creatives where we really begin to understand the full potential that they bring to the creative process.”

In March 2024, Runway partnered with Musixmatch, the world's largest lyrics platform, to explore the intersection of AI and music creation. The collaboration aims to integrate Musixmatch's database with Runway's AI tools, helping creators craft music videos, visual storytelling, and lyric-driven content and giving artists new ways to express their vision. The partnership also reflects Runway's push to innovate at the crossroads of technology and the arts, further expanding its influence in the creative AI landscape. Separately, in April 2024, Adobe announced plans to add plug-ins for third-party video generators, including Runway, to Premiere Pro.

In September 2024, Runway partnered with Lionsgate, marking the first collaboration between an AI provider and a major Hollywood studio. This deal allows Lionsgate to leverage Runway’s custom AI model, designed specifically to enhance the studio’s production processes using its vast library of film and television content. The partnership aims to help filmmakers by improving pre-production tasks like storyboarding and post-production workflows, such as creating special effects and backgrounds. Lionsgate's leadership views this as an opportunity to stay competitive in the rapidly evolving industry, emphasizing that AI tools can supplement and augment creative workflows, particularly in action-heavy films known for complex visual effects.

Valuation

Runway has raised a total of $236.5 million in funding as of November 2024. Lux Capital led its $2 million seed round in December 2018, followed by an $8.5 million Series A in December 2020 led by Amplify Partners. The company then raised a $35 million Series B led by Coatue in December 2021. In December 2022, it raised a $50 million Series C at a $500 million valuation, led by Felicis Ventures with participation from Madrona, Guillermo Rauch (CEO of Vercel), Amjad Masad (CEO of Replit), Howie Liu (CEO of Airtable), Soumith Chintala (co-founder and lead of PyTorch), Lukas Biewald (CEO of Weights & Biases), and Jay Simons (former President of Atlassian).

In June 2023, Runway announced a $141 million extension to its Series C at a $1.5 billion valuation, from investors including Google, NVIDIA, and Salesforce. Valenzuela explained that the funding would be used to accelerate Runway’s AI research efforts, which feed into new product releases for its customers. In July 2024, Runway was reported to be in talks with General Atlantic to raise a $450 million Series D at a valuation of around $4 billion.

Key Opportunities

AI Product Pipeline

Runway’s AI product pipeline had grown the number of AI Magic Tools to more than 30 as of February 2023. The company has built a product culture that translates AI research into product features and is well-positioned to capitalize on AI developments for video creation and editing. Its product announcement videos have garnered millions of views online.

Runway’s research is focused on general world models, which aim to produce systems that “understand the underlying dynamics of the visual world”. Current generative video and image models still struggle with token prediction, which makes creative outcomes somewhat unpredictable. Germanidis sees this as an opportunity for Runway’s AI tools, believing the next generation of models will have a comprehensive, logically sound, physically accurate, and spatially aware understanding of the world:

“As a thought experiment, imagine you’re writing a novel with an AI. You’ve described a vintage sports car, hurtling down a mountain pass. The driver is experienced. They’re taking each turn with ease and finesse. In the distance, the antagonist presses a big red button on their remote control. This is what you tell the AI, and as a result, something happens within this world that is not immediately obvious. At the next turn, the driver goes to drift around the bend and, finding no traction from the brakes, goes careening off the deadly drop on one side. Several pages ago, you described the antagonist placing a remote detonation device inside the car. You told the AI that it was placed above the brake cable and that it was full of acid. Nothing else. The AI, assuming that the remote control and the remote detonation device were related, and knowing that acid is a liquid that will flow downwards when not contained, that this liquid can melt things including metal, such as the brake cable, causing it to no longer be intact, plus the fact that if the brake cable isn’t intact when a car tries to drift then the car will move differently to how the driver is expecting, decides to expire the driver and reduce the resale value of the $7 million Ferrari.”

Democratizing Movie-making

Tools like Figma and Canva have made graphic design accessible to the general public. Valenzuela believes AI tools will have a similar effect on filmmaking, making the medium more accessible and enabling more people to express their creativity.

As of September 2024, the cost of producing high-quality video content typically ranged from $2K for simple projects to $50K for complex ones, while Hollywood productions cost hundreds of millions of dollars, putting the medium out of reach for most individuals. Runway makes video creation more accessible by enabling creators to produce professional-quality videos without a Hollywood budget. By empowering the growing community of independent filmmakers and content creators, Runway could tap into a previously underserved market and expand its customer base.

Artists like Paul Trillo have managed to leverage AI to produce short movies like “Absolve” and “Notes to My Future Self”. Trillo noted that shooting a comparable production with traditional sets, actors, and locations would take months and cost thousands of dollars. Runway is well-positioned in the amateur filmmaker market with projects like the collaboration with the 2024 Tribeca Film Festival to screen several featured AI-generated short films. Runway has also been used in the production of films, such as the award-winning "Everything Everywhere All at Once".

Key Risks

Legal Uncertainties

Generative AI models face potential legal risks due to the use of copyrighted material in training data, especially when trained on publicly available content from the web. Runway has been accused of using copyrighted material from popular YouTube channels to train its AI models, and in 2024, the company was named in a class-action lawsuit alongside other AI firms. The lawsuit alleges that these companies violated copyrights by scraping publicly posted artworks without permission.

Runway and its co-defendants argue that their models generate entirely new creations and do not replicate original works, emphasizing that artistic style is not copyrightable. However, courts have increasingly rejected fair use defenses in similar cases, citing concerns about AI tools replicating artists' unique styles without consent, which presents a legal risk for Runway. The company states that it addressed copyright issues by consulting with artists during model development, though details remain unclear. Additionally, Runway employs content moderation systems and uses C2PA authentication to verify the origins of media created with its Gen-3 models, aiming to mitigate potential legal and ethical challenges.

Mobile-First vs. Web-First

Runway's browser-first approach has been a competitive advantage against established players like Apple and Adobe. However, this strategy could face limitations as the demand for mobile-native content creation grows. The rapid rise of CapCut, launched in 2020 and achieving 357 million global downloads by 2022, underscores a shift toward mobile-first editing. With the increasing popularity of platforms like TikTok, Reels, and YouTube Shorts, mobile-based editing tools may come to dominate the digital content creation ecosystem, potentially limiting Runway's growth in this emerging landscape.

Commoditization of Models

Runway’s products, including its AI Magic Tools that streamline tedious workflows, have been a major draw for its customers. However, as AI becomes ubiquitous in software, a trend reflected in an August 2024 survey, this differentiation may erode. Features like AI-assisted workflows could become standard across editing platforms as the cost of running AI models decreases. Apple's optimizations for running Stable Diffusion with Core ML exemplify how advances in hardware and model optimization are enabling AI models to run locally rather than in the cloud. This shift could allow traditional editing software to incorporate similar AI capabilities at lower marginal cost, challenging Runway’s competitive position.

Summary

Runway is bringing the disruption of cloud computing, browser-based software, and generative AI to the video editing market. Its product enables users to create and edit video content quickly and collaboratively, lowering the cost and barriers to creating high-quality content. Runway not only builds the interface for its AI Magic Tools but also actively participates in open-source research to expand access to AI. As video continues to grow as a format, with Instagram Reels and YouTube Shorts highlighting major platforms' focus on short-form video, creators' demand for professional editing software will likely continue to increase.