Thesis

In 2023, machine learning (ML) practitioners face the same challenges in rapidly deploying, maintaining, and monitoring models that software developers faced 20 years ago when deploying applications. This is not for lack of investment: AI adoption doubled between 2017 and 2022, yet 87% of data science projects still fail to make it to production, and even successful ML projects take an average of 7 months to deploy. Software developers have an entire toolchain for deploying and reusing code, and software engineering teams that embrace DevOps practices deploy 208x more frequently and 106x faster. ML practitioners need similar solutions. The MLOps market is nascent and highly fragmented, estimated at $612 million in 2021 and projected to reach $6 billion by 2028.

In this landscape, Weights & Biases (W&B) has emerged as a potential solution for ML practitioners. Recognizing that significant tooling is needed to successfully deploy and manage ML models, W&B has built a comprehensive platform for experiment tracking, collaboration, performance visualization, hyperparameter tuning, and more. By applying DevOps principles tailored to the ML domain (i.e., MLOps), W&B aims to help ML practitioners improve their workflows, accelerate deployment, and increase the chances of successful model implementation. As AI adoption continues to rise and the MLOps market matures, W&B can play a vital role in the transition toward more agile and successful ML model deployment.

Founding Story

Weights & Biases (W&B) was founded in 2017 by Lukas Biewald and Chris Van Pelt. The two had previously co-founded CrowdFlower (later renamed Figure Eight) and spent almost a decade building it together; the company provides high-quality annotated training data for machine learning.

Biewald had been a machine learning practitioner since 2002, when he built his first machine learning algorithm in a Stanford class. By 2007 he was convinced that the biggest problem in machine learning was access to training data, which led him to found Figure Eight. After a decade at Figure Eight, Biewald took an internship at OpenAI. There, he found that the tooling for model development made it hard to modify models directly or even understand what they were doing: small changes to a model could alter its behavior entirely.

Biewald asked Van Pelt for help building tools for model development, and Van Pelt created an early prototype of what would become Weights & Biases. Whereas in 2007 they had believed access to training data was the main blocker to progress in ML, by 2017 they were convinced that the blocker was instead “a lack of basic software and best practices to manage a completely new style of coding.” They started Weights & Biases to solve that problem.

Shawn Lewis, another second-time founder, who knew Biewald because he had been in the same Y Combinator batch as Biewald’s wife, joined as the third co-founder. Together, they set out to fill the gaps in the machine learning development process with a suite of development tools. The team initially focused on experiment tracking, or logging what happens during training. Their first product recorded and visualized the training process, making it possible for developers to go back and see how a model evolved over time. Their earliest users were developers at OpenAI, Toyota Research, and Uber.

In May 2018, they announced a $5 million funding round led by Dan Scholnick at Trinity Ventures, who had also backed Biewald and Van Pelt at Figure Eight. The company's name refers to the typical goal of training a model: finding a set of “weights and biases” that produces a model with low loss (the quantified discrepancy between predicted values and actual values).
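The naming becomes concrete with the simplest possible model, a line y = w·x + b: training means searching for the weight w and bias b that minimize a loss such as mean squared error. The sketch below is a generic gradient-descent example in plain Python, not W&B code; the toy data, learning rate, and step count are illustrative assumptions.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error between predictions w*x + b and targets y."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_line(xs, ys, lr=0.05, steps=2000):
    """Find a weight and bias that minimize MSE using plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of the MSE loss with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step both parameters against the gradient to reduce the loss.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (illustrative only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w, b = fit_line(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, loss={mse_loss(w, b, xs, ys):.6f}")
```

Because the data were generated from y = 2x + 1, the loop should recover w ≈ 2 and b ≈ 1 with a loss near zero. Real models have millions or billions of such parameters, which is why tracking how they evolve during training matters.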

Product

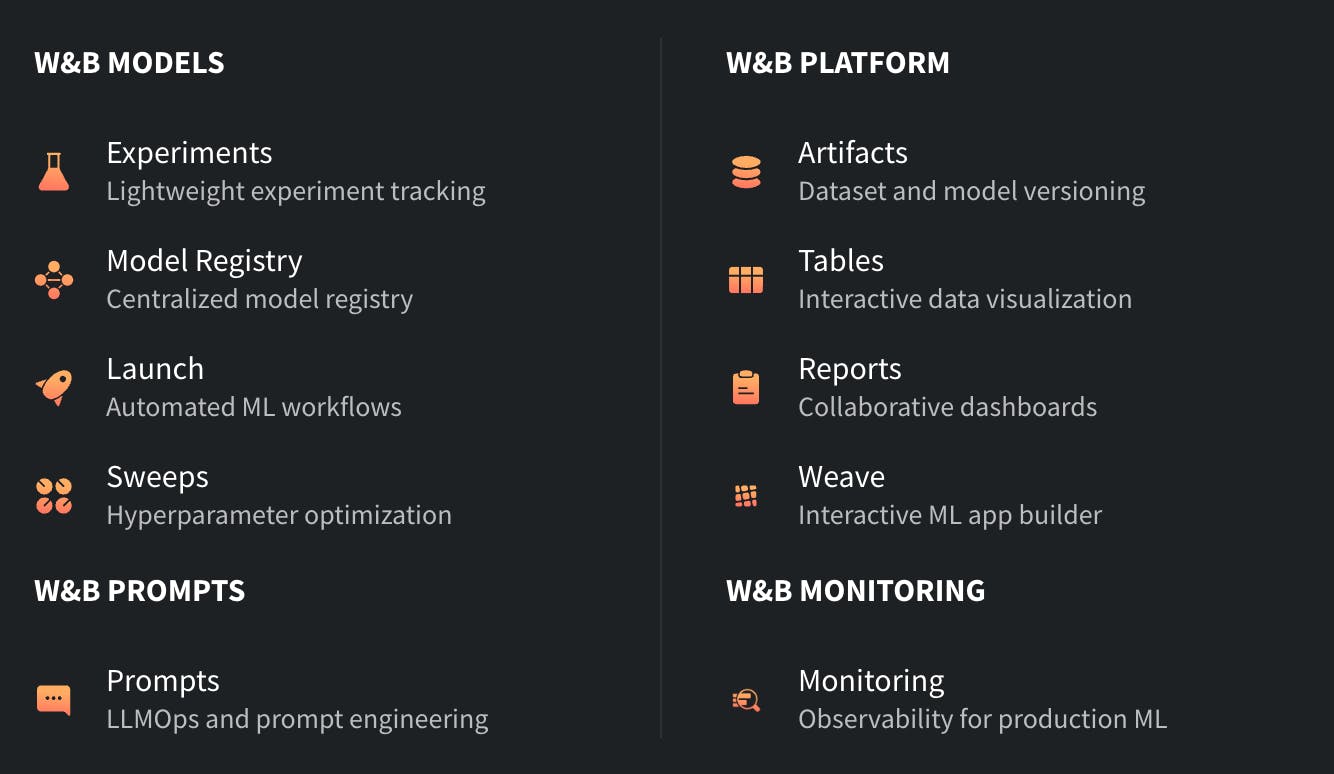

W&B seeks to be an end-to-end solution for ML projects, streamlining the entire process from development to deployment. The platform spans (1) experiment tracking (logging any change to any model), (2) visualization (helping users understand model behavior and performance), (3) collaboration, (4) hyperparameter optimization, (5) model comparison, (6) model deployment and monitoring, (7) building ML and data apps, and more. In April 2023, W&B launched a new product targeted at LLM-specific workflows, often called LLMOps.

Developing and deploying an ML model is a complex process. Just as a cook developing a new recipe needs to keep track of the ingredients, the steps, and how the food turns out, ML practitioners need to keep track of which datasets they train on, which techniques and hyperparameters they use, and how the model performs. These elements are key to building a well-performing model.

W&B aims to be the central source of truth where ML practitioners keep track of all of this. That helps engineers understand how the changes they make affect their model and guides them toward the right improvements. W&B’s product offerings fall into four main buckets:

Traditional ML Models

Platform

Monitoring

W&B for LLMs (extending the three buckets above to LLM use cases)

Source: W&B

W&B Models

Experiment Tracking

Source: W&B

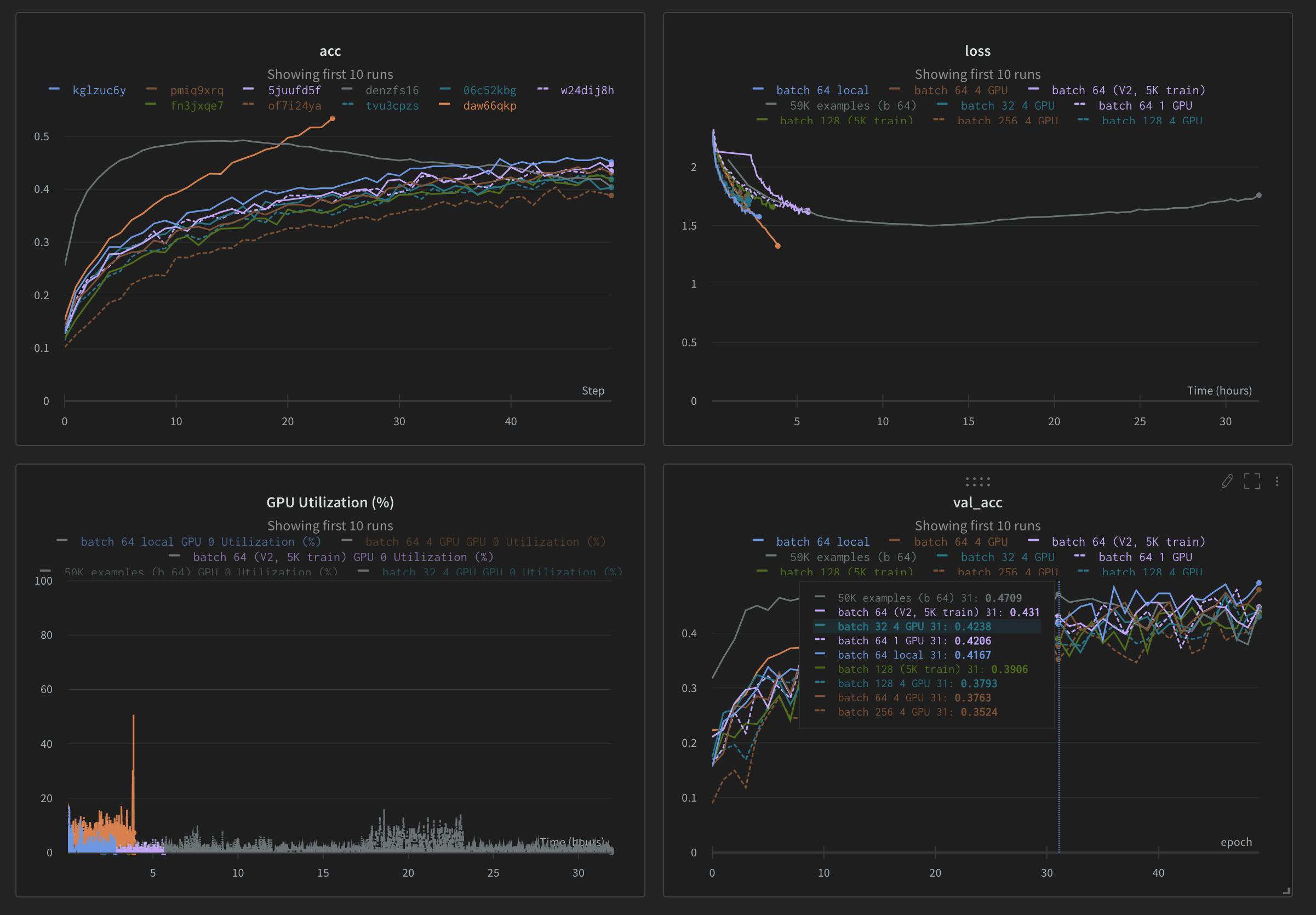

One problem ML practitioners face is that it can be difficult to keep track of every iteration of a model as they adjust and improve it. W&B’s experiment tracking product aims to be “the system of record for your model training.” With as few as five lines of code, practitioners can add experiment logging that lets them track, compare, and visualize their models. Model inputs and hyperparameters are easy to save, and any model's performance can be graphed against any other's, making comparison straightforward.
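To illustrate what experiment tracking records, here is a toy, in-memory tracker: each run stores its configuration (hyperparameters) and a step-by-step history of metrics, and runs can then be compared on a metric. In W&B's own Python client the corresponding entry points are `wandb.init` (which accepts a `config`) and `wandb.log`; the `Run` class and `best_run` helper below are hypothetical stand-ins that show the idea without a W&B account, and the loss values are made up.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Run:
    """Hypothetical minimal experiment record: config in, metric history out."""
    name: str
    config: dict
    history: list = field(default_factory=list)

    def log(self, metrics: dict) -> None:
        # Each call appends one step, mirroring per-step metric logging.
        self.history.append({"_step": len(self.history), **metrics})

    def summary(self, metric: str, mode: str = "min") -> float:
        # Best value this run achieved for the given metric.
        values = [row[metric] for row in self.history if metric in row]
        return min(values) if mode == "min" else max(values)


def best_run(runs, metric, mode="min"):
    """Compare runs by the best value each achieved for one metric."""
    key = lambda r: r.summary(metric, mode)
    return min(runs, key=key) if mode == "min" else max(runs, key=key)


# Two illustrative runs with different learning rates and fake losses.
runs = []
for lr, losses in [(0.1, [0.9, 0.5, 0.4]), (0.01, [0.9, 0.7, 0.6])]:
    run = Run(name=f"lr={lr}", config={"learning_rate": lr})
    for loss in losses:
        run.log({"loss": loss})
    runs.append(run)

winner = best_run(runs, "loss")
print(winner.name, json.dumps(winner.config))
```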

W&B’s experiment tracking also provides version control, so users can quickly find and re-run previous model checkpoints. CPU and GPU usage can be monitored to identify training bottlenecks and avoid wasting expensive resources. W&B also supports dataset versioning: logged datasets are versioned automatically, with diffing and deduplication handled behind the scenes.
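Deduplication and diffing of this kind are commonly built on content hashing: each file is identified by a hash of its bytes, so unchanged files are stored once and two versions can be diffed by comparing their file-to-hash manifests. The `DatasetStore` class below is a hypothetical sketch of that general technique, not a description of how W&B implements it internally.

```python
import hashlib


class DatasetStore:
    """Hypothetical content-addressed store: a version is a manifest of file hashes."""

    def __init__(self):
        self.blobs = {}     # hash -> bytes; each unique content is stored once
        self.versions = []  # list of manifests: {filename: hash}

    def commit(self, files: dict) -> int:
        manifest = {}
        for name, data in files.items():
            digest = hashlib.sha256(data).hexdigest()
            # Deduplication: identical content maps to one stored blob.
            self.blobs.setdefault(digest, data)
            manifest[name] = digest
        # If nothing changed, reuse the latest version instead of creating a new one.
        if self.versions and self.versions[-1] == manifest:
            return len(self.versions) - 1
        self.versions.append(manifest)
        return len(self.versions) - 1

    def diff(self, old: int, new: int) -> dict:
        a, b = self.versions[old], self.versions[new]
        return {
            "added": sorted(b.keys() - a.keys()),
            "removed": sorted(a.keys() - b.keys()),
            "modified": sorted(k for k in a.keys() & b.keys() if a[k] != b[k]),
        }


store = DatasetStore()
v0 = store.commit({"train.csv": b"a,b\n1,2\n", "labels.txt": b"cat\ndog\n"})
v1 = store.commit({
    "train.csv": b"a,b\n1,2\n3,4\n",  # modified
    "labels.txt": b"cat\ndog\n",      # unchanged, so not stored again
    "test.csv": b"a,b\n5,6\n",        # added
})
print(store.diff(v0, v1))
print(len(store.blobs))
```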

Model Registry

Source: W&B

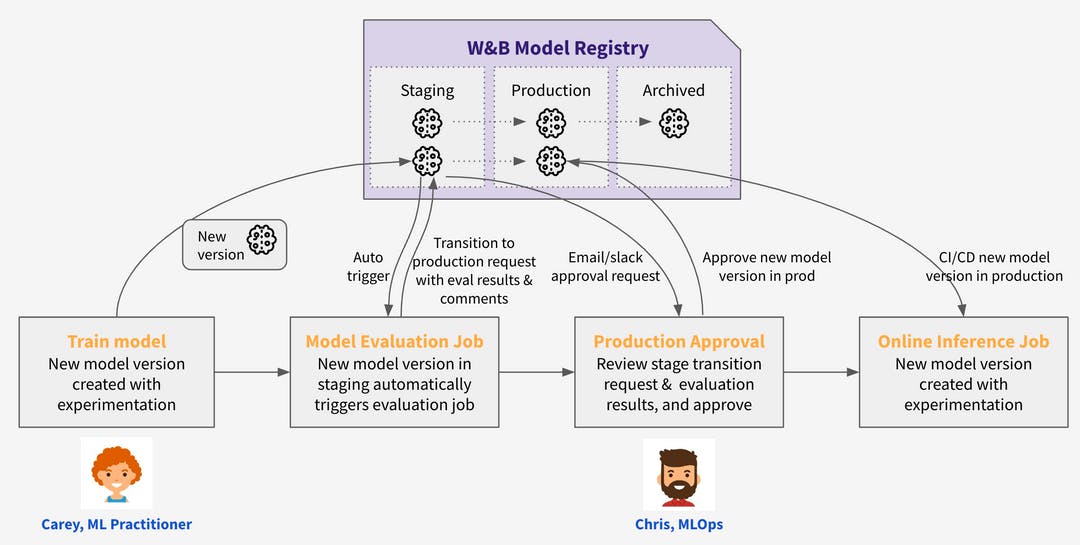

W&B offers a “model registry and lifecycle management” product that lets users collaborate on and track model versions across thousands of projects, millions of experiments, and hundreds of team members. It aims to be a central place from which to “manage all model versions through every lifecycle stage, from development to staging to production.”

The model registry gives users a central source of truth: it makes clear which model is in production and how new candidate models compare, and it shows which datasets a given model was trained on. It also supports model CI/CD and can automatically retrain and re-evaluate models to keep a team's production models fresh. The history of any model update can be easily audited.
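The bookkeeping a registry performs can be sketched in a few lines: each version records the datasets it was trained on, moves through development, staging, and production, and every change is appended to an audit log. The `ModelRegistry` class, the stage names, and the one-production-model rule below are hypothetical simplifications for illustration, not W&B's API.

```python
from datetime import datetime, timezone

STAGES = ("development", "staging", "production")


class ModelRegistry:
    """Hypothetical registry: versions, dataset lineage, stage promotion, audit trail."""

    def __init__(self):
        self.models = {}  # version -> {"datasets": [...], "stage": str}
        self.audit = []   # append-only history: (timestamp, action, version, detail)

    def _record(self, action, version, detail=""):
        self.audit.append((datetime.now(timezone.utc).isoformat(), action, version, detail))

    def register(self, version, datasets):
        self.models[version] = {"datasets": list(datasets), "stage": "development"}
        self._record("register", version)

    def promote(self, version, stage):
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        if stage == "production":
            # Keep a single production model: demote whichever one currently holds it.
            for v, info in self.models.items():
                if info["stage"] == "production":
                    info["stage"] = "staging"
                    self._record("demote", v, "production -> staging")
        self.models[version]["stage"] = stage
        self._record("promote", version, stage)

    def in_production(self):
        return next((v for v, i in self.models.items() if i["stage"] == "production"), None)

    def trained_on(self, version):
        return self.models[version]["datasets"]


registry = ModelRegistry()
registry.register("v1", ["reviews:v3"])
registry.register("v2", ["reviews:v4"])
registry.promote("v1", "production")
registry.promote("v2", "production")  # v1 is demoted to staging
print(registry.in_production(), registry.trained_on("v2"))
print([entry[1:] for entry in registry.audit])
```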

Launch

Source: W&B

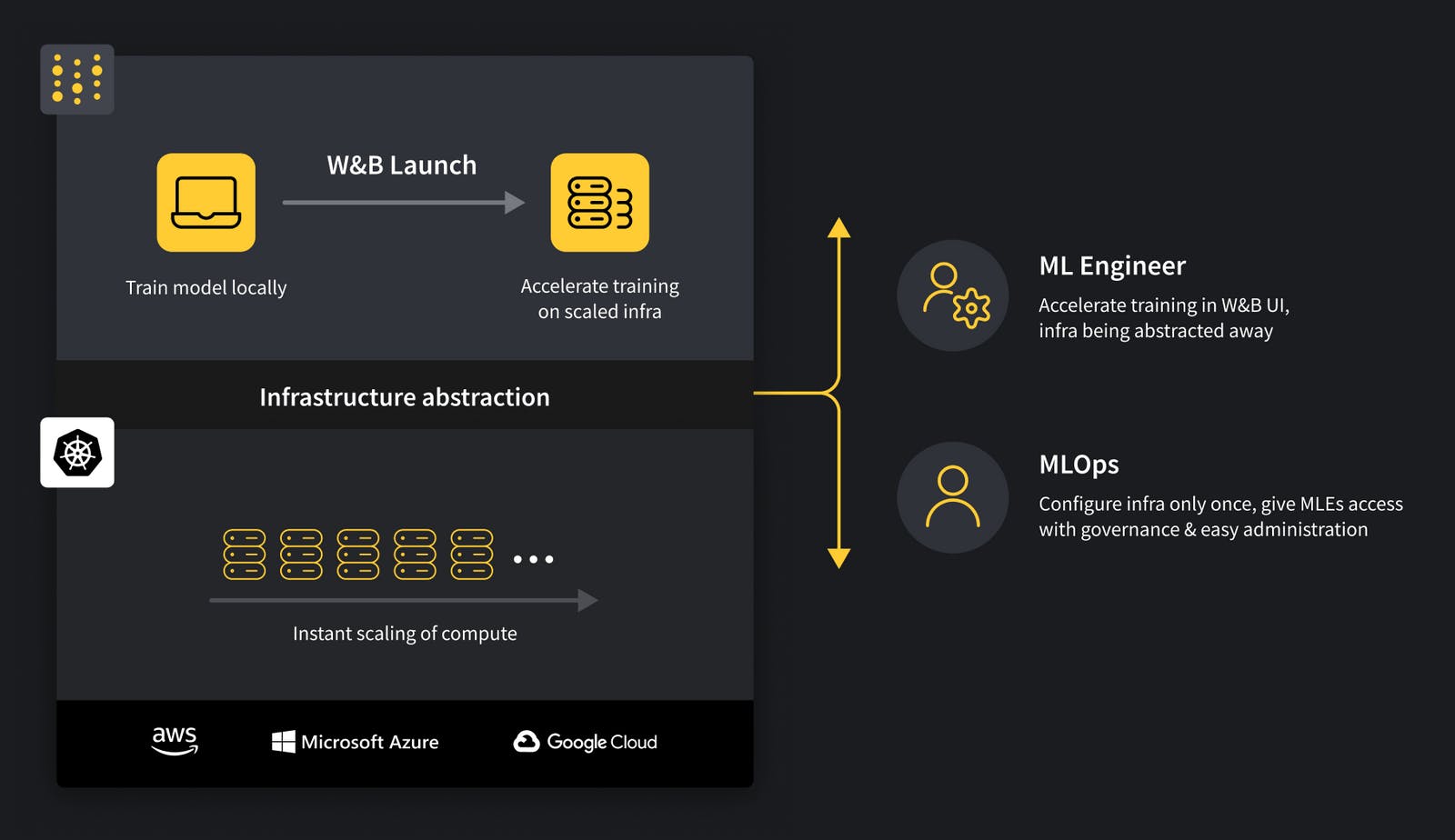

W&B’s Launch product makes it easy to run ML tasks such as training a model. Users can start these tasks in a variety of computing environments, from a personal computer to more powerful setups like computer clusters, and can easily connect to external resources such as faster graphics processing units (GPUs) to improve the speed and efficiency of their machine learning work.

ML practitioners can access the computing resources they need without dealing with the technical complexity of setting up and managing those resources themselves. Meanwhile, MLOps teams (the operations side of machine learning) can monitor how those resources are used to ensure everything runs smoothly. Launch also lets users set up automated processes that continually test and evaluate their machine learning models.

Additionally, Launch seeks to “bridge” the gap between ML practitioners and MLOps, breaking down organizational barriers between the people who develop machine learning models and those responsible for deploying and maintaining them. By providing a shared interface, Launch removes the silos between the two groups and allows them to work together more effectively.

Overall, Launch empowers users to shorten the time it takes to move models from development to actual use in real-world applications.
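W&B's documentation describes Launch in terms of queues, to which jobs are submitted, and agents, which pull jobs from a queue and run them in a particular compute environment. The sketch below illustrates only that general queue-and-agent split; `Job`, `Agent`, the GPU field, and the first-fit routing rule are hypothetical and do not reflect Launch's actual configuration or scheduling.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    """A unit of work, e.g. a training run, with a hypothetical GPU requirement."""
    name: str
    gpus_needed: int


@dataclass
class Agent:
    """A hypothetical agent attached to one compute environment."""
    environment: str
    gpus: int

    def can_run(self, job: Job) -> bool:
        return job.gpus_needed <= self.gpus


def dispatch(queue: deque, agents: list) -> dict:
    """Route each queued job to the first agent with enough GPUs.

    Jobs no agent can handle stay in the queue. This ignores concurrency and
    fairness; it only illustrates separating job submission from execution.
    """
    assignments = {}
    unassigned = deque()
    while queue:
        job = queue.popleft()
        agent = next((a for a in agents if a.can_run(job)), None)
        if agent is None:
            unassigned.append(job)
        else:
            assignments[job.name] = agent.environment
    queue.extend(unassigned)
    return assignments


agents = [Agent("laptop", gpus=0), Agent("gpu-cluster", gpus=8)]
queue = deque([Job("evaluate", 0), Job("fine-tune", 4), Job("pretrain", 64)])
assignments = dispatch(queue, agents)
print(assignments)
print([job.name for job in queue])
```

The unassigned job stays queued until an environment large enough to run it is attached, which mirrors the division of labor described above: practitioners submit work, while the operations side decides which environments exist.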

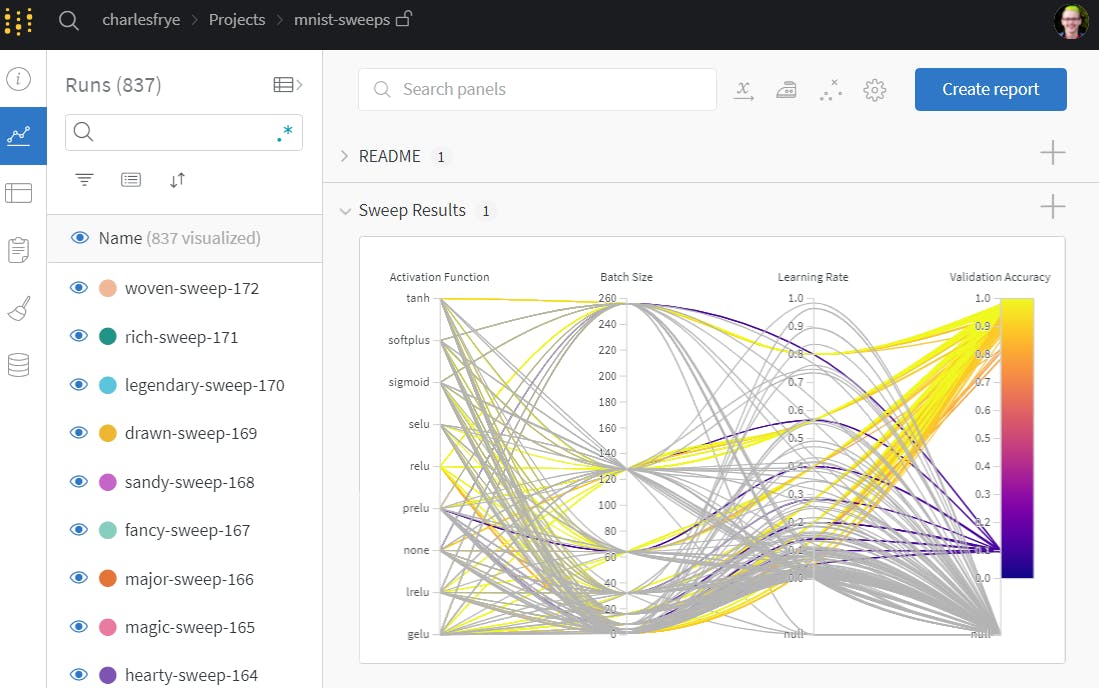

Sweeps

Source: W&B

Sweeps is a W&B product that automates and optimizes hyperparameter tuning for machine learning models. Hyperparameters are settings that ML practitioners choose before training a model, such as the learning rate, batch size, number of layers in a neural network, and regularization strength. They control various aspects of the training process and significantly influence the model's performance and behavior, so tuning them well is key to developing any ML model. However, these settings interact in complex ways, and tuning them manually can be time-consuming and prone to human bias.

Sweeps automates the search for the best combination of hyperparameters (i.e., hyperparameter tuning) for a machine learning model. Instead of manually trying different settings one by one, Sweeps explores a range of values for each hyperparameter (the practitioner sets both the ranges and the search algorithm), helping practitioners find the best-performing configuration faster and more efficiently. It also offers a visualization module that shows which hyperparameters affect which metrics the most.
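W&B Sweeps supports grid, random, and Bayesian search methods. The sketch below shows the simplest of these, random search, in plain Python against a made-up objective, plus a crude “importance” score (the absolute correlation between each hyperparameter and the loss). The objective, search ranges, and importance measure are illustrative assumptions; W&B's own importance panel may compute things differently.

```python
import math
import random


def objective(lr, batch_size):
    """Made-up stand-in for 'train a model, return validation loss'."""
    # By construction, loss falls as lr approaches 1e-2; batch size matters only slightly.
    return (math.log10(lr) + 2) ** 2 + 0.001 * batch_size


def random_search(n_trials, seed=0):
    """Sample hyperparameters at random and record the loss of each trial."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        params = {
            "lr": 10 ** rng.uniform(-4, -2),  # log-uniform between 1e-4 and 1e-2
            "batch_size": rng.choice([16, 32, 64, 128]),
        }
        trials.append({**params, "loss": objective(**params)})
    return trials


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


trials = random_search(200)
best = min(trials, key=lambda t: t["loss"])
losses = [t["loss"] for t in trials]
# Crude importance score: |correlation| between each hyperparameter and the loss.
importance = {
    "lr": abs(pearson([math.log10(t["lr"]) for t in trials], losses)),
    "batch_size": abs(pearson([t["batch_size"] for t in trials], losses)),
}
print(best)
print(importance)
```

Random search is a reasonable baseline because it covers wide ranges cheaply; Bayesian methods instead use past trials to choose the next one, which tends to help when each training run is expensive.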

W&B Platform

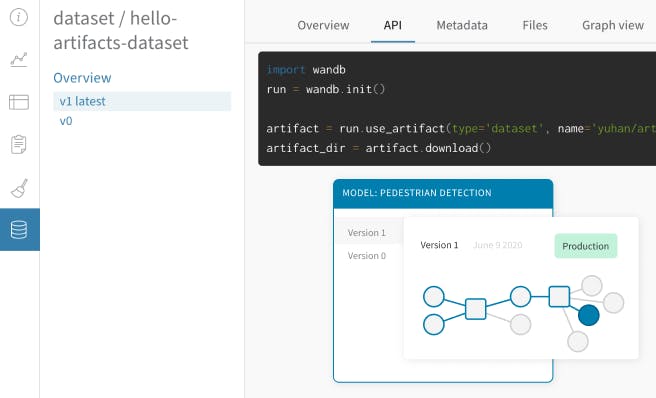

Artifacts

Source: W&B

ML practitioners also need to keep track of changes to their datasets and models. W&B Artifacts provides lightweight dataset and model versioning: when a new version of a dataset is logged, Artifacts automatically compares it with the previous version to identify what changed. Artifacts also stores model checkpoints and lets users compare model versions to identify the best candidate for production. Finally, it improves observability by tracing the flow of data through the pipeline, so users know exactly which datasets feed into which models.
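Lineage tracking can be modeled as a graph in which each run consumes input artifacts and produces output artifacts; answering “which datasets fed this model?” is then a walk upstream through the graph. In W&B's Python client a run declares inputs with `use_artifact` and outputs with `log_artifact`; the `LineageGraph` class below is a hypothetical, self-contained sketch of the traversal, and the artifact names are made up.

```python
from collections import defaultdict


class LineageGraph:
    """Hypothetical lineage graph: runs consume input artifacts and produce outputs."""

    def __init__(self):
        self.produced_from = defaultdict(set)  # artifact -> artifacts it was derived from

    def record_run(self, inputs, outputs):
        for out in outputs:
            self.produced_from[out].update(inputs)

    def upstream(self, artifact):
        """All artifacts that (transitively) fed into `artifact`."""
        seen, stack = set(), [artifact]
        while stack:
            # .get avoids inserting empty entries into the defaultdict.
            for parent in self.produced_from.get(stack.pop(), ()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen


g = LineageGraph()
g.record_run(inputs=["raw-images:v2"], outputs=["clean-images:v1"])
g.record_run(inputs=["clean-images:v1", "labels:v5"], outputs=["classifier:v3"])
print(sorted(g.upstream("classifier:v3")))
```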

Tables

Exploratory data analysis (EDA) is vital for machine learning because it uncovers hidden patterns, outliers, and relationships within the data, helping to guide feature selection, preprocessing, and model design. By thoroughly understanding the data through EDA, machine learning practitioners can make informed decisions that improve model accuracy and robustness. However, performing EDA manually can be tedious and time-consuming, since it involves building visualizations and charts by hand.

W&B Tables makes EDA easier so that practitioners can spend more time deriving insights and less time building charts manually. It lets users visualize datasets and query rows of interest, and users can group, sort, and filter rows, add calculated columns, and create charts from tabular data in a streamlined manner.
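The operations listed above map onto familiar data-frame steps. The plain-Python sketch below applies them to a few made-up evaluation rows: a calculated column, a filter and sort to surface confident mistakes, and a group-by for per-class accuracy. In W&B itself such rows would typically be logged as a `wandb.Table` and explored interactively; the code only illustrates the kind of query involved.

```python
from collections import defaultdict

# Illustrative evaluation rows, one per example (made-up data).
rows = [
    {"id": 1, "label": "cat", "pred": "cat", "confidence": 0.92},
    {"id": 2, "label": "dog", "pred": "cat", "confidence": 0.55},
    {"id": 3, "label": "dog", "pred": "dog", "confidence": 0.88},
    {"id": 4, "label": "cat", "pred": "dog", "confidence": 0.61},
    {"id": 5, "label": "dog", "pred": "dog", "confidence": 0.97},
]

# Calculated column: was the prediction correct?
for row in rows:
    row["correct"] = row["label"] == row["pred"]

# Filter + sort: misclassified rows, most confident mistakes first.
errors = sorted((r for r in rows if not r["correct"]), key=lambda r: -r["confidence"])

# Group: accuracy per true label.
groups = defaultdict(list)
for row in rows:
    groups[row["label"]].append(row["correct"])
accuracy = {label: sum(flags) / len(flags) for label, flags in groups.items()}

print([r["id"] for r in errors])
print(accuracy)
```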

Reports

ML practitioners often work in teams, and W&B Reports makes it easy to share findings and updates across teams, allowing for “collaborative analysis in live dashboards.” It enables the sharing of graphs, notes, and dynamic experiments with flexible formats. Collaborators can be invited to edit and comment on these shared reports.

Weave

Weave is “a visual, interactive, and composable way of building ML & data apps.” It turns raw data tables into dynamic, customizable, interactive dashboards, allowing seamless transitions between writing code and designing a user interface. Users can use Weave to build end-to-end ML workflows, including data exploration, experiments, evaluation, monitoring, and more. It can be thought of as a “Retool” designed specifically for ML.

W&B Monitoring

Production Monitoring

W&B’s Production Monitoring product is a single system of record for development, staging, and production data. With a central source of truth, users can compare development and production outputs to uncover what went wrong when they diverge. It provides interactive analytics and training-time context for rapid diagnosis and improvement. Monitoring comes with built-in analysis of drift and decay for LLM, computer vision, and time-series models, and users can create custom automations and alerts for model behavior and performance.
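Drift analysis compares the distribution of a feature or model score in production against a reference such as the training data. One widely used metric is the Population Stability Index (PSI), shown below as a plain-Python sketch. The source does not say whether W&B's built-in drift analysis uses PSI, so treat this as an illustration of the technique; the bin edges and sample data are assumptions.

```python
import math


def histogram(values, edges):
    """Fraction of values in each [edges[i], edges[i+1]) bin; the last bin is closed.

    Values outside the edges are ignored, so edges should cover the data.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(edges) - 1):
            last = i == len(edges) - 2
            if edges[i] <= v < edges[i + 1] or (last and v == edges[-1]):
                counts[i] += 1
                break
    total = sum(counts)
    return [c / total for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a reference and a production sample."""
    p = histogram(expected, edges)
    q = histogram(actual, edges)
    # eps avoids log(0) and division by zero for empty bins.
    return sum((qi - pi) * math.log((qi + eps) / (pi + eps)) for pi, qi in zip(p, q))


edges = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
train = [i / 100 for i in range(100)]           # spread evenly over [0, 1)
stable = [(i + 0.5) / 100 for i in range(100)]  # same shape, slightly offset
drifted = [0.5 + i / 200 for i in range(100)]   # mass pushed into the upper range
print(round(psi(train, stable, edges), 4), round(psi(train, drifted, edges), 2))
```

A commonly cited rule of thumb reads PSI below 0.1 as little change, 0.1 to 0.25 as moderate shift, and above 0.25 as significant drift, though thresholds are usually tuned per use case.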

W&B for LLMs

The recent rapid proliferation of Large Language Models (LLMs) has created demand for effective tools and platforms. In response, Weights & Biases has developed specialized products, as well as extensions of existing ones, for managing, optimizing, and fine-tuning these models.

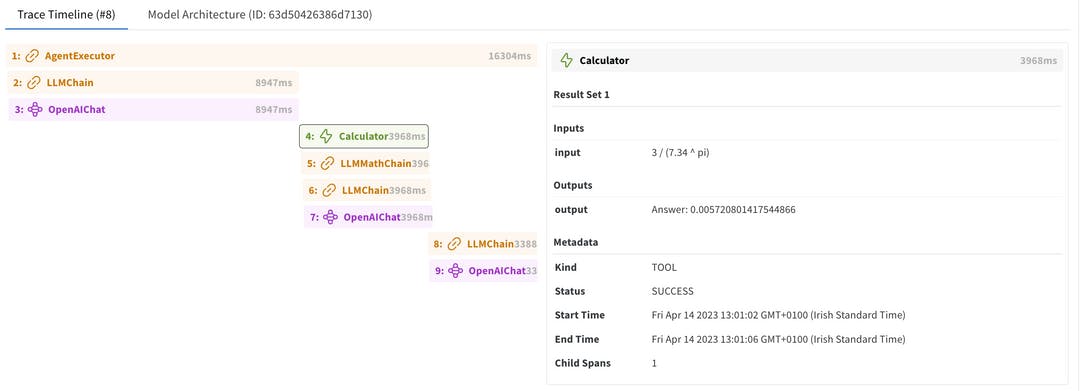

Prompts

Source: W&B

W&B’s Prompts offering helps LLM builders understand every step of their LLM programs so they can fine-tune, prompt-engineer, and debug the latest foundation models. Prompts are the instructions or queries given to LLMs to generate outputs, answer questions, or perform other language tasks, and prompt engineering involves crafting them strategically to get the desired outcomes from a model.

Prompts enables users to design and experiment with prompts in a systematic and efficient manner. It allows users to easily review past results, identify and debug errors, gather insights about model behavior, and share learnings with colleagues.

When practitioners engage in prompt engineering, they need to understand how the components of their chain are configured as they iterate and analyze results. The “Model Architecture” view provides a comprehensive overview of all the settings, tools, agents, and prompt details within a given chain's setup.

Prompts also automatically records a user’s interactions with the OpenAI API and presents this data in configured dashboards for analysis within the app. The Weave Toolkit (described above in the W&B Platform subsection) lets users derive their own insights interactively, all in one central location.
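The idea of tracing every step of an LLM program can be sketched with a decorator that records each component's inputs, output, and latency into a trace. The `traced` decorator, the `TRACE` list, and the stubbed retriever and model below are all hypothetical; no real LLM API is called, and W&B's actual Prompts integration works through its own client and framework integrations.

```python
import functools
import time

TRACE = []  # one record per traced call, appended in completion order


def traced(kind):
    """Decorator that logs inputs, output, and latency of a chain component."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(*args, **kwargs):
            start = time.perf_counter()
            result = fn(*args, **kwargs)
            TRACE.append({
                "component": fn.__name__,
                "kind": kind,
                "inputs": {"args": args, "kwargs": kwargs},
                "output": result,
                "latency_s": time.perf_counter() - start,
            })
            return result
        return inner
    return wrap


@traced("retriever")
def retrieve(question):
    # Stand-in for a vector-store lookup.
    return ["W&B was founded in 2017."]


@traced("prompt")
def build_prompt(question, docs):
    return f"Context: {' '.join(docs)}\nQuestion: {question}\nAnswer:"


@traced("llm")
def fake_llm(prompt):
    # Stand-in for an LLM API call; no network request is made.
    return "2017"


@traced("chain")
def answer(question):
    docs = retrieve(question)
    return fake_llm(build_prompt(question, docs))


result = answer("When was W&B founded?")
print(result)
for record in TRACE:
    print(record["component"], record["kind"], repr(record["output"])[:40])
```

Because inner steps finish before the chain that calls them, the outer `answer` record is appended last; a production tracer would also store parent/child links so the nesting can be displayed as a tree.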

Launch for LLMs

W&B has also extended its Launch product (described above in the W&B Models subsection) to address LLM-specific needs. Launch simplifies running evaluations from OpenAI Evals, a collection of evaluation suites for assessing language models, by handling everything the evaluation requires, including logging evaluation data to W&B Tables for visualization and generating collaborative reports. OpenAI integration is also streamlined for easy logging of model inputs and outputs.
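At its core, an evaluation of this kind runs a model over samples with known ideal answers, scores each output, and produces per-sample rows plus an aggregate metric; the rows are what would be logged to a table for inspection. The sketch below uses simple exact-match scoring; `run_eval`, `toy_model`, and the samples are hypothetical, and OpenAI Evals supports other scoring methods as well.

```python
def run_eval(model, samples):
    """Score a model on samples with known ideal answers using exact match."""
    rows = []
    for sample in samples:
        output = model(sample["input"]).strip()
        rows.append({
            "input": sample["input"],
            "ideal": sample["ideal"],
            "output": output,
            "correct": output == sample["ideal"],
        })
    accuracy = sum(r["correct"] for r in rows) / len(rows)
    return rows, accuracy


def toy_model(prompt):
    """Made-up stand-in for a language model."""
    answers = {"2 + 2 = ?": "4", "Capital of France?": "Paris"}
    return answers.get(prompt, "I don't know")


samples = [
    {"input": "2 + 2 = ?", "ideal": "4"},
    {"input": "Capital of France?", "ideal": "Paris"},
    {"input": "Largest planet?", "ideal": "Jupiter"},
]
rows, accuracy = run_eval(toy_model, samples)
print(f"accuracy = {accuracy:.2f}")
for row in rows:
    print(row)
```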

Market

Customer

W&B serves both ML teams within companies and individual ML practitioners. Within companies, W&B caters to data science teams, machine learning engineers, and data engineers, providing a platform for streamlined experiment tracking and optimization as well as collaboration across team members.

W&B is useful for individual ML practitioners as well. Individual researchers, including academics, can use its experiment tracking features to keep a record of their work, improving transparency and reproducibility. Freelancers and consultants can use W&B to manage and showcase their projects to clients. Educators teaching machine learning can incorporate W&B into their curricula to demonstrate practical experiment tracking and optimization techniques, preparing students for real-world applications.

Large enterprise customers often demand a high level of security, so W&B supports all major cloud, on-prem, and hybrid deployment models. The platform offers robust identity and access management, security and compliance monitoring, and integrations with cloud security services.

Market Size

The MLOps market is nascent and highly fragmented, estimated at $612 million in 2021 and projected to reach $6 billion by 2028. Gartner indicated in 2022 that it was tracking over 300 MLOps companies, and ML researcher Chip Huyen counted 284 MLOps tools in 2020, 180 of which were startups. Most notable AI startups focus on applications or verticalized AI: among the Forbes 50 AI startups in 2019, only seven were tooling companies. With the increasing focus on data-centric AI, more tools are being built for data labeling, data management, and more; of the MLOps startups that raised money in 2020, 30% were in data pipelines.
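For a sense of scale, the two market figures imply a compound annual growth rate (CAGR) of roughly 38.6%, using CAGR = (end / start)^(1 / years) − 1 over the seven years from 2021 to 2028. The small calculation below reproduces that arithmetic from the figures quoted above.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by growing start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $612M (2021) -> $6B (2028), i.e. 7 years of growth.
rate = cagr(612e6, 6e9, 2028 - 2021)
print(f"implied CAGR: {rate:.1%}")
```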

Competition

Build vs Buy

A main consideration for W&B is that ML teams may choose to build in-house MLOps tooling for model development and monitoring. W&B dedicates a full page on its website to this build vs. buy decision, citing the following advantages of buying W&B over building in-house:

Scale: W&B can handle thousands of experiments, while in-house solutions may not have the same capacity

Cost: Building in-house is expensive. It can take multiple engineers, and the average engineer in the US costs $125K per year in salary, so dedicating 5 engineers to an in-house solution for a year would cost $625K+. In-house systems can also incur large ongoing costs, since engineers must be dedicated to maintaining them. Meanwhile, W&B has >99.95% uptime and 50+ engineers, as well as customer success and support teams that continually handle maintenance and iteration.

Time To Value: W&B can be up and running in a matter of weeks (minutes for smaller use cases) while building an in-house solution from scratch can take 6-12 months

Interoperability: it’s hard to ensure that different systems can coexist with each other in one MLOps platform if you build it from scratch in house, while W&B is already integrated into every popular ML framework and over 9K ML repos.

Social Proof: Leading ML companies like NVIDIA use W&B even though they could have built their own solution, and over 500K ML practitioners use W&B
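The cost argument above is simple arithmetic, sketched below. The build figures (5 engineers, one year, $125K salary) come from the comparison above; the maintenance staffing of 2 engineers for 2 further years is a hypothetical assumption added for illustration, and the function counts salary only, so it understates total cost.

```python
def in_house_cost(build_engineers, build_years, maint_engineers, maint_years, salary=125_000):
    """Salary-only cost of building and then maintaining an in-house MLOps tool.

    Ignores benefits, infrastructure, and opportunity cost, so it understates the total.
    """
    build = build_engineers * build_years * salary
    maintain = maint_engineers * maint_years * salary
    return build, maintain, build + maintain


# Build figures from the comparison above (5 engineers for 1 year at $125K).
# The maintenance staffing (2 engineers for 2 years) is a hypothetical assumption.
build, maintain, total = in_house_cost(5, 1, 2, 2)
print(f"build ${build:,}, maintenance ${maintain:,}, total ${total:,}")
# build $625,000, maintenance $500,000, total $1,125,000
```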

Beyond the Build vs Buy equation, W&B has numerous competitors in the MLOps space, across both open and closed source:

Closed Source:

DataRobot: Founded in 2012, DataRobot’s platform democratizes data science with end-to-end automation for building, deploying, and managing machine learning models. It has raised $1 billion to date. Most recently, in July 2021, it raised a $300 million Series G led by Altimeter and Tiger Global. DataRobot has sharply pivoted to be largely focused on generative AI in recent months, while W&B still emphasizes its support for all ML workflows, with less special emphasis on Generative AI or LLMs.

Comet ML: Founded in 2017, Comet ML allows data scientists to automatically track their datasets, code changes, experimentation history, and production models. It has raised $70 million to date, most recently with a $50 million Series C in November 2021 led by OpenView. Comet ML and W&B have very similar offerings, but W&B emphasizes hyperparameter tuning, whereas Comet focuses more on version control and monitoring.

Arthur: Founded in 2018, Arthur is a platform that monitors, measures, and improves the performance of machine learning models. It has raised $60 million to date, most recently a $42 million Series B in September 2022 led by Acrew Capital and Greycroft. W&B is a broader solution spanning all the workflows that go into building and deploying a machine learning model, while Arthur focuses more on model evaluation and is used mostly for LLMs.

Fiddler: Founded in 2018, Fiddler provides enterprise model performance management software that allows monitoring, explaining, analyzing, and improving ML models. It has raised $45 million to date. Most recently, in June 2021, it raised a $32 million Series B led by Insight Partners, with participation from Lightspeed Venture Partners, Lux Capital, Haystack Ventures, Bloomberg Beta, Lockheed Martin, and The Alexa Fund. Fiddler AI places a strong emphasis on bias detection, fairness monitoring, and ensuring regulatory compliance, while W&B does not have a pronounced emphasis in those areas.

Verta: Founded in 2018, Verta builds software infrastructure to help enterprise data science and ML teams develop and deploy ML models. It has raised $11 million to date, most recently with an August 2020 $10 million Series A led by Intel Capital, with participation from General Catalyst. While both platforms support the end-to-end model development workflow, Verta has more solutions targeted towards AI regulation and responsible AI. W&B has also raised significantly more funding than Verta.

Vessl: Founded in 2011, Vessl provides an end-to-end MLOps platform that enables high-performance ML teams to build, train, and deploy models faster at scale. It has raised $5 million to date, most recently through a $4 million seed round led by KB Investment and Mirae in November 2021. Vessl was founded in South Korea and is more active in Asia, particularly Korea, counting large Korean enterprises like Hyundai and Samsung among its customers.

Open Source

Seldon: Seldon Core is a leading open-source framework for deploying models and experiments at scale. Founded in 2014, Seldon has raised $34 million in total, most recently a $20 million Series B led by Bright Pixel Capital in March 2023. Seldon specializes in model deployment and serving in enterprise production environments, while W&B is a broader platform.

DagsHub: Founded in 2019, DagsHub’s MLOps model is designed entirely around data-version control (DVC). It has raised $3 million in total, mostly from a $3 million seed round led by StageOne Ventures in April 2020. DagsHub provides a collaborative GitHub-like experience around DVC, including tools like data-science PRs, one-click DVC remote storage, and an informative experiment tracking view. W&B has a fuller solution, as DagsHub is focused primarily on version control.

Aim: Aim is an open-source, self-hosted AI metadata tracking tool designed to handle hundreds of thousands of tracked metadata sequences. Being open-source, Aim benefits from community contributions and can be extended by developers to fit their needs. W&B, while not open-source, offers commercial support, detailed documentation, and integrations with other tools and platforms.

MLflow (Databricks): MLflow is an open-source platform, launched by Databricks in 2018, for managing the ML lifecycle, including experimentation, reproducibility, deployment, and a central model registry. MLflow has been described as a more barebones solution than W&B. Although it can run on its own, Databricks offers it as a managed service, making it a way for Databricks to acquire and onboard new customers.

Business Model

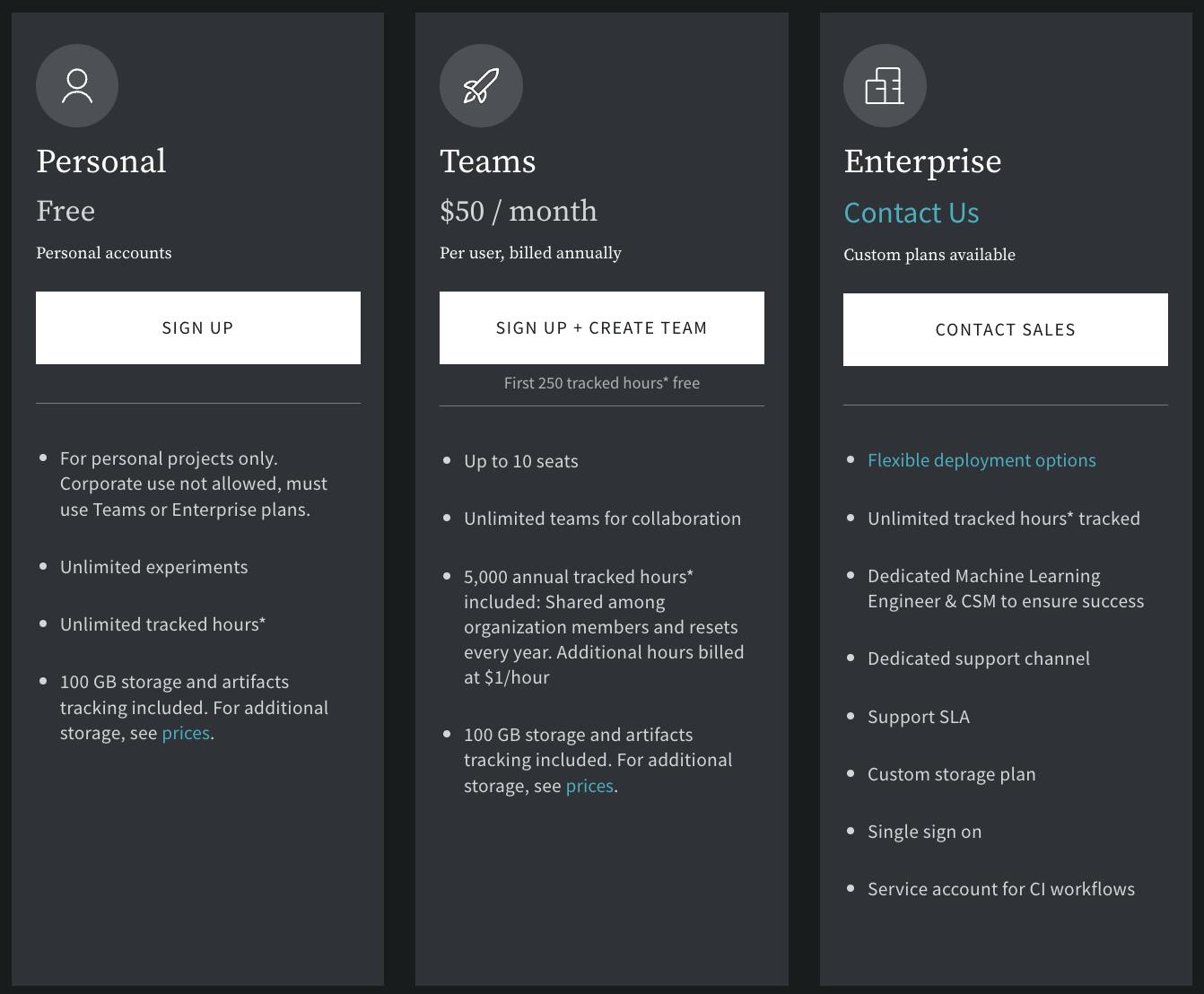

Source: W&B

W&B has a subscription-based revenue model and offers both cloud-hosted and privately hosted configurations. In both configurations, a personal account is free. A cloud-hosted “Teams” tier costs $50/month/user, billed annually, and supports up to 10 seats. Beyond that, both configurations offer an “Enterprise” tier whose pricing is not publicly disclosed. The Enterprise tier allows customers to install W&B on their own servers so that sensitive data does not have to leave their systems.

Traction

W&B reported 500K+ users and 700+ customers as of November 2023. These included notable AI and ML companies like OpenAI, Meta, and Cohere, as well as large enterprises like Toyota, Volkswagen, and Square. The company notes that “OpenAI uses W&B Models to track all their model versions across 2,000+ projects, millions of experiments, and hundreds of team members.” W&B is integrated into 20K repos, including ones LLM practitioners rely on, like LangChain, LlamaIndex, and GPT4All, as well as those of specialized infrastructure providers like CoreWeave, Lambda, and Graphcore. W&B has also formed partnerships with industry leaders like NVIDIA, Microsoft, AWS, GCP, Anyscale, and Snowflake.

Source: W&B

Valuation

In August 2023, Weights & Biases raised a $50 million round at a ~$1.3 billion valuation led by Daniel Gross (founder of Cue, former partner at YC) and Nat Friedman (former CEO of GitHub), with participation from existing investors Coatue, Insight Partners, Felicis, Bond, Bloomberg Beta, and Sapphire Ventures. As of November 2023, the company had raised a total of $250 million. Gross and Friedman were both “early users of Weights & Biases” before investing in the company.

Key Opportunities

Capturing the Generative AI Opportunity

Generative AI represents a significant opportunity for W&B. Throughout 2023, generative AI models like GPT-4 have gained immense popularity for their ability to generate human-like text and other content, with applications in content creation, chatbots, and even programming. By providing tools and infrastructure that help developers and data scientists harness these generative models effectively, W&B can position itself at the forefront of the AI revolution. This opportunity involves not only integrating these models but also creating a user-friendly environment for fine-tuning and customization, ultimately empowering users to build innovative, tailored solutions.

Extending into Feature Stores

Feature stores present W&B with a chance to expand its offerings in a rapidly evolving ML landscape. Feature stores have become crucial for organizing and managing the machine learning features used in model training. By integrating feature store functionality, W&B could streamline data pipelines for ML practitioners, reducing the time and effort required for data preparation. This move aligns with the industry's growing demand for more efficient data management within the ML ecosystem. Effectively incorporating feature stores into its platform would strengthen W&B's role in supporting end-to-end machine learning workflows, making it an indispensable tool for data scientists and engineers.

Training Open Source Models

As open-source AI models continue to proliferate, seizing the opportunity to become the primary platform for their training could be a strategic move for W&B. Open-source models have gained traction for their adaptability across various applications, from natural language processing to computer vision. By positioning itself as the go-to platform for training these models, W&B can attract developers and researchers alike. This opportunity involves creating specialized features, resources, and support for efficiently and effectively training open-source models. By establishing itself in this niche, W&B can solidify its position as a leader in the ever-evolving AI and ML landscape, bridging the gap between research and practical application.

Key Risks

Falling Behind on LLMs

One key risk for W&B is falling behind in the fast-paced development and adoption of Large Language Models (LLMs). Models like GPT-3 and BERT have revolutionized natural language understanding tasks. Failure to keep up with the incorporation of LLMs into its platform could result in a competitive disadvantage.

Unlike some other MLOps players, W&B was not born into the Generative AI moment and thus has had to adapt to it. To address this risk, W&B may need to further invest in integrating LLM support, optimizing fine-tuning workflows for these models, and providing resources for users to effectively leverage their power. Staying at the forefront of LLM adoption is vital for W&B's relevance in the AI and ML ecosystem.

Other Players Dominate Fine-Tuning Workflows

Another significant risk is the dominance of other players in the fine-tuning workflows within the ML ecosystem. Fine-tuning plays a critical role in adapting pre-trained models for specific tasks, such as text classification or image recognition. If W&B lags behind in this area, it may limit its appeal to users who rely heavily on fine-tuning capabilities. In fact, in August 2023, OpenAI announced a partnership with Scale AI to allow practitioners to easily fine-tune GPT-3.5 on Scale AI’s platform.

Notably, the new OpenAI fine-tuning API does not integrate with W&B. To remain competitive, W&B may need to enhance its fine-tuning features, build partnerships with foundation model providers like OpenAI, offer greater customization options, and provide seamless integration with popular pre-trained models. Addressing this risk would help ensure that W&B remains a valuable tool for data scientists and ML practitioners.

Practitioners No Longer Fine-Tune Models Locally

A notable trend is the shift away from practitioners fine-tuning models locally on their own machines. Fine-tuning locally can be resource-intensive and time-consuming, prompting practitioners to seek cloud-based solutions offering scalability and collaboration features. Adapting to this shift is essential for W&B. To mitigate this risk, W&B may need to enhance its cloud-based offerings, making them more attractive to users who prefer collaborative and scalable fine-tuning environments. Remaining responsive to evolving practices ensures that W&B remains relevant and adaptable to the changing needs of ML practitioners in an increasingly cloud-oriented landscape.

Summary

AI and ML have taken the world by storm, becoming the forefront of technological innovation and advancement. Yet ML practitioners still face many challenges in developing and deploying new models: complex workflows and requirements can cause ML projects to run over schedule and become inefficient. As a result, spending on MLOps has grown quickly; the market was estimated at $612 million in 2021 and is projected to reach $6 billion by 2028.

W&B has built a comprehensive platform that provides tooling for experiment tracking, collaboration, performance visualization, hyperparameter tuning, and more. By embracing DevOps principles tailored for the ML domain (MLOps), W&B empowers ML practitioners to streamline their workflows, expedite deployment processes, and boost the likelihood and speed of successful model implementation. As AI adoption continues to climb and the MLOps market matures, W&B is poised to play a vital role in facilitating the transition toward agile and effective ML model deployment.