Thesis

The proliferation of LLMs has kicked off a race to develop more advanced models, and new releases are being adopted at an accelerating pace. For instance, the Claude 3 family, launched in March 2024, had reached a 43% adoption rate by May 2024, surpassing the Claude 2 generation released in July 2023. Despite this surge of interest, barriers to entry persist, mainly due to the high costs and resource demands of training and operating models.

Training cutting-edge language models requires enormous computing power, typically provided by servers in extensive data center facilities run by major cloud vendors. For example, an August 2024 estimate predicted that AI models could be trained with more than one million times as much computing power as GPT-4 was trained on. As of 2023, training an advanced model could cost millions of dollars, a burden exacerbated by the GPU shortage and the concentration of GPU resources among large cloud providers like AWS, GCP, and Azure.

The dominance of such cloud vendors has driven up costs and restricted access to critical computing resources, compelling many companies to rely on foundation model APIs from major providers like OpenAI and Anthropic. In 2023, generative AI saw a shift toward open source: with Meta’s release of Llama 2, open-source LLMs narrowed the benchmark gap with closed LLMs. Open LLMs allow for collaborative improvement, transparency, and financial accessibility, and they also tend to be faster and more customizable. However, today’s leading generative AI models are still closed behind commercial APIs, and the heavy compute and complicated infrastructure needed to train LLMs create a barrier to entry for companies that want to customize models for their own needs.

Together AI aims to address the needs of enterprises seeking AI infrastructure that is cost-effective, transparent, and secure. It provides a decentralized platform connecting users to a network of high-end GPU chips. This platform allows users to run open-source AI models without relying on traditional cloud services. Together AI offers a full-stack product suite that empowers the open-source community, so customers don’t have to spin up their own infrastructure.

Founding Story

Together AI was founded in June 2022 by Vipul Ved Prakash (CEO) along with Ce Zhang (CTO), Percy Liang, and Chris Re.

Prakash graduated from St. Stephen’s College in Delhi with a major in computer science in 1997 and worked as an engineer at two startups, including Napster in 2001. He also created Vipul’s Razor, an open-source spam filter that was installed on over 10 million servers. In 2001, his anti-spam work led him to found his first company, Cloudmark, to commercialize the Vipul’s Razor technology and bring it to more platforms. As of 2010, Cloudmark was deployed at over 120 of the world’s largest operator networks.

In 2007, Prakash also founded Topsy, a social media search and analytics company. Apple acquired Topsy for more than $200 million in 2013, and Prakash stayed on as a senior director in AI/ML until 2018. While at Apple, he worked on deep learning projects and built an open-domain Q&A system based on Meta’s paper on LSTMs in 2016.

Zhang completed his postdoc at Stanford in 2015, where he was advised by Re. Re had built the data and machine learning startup Lattice.io, which Apple acquired in 2017. Liang, Re, and Zhang were researchers and professors at Stanford in 2016, which is likely how the co-founders met. Prakash, Zhang, Liang, and Re decided to start a company together in 2022 around the belief that foundation models represented a generational shift in technology.

The team founded Together AI with the goal of building a platform for open-source, independent, user-owned AI systems. They saw how the high expense of GPU clusters pushed these models to be centralized within a small number of corporations. Coming from research backgrounds, the founders were familiar with the innovations of the open research community, which had limited agency in the face of these large corporations. They founded Together AI believing that decentralized alternatives to closed AI systems would be important for this generational shift.

Product

Together AI offers a suite of products that enable users to build on open and custom AI foundation models in a cost-efficient way. Its open-source platform provides the models and services that any organization can use to embed AI into their applications.

Foundational AI Research

Together AI actively leads open-source AI research. In 2023, it released RedPajama, an open-source dataset for model pretraining, as well as RedPajama-V2, a dataset with over 30 trillion tokens. RedPajama-V2 was downloaded 1.2 million times in November 2023 alone. Chief Scientist Tri Dao is well known for his 2022 work on FlashAttention, which is used by OpenAI, Anthropic, Meta, and Mistral AI.

Together Inference

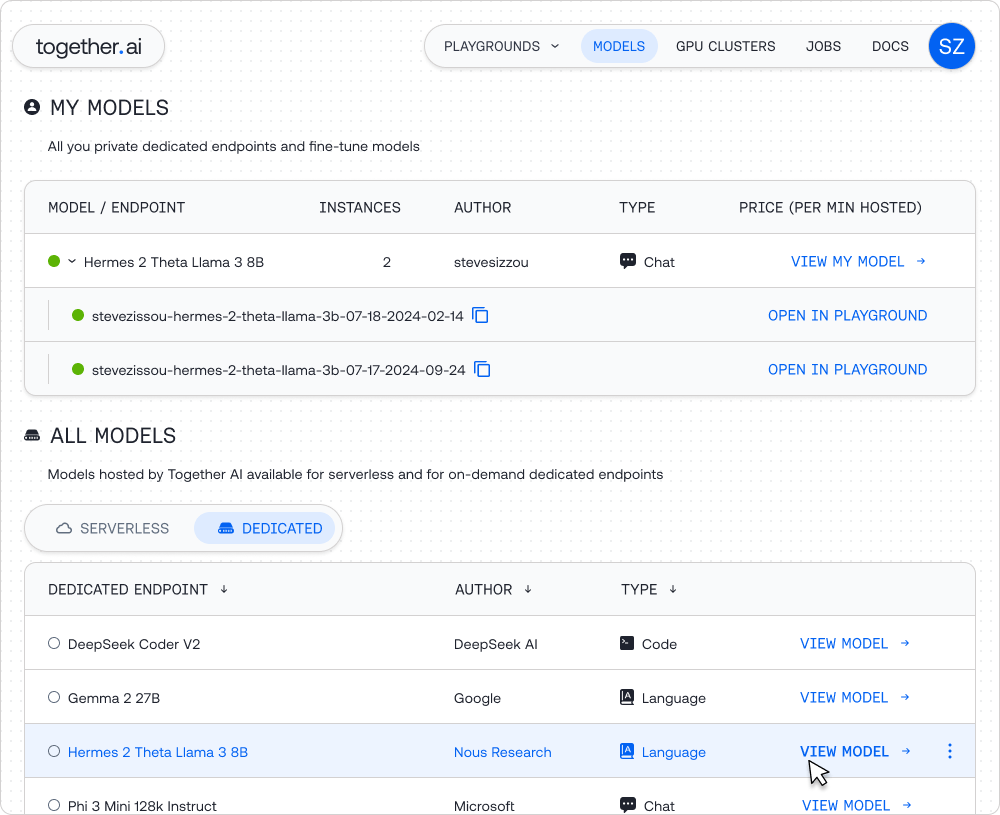

Source: Together AI

The platform provides a user interface for running inference jobs for ML models on its scalable infrastructure. Behind the scenes, the jobs run on the Together Inference Engine, which the company says is multiple times faster than other inference services. An inference engine is the software that runs a trained model on new input to produce its predictions; for an LLM, that means generating output tokens from a prompt.

Together AI provides users with over 50 open-source models through its serverless endpoints, which automatically scale based on traffic. Users can also deploy dedicated instances for over 100 popular open-source models or for their own fine-tuned models. Either option can be configured with auto-scaling, which automatically provisions additional hardware when API volume exceeds the capacity of the currently deployed hardware.
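The auto-scaling rule described above can be illustrated with a minimal Python sketch. It is a hypothetical capacity rule, not Together AI’s actual scheduler: the function names, the per-replica capacity figure, and the replica limits are all assumptions made for illustration.

```python
import math


def replicas_needed(requests_per_sec: float, per_replica_capacity: float,
                    min_replicas: int = 1, max_replicas: int = 16) -> int:
    """Replicas required so total capacity covers the observed request rate.

    Hypothetical rule: round up to whole replicas, then clamp to
    [min_replicas, max_replicas] so bursts cannot provision unbounded hardware.
    """
    if per_replica_capacity <= 0:
        raise ValueError("per_replica_capacity must be positive")
    needed = math.ceil(requests_per_sec / per_replica_capacity)
    return max(min_replicas, min(max_replicas, needed))


def scale_decision(current_replicas: int, requests_per_sec: float,
                   per_replica_capacity: float) -> int:
    """Positive: add that many replicas; negative: remove; zero: hold."""
    return replicas_needed(requests_per_sec, per_replica_capacity) - current_replicas


# Three replicas, each assumed to handle 10 requests/sec, hit a 45 req/s spike.
print(scale_decision(3, 45, 10))
```

In this example, five replicas are needed to cover 45 requests per second, so the rule adds two. Clamping to a maximum keeps a traffic burst from provisioning unbounded hardware, while the minimum keeps at least one replica warm.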

Together Fine-Tuning

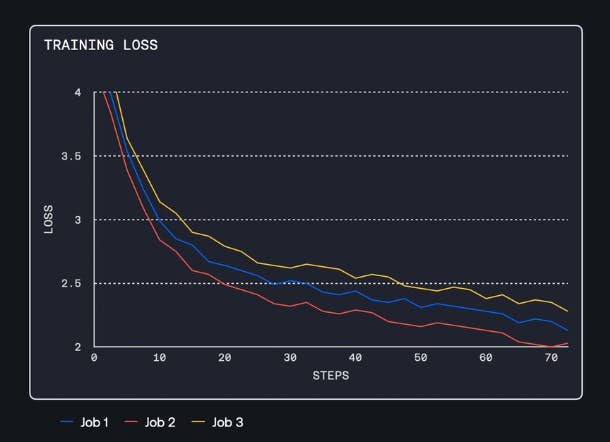

Source: Together AI

Together AI allows customers to customize leading open-source models with their proprietary datasets. They can use APIs to upload their datasets to the platform, start a fine-tuning job, monitor the progress, and host the resulting model on Together or download it. The platform provides an interface to monitor results on Weights & Biases.
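The first step of that workflow, preparing a proprietary dataset for upload, can be sketched in Python. This assumes a JSON Lines file in which each record carries a single `text` field; that field name is an assumption, so check Together AI’s fine-tuning documentation for the formats it currently accepts. The helper `to_jsonl` and the sample records are hypothetical.

```python
import json
from typing import Iterable


def to_jsonl(examples: Iterable[str]) -> str:
    """Serialize training examples as JSON Lines.

    Assumed format: one {"text": ...} object per line. Newlines inside an
    example are escaped by json.dumps, so each record stays on one line.
    """
    records = []
    for i, text in enumerate(examples):
        if not isinstance(text, str) or not text.strip():
            raise ValueError(f"example {i} is empty or not a string")
        records.append(json.dumps({"text": text}, ensure_ascii=False))
    if not records:
        raise ValueError("dataset is empty")
    return "\n".join(records) + "\n"


# Hypothetical proprietary examples (e.g. support transcripts). The resulting
# string would be saved as a .jsonl file and uploaded through the platform's API.
dataset = to_jsonl([
    "Customer: How do I reset my password?\nAgent: Use the 'Forgot password' link.",
    "Customer: Can I export my data?\nAgent: Yes, from Settings > Export.",
])
print(dataset)
```

Validating records before upload surfaces empty or malformed examples locally rather than after a fine-tuning job has been queued.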

Together Custom Models

Users can train their own AI model on Together AI’s platform and retain full ownership of it. To do so, they select a data design, model architecture, and training recipe. They then train the model while the platform abstracts away the job scheduling, orchestration, and optimization that happen behind the scenes. Finally, the user tunes and aligns the model and evaluates its quality. As part of Together Custom Models, Together AI also offers a consulting service in which experts help companies design and build customized AI models for specific workloads, trained on their proprietary data.

Together GPU Clusters

Source: Together AI

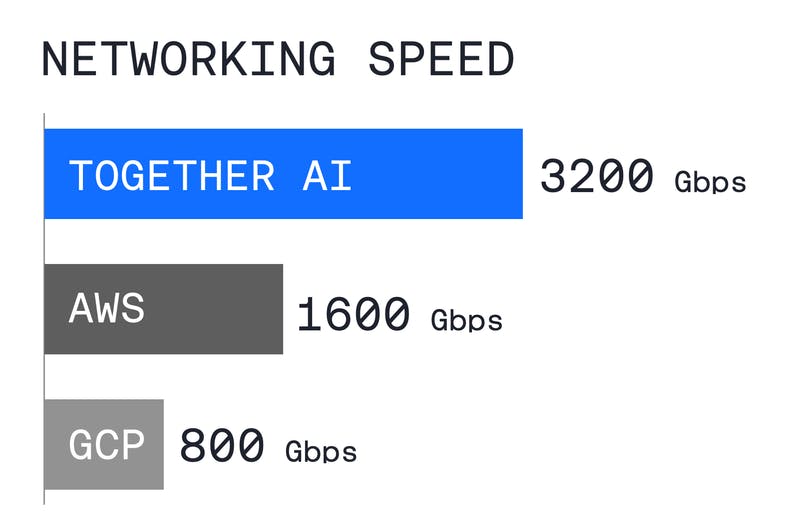

The platform provides access to Together GPU Clusters built on state-of-the-art hardware such as NVIDIA H100, H200, and A100 GPUs. It offers clusters of 10K+ GPUs optimized for foundation model training, with flexible commitments starting at one-month reservations. Clusters are connected by InfiniBand networking at 3.2K Gbps (3.2 Tbps) for fast distributed training.
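A back-of-the-envelope sketch shows why interconnect bandwidth matters for distributed training. It assumes data-parallel training with a ring all-reduce, in which each node sends about 2(N-1)/N times the gradient size per synchronization step, and it treats the full 3.2 Tbps as usable bandwidth while ignoring latency and compute overlap. The 7-billion-parameter model with 2-byte (bf16) gradients is a hypothetical example.

```python
def ring_allreduce_seconds(num_params: float, bytes_per_param: int,
                           num_nodes: int, link_gbps: float) -> float:
    """Idealized time for one ring all-reduce of the full gradient.

    Assumes each node sends 2 * (N - 1) / N times the gradient size and that
    the whole link bandwidth is usable; latency and overlap are ignored.
    """
    if num_nodes < 2:
        return 0.0  # nothing to synchronize on a single node
    gradient_bytes = num_params * bytes_per_param
    bytes_sent = 2 * (num_nodes - 1) / num_nodes * gradient_bytes
    return bytes_sent * 8 / (link_gbps * 1e9)  # bits / (bits per second)


# Hypothetical example: a 7B-parameter model with bf16 (2-byte) gradients.
for nodes in (2, 8, 64):
    t = ring_allreduce_seconds(7e9, 2, nodes, link_gbps=3200)
    print(f"{nodes:>3} nodes: {t * 1000:.1f} ms per all-reduce")
```

Under these idealized assumptions the synchronization time approaches 2 × 14 GB / 3.2 Tbps, about 70 ms, as the node count grows. A slower interconnect scales that cost up proportionally on every training step, which is why high-bandwidth networking matters for multi-node training.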

Market

Customer

As of 2024, Together AI hosts 45K registered users, ranging from AI startups to multinational enterprises. Customers include Pika Labs, a generative AI video startup founded by former Stanford PhD students that has raised $55 million. As one investor put it, customers come for “the GPUs, but stay for the workflow services and research.” Together AI is well suited to startups and individual developers who have spiky workloads (such as training or fine-tuning a model) and benefit from a token-based pricing model.

Together AI’s setup is also well suited to developers who want to customize and deploy open-source AI models for their applications without worrying about infrastructure. The process is as simple as selecting a model from the Together AI Playground, launching it on a serverless endpoint, and calling it from a generative AI app using an API key.
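The last step, calling a serverless model from an application, can be sketched with Python’s standard library. This assumes Together AI exposes an OpenAI-compatible chat completions endpoint at `https://api.together.xyz/v1/chat/completions`, that the key is stored in a `TOGETHER_API_KEY` environment variable, and that responses follow the OpenAI `choices[0].message.content` shape; verify all three against Together AI’s API reference. The model ID passed in is a placeholder for whichever model is selected in the Playground.

```python
import json
import os
import urllib.request

# Assumed OpenAI-compatible endpoint; verify against Together AI's API docs.
TOGETHER_CHAT_URL = "https://api.together.xyz/v1/chat/completions"


def build_chat_request(model: str, prompt: str, api_key: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Construct (but do not send) a chat completion request."""
    body = {
        "model": model,  # placeholder: the model ID chosen in the Playground
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        TOGETHER_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def chat(model: str, prompt: str) -> str:
    """Send the request and return the first completion's text."""
    api_key = os.environ["TOGETHER_API_KEY"]  # assumed variable name
    req = build_chat_request(model, prompt, api_key)
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    # Assumes an OpenAI-style response body.
    return data["choices"][0]["message"]["content"]
```

`build_chat_request` only constructs the request, so its format can be inspected without network access; `chat` sends it. Keeping the two separate makes the request format easy to test.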

Market Size

In 2023, the global AI server market was estimated at $26.2 billion and projected to grow to $179 billion by 2032, a CAGR of 23.8%. The AI server market encompasses hardware such as GPUs and TPUs that accelerate AI workloads. The industry can be segmented by workload (training versus inference), and deployments range from on-premise to hybrid and fully cloud-based solutions.
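The market-size figures above are internally consistent, which a quick compound-growth check confirms. CAGR is (end / start)^(1 / years) - 1, here with nine years between 2023 and 2032; the helper names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate implied by two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


YEARS = 2032 - 2023  # nine compounding periods
implied_rate = cagr(26.2, 179.0, YEARS)  # USD billions in, rate out
projected_2032 = project(26.2, 0.238, YEARS)
print(f"implied CAGR: {implied_rate:.1%}")
print(f"2032 value at 23.8%: ${projected_2032:.1f}B")
```

Both directions agree: the implied rate rounds to 23.8%, and compounding $26.2 billion at 23.8% for nine years lands at roughly $179 billion.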

Competition

Teams that wanted to train and deploy LLMs have often turned to hyperscalers that offer enterprise-scale solutions, such as Amazon Web Services, Microsoft Azure, and Google Cloud Platform. These platforms offer reliability and scalability through ecosystem offerings that attract large enterprises. On them, developers need to follow several steps: onboard onto a cloud service, select a GPU instance, launch that instance, containerize the model, transfer the container to the instance (an EC2 instance, on AWS), install serving software, configure the serving software, and expose the model as an API endpoint.

The three main cloud providers have traditionally focused on proprietary models and tools, with heavy investments in GPUs and specialized chips. Each hyperscaler has its own LLM offering: Microsoft through its partnership with OpenAI (ChatGPT), Google with Bard (since renamed Gemini), and AWS with its own Titan models and its partnership with Anthropic (Claude). However, the ecosystem is moving toward open source as well. For example, AWS partner Anthropic introduced the open-source Model Context Protocol in November 2024.

Inference-as-a-Service

Emerging early-stage startups enable customers to fine-tune and deploy their models on a software abstraction atop GPU clouds.

Foundry: Foundry, founded in 2022, offers a public cloud purpose-built for ML workloads. In 2024, it raised an $80 million Series A co-led by Sequoia Capital and Lightspeed Venture Partners, and it was valued at $350 million as of December 2024. Customers include Stanford, Carnegie Mellon University, and Arc Institute as of 2024. Customers can reserve compute and resell instances they don’t use. In 2024, the company launched Foundry Cloud Platform to provide self-serve access to GPU compute. Rather than locking customers into long-term contracts as traditional cloud services do, it lets them reserve compute for as little as three hours at a time. The platform also addresses the risk of GPU failures by proactively replacing failed nodes, guaranteeing that the GPUs a customer reserves are reliable.

Together AI and Foundry both compete in the generative AI infrastructure space, but their focuses differ slightly. Together AI provides an open generative AI cloud platform with optimized inference solutions for open-source models, aiming to make advanced AI models accessible and efficient for developers and enterprises. Foundry, on the other hand, is known for helping enterprises operationalize AI at scale, often focusing on tools for integrating AI into business workflows, which can include proprietary and customized solutions.

OctoAI: OctoAI, founded in 2019, started as OctoML when it spun out of the University of Washington. It offers a platform that helps businesses build ML applications without worrying about the underlying infrastructure. In 2021, it raised an $85 million Series C led by Tiger Global Management, and in September 2024 NVIDIA acquired it for $250 million. Users specify what they prioritize and OctoAI chooses the right hardware for their use case, so the customer can be a generalist software developer rather than an ML engineer. OctoAI offers a full-stack solution that allows businesses to run a mix of open-source and proprietary models on any cloud and any hardware target. While OctoAI focuses on inference and offers fine-tuning, it deliberately doesn't offer training from scratch, on the conviction that inference jobs will be more frequent than training jobs.

Together AI and OctoAI are competitors in the AI infrastructure and generative AI space, particularly in providing platforms that facilitate AI model deployment and training. Together AI focuses on decentralized cloud services for running and fine-tuning generative AI models, offering GPU clusters and open-source resources like RedPajama to AI developers and researchers. OctoAI similarly provides infrastructure optimized for running large AI models, aiming to accelerate AI development by streamlining deployment processes.

RunPod: RunPod, founded in 2022, is a cloud-based infrastructure service that provides cost-effective and scalable GPU resources. In May 2024, it raised a $20 million Series A co-led by Intel Capital and Dell Technologies Capital. It offers users more than 50 templates to simplify their ML workflows. In June 2024, it announced price cuts across its GPU offerings. As of May 2024, over 100K developers use RunPod's services to run their applications. It enables users to create production-ready AI endpoints for their applications and provides a platform for training AI models, deploying AI applications, and scaling inference requests.

Together AI and RunPod compete in the AI infrastructure and cloud computing space by targeting developers and enterprises looking to optimize the performance and cost of running AI models. Together AI focuses on providing an open generative AI cloud platform specifically tailored for inference and fine-tuning of LLMs. RunPod, meanwhile, is a decentralized GPU cloud platform that offers scalable compute resources, enabling users to run machine learning workloads, including training and inference, at competitive costs.

Competition arises as both platforms seek to simplify and lower the cost of accessing the computing power required for advanced AI workloads. Together AI's focus on open-source model support and community-driven innovation directly overlaps with RunPod's appeal to developers seeking flexible, cost-effective AI infrastructure. However, Together AI emphasizes seamless integration with LLMs and generative AI tasks, while RunPod's broader GPU compute offerings cater to a range of AI and general-purpose compute needs.

Specialized Cloud Providers

Startups also serve as next-generation specialized cloud providers that offer GPU infrastructure for AI and HPC workloads. Though they lack the same level of software tooling, they offer raw compute power at competitive prices.

Lambda: Lambda, founded in 2012, is a cloud-based GPU company that specializes in GPU workstations, cloud services, and AI infrastructure. In February 2024, it raised a $320 million Series C at a $1.5 billion valuation. This round was led by the US Innovative Technology Fund. Lambda Labs helps enterprises, startups, and research labs, with notable customers such as Microsoft, Amazon, and Stanford.

Together AI competes with Lambda Labs in the GPU cloud computing space by offering similar services for training and deploying AI models. Both platforms provide infrastructure and support for AI workloads, but Together AI differentiates itself by offering a more comprehensive set of tools that include fine-tuning capabilities, data management, and API services for inference, which Lambda Labs does not prioritize. Additionally, Together AI's token-based pricing model allows for more flexibility, catering particularly to startups with unpredictable workloads, while Lambda Labs offers a more traditional hourly pricing scheme for raw GPU compute resources.

CoreWeave: CoreWeave is a cloud infrastructure platform optimized for GPU-based workloads. It was founded in 2017 and had raised a total of $13.4 billion as of December 2024. Through a partnership with NVIDIA and a fleet of over 45K high-end NVIDIA GPUs, it provides a wide range of compute options with flexible usage-based pricing. CoreWeave serves demand for large GPU deployments, offering H100s for $2.23 an hour as of 2024.

Together AI competes with CoreWeave by offering cloud-based GPU resources for AI and machine learning workloads. Both companies provide infrastructure for model training and deployment, targeting developers and businesses working with large-scale AI models. Together AI focuses on providing tools for fine-tuning models, managing models, and APIs for inference alongside GPU resources. CoreWeave, on the other hand, emphasizes cost-effective GPU instances for compute power and offers a traditional pay-per-use model for GPUs.

Competitive Differentiation

Together AI covers three types of services: the compute, the APIs to interface with the models, and the platform to deploy the model. As a result, it can offer significant cost reductions compared to competitors. According to a company blog post, Together AI can “optimize down the stack, with thousands of GPUs located in multiple secure facilities, software for virtualization, scheduling, and model optimizations that significantly bring down operating costs.”

Together AI provides the user interface for an end-to-end developer experience. As of 2024, it boasts cheaper endpoints compared to industry incumbent OpenAI. In general, its infrastructure can scale at lower prices than what’s offered by traditional hyperscalers, with users paying 1/5th of the traditional cost to train and fine-tune models. As a cloud-agnostic solution, it also serves users who want to avoid being locked into a cloud service. A key differentiator lies in its open-source mission. By providing customers access to over 100 open models and spearheading research on its own, it appeals to the open-source community.

Business Model

Together AI has a pay-as-you-use model where customers are charged based on how many tokens they use. Token-based pricing is attractive to early-stage startups and individual developers because of their unpredictable workloads from tasks like training new models and launching new products. Customers can align their costs directly with the value they receive from their AI models, compared to per-hour pricing that comes with the risk of wasting idle GPU time. Together AI sources GPUs from various providers but charges a minimal premium that allows it to offer cheaper compute compared to hyperscalers. An unverified source estimates that its compute is cheaper than hyperscalers by ~80%, and Together AI’s gross margin as of December 2024 is ~45%.
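The trade-off between token-based and per-hour pricing can be made concrete with a small calculation. All prices below are hypothetical placeholders, not Together AI’s or any provider’s actual rates, and the sketch ignores throughput limits on a reserved GPU. It computes what a month of usage costs under each model and the token volume at which a continuously reserved GPU would break even.

```python
def monthly_costs(tokens: int, price_per_million_tokens: float,
                  gpu_price_per_hour: float, hours_reserved: float):
    """Return (token-based cost, per-hour cost) for one month of usage."""
    token_based = tokens / 1_000_000 * price_per_million_tokens
    # A reserved GPU is billed for every hour, busy or idle.
    per_hour = gpu_price_per_hour * hours_reserved
    return token_based, per_hour


def break_even_tokens(price_per_million_tokens: float,
                      gpu_price_per_hour: float, hours_reserved: float) -> float:
    """Token volume at which both pricing models cost the same."""
    return gpu_price_per_hour * hours_reserved / price_per_million_tokens * 1_000_000


# Hypothetical placeholder prices, not real quotes.
token_cost, hourly_cost = monthly_costs(
    tokens=50_000_000, price_per_million_tokens=0.20,
    gpu_price_per_hour=2.00, hours_reserved=720)
print(f"token-based: ${token_cost:,.2f}  per-hour: ${hourly_cost:,.2f}")
print(f"break-even: {break_even_tokens(0.20, 2.00, 720):,.0f} tokens")
```

With these placeholder numbers, 50 million tokens cost $10 under token pricing versus $1,440 for a GPU reserved for 720 hours, and per-hour pricing only pays off above roughly 7.2 billion tokens per month. A spiky workload that leaves a reserved GPU idle most of the month sits far below that break-even point.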

Customers using the Together Inference API are charged per million tokens. For customers hosting their model on dedicated endpoints, they pay per minute for the GPU endpoint based on the type of hardware. For fine-tuning tasks, they are charged based on model size, dataset size, and the number of epochs.
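The three billing modes can be sketched as simple cost functions. The rate table is entirely hypothetical, as is the assumption that fine-tuning is billed as dataset tokens multiplied by epochs at a per-million-token rate that rises with model size; consult Together AI’s pricing page for actual rates and billing rules.

```python
# Hypothetical per-million-token fine-tuning rates, tiered by model size.
# Each entry is (max parameter count, USD per million training tokens).
HYPOTHETICAL_FT_RATES = [
    (16e9, 0.50),
    (69e9, 1.50),
    (float("inf"), 3.00),
]


def inference_cost(total_tokens: int, price_per_million: float) -> float:
    """Serverless inference: billed per million tokens processed."""
    return total_tokens / 1_000_000 * price_per_million


def dedicated_endpoint_cost(minutes: float, price_per_minute: float) -> float:
    """Dedicated endpoint: billed per minute for the chosen hardware."""
    return minutes * price_per_minute


def fine_tune_cost(num_params: float, dataset_tokens: int, epochs: int,
                   rates=HYPOTHETICAL_FT_RATES) -> float:
    """Fine-tuning: dataset tokens x epochs, at a rate set by model size."""
    if epochs < 1:
        raise ValueError("epochs must be at least 1")
    # Pick the first tier whose size limit covers the model.
    rate = next(r for limit, r in rates if num_params <= limit)
    return dataset_tokens * epochs / 1_000_000 * rate


print(fine_tune_cost(8e9, 10_000_000, 3))
```

For example, fine-tuning an 8-billion-parameter model on 10 million tokens for three epochs would be billed as 30 million tokens, or $15 at the placeholder rate. Because cost scales linearly with both dataset size and epochs, doubling either doubles the bill.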

Traction

Within Together AI’s first three months in business, it reached a $10 million run rate, and by June 2023 it reached over 10K API registrations. As of September 2024, it was estimated that Together AI reached $100 million in ARR in less than 10 months. As of February 2024, the company has over 8K A100 and H100 GPUs on its platform, with the ability to deliver 20 exaflops of compute in total. As of 2023, it owns data centers in the US and EU operated by partners Crusoe Cloud and Vultr. It works with over 10 GPU cloud platforms to source its compute.

As of March 2024, more than 45K registered developers use the platform, with traffic growing 3x month over month. Together AI is integrated into AI application development frameworks such as LangChain, Vercel, and MongoDB. It has also worked within the life sciences industry in its collaboration with the Arc Institute to create Evo, a biological generative AI model for DNA. Notable customers include AI startups such as Nexusflow.ai, Voyage.ai, and Pika Labs.

Valuation

In November 2023, Together AI raised a $102.5 million Series A at a ~$500 million valuation, led by Kleiner Perkins with participation from NVIDIA and Emergence Capital. In March 2024, it raised another $106 million at a $1.25 billion valuation in a Series A extension led by Salesforce Ventures, with participation from Coatue, Lux Capital, and Emergence Capital. The valuation therefore more than doubled in about four months. The company had raised a total of $228.5 million as of December 2024.

Key Opportunities

Open-Source AI

There is growing pressure to shift from closed to open-source AI, driven by the need to address problems such as misinformation, bias, and data privacy. Proponents also argue that open-source AI keeps companies accountable. Hugging Face CEO Clement Delangue stated in testimony:

“Open science and open source AI prevent blackbox systems, make companies more accountable, and help solving today’s challenges like mitigating biases, reducing misinformation, promoting copyright, & rewarding all stake-holders including artists & content creators in the value creation process.”

Together AI’s focus on open source makes it increasingly attractive to developers seeking options that are faster, more customizable, and more private than closed alternatives.

Product Expansion

The AI infrastructure landscape is fragmented, ranging from development platforms like LangSmith to RAG platforms like LlamaIndex. Given the competitive dynamics of the AI inference market, Together AI can expand beyond its initial focus on infrastructure and tools for open-source models. As of April 2024, there was growing demand for higher-level, application-specific AI services, and Together AI’s platform could offer APIs geared toward common AI tasks such as text generation and data analysis. It already offers a web playground where users can interact with models off the shelf, for example to generate and edit text, generate images, or generate code.

Key Risks

Abuse of Open-Source Models

The open nature of these models means they lack the built-in safeguards that closed models have, leaving them vulnerable to misuse. For example, closed AI systems like ChatGPT are designed to refuse user requests that violate their usage policies. Unsecured AI systems, by contrast, can be downloaded by sophisticated threat actors who then disable their safety features. While the developers who operate secured AI systems can monitor for abuses or failures, open-source AI is harder to regulate. As regulators navigate the risks of open-source models, Together AI should be prepared to assess the open-source models it offers on its platform.

Race to the Bottom

The LLM space is crowded with incumbents and startups trying to attract users with offerings optimized for performance and cost. OpenAI felt pressure to cut the prices of its models in 2024. In response, Together AI updated its pricing in January 2024 to make its open-source models available at a cheaper rate. Though this serves as a competitive advantage for Together AI, a lack of pricing power can prove problematic for LLM providers given the extreme costs of compute. As the LLM market competes on efficiency, companies risk chasing incremental wins rather than category-defining differences. However, Together AI could benefit from the commoditization of compute, since it offers customers services on top of raw GPU compute.

Summary

The AI landscape is evolving amid a surge in LLMs and a race to develop more powerful models. Significant barriers to entry persist because of the high costs of training and deploying these models. Training LLMs can cost millions of dollars, a burden exacerbated by the ongoing GPU shortage as top tech players secure a large share of available resources. In response to these challenges, there is a growing movement toward new approaches to AI infrastructure as well as open-source AI models.

Together AI offers an AI-inference-as-a-service platform that connects users to a decentralized network of GPUs. This approach enables users to run open-source AI models without relying on traditional cloud services, addressing both cost and accessibility issues. Together AI's platform is designed to be user-friendly, supporting developers in leveraging AI capabilities while reducing reliance on cloud vendors.