Actionable Summary

The story of America’s electric grid began in the late 19th century, when electricity was a rare luxury. But Edison’s direct current (DC) system had a fatal flaw: it couldn’t transmit power over long distances. As a result, Tesla’s alternating current (AC) design ultimately won out, enabling large, interconnected networks.

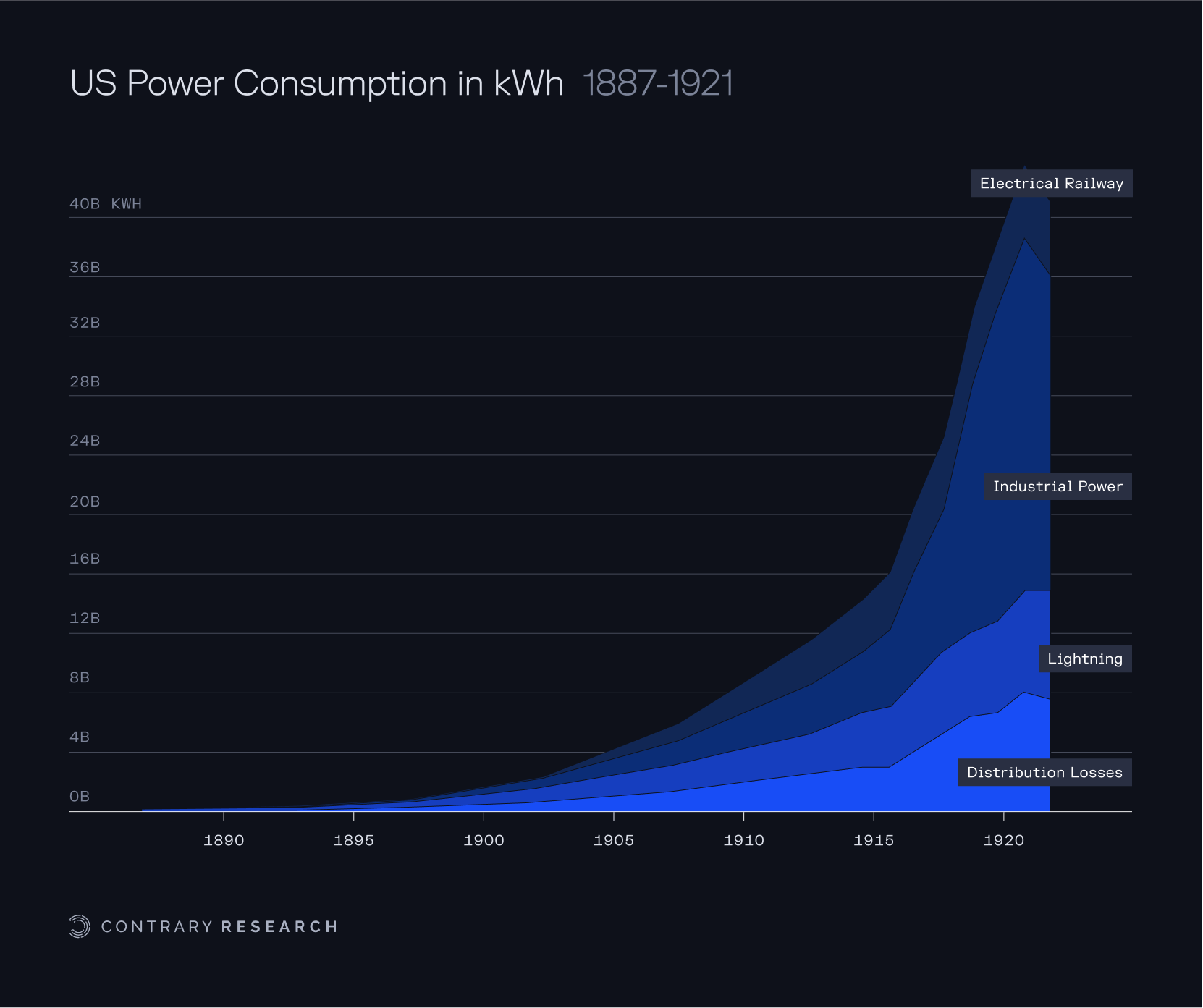

Utilities adopted a “grow and build” model: more customers and bigger plants created a virtuous cycle of cheaper power and higher demand. By the 1920s, the US had thousands of power stations, and by 1930, nearly 70% of American homes had electricity.

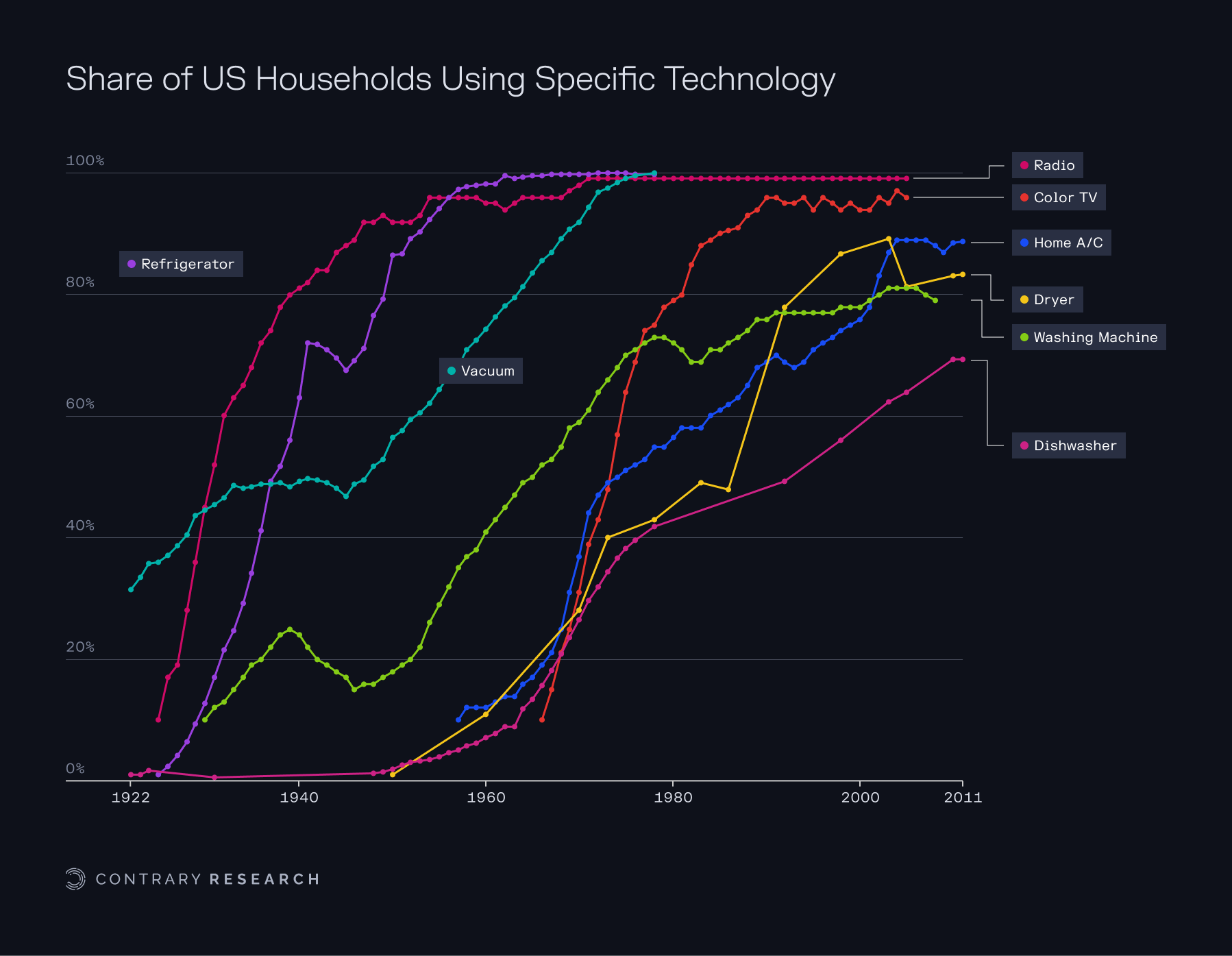

The post-WWII decades became the golden age of the US power grid. Electricity use grew roughly 7% annually through the 1950s and ’60s, the market for electrical utilities became the largest industry in the US, and virtually 100% of U.S. households had electric service by 1960.

But by the mid-1960s, cracks were starting to emerge. The 1965 Northeast blackout, which affected 30 million people, exposed a fragile, overly centralized system and marked the beginning of the end for unchallenged utility monopolies.

In the 1970s and 1980s, a combination of environmental regulation, energy crises, and deregulation efforts reshaped the industry. PURPA and later FERC Orders 888 and 889 opened the door to competition and began dismantling the vertically integrated utility model.

By the early 2000s, the grid was reliable but aging and technologically stagnant. It still largely ran on an architecture from the 1960s: big centralized plants, analog controls, and thin margins of spare capacity.

The grid today, a system built for centralized, predictable power flows, is now being asked to juggle extreme peaks, bi-directional energy flows, and an explosion of new devices plugging in at the edge. Together, these factors have created escalating fragility in the US electrical supply, which has already led to major outages.

If things continue as they are, there's worse to come. About 70% of transmission lines are over 25 years old, with many having exceeded their design life. A nationwide transformer shortage, fragile equipment, and a wave of utility retirements have made diagnosing and fixing grid problems harder, just as the grid’s complexity is exploding.

Electricity demand is accelerating. America used a record 4.05 trillion kWh of electricity in 2022, about 14x as much as in 1950. By 2030, EVs alone could draw nearly 5% of US electricity output. Peak loads are growing faster than average loads, widening the gap between normal operation and stressed conditions.

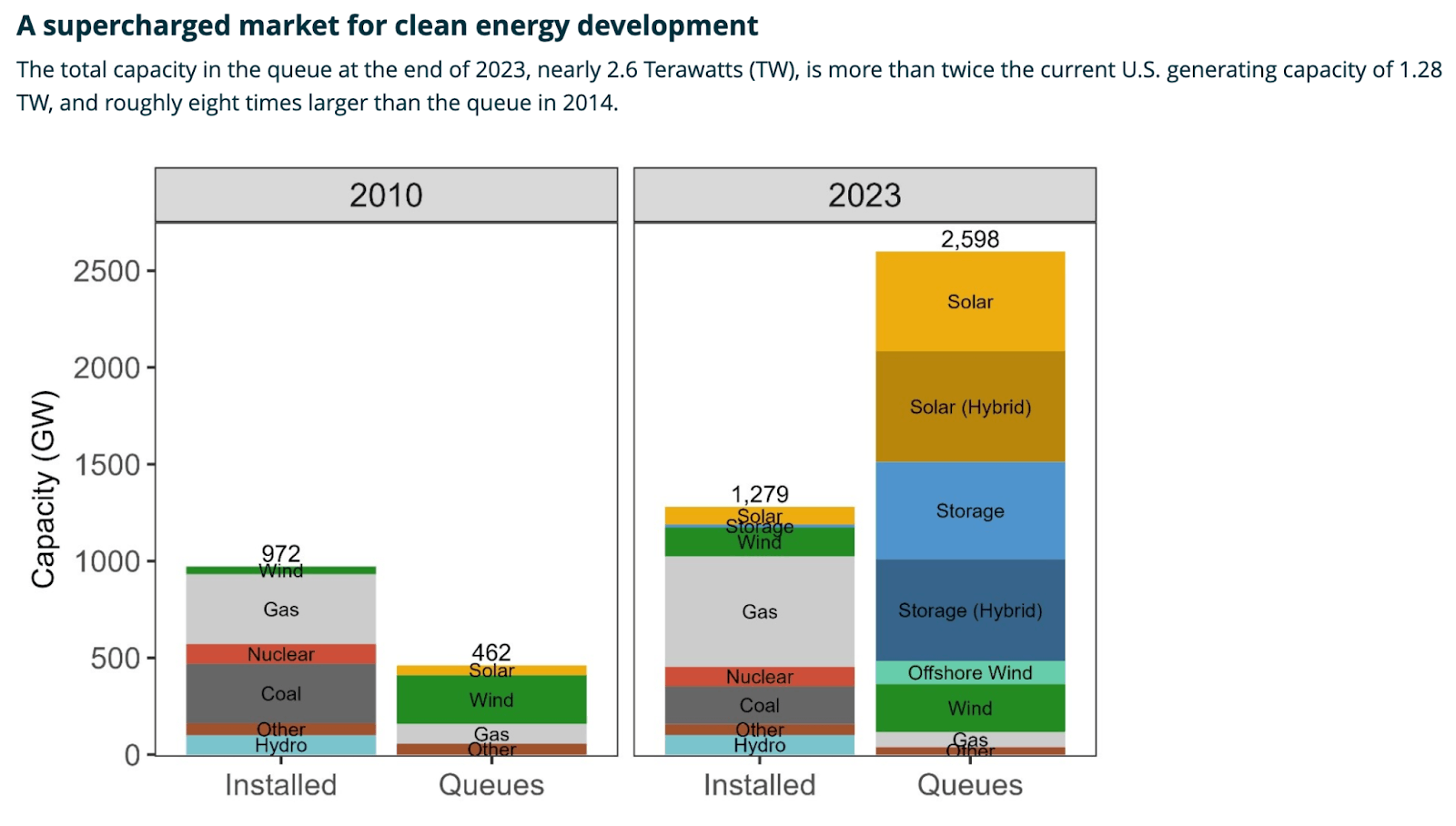

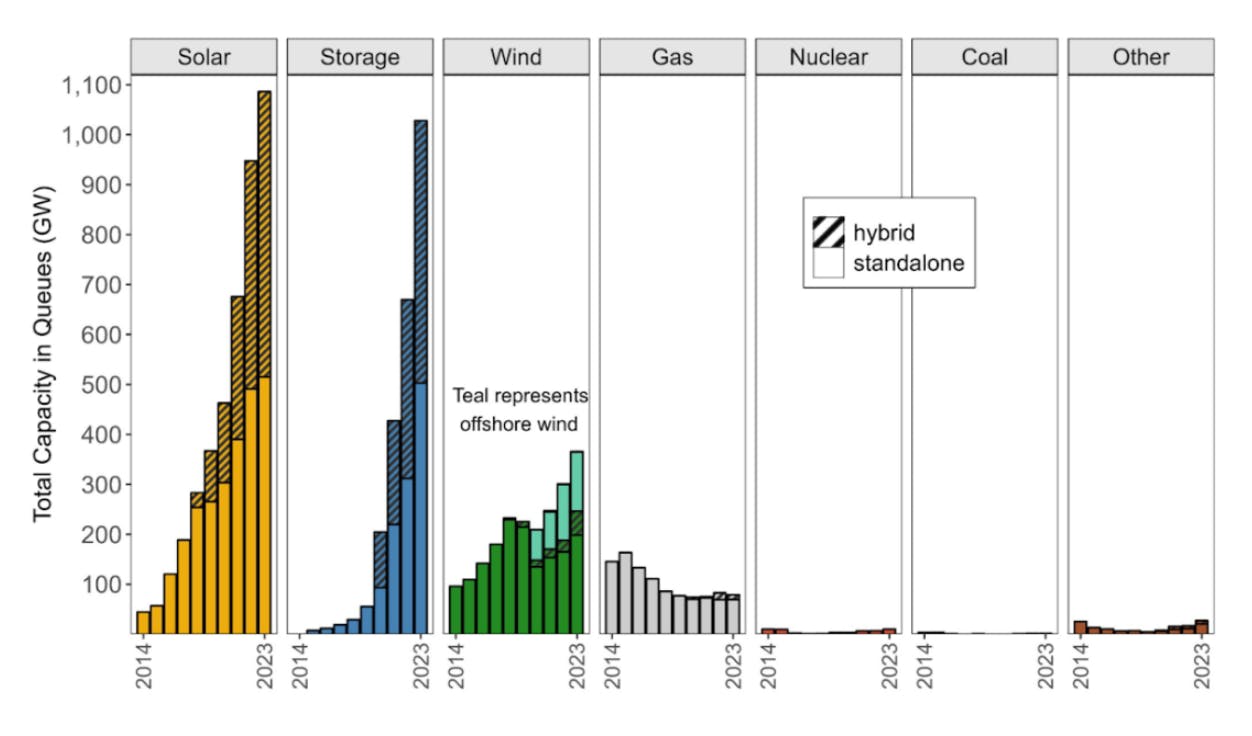

As of the end of 2023, 2,600 GW of proposed generation and storage projects were waiting for permission to connect to US grids, which is double the current installed capacity. But more than 70% of projects in queues never get built, throttled by long delays and interconnection backlogs.

We’re essentially forcing a centralized machine to behave like a distributed network, and it’s cracking under the strain. When solar panels feed excess power into neighborhood circuits, they can cause voltage spikes that the old equipment wasn’t configured to handle.

The energy grid is quickly evolving towards decentralization to meet the challenges posed by the increasing electrification of the economy. The task now is to connect the pieces — literally, through wires and wireless signals — into a coherent, adaptive whole that can power a low-carbon economy and a more resilient future.

History of the U.S. Grid

Early Electrification: The War of Currents (1882-1900)

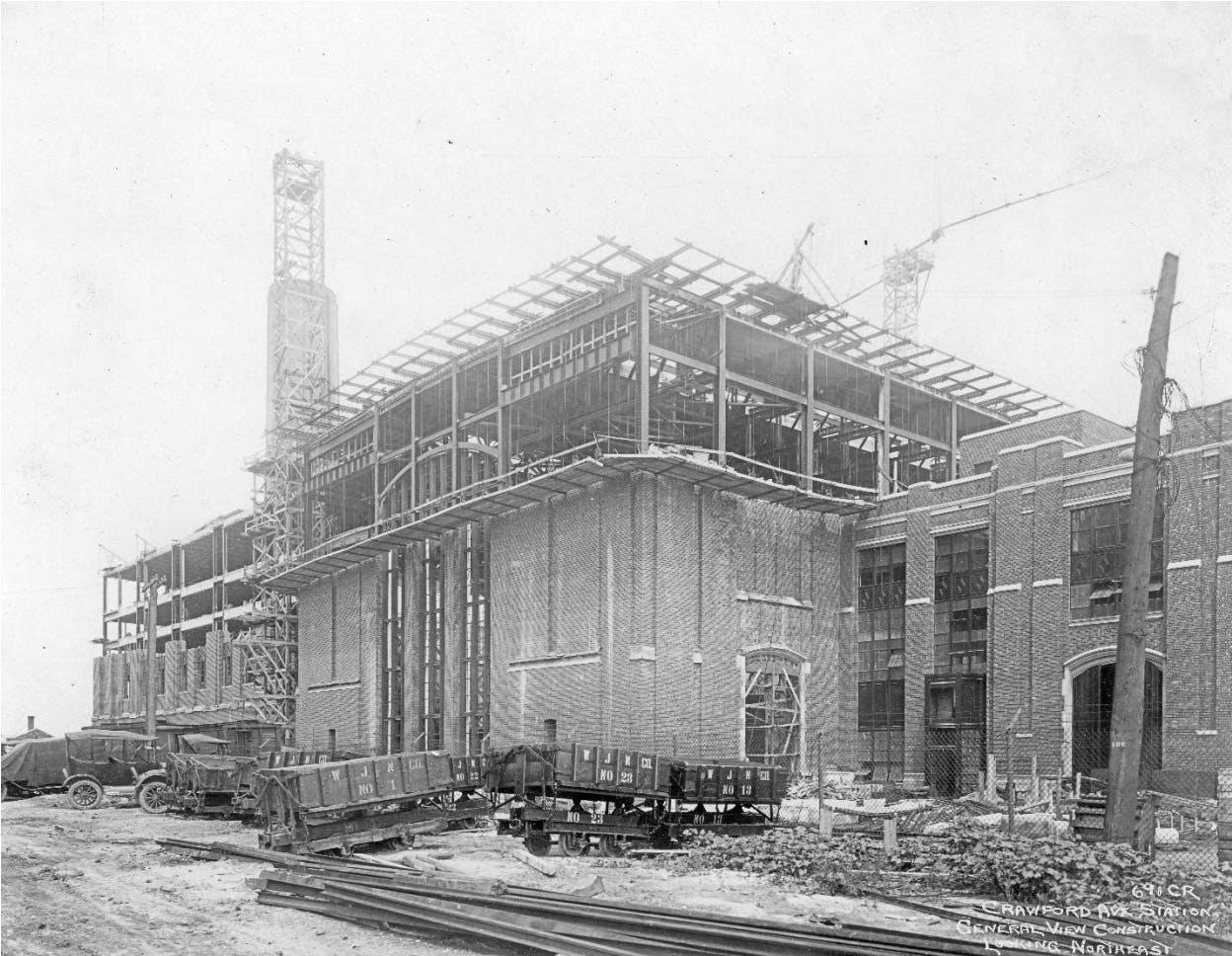

The story of America’s electric grid begins in the late 19th century, when electricity was a rare luxury. Thomas Edison opened the first commercial power plant, Pearl Street Station, in 1882, delivering direct current (DC) electricity to just 82 customers with a total of 400 lamps in lower Manhattan. The Edison design became the model for power plants built around the city. But Edison’s system had a fatal flaw: DC power couldn’t travel very far, which required power plants to be built within 1-2 miles of a customer.

Enter Nikola Tesla and George Westinghouse, champions of alternating current (AC). In the 1890s, the “War of Currents” swung decisively to AC after Tesla’s polyphase system proved it could transmit power over long distances with transformers. The 1896 Niagara Falls project became a landmark – harnessing the Falls to send AC power over 20 miles to Buffalo. Edison’s DC system took a big hit once AC proved that transformers could step its voltage up for transmission and back down for use. That meant electricity was no longer confined to a single neighborhood; it could flow across cities and regions at lower cost and lower risk.
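Why stepping voltage up matters can be seen with a little arithmetic. The sketch below assumes a simple resistive line and made-up numbers (100 kW sent through 0.05 ohms of conductor); neither figure comes from the historical record. For a fixed amount of power, current falls as voltage rises (I = P / V), and heat lost in the wire scales with the square of the current (P_loss = I² × R).

```python
def line_loss_fraction(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Fraction of the power sent into a resistive line that is lost as heat."""
    current_a = power_w / voltage_v             # I = P / V
    loss_w = current_a ** 2 * resistance_ohm    # P_loss = I^2 * R
    return loss_w / power_w


# Hypothetical values, chosen only to illustrate the scaling.
POWER_W = 100_000        # 100 kW sent down the line
RESISTANCE_OHM = 0.05    # total conductor resistance

low_voltage_loss = line_loss_fraction(POWER_W, 110, RESISTANCE_OHM)
high_voltage_loss = line_loss_fraction(POWER_W, 11_000, RESISTANCE_OHM)

print(f"Loss at 110 V:    {low_voltage_loss:.1%}")
print(f"Loss at 11,000 V: {high_voltage_loss:.4%}")
print(f"Loss ratio:       {low_voltage_loss / high_voltage_loss:,.0f}x")
```

Under these assumptions, raising the voltage 100-fold cuts the loss by a factor of 10,000, from roughly 41% of the power sent to a negligible fraction. The model ignores reactance and other AC effects, so it is a first-order illustration only.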

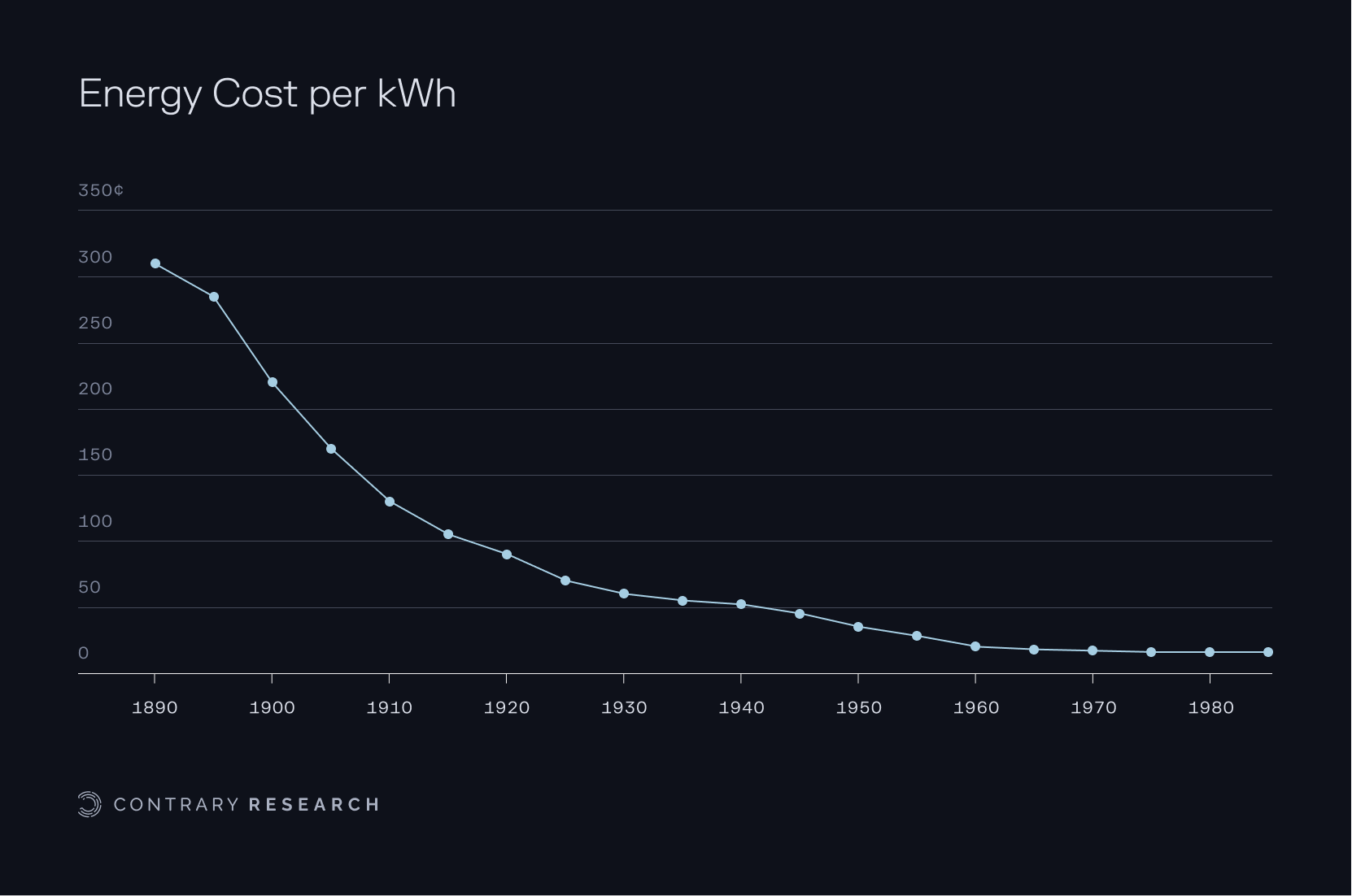

In the electricity gold rush that followed, entrepreneurs like Samuel Insull, one of Edison’s protégés, built holding companies that bought up local utilities and micro grids, seeking economies of scale from larger, interconnected networks. At its peak, Insull’s utility empire accounted for 12% of national electricity generation, representing ~$60 billion in asset value in 2025 dollars. As newer generating units proved capable of higher output, operating costs fell, which made adding more units feasible. In turn, that spread the cost of new equipment over more users, driving the cost of providing electricity down consistently over time.

Source: Construction Physics, Contrary Research

By 1900, electricity still only provided under 5% of mechanical power in the US, while only 8% of homes had electric lights. But the potential was obvious. As one early 20th-century writer imagined, “the day must come when electricity will be for everyone, as the air they breathe.”

Expansion & The Rise of Utilities (1900-1929)

In the early 1900s, dozens of isolated urban grids began linking up. Technological leaps like higher-voltage transmission lines allowed power plants to grow even larger and more efficient, which drove down the cost per kilowatt-hour even further. Utilities adopted a “grow and build” model: more customers and bigger plants created a virtuous cycle of cheaper power and higher demand. By the 1920s, the US had thousands of power stations, mostly burning coal, plus a growing fleet of hydroelectric dams in areas like the Northeast and Pacific Northwest.

Source: South Shoreline Museum

The first long-distance transmission lines had started appearing back in 1889, and by 1912 nearly all high-voltage lines worldwide were using AC, many linking hydro dams to cities. However, regulation hadn’t kept up with this rapid growth. Large utility holding companies formed monopolies in many cities, sometimes with abusive practices. Meanwhile, residential electricity adoption continued growing rapidly, so that by 1930 US electricity generation hit 114 billion kWh (a 20x increase from 1902) and about 68% of American homes had electric service. As Franklin D. Roosevelt, then a presidential candidate, noted in 1932, electricity had become “no longer a luxury, but a definite necessity” for the daily lives of Americans.

Source: Construction Physics, Contrary Research

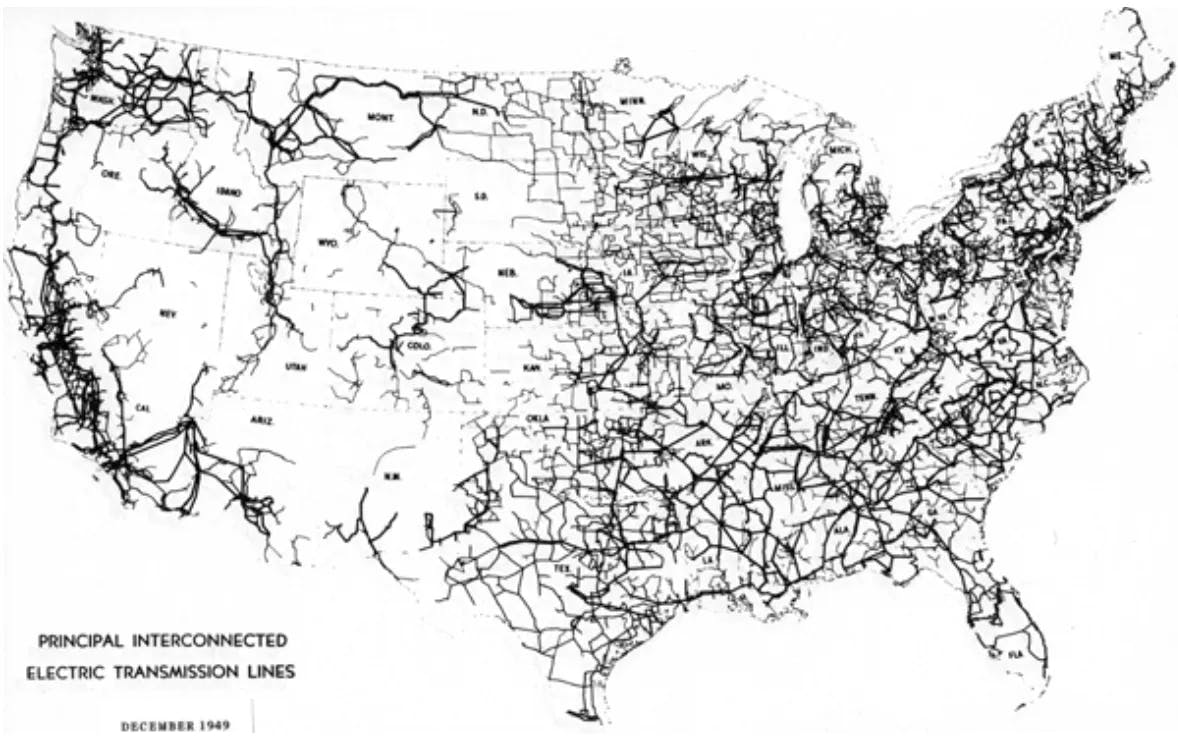

By 1949, the US had built a high-voltage transmission network, turning a once-disconnected patchwork of local grids into an interconnected web spanning the continent. In 1910, only about 10% of US households had electricity; by 1930, nearly 70% did, and by the post-WWII years electrification was virtually universal. This rapid build-out – financed by both private capital and public programs – stitched together cities, suburbs, and rural areas under a common grid, setting the stage for the modern power industry.

Source: Construction Physics

New Deal Electrification & Public Power (1930s)

The first several decades of electrification in the US were driven largely by private monopolies. Then, in the 1930s, as the Great Depression took hold, the federal government began to play a bigger role in electricity’s rollout. Huge utility empires like Insull’s collapsed under financial strain, exposing the need for oversight. In 1935, Congress passed the Public Utility Holding Company Act (PUHCA) to break up bloated utility holding companies, boost healthy competition, and regulate interstate power sales. At the same time, President Roosevelt’s New Deal launched programs to bring electricity to the countryside, which private utilities had previously ignored. In 1930, only about 1 in 10 farms had power. The Rural Electrification Act of 1936 provided federal loans for cooperatives to build lines into America’s heartland, raising rural electrification from 10% to nearly 90% of farms by 1950.

Large-scale public power projects were intended to realize the promise of abundant, cheap electricity. The federally owned Tennessee Valley Authority (TVA), created in 1933, built dams and power plants across a seven-state region, both to electrify poor rural areas and to spur economic development. Massive hydroelectric dams like Hoover (completed 1936) and Grand Coulee (1942) were engineering marvels that delivered affordable power to millions. These projects proved that renewable energy at scale could be a pillar of the grid. By the beginning of World War II, government programs accounted for roughly 20% of new generating capacity through the decade, creating a more balanced mix of public and private power.

Postwar Boom & The Golden Age (1945–1968)

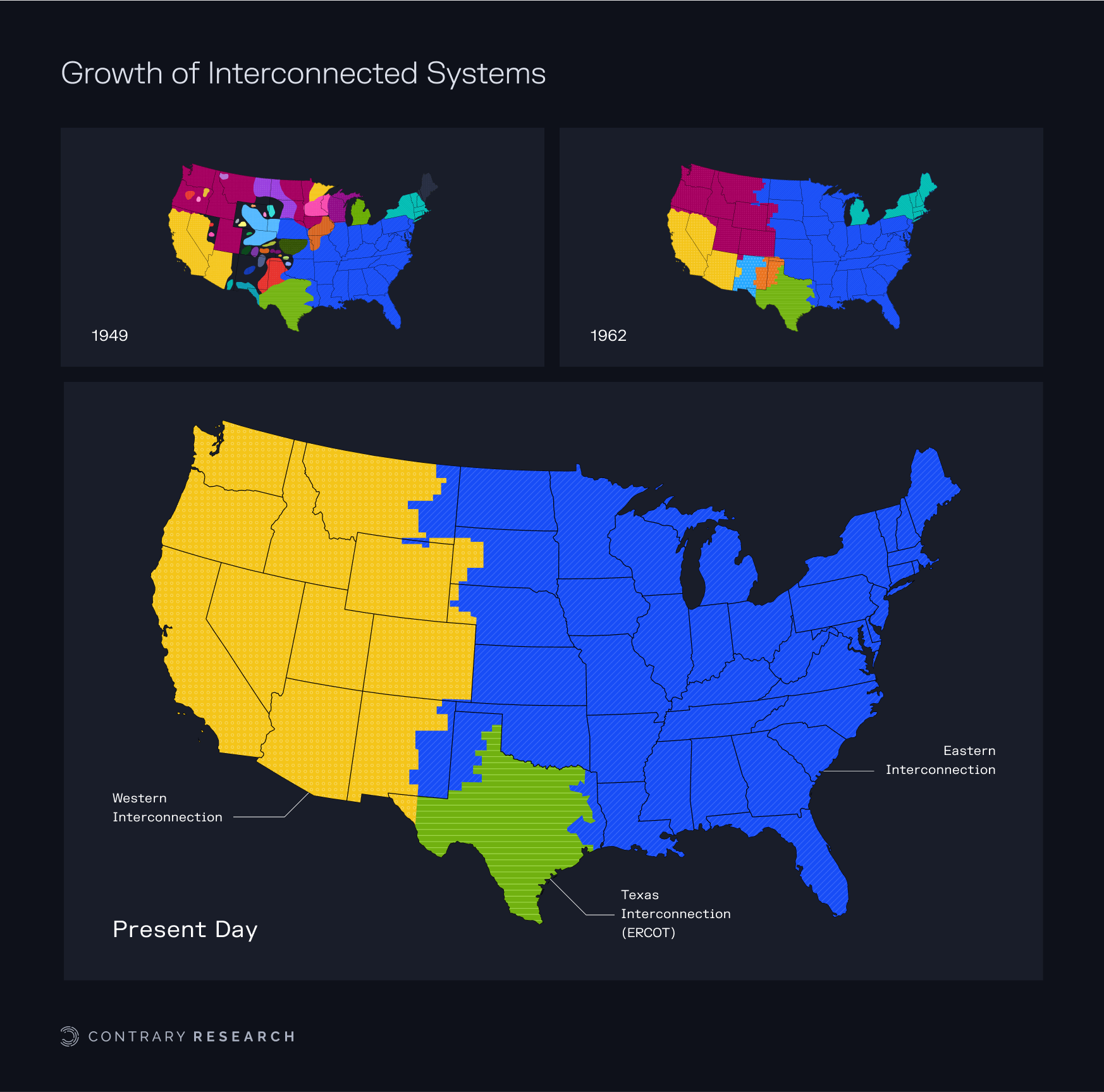

After WWII, electricity demand surged alongside economic growth. The postwar decades became the golden age of the US power grid. Utilities, still mostly regulated monopolies, built out an enormous amount of infrastructure: new coal and oil plants, the first nuclear reactors, and thousands of miles of transmission lines criss-crossing states. By the 1950s, three major synchronous energy grids (Western, Eastern, and Texas) began to take shape, interconnecting previously isolated systems for reliability and trading. Utility executives enjoyed near-hero status, and power engineering was the cutting-edge career that every college student was pining for. Steady technological progress made power cheaper each year – economies of scale were thought to be practically limitless. The average price of electricity fell steadily, keeping consumers and regulators happy. Electricity represented progress. If you had electricity, you could access modern amenities like radios, telephones, refrigerators, and more.

Source: Our World in Data, Contrary Research

Utility monopolies were justified by the theory of natural monopoly – the idea that a single operator could deliver power at lower cost due to the huge capital needed and the inefficiency of duplicate networks. This favored vertical integration: a single utility owning generation, transmission, and distribution. In return for exclusive territories, utilities accepted oversight by public commissions and a mandate to serve all customers reliably. Disparate operations across the country increasingly merged into one centralized interconnected system.

Source: Construction Physics, Contrary Research

This regulatory compact guaranteed utilities a profit as long as they met demand and kept prices low. The model worked for decades: electricity use grew roughly 7% annually through the 1950s and ’60s, fueling American prosperity. By 1960, virtually 100% of US households had electric service, up from 67% in 1930. The market for electrical utilities had become the largest industry in the US, reaching $69 billion of gross capital and accounting for 40% of the world’s electricity consumption. It was the most extensive man-made network on earth, and it appeared to run like clockwork. Growth in energy demand was a straight line pointed up.

However, by the mid-1960s, cracks were starting to emerge in the American electrical grid. In the Northeast Blackout of 1965, a single transmission relay failure cascaded into a massive outage affecting 30 million people. In comparison, the famous Texas outage of 2021 left only 4.5 million people without power. The 1965 blackout served as a wakeup call to install better coordination and safeguards. In response, the industry formed the North American Electric Reliability Council (NERC) in 1968 to set voluntary reliability standards. But that was just the beginning of the massive shift that was bringing the era of unchallenged utility monopolies to an end.

Turbulence, Environmental Regulation & Competition (1970–2000)

As the electric grid approached its 100th birthday, more problems started to emerge, including soaring energy prices, new environmental and safety regulations, and experiments with market deregulation. In 1970, Congress passed the National Environmental Policy Act (NEPA), requiring environmental impact reviews for major projects including power lines and plants. Suddenly, building a transmission line or a generating station meant navigating years of permitting and legal challenges. The Clean Air Act of 1970 and its amendments forced utilities to invest in pollution controls on coal plants, which drove up costs.

At the same time, demand growth slowed sharply after the 1973 OPEC oil embargo and the 1979 energy crisis. The industry, which had banked on endless growth, was caught flat-footed: the 1970s saw cancelled nuclear projects, utility financial crises, and a sense that the old model was breaking. In 1978, amid concerns about energy security, Congress passed the Public Utility Regulatory Policies Act (PURPA) – a seemingly small provision that had outsized consequences. PURPA encouraged independent power producers (non-utility generators) by obligating utilities to buy power from qualifying renewable and cogeneration facilities. For the first time, utilities were forced to allow others to supply energy on their wires.

This crack was further widened by court rulings like Otter Tail in 1973, a landmark antitrust case in which the Supreme Court held that a utility had to transmit power from a competitor. By the 1990s, regulators were pushing for more competition. Policy makers believed that introducing competition would drive innovation and lower costs, as had happened in airlines and telecom. The Federal Energy Regulatory Commission (FERC) issued Orders 888 and 889 in 1996, requiring utilities to open their transmission lines to others on equal terms and encouraging the formation of independent system operators (ISOs) to run regional grids.

About half of US states moved to restructure their electricity markets – separating power generation from distribution and giving customers a choice of power supplier. However, the journey was bumpy. California’s botched deregulation in 2000 led to price spikes and blackouts, exacerbated by Enron’s market gaming. Several utilities went bankrupt after being forced to buy expensive power whose costs they couldn’t pass on to customers. By the early 2000s, enthusiasm for competition cooled, but the hybrid market structure remained: today, some regions (like Texas and much of the Midwest and Northeast) have competitive wholesale markets, while others (like the Southeast, and much of the West) still rely on vertically integrated utilities.

One downside of this patchwork was underinvestment. Neither regulated utilities nor merchant generators had a strong incentive to invest in long-range grid upgrades. Utilities focused on reliability over innovation, wary of spending on projects that regulators might not let them recoup. As a result, the grid entering the 21st century was reliable, but aging and technologically stagnant. The US still largely ran on an architecture from the 1960s: big centralized plants, analog controls, and thin margins of spare capacity. For a while, that seemed sufficient. But new forces, from extreme weather to renewable energy to electric vehicles, would soon push the old grid to a breaking point.

The Breaking Point: The Distributed Energy Grid

By the 2020s, the US electric grid found itself stretched to its limits in ways its original architects never imagined. A system built for centralized, predictable power flows is now being asked to juggle extreme peaks, bi-directional energy flows, and an explosion of new devices plugging in at the edge. It’s important to examine the key stress fractures: aging hardware, surging demand, bottlenecks in connecting new energy sources, and the growing complexity of managing a grid that’s no longer one-way. Together, these factors have created a perfect storm – one that has already led to major outages and warnings that worse may come. As Packy McCormick wryly noted, “the more progress we make in wind, solar, EVs, and electrification, the more unstable the grid gets.”

Strained & Aging Infrastructure

Much of the US transmission and distribution infrastructure built in the mid-20th century is still in service – and it’s well past middle age. About 70% of transmission lines are over 25 years old, with many having exceeded their design life. Transformers at substations, the critical nodes that route power, are aging too, and replacements are hard to come by. In fact, a nationwide transformer shortage has become a serious concern. Old equipment is more failure-prone: insulation wears out, metal fatigues, and capacity is often constrained compared to modern standards. Utilities perform maintenance and upgrades, but investment has lagged. One reason is the fragmented ownership of the grid – no single entity is responsible for a holistic overhaul, and utility regulators often prioritize keeping rates low over proactive capital investments. The result is a grid that’s increasingly fragile.

Major blackouts have provided painful evidence. In 2003, a high-voltage line in Ohio brushed against an untrimmed tree and shut down, triggering a cascade that left 50 million people in the dark from Detroit to New York. The root cause? A combination of equipment failure, lax tree trimming by a cost-cutting utility that skipped maintenance, and a software glitch in the grid control room that prevented timely response. It was a 21st-century crisis with distinctly 20th-century causes.

More recently, extreme weather has been the nemesis of aging grids. In August 2020, a heatwave forced California into rolling blackouts when several old gas plants tripped offline and there wasn’t enough reserve power – a scenario exacerbated by a reliance on imports that weren’t available at the time. The following winter, in February 2021, Texas suffered a far more lethal grid collapse: a deep freeze during Winter Storm Uri knocked out power plants and froze gas wellheads, leading to days-long outages for millions. By some estimates, over 700 people died in the Texas blackout and economic losses topped $130 billion. While Texas’s isolated grid and market design were factors, a fundamental issue was infrastructure not hardened for unusual conditions: gas pipelines, wind turbines, and power lines all failed in the cold. These events underscore that reliability standards of the past (usually planning for “1-in-10-year” events) may be insufficient in an era of more frequent climate extremes.

The reliability metrics tell a similar story. The US power grid has become notably less dependable relative to peer nations. The average American electricity customer experiences nearly 8 hours of outage per year, including everything from brief hiccups to long blackouts. While that may not sound extreme, contrast that with Germany, where the average annual outage is only 13 minutes. The US grid’s “uptime,” at ~99.9%, is an order of magnitude worse than the ~99.99% seen in countries with more modern grids.
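The uptime comparison can be checked directly from the outage figures quoted above. This is a back-of-the-envelope sketch that assumes a 365-day year and treats "nearly 8 hours" as exactly 8; the function name is illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def uptime_percent(outage_minutes_per_year: float) -> float:
    """Share of the year, in percent, during which power is available."""
    return 100 * (1 - outage_minutes_per_year / MINUTES_PER_YEAR)


us_outage_min = 8 * 60   # "nearly 8 hours" per customer per year
de_outage_min = 13       # Germany's average annual outage

us_uptime = uptime_percent(us_outage_min)
de_uptime = uptime_percent(de_outage_min)
outage_ratio = us_outage_min / de_outage_min

print(f"US uptime:      {us_uptime:.3f}%")
print(f"Germany uptime: {de_uptime:.3f}%")
print(f"US customers see about {outage_ratio:.0f}x more outage time")
```

With these inputs the US lands at about 99.91% and Germany at about 99.998%, a gap of roughly 37x in outage minutes, which is consistent with the order-of-magnitude framing.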

Utilities in the US are finding it harder to meet even their own historical performance: blackouts are becoming more frequent in many regions. NERC has warned that parts of the country face heightened risk of insufficient power, especially as older coal plants retire and extreme weather events intensify. Aging infrastructure isn’t just about steel in the ground – it’s also about people. The utility workforce that built and maintained the 20th-century grid is itself graying. A wave of retirements by experienced grid operators and lineworkers has led to a talent crunch. Over half of utility workers now have under 10 years of experience in the field, and utilities report their highest rates of non-retirement attrition in decades. Training new hires takes time, and some specialized knowledge walks out the door with the retirees. This human factor means that diagnosing and fixing complex grid problems – already a challenge – could get even harder just as the grid’s complexity is exploding.

Rising Demand = New Peaks

While the wires and towers have been aging, the demands placed on the grid are increasing. After a plateau in the 2000s, US electricity consumption is climbing again, propelled by the electrification of transportation, heating, and industry. America used a record 4.05 trillion kWh of electricity in 2022 – about 14x as much as in 1950. Now, a new wave of electric loads is cresting: electric vehicles (EVs), data centers powering cloud computing and AI, and the electrification of everything from stoves to factory furnaces. Each of these trends threatens to reshape demand peaks in ways the grid wasn’t designed for.

Take EVs for example. Transportation electrification is expected to add huge load demand in the evenings as drivers plug in after work. By 2030, EVs could draw nearly 5% of US electricity output, equivalent to dozens of large power plants’ worth of demand. A single electric car charging on Level 2 (240V) can pull ~7 kW from the grid – akin to adding another house to the grid for a few hours. Clusters of EVs in neighborhoods have already caused local transformers to blow out because distribution circuits were never sized for that surge of simultaneous charging.
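The EV figures above follow from simple arithmetic. The sketch below makes several assumptions not stated in the text: a 30 A charging current, a 1 GW "large" power plant running at a 90% capacity factor, and the 5% share applied to 2022 output rather than a 2030 projection.

```python
def charger_power_kw(voltage_v: float, current_a: float) -> float:
    """Electrical power drawn by a charger, P = V * I, in kilowatts."""
    return voltage_v * current_a / 1000


# Level 2 charging: 240 V from the text; 30 A is an assumed circuit current.
level2_kw = charger_power_kw(240, 30)

# EV share of national output, using the 2022 total quoted in the text.
US_ANNUAL_TWH = 4050          # 4.05 trillion kWh
EV_SHARE = 0.05               # "nearly 5%" by 2030
ev_demand_twh = US_ANNUAL_TWH * EV_SHARE

# Assumed plant size and capacity factor, for illustration only.
PLANT_GW = 1.0
CAPACITY_FACTOR = 0.9
HOURS_PER_YEAR = 8760
plant_output_twh = PLANT_GW * HOURS_PER_YEAR * CAPACITY_FACTOR / 1000  # GWh -> TWh

equivalent_plants = ev_demand_twh / plant_output_twh

print(f"Level 2 charger draw: {level2_kw:.1f} kW")
print(f"EV demand:            {ev_demand_twh:.1f} TWh per year")
print(f"Equivalent plants:    {equivalent_plants:.0f}")
```

Under these assumptions a Level 2 charger draws about 7.2 kW and the 5% share works out to roughly 26 one-gigawatt plants, in line with "dozens of large power plants."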

Data centers are another behemoth: a large hyperscale data center might consume 30–50 MW continuously – as much as tens of thousands of homes – and new AI supercomputing clusters only up the ante. Tech giants are building data centers at breakneck speed, particularly in regions like Northern Virginia, Texas, and the Midwest. Often, the local grid infrastructure struggles to keep up with the connections and reliability these facilities demand. Meanwhile, cities and states pushing electrification (replacing gas heating and cooking with electric heat pumps and induction stoves) are creating new winter peak loads. The US grid, especially in warmer regions, has historically been built around summer air-conditioning peaks. Now, as electric heating grows, some systems face high winter peaks too – requiring upgrades to avoid capacity shortfalls on cold nights.

Grid planners are juggling a much less predictable load profile. Many misjudged the speed of these changes. For instance, California has repeatedly urged consumers to choose EVs, but even with adoption trailing estimates, the supporting infrastructure is insufficient, leaving utilities scrambling to upgrade neighborhood transformers and add “make-ready” infrastructure for chargers. This is not just because more EVs increase overall demand, but also because EV adoption changes where and when that demand hits. Peak loads are growing faster than average loads, meaning the gap between normal operation and stressed conditions is widening. If every driver charges right after work, or every heat pump kicks on during cold weather, the evening net load can spike sharply.

The interconnection queue backlog illustrates the challenge of serving new demand and new supply. As of the end of 2023, a staggering 2,600 GW of proposed generation and storage projects were waiting for permission to connect to US grids – an amount double the current installed capacity. While this includes mostly new supply (solar, wind, batteries), the crunch affects demand projects too, such as large industrial loads or data centers requiring new substations. The typical wait for a project to get connected had ballooned to over five years as of April 2024, and many projects languish even longer or withdraw out of frustration.

Source: Energy Markets & Policy

Historically, over 70% of projects in queues never get built. These bottlenecks mean that even when investors are ready to build clean energy or serve new loads, grid expansion is the limiting factor. Regions like California and Texas have, at times, had to halt new solar or crypto-mining hookups until upgrades occur. Solar, storage, and wind account for 95% of active capacity in queues. As a result of these massive delays, bureaucratic and physical grid constraints are now a throttle on economic growth and decarbonization.

Source: Latitude Media
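To put the queue numbers in perspective, the rough calculation below combines the figures quoted above. It assumes the 70% attrition rate, which the text states per project, applies equally to capacity; that is a simplification, since large and small projects may drop out at different rates.

```python
QUEUE_GW = 2600                  # capacity waiting in queues, end of 2023
INSTALLED_GW = QUEUE_GW / 2      # implied by "double the current installed capacity"
NEVER_BUILT_SHARE = 0.70         # "over 70%" of queued projects never get built

# Assumption: attrition by capacity matches attrition by project count.
expected_built_gw = QUEUE_GW * (1 - NEVER_BUILT_SHARE)
share_of_installed = expected_built_gw / INSTALLED_GW

print(f"Implied installed capacity: {INSTALLED_GW:,.0f} GW")
print(f"Capacity likely to be built: up to {expected_built_gw:,.0f} GW")
print(f"Relative to today's fleet:   {share_of_installed:.0%}")
```

Even with most projects dropping out, this sketch suggests up to about 780 GW could eventually connect, equal to roughly 60% of the implied installed base.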

Two-Way Power Flows & DERs

Compounding the issue, the grid’s architecture is flipping from one-way to two-way flows. Traditionally, power flowed top-down: big power plants > high-voltage transmission > local distribution > homes and businesses at the end. Now, with the rise of distributed energy resources (DERs) like rooftop solar, home batteries, and even EVs that can send power back to the grid, electrons flow back and forth.

The legacy grid wasn’t designed for this. Edison and Tesla never imagined that homes on an energy grid network would send power back. When solar panels on your roof send excess power back into the neighborhood circuit at noon, it can cause voltage spikes that the old equipment wasn’t configured to handle. Protection systems like circuit breakers and fuses are often not set up for reverse flows, which raises the risk of faults or even dangerous backfeeds that could shock lineworkers during an outage. By 2023, over 4% of US homes had solar panels, and in some states (e.g. Hawaii, California) it’s well above 10% – enough that at certain times, distribution feeders actually become net exporters of power. This is turning the grid on its head.
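The voltage effect of reverse flow can be approximated with the standard first-order voltage-drop formula, ΔV ≈ (P·R + Q·X) / V. The sketch below uses a hypothetical 12.47 kV feeder with 2 ohms of resistance and a 3 MW swing in each direction; these values are invented for illustration and describe no real circuit.

```python
def voltage_shift_pct(net_load_kw: float, r_ohm: float, v_nominal: float,
                      q_kvar: float = 0.0, x_ohm: float = 0.0) -> float:
    """Approximate voltage shift at the far end of a feeder, in percent of nominal.

    Uses the first-order approximation dV = (P*R + Q*X) / V.
    net_load_kw > 0 means customers draw power (voltage sags, result < 0).
    net_load_kw < 0 means customers export power (voltage rises, result > 0).
    """
    drop_v = (net_load_kw * 1e3 * r_ohm + q_kvar * 1e3 * x_ohm) / v_nominal
    return -100.0 * drop_v / v_nominal


# Hypothetical feeder: 12.47 kV nominal, 2 ohms of line resistance.
FEEDER_V = 12_470
FEEDER_R_OHM = 2.0

evening_shift = voltage_shift_pct(3000, FEEDER_R_OHM, FEEDER_V)    # 3 MW of load
midday_shift = voltage_shift_pct(-3000, FEEDER_R_OHM, FEEDER_V)    # 3 MW of solar export

print(f"Evening (drawing 3 MW):  {evening_shift:+.2f}%")
print(f"Midday (exporting 3 MW): {midday_shift:+.2f}%")
```

In this example the same feeder sags by almost 4% in the evening and rises by almost 4% at midday. Equipment tuned only to correct for sag can end up pushing an already high midday voltage higher still.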

Utilities have started adopting smart inverters and voltage regulators to manage the fluctuations, but many systems are behind the curve. The alternative – not integrating DERs – isn’t viable, as consumers and businesses keep installing them for cost and sustainability reasons. We’re essentially forcing a centralized machine to behave like a distributed network, and it’s creaking under the strain.

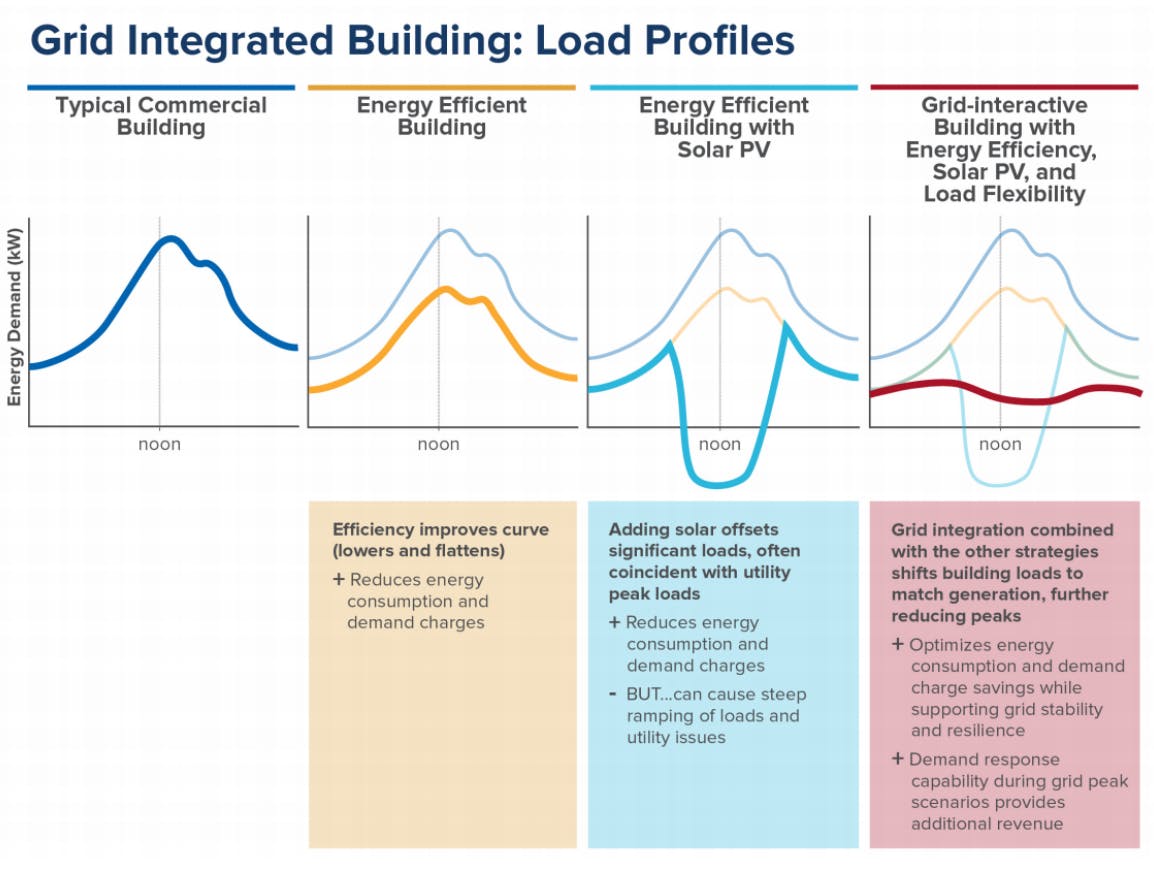

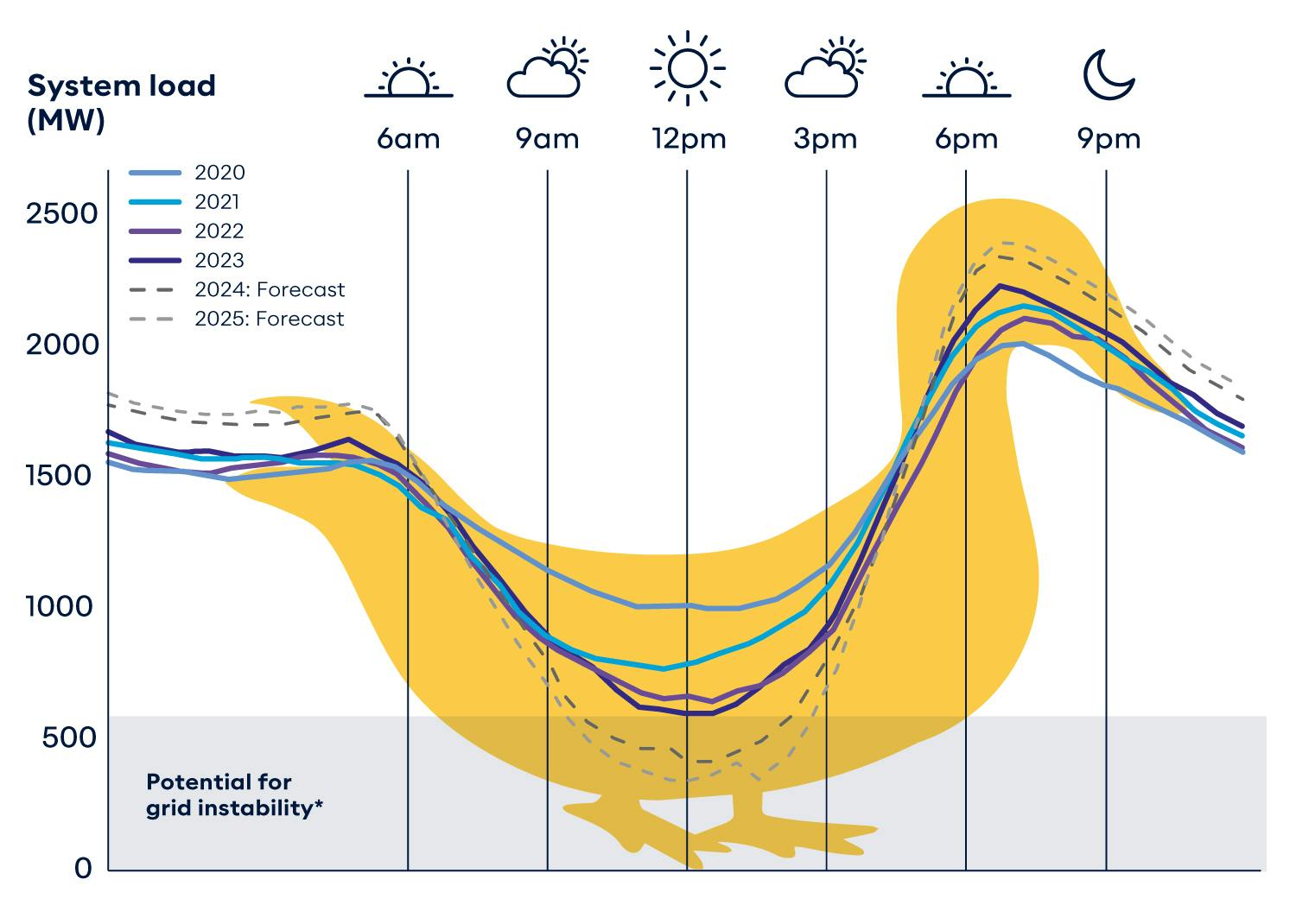

A vivid illustration of this dynamic is the famous duck curve. This graph (so named because it resembles a duck’s silhouette) shows net grid demand over the course of a day, accounting for renewables. In solar-heavy areas, midday demand net of solar dips low (the duck’s belly) because rooftop and solar farm generation displaces a lot of grid supply. But come late afternoon and sunset, solar generation plummets just as people come home and turn on appliances – causing a rapid ramp-up in demand on traditional power plants (the duck’s neck). California’s duck curve has grown more extreme each year. In spring 2023, CAISO, the California grid operator, saw multiple days where 60–80% of demand was met by solar at midday, followed by a 13,000+ MW ramp in the early evening.

Source: Synergy

Managing this steep ramp requires fast-reacting power sources and/or storage, which are in short supply. In practical terms, California sometimes has too much power at noon (leading it to curtail, or spill, solar and even pay neighboring states to take power) and then scrambles for every megawatt at 7pm (leaning on gas plants and emergency programs to avoid blackouts).
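The duck curve described above is simply demand minus solar output, hour by hour. The sketch below uses invented hourly values shaped to resemble a spring day; they are not CAISO data, and the three-hour ramp window is an assumption.

```python
# Hypothetical hourly values in MW for hours 0-23 (illustration only, not CAISO data).
demand_mw = [1000 * x for x in (22, 21, 20, 20, 20, 21, 23, 25, 26, 27, 27, 28,
                                28, 28, 29, 30, 31, 32, 33, 32, 30, 27, 25, 23)]
solar_mw = [1000 * x for x in (0, 0, 0, 0, 0, 0, 1, 5, 10, 14, 16, 17,
                               17, 16, 14, 11, 7, 3, 0, 0, 0, 0, 0, 0)]

# Net load is what conventional plants, storage, and imports must cover.
net_load_mw = [d - s for d, s in zip(demand_mw, solar_mw)]


def max_ramp(series: list, window_hours: int) -> int:
    """Largest increase in the series over any span of window_hours."""
    return max(series[i + window_hours] - series[i]
               for i in range(len(series) - window_hours))


belly_mw = min(net_load_mw)                  # the duck's belly
evening_ramp_mw = max_ramp(net_load_mw, 3)   # the duck's neck
peak_solar_share = max(s / d for s, d in zip(solar_mw, demand_mw))

print(f"Midday net load minimum: {belly_mw:,} MW")
print(f"Largest 3-hour ramp:     {evening_ramp_mw:,} MW")
print(f"Peak solar share:        {peak_solar_share:.0%}")
```

In this made-up profile, net load bottoms out at 11,000 MW around midday and then climbs 14,000 MW within three hours, the same order as the ramp cited for California.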

Countries like Germany face a similar “renewables duck” – Germany often has excess wind at night and must export it, then needs quick backup when the wind fades. Grid congestion is another aspect of the two-way challenge. Renewable generation is often in remote areas (wind in the plains, sun in the deserts) far from demand centers. Without sufficient transmission, we get bottlenecks. For example, in the UK, wind farms in Scotland are frequently asked to curtail output because the north-to-south power lines are maxed out.

In early 2025, British consumers paid £253 million in just two months to curtail wind farms and fire up gas plants closer to demand due to grid constraints. Germany, too, struggles with bottlenecks between its windy north and industrial south, leading to costly redispatch (turning off renewables and ramping up coal/gas elsewhere). Curtailments amounted to about 3.5% of Germany’s renewable generation in 2024 and grid management costs ran into the billions of euros. These inefficiencies ultimately hit consumers’ bills. It’s a sobering paradox: even as we build plenty of clean generation, the lack of wires to move it or storage to hold it means we sometimes waste cheap, green energy and rely on fossil fuels as a fallback.

Regulatory & Software Headaches

Finally, the grid is groaning under institutional friction and outdated IT systems. On the regulatory side, getting anything big built is notoriously hard. High-voltage lines can take 10+ years from concept to operation, navigating a maze of permits at local, state, and federal levels. Environmental reviews, while crucial, add years if not managed well. Local opposition in the form of NIMBYism adds obstacles to the building of transmission lines, wind farms, and nuclear power plants, which can often delay or kill projects. Everyone wants cleaner energy, but not the new pylons in their backyard. Even upgrading a substation can trigger lengthy proceedings if neighbors object. This sluggishness means the grid cannot adapt quickly to emerging needs.

There is also a jurisdictional split: distribution grids are managed by utilities under state oversight, while transmission often falls under multi-state Independent System Operators (ISOs), Regional Transmission Organizations (RTOs), and the Federal Energy Regulatory Commission (FERC). Coordinating investments across these domains is tough. For example, a battery on a homeowner’s wall could provide local backup (a distribution benefit) but also grid services to a regional market (a transmission-level service) – who regulates that, and how do you fairly compensate the owner for it? These questions are being worked out painfully slowly in rulemaking. A FERC order in September 2020 (Order No. 2222) directed grid operators to open wholesale markets to aggregated DERs (like fleets of batteries and smart thermostats), but full implementation has been sluggish – deadlines have slipped into 2026 for energy markets and some regions are pushing back, citing technical difficulties. In the meantime, many DER projects sit on the sidelines or only play on the retail side.

Equally concerning is the software and situational awareness gap. The information systems that the utility industry relies on are famously old-school. Many grid control systems date back to the 1980s or 1990s, running on antiquated code and often not fully networked. Utility operational software for things like supervisory control and data acquisition (SCADA), outage management, and distribution automation has evolved in silos. Different vendors supply different pieces, and they don’t always talk to each other smoothly, if at all. There were more than 3,000 electric utilities in the US as of July 2024, each potentially using a unique cocktail of software. As a result, data is fragmented. A rooftop solar inverter might report to one system, a smart meter to another, and a utility-scale battery to yet another – all with limited integration. And when those systems can’t share data in real time, operators can miss the early signs of trouble that precede a blackout.

The electric grid’s digital layer today is akin to the early Internet if every company had its own proprietary network. There is no equivalent of a common “TCP/IP” for energy devices yet. The need to bridge this gap is one of the reasons two of the co-authors of this piece are building Voltra*, a startup in this space. As thousands of distributed devices connect, utilities lack a unified way to manage them. Every ISO is different, every utility is different, and none of their software is really coordinated with each other. This leads to absurdities like a solar farm sending its status to the transmission operator, but the local distribution utility not knowing about a voltage issue until a customer calls in. The risk of cyberattack also looms – old systems were not designed with modern cybersecurity in mind, and greater connectedness means more vulnerability if not properly secured.

Lastly, as utilities try to bolt on new functionalities, like managing EV charging or battery dispatch, they often find their software simply wasn’t built for that. Imagine trying to run smartphone apps on a 1990s flip phone – that’s essentially what many grid operators are attempting. Without a significant digital overhaul, the grid’s physical upgrades alone won’t be enough. However, every crisis is an opportunity. The stresses we’ve outlined have spurred a flurry of innovation and re-thinking of how the grid should operate. From mega-scale batteries to smarter software and novel market mechanisms, the energy industry is racing to “write” the future of the grid differently.

Future-Proofing the Energy Grid

Future-proofing the energy grid is as much about software and policy as it is about steel and copper. This includes hardware innovations – like advanced transmission lines, energy storage, and next-gen nuclear reactors – as well as digital and market innovations – like smart devices, virtual power plants, and decentralized trading. The pieces of the future grid are coming into place, but stitching them together will require breaking old paradigms. It’s not just about adopting new technology, but about orchestrating an ecosystem of devices, software, and economic incentives in harmony. Think of how the internet evolved: open standards, smarter endpoints, and new business models transformed a patchwork network into a resilient web. The electric grid may be on the cusp of a similar transformation – an “Electricity Internet” where power flows as seamlessly as data, guided by intelligence at the edges.

Rebuilding the Backbone: Transmission & Big Storage

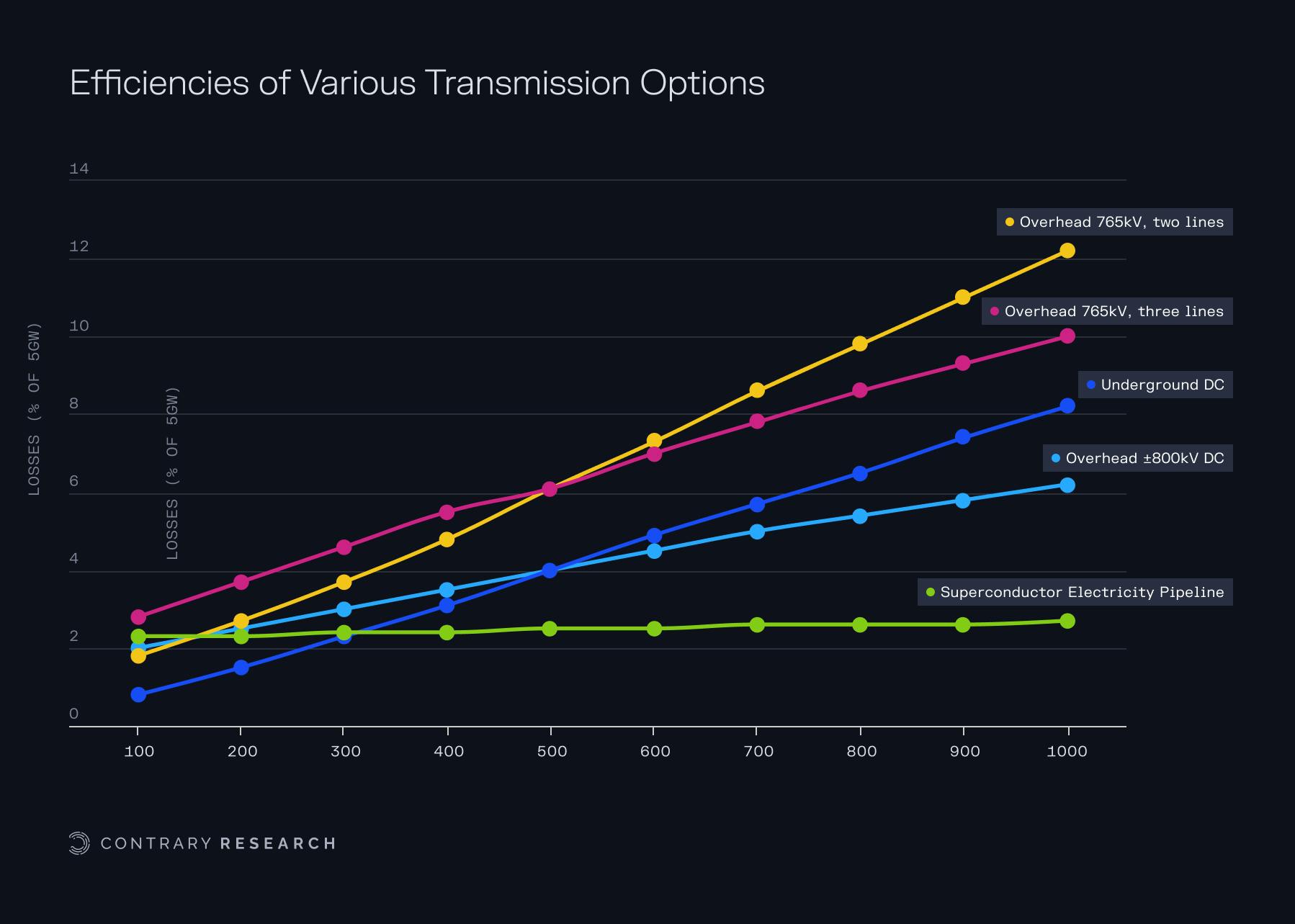

One cornerstone of a future-proof grid is strengthening its backbone – the high-voltage network and large-scale balancing resources that support it. This means expanding transmission capacity, potentially with new technologies like high-voltage direct current (HVDC) and superconductors, and deploying grid-scale energy storage as a buffer for renewables.

High-Voltage Superhighways (HVDC)

Long-distance transmission is the unsung hero of grid reliability and clean energy expansion. The ability to send power from where it’s abundant (like a windy plain at night) to where it’s needed (a city two time zones away at rush hour) can smooth out fluctuations and prevent local shortages. However, traditional AC lines have limits over great distances due to losses and stability issues.

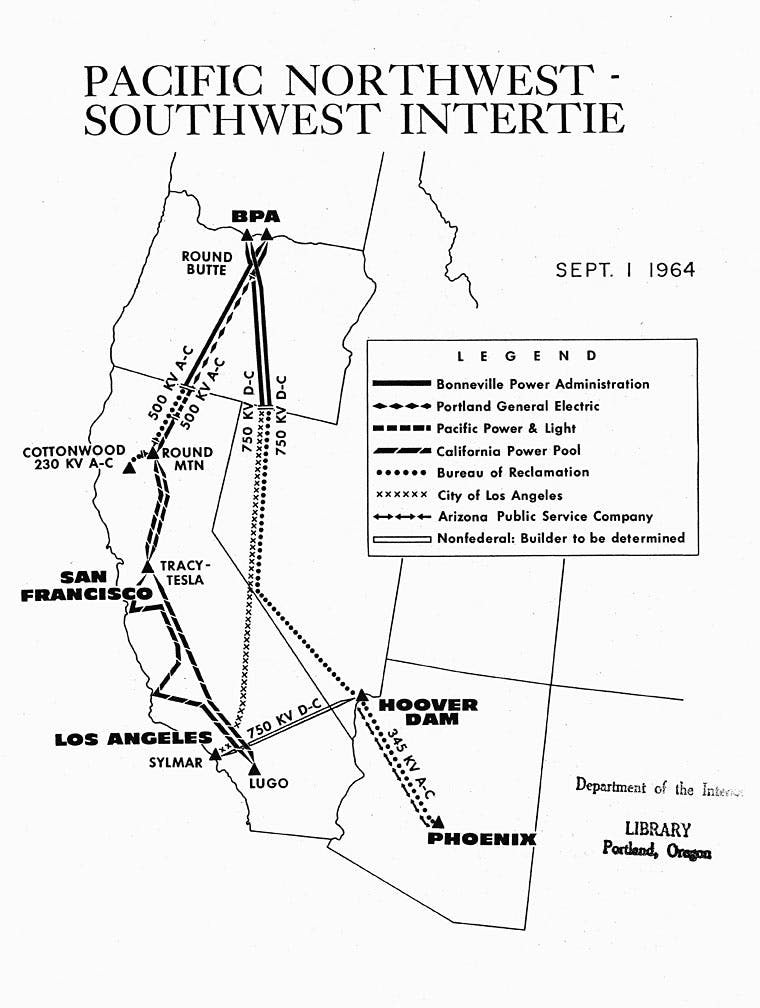

Enter high-voltage direct current (HVDC) transmission. By converting AC to DC for transport, hearkening back to Edison’s energy of choice, HVDC lines can send bulk power hundreds or even thousands of miles with lower line losses and without the need to keep two far-flung AC grids in perfect sync. HVDC is not new. The US built several large DC links in the 1970s. The Pacific DC Intertie, for example, carries hydropower from the Northwest to Southern California.

Source: Oregon History Project

But other countries have leapt ahead in recent years. China is the undisputed HVDC champion: it has built dozens of ultra-high-voltage DC lines crisscrossing the country, including a 3.3K km (2.1K mile) ±1.1 million-volt line from Xinjiang that carries 12 GW (gigawatts) of power to the east. As of 2023, China had 34 ultra high voltage (UHV) transmission lines in operation. The US, in contrast, has none at ultra-scale. The disparity is striking: while China rapidly builds “power freeways” linking its remote solar/wind bases to cities, US projects like the TransWest Express, a proposed Wyoming-to-California HVDC line, have languished in permitting for over a decade.

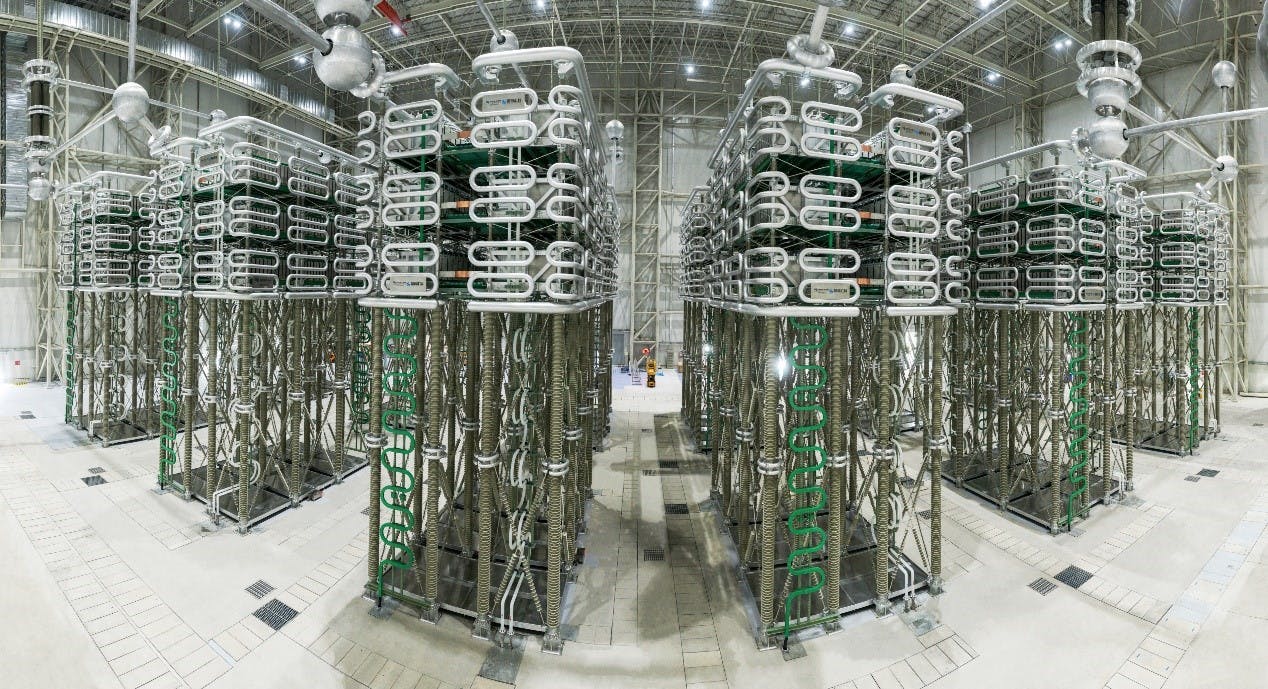

HVDC offers not just efficiency but also control. Grid operators can precisely dial power flows on a DC link, like opening or closing a valve, which is valuable for stability. Modern HVDC converter stations use advanced power electronics, thanks to companies like Hitachi Energy (formerly ABB) and Siemens, to integrate into AC grids and even support multi-terminal or meshed HVDC networks.

Looking ahead, more HVDC could enable a “macro grid” in the US – tying together regions to share resources. Studies show a national HVDC network would significantly reduce curtailment of renewables and cut the total capacity needed since regions could lean on each other’s excess. But cost and politics are hurdles: HVDC lines require expensive terminals at ~$200 million per GW each and are only economical for long hauls of over 370 miles. They also often cross state lines, inviting jurisdictional battles. Still, momentum is building. The Department of Energy has earmarked billions for “transmission corridors” and there are active proposals for offshore wind HVDC grids in the Northeast, and a Plains States HVDC network linking wind farms directly to eastern cities.

Source: RXHK

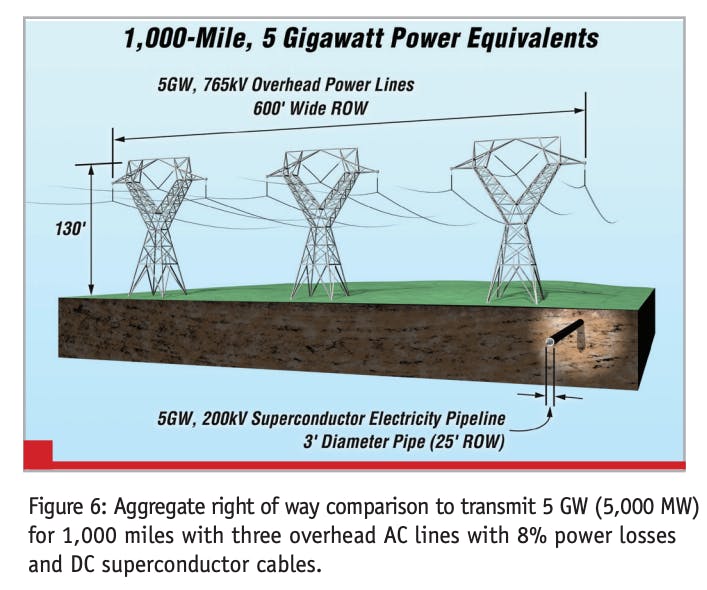

Superconducting cables are a more futuristic twist on transmission, using materials that have virtually zero electrical resistance when cooled to very low temperatures, allowing extremely dense power transfer. Imagine an underground cable carrying 5–10x the power of a conventional line with no resistive losses.

Source: Right of Way

Demonstrations of superconducting power lines have been done in cities like Chicago and Essen, Germany. These projects promise big urban payoff: you could replace a bundle of bulky cables or a forest of pylons with a few slim superconducting lines in existing rights-of-way. They eliminate the ~5–10% losses typical on long distance lines. However, they require refrigeration, usually using liquid nitrogen, and are very expensive for now. As materials improve, high-temperature superconductors could work at -200°C instead of -269°C. In addition, as manufacturing scales, we may see strategic superconducting links in dense areas or critical corridors.

Source: Right of Way, Contrary Research

For example, carrying GW-level power into Manhattan or the San Francisco Bay Area through a superconducting conduit could bypass the bottleneck of limited cable routes. Early projects are small scale, but companies are working on it.

Whether by HVDC, superconductors, or just more high-voltage AC lines, expanding transmission is non-negotiable for the future grid. Even the best batteries and solar panels won’t help if you can’t get power to where it’s needed when it’s needed. Regulators might need to get creative. Efforts like federal siting overrides or multi-state compacts are needed to break the logjam. The economic upside is huge: a stronger grid not only accommodates more clean energy but also reduces electricity prices and improves resilience.

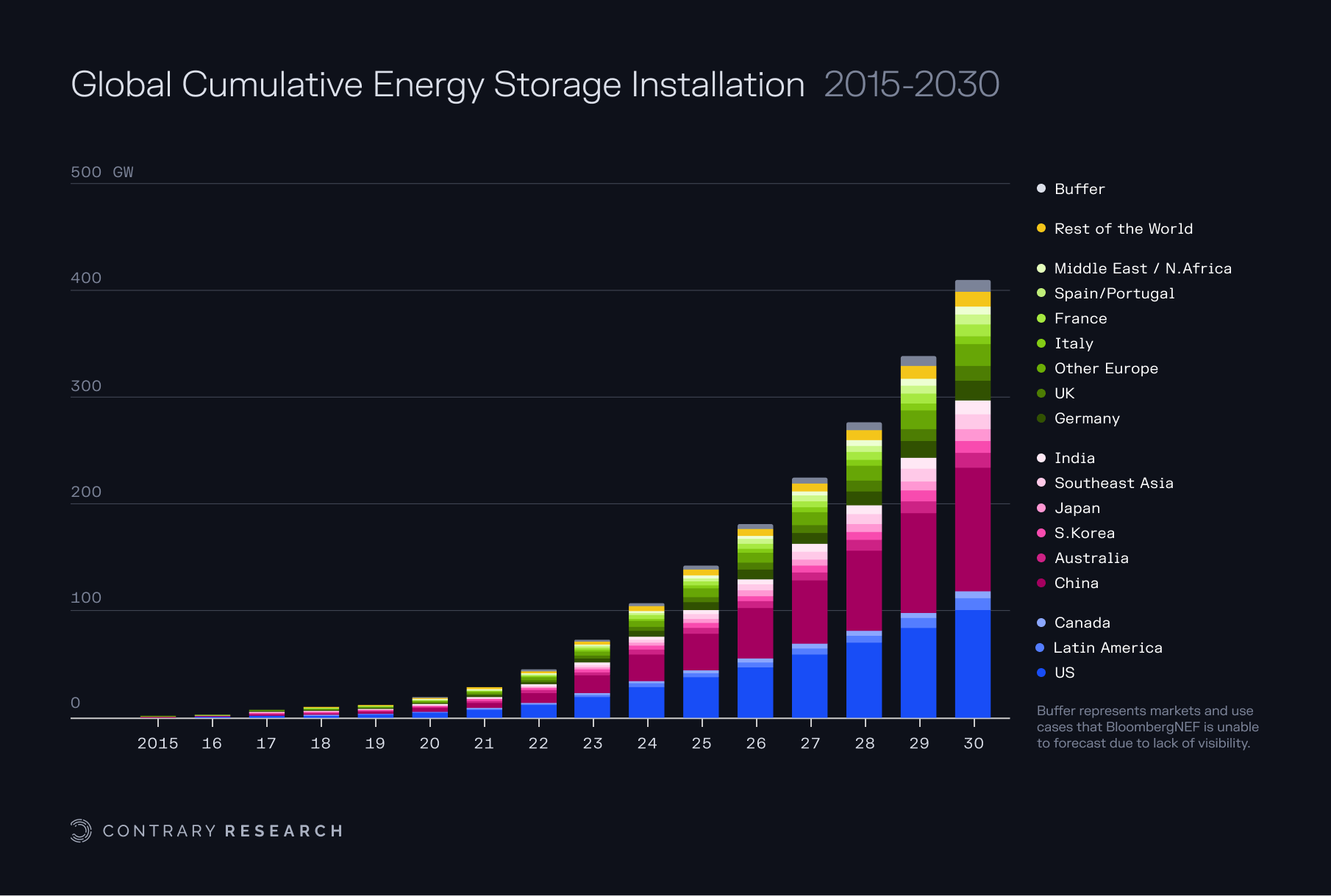

Grid-Scale Energy Storage

If transmission is the grid’s highway system, energy storage is its warehouse. Storage is what can turn renewable energy from an iffy source into a reliable one by saving excess for later. The US has actually used one form of big storage for decades: pumped hydro, which pumps water uphill when power is cheap and lets it flow down through turbines when power is needed. Historically, pumped hydro has accounted for 96% of all utility-scale storage in the country. But new technologies, particularly lithium-ion batteries, are changing the game.

In the last five years, large-scale battery installations have surged – especially in places like California, Texas, and Australia – to provide fast-reacting power and to store solar energy for use in the evening. Global grid-storage capacity is expected to grow 15x by 2030, reaching ~411 GW (from just tens of GW today). This includes not just lithium batteries, but also flow batteries, compressed air, thermal storage, and other emerging tech. For context, 411 GW is enormous – roughly the flexible output of 400 large (1 GW-class) nuclear reactors. Storage is becoming the Swiss Army knife of the grid. It can absorb surplus (preventing curtailment of renewables at midday or during windy nights), cover peaks (reducing reliance on “peaker” gas plants that run only a few hours), and provide grid stability services (frequency regulation, voltage support, spinning reserve).

For example, during California’s September 2022 heat wave, batteries provided over 3.6K MW during the critical evening peak, literally keeping the lights on as air conditioners pushed demand to record highs. One notable deployment is Tesla’s Megapack battery farms – one installation can be 100 MW/400 MWh or more, and cumulatively Megapacks exceed 31 GWh of capacity installed globally. These lithium-ion systems typically provide 2–4 hours of energy, which addresses daily fluctuations.
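The "100 MW/400 MWh" notation pairs a power rating (how fast a battery can discharge) with an energy rating (how much it holds), and dividing one by the other gives duration. A minimal sketch of that arithmetic, where the function name and the 168-hour comparison are illustrative assumptions rather than anything from a vendor specification:

```python
def duration_hours(power_mw: float, energy_mwh: float) -> float:
    """Hours a battery can hold its rated power, starting from a full charge."""
    if power_mw <= 0:
        raise ValueError("power_mw must be positive")
    return energy_mwh / power_mw


# The 100 MW / 400 MWh installation size cited above.
print(duration_hours(power_mw=100, energy_mwh=400))  # 4.0

# Energy a hypothetical 100 MW plant would need to ride through a
# week-long (168-hour) lull at full output, for comparison.
week_mwh = 100 * 168
print(week_mwh, week_mwh / 400)  # 16800 42.0
```

The gap between 4 hours and 168 hours is why duration, not just installed gigawatts, matters when comparing storage technologies.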

However, multi-day or seasonal storage remains a challenge. New chemistries, like the iron-air batteries being developed by Form Energy, aim to provide 100+ hours of storage at lower cost, albeit at lower efficiency. The economics of storage have improved dramatically: lithium-ion battery pack prices fell about 80% in the 2010s.

Source: Bloomberg

Still, scaling storage isn’t just buying batteries – you have to integrate them. One limitation is duration. Most of today’s batteries can’t sustain output beyond a few hours. That’s fine for bridging an evening peak, but not for a week-long lull in wind. Newer concepts, like gravity-based energy storage, where heavy blocks are lifted, or thermal storage, where heat is stored in molten salt or solid blocks, may someday overtake pumped hydro. There are also pilot projects for iron-air batteries, which rust and un-rust metal to store energy and promise cheap 100-hour storage, and for compressed air plants, which are being built in caverns to store energy as high-pressure air.

Regulators are starting to acknowledge the value of storage. Some states now mandate utilities to procure storage, and FERC allowed storage to play in energy markets as both load and supply. By smoothing supply swings, big storage can also mitigate the duck curve – charge when the duck’s belly is fat (noon solar surplus) and discharge when the duck’s neck rises (evening). This flattens net demand and eases the ramp rate needed from other plants.
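To make the duck-curve logic concrete, here is a rough sketch of a threshold rule: charge when net demand drops below a floor, discharge when it rises above a ceiling. The hourly profile, the battery size, and both thresholds are invented for illustration, and the sketch ignores round-trip losses and price signals that a real dispatch would account for.

```python
def flatten_net_load(net_load_gw, power_gw, energy_gwh, charge_below, discharge_above):
    """Apply a simple charge/discharge rule to an hourly net-load profile.

    Hourly steps are assumed, so 1 GW sustained for one step equals 1 GWh.
    """
    stored = 0.0  # battery starts empty
    flattened = []
    for load in net_load_gw:
        if load < charge_below:
            charge = min(power_gw, charge_below - load, energy_gwh - stored)
            stored += charge
            flattened.append(load + charge)
        elif load > discharge_above:
            discharge = min(power_gw, load - discharge_above, stored)
            stored -= discharge
            flattened.append(load - discharge)
        else:
            flattened.append(load)
    return flattened


# Hypothetical 24-hour net load (GW): midday solar "belly", evening "neck".
net_load = [22, 21, 20, 20, 21, 23, 25, 24, 20, 16, 13, 11,
            10, 11, 13, 16, 20, 25, 29, 31, 30, 28, 25, 23]

flat = flatten_net_load(net_load, power_gw=3, energy_gwh=12,
                        charge_below=15, discharge_above=26)
print(f"peak:   {max(net_load):.0f} GW -> {max(flat):.0f} GW")   # 31 GW -> 28 GW
print(f"trough: {min(net_load):.0f} GW -> {min(flat):.0f} GW")   # 10 GW -> 13 GW
```

In this toy profile the battery fills the belly by 3 GW and trims the neck by 3 GW. The ramp between them is only partly reduced, which hints at why duration and sizing matter as much as raw capacity.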

If storage becomes cheap enough, it reduces the need for some transmission expansion, since local storage can cover local peaks. But it also changes the value of generation assets. For example, with enough storage, the difference between baseload and peaking plants blurs. Safety and supply chain are considerations too. Lithium batteries, while effective, have fire risks, such as the container fires in battery installations in Arizona and Australia. There’s a push for better fire suppression and for exploring safer chemistries for large systems. Supply-wise, reliance on critical minerals like lithium, cobalt, and nickel means global supply chains need scaling and diversification. Unfortunately, the processing of those minerals is currently dominated by China.

Still, the momentum is clear. Large-scale storage is moving from nascent to indispensable. As one forecast put it, more aggressive policies in the US and Europe recently bumped storage deployment projections by an extra 13% above previous estimates – a sign that policymakers see storage as a linchpin for hitting climate targets. Companies like Fluence (a JV of AES and Siemens focused on battery storage solutions) are growing fast, and even oil & gas giants are investing in storage tech firms.

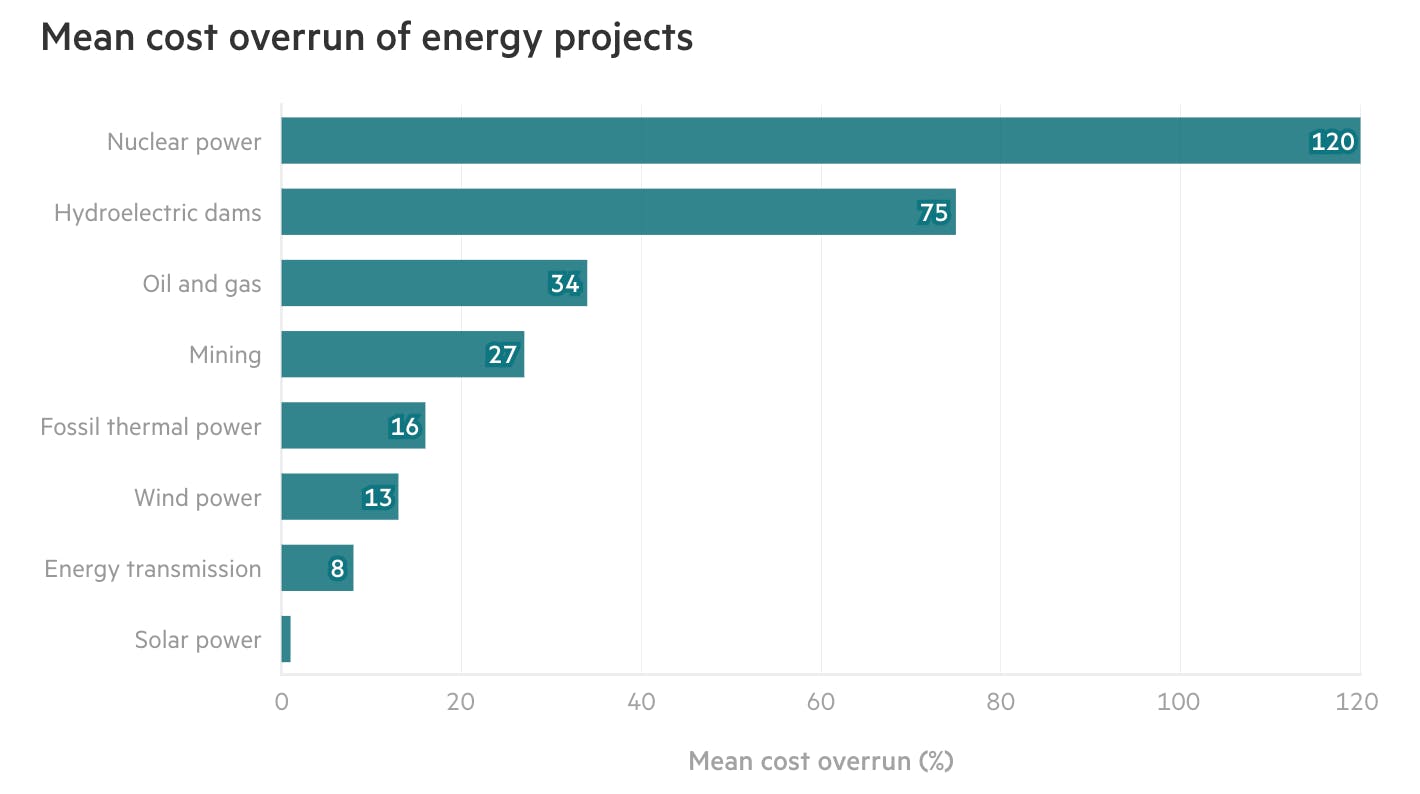

Nuclear: SMRs & Advanced Reactors

On the generation side, one wildcard for the future grid is nuclear energy, reinvented. Nuclear fission provided around 20% of US electricity as of February 2025, all from large light-water reactors built mostly in the 1970s and 1980s. They produce carbon-free, steady power, which is great for baseload. But high costs, long build times, and public wariness, after accidents like Fukushima and Three Mile Island, have stalled new projects. Just one example is the decade-late, over-budget Vogtle plant in Georgia.

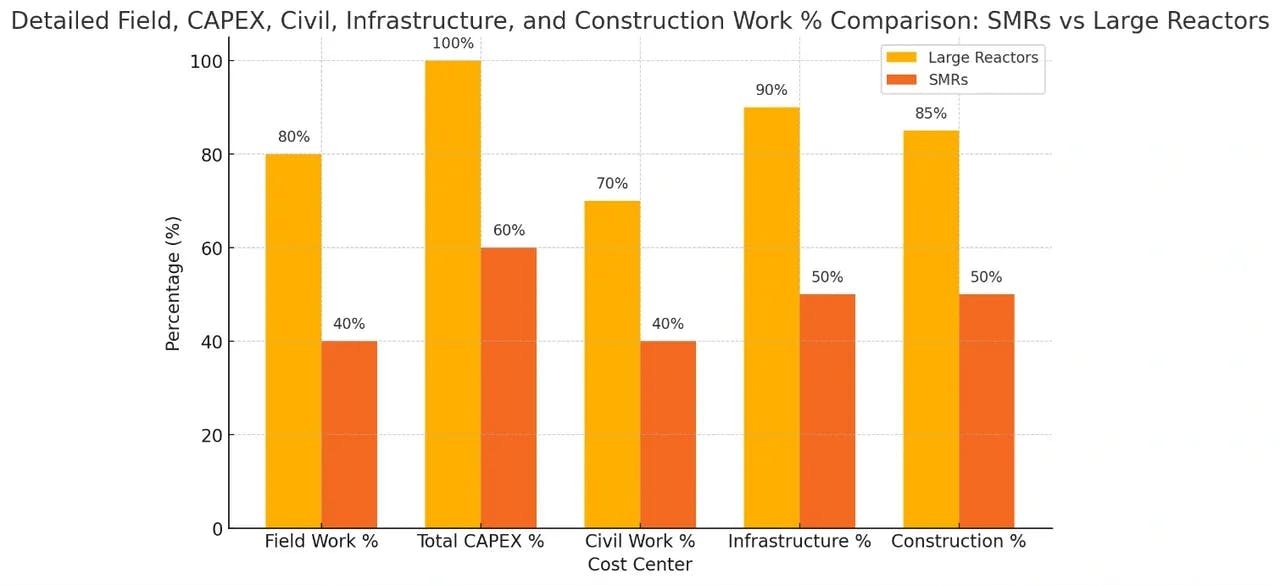

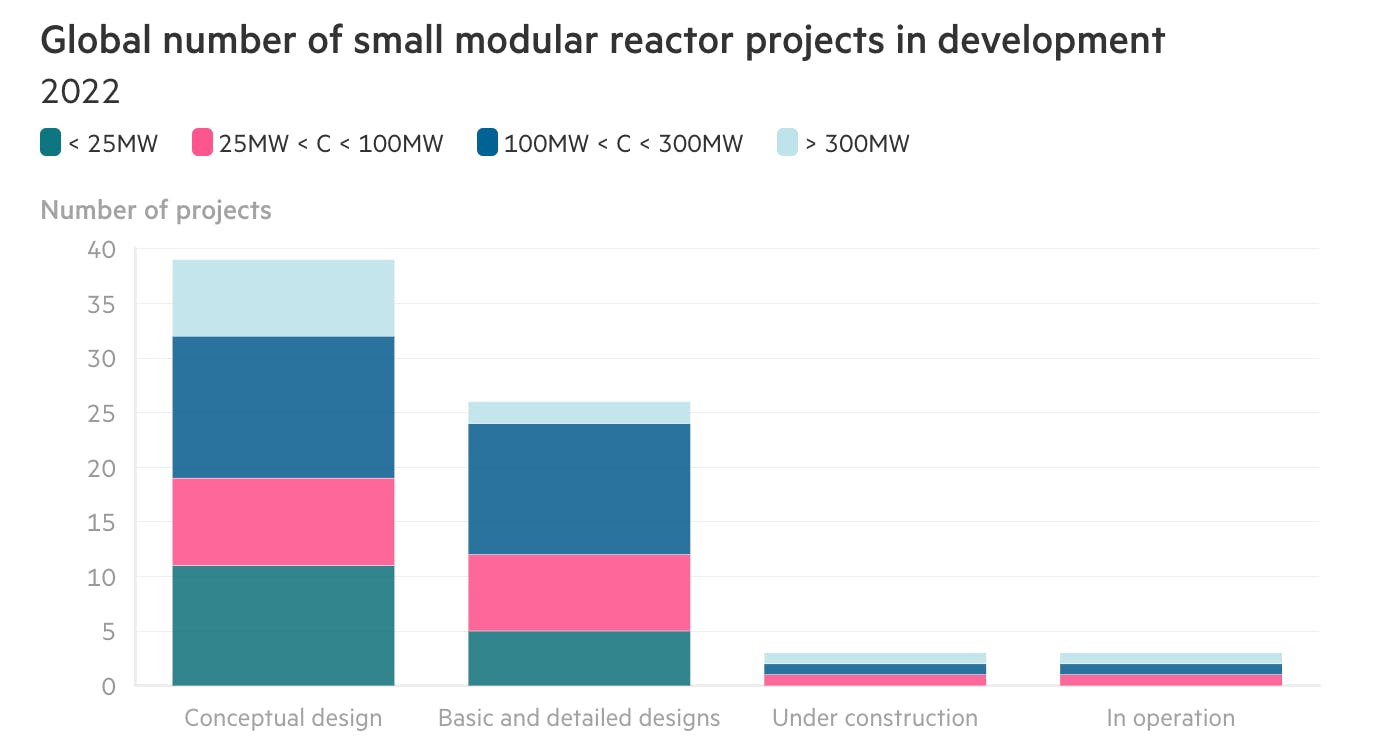

In response to these obstacles, we’ve seen the rise of Small Modular Reactors (SMRs) and advanced reactor designs. These aim to reboot nuclear power by making plants smaller, safer, and factory-produced. An SMR is basically a nuclear reactor shrunk to anywhere from 50 to 300 MW (a fraction of the 1K+ MW behemoths), designed such that components can be mass-manufactured and then assembled on-site. This modularity could drastically cut construction times and costs by using assembly-line efficiencies. Many SMRs also incorporate passive safety features: designs that can cool themselves indefinitely without external power or human intervention, using natural convection or gravity-fed coolant. This reduces the risk of meltdowns and could ease public concerns. So what can SMRs do for the grid? They could provide reliable baseload power in a smaller footprint, complementing renewables with always-on generation.

Source: Power Generation Integrated

A big selling point is flexibility in siting: SMRs could replace retiring coal plants using the same grid connection and even the same workforce, or power remote areas and industrial sites that a giant reactor would overwhelm. Some designs are even transportable by truck or rail. With electricity demand projected to rise ~35% by 2050 (thanks to EVs, electrification, and AI), advocates see SMRs as a way to add clean firm capacity without the multi-billion-dollar bets of old nuclear. NuScale Power in the US received the first NRC design approval for its 77 MW SMR and is targeting a six-pack (462 MW) SMR plant. In the UK, Rolls-Royce is developing a ~470 MW SMR design targeting deployment in the 2030s. There are also advanced concepts, such as reactors using molten salt or liquid metal cooling and high-temperature gas reactors, which promise higher efficiency or process heat for industry. Some can even load-follow (i.e., ramp output up and down daily), which traditional reactors don’t do well. That could pair nicely with variable renewables.

However, SMRs face hurdles. The first units are often still expensive because the factory-build economies only kick in after many units. Regulatory processes also remain lengthy. Even if the reactor is small, the safety review remains large. More than any other energy source, nuclear projects routinely exceed cost and time estimates, something NuScale Power has experienced firsthand.

Source: Sustainable Views

There are also the perennial issues of waste and proliferation in nuclear. Some advanced designs propose to consume spent fuel or use new fuel cycles, but these are unproven at scale. Realistically, SMRs are unlikely to contribute meaningfully in the very near term – none will likely be operating at scale before 2030. They must also compete in a market where renewables plus storage are getting cheaper and have no lingering radiation concerns. But given the need for firm, clean power, many experts argue we need to bet on all horses, including new nuclear capacity. If SMRs can achieve their cost and safety goals, they could become an important wedge in the energy mix by the 2030s, especially in regions that lack great renewable resources or want on-site generation for reliability.

Source: Sustainable Views

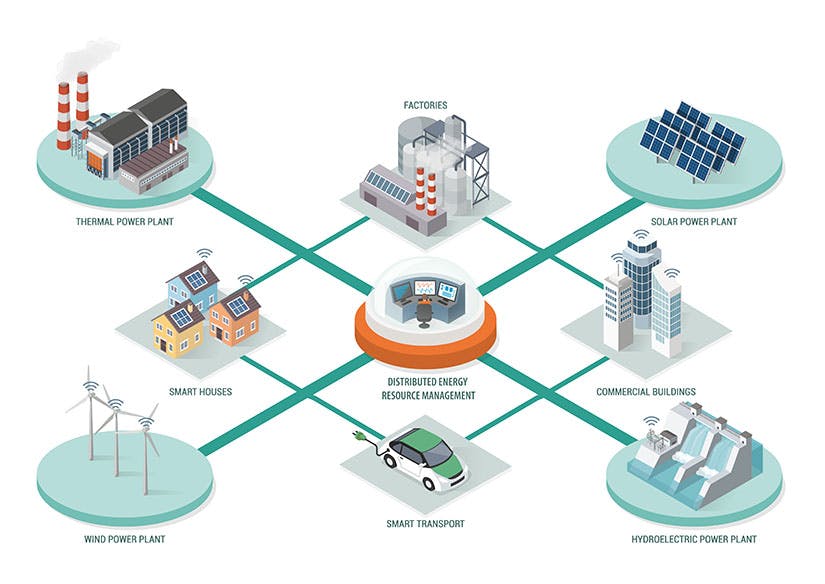

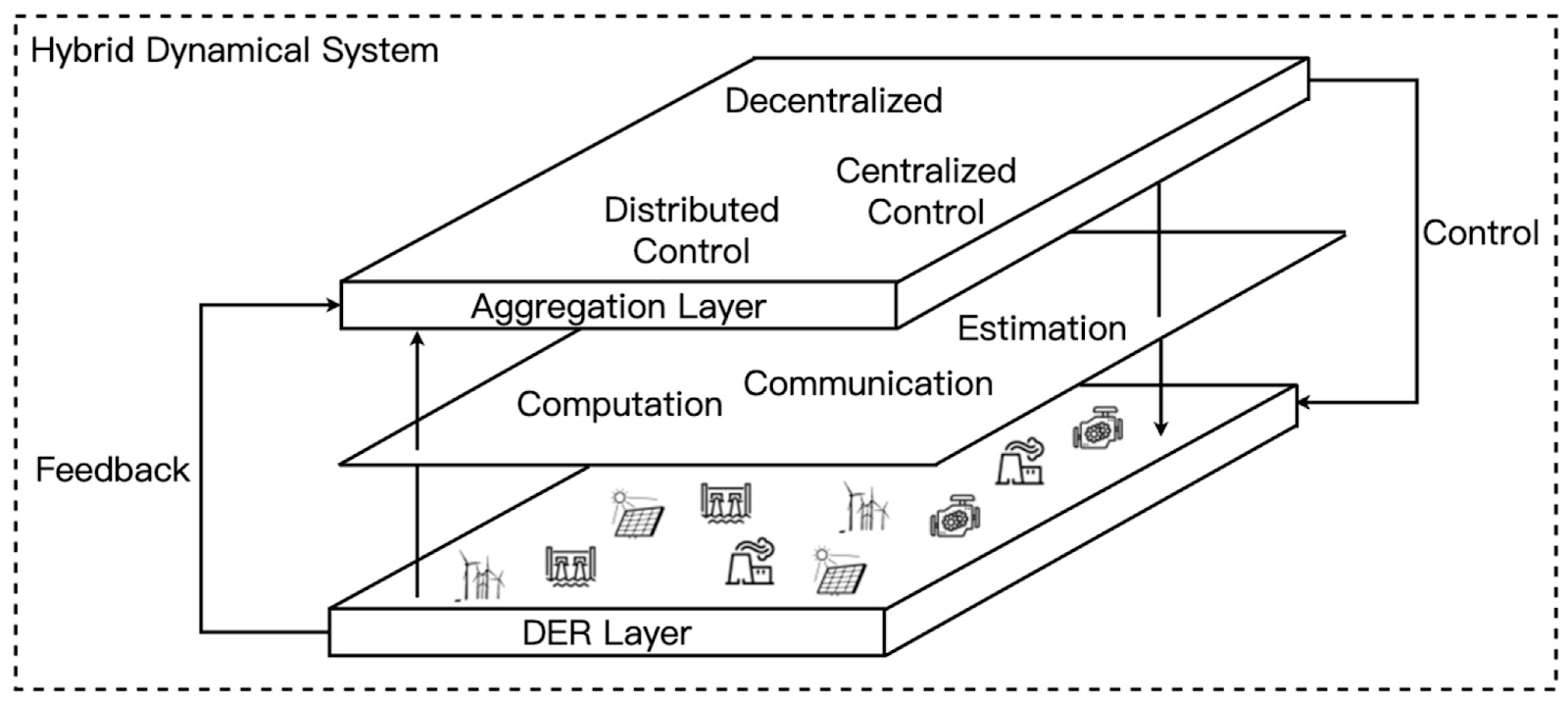

A Smarter Decentralized Grid: DERs, Virtual Power Plants, & Markets

The hardware enhancements above can certainly improve the grid’s central backbone. Equally important is making the edge of the grid smarter and more integrated. This means turning millions of distributed assets – from home solar panels to EV chargers – into coordinated resources. It also means deploying software platforms and market mechanisms that knit these pieces together in near real-time. Essentially, the grid needs a digital nervous system to manage a far more complex supply-demand equation. Key components of this emerging “software layer” include smart endpoints and appliances, Distributed Energy Resource Management Systems (DERMS) for utilities, Virtual Power Plants (VPPs) operated by aggregators, and even peer-to-peer energy marketplaces.

Smart Endpoints & Demand Flexibility

One of the cheapest forms of “new energy” is actually just choosing to not use energy at certain times. Demand response has been around for decades (think air conditioners that utilities cycle off briefly during peaks in exchange for a credit). What’s changing is the intelligence and ubiquity of control. Smart endpoints refer to the variety of internet-connected devices on the customer side that can adjust their behavior based on signals from the grid. These include smart thermostats, smart water heaters, EV charging stations, smart appliances, and building automation systems.

Individually, each of these endpoints can incrementally shift when it draws power; collectively, they can act as a huge virtual battery by flattening demand peaks. For example, an EV charger can pause for a few minutes if it sees grid frequency dip, or a smart HVAC can pre-cool a building when solar power is plentiful at noon and coast through the early evening. Unlike the blunt tools of the past that interrupted entire feeder lines, smart endpoints can be granular – responding in kilowatts here and there – and invisible to the user (your fridge doesn’t need to run its compressor this very second if it ran a minute ago, and you’d never know the difference in temperature).

Studies have shown that with smart coordination, peak demand in many systems could be cut by double-digit percentages without impacting comfort. It’s essentially shaving the duck’s neck from the demand side. The technology is largely available today. Many devices already have intelligence and connectivity. The challenge is aligning incentives and standards. Standards like Open Automated Demand Response (OpenADR) provide a common language for devices to listen for “events” (like grid stress signals). California has used automated demand response to get large commercial buildings to drop load on hot days. For EVs, developing vehicle-to-grid (V2G) standards and protocols allows not just smart charging (modulating when a car charges) but potentially feeding power back from car batteries to the grid. A Nissan Leaf or Ford F-150 Lightning can, in theory, act as a 10 kW battery on wheels for your home or grid. Some pilot programs, like those in Delaware and California, compensate EV owners for providing power at peak times.

Smart endpoints are a win-win if done right: customers save money or earn rewards for flexibility, and the grid gets relief. During the Texas grid emergencies, for instance, some smart thermostat programs lowered AC usage and collectively shed hundreds of MW – small per home, but huge in aggregate. Of course, coordination is key. You don’t want all devices responding identically, causing a rebound peak later. This is where AI and algorithmic balance come in: advanced systems can tailor the response of each device to flatten overall impact and even factor in user preferences (like home temperature or EV battery level).
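The rebound problem is easy to see in a toy model. The sketch below compares releasing every device at the same moment with spreading releases at random over a window. The device count, the 3 kW catch-up draw, and the 15-minute catch-up period are assumptions chosen for illustration, and random staggering is only one simple stand-in for the more tailored algorithms described above.

```python
import random


def resume_offsets(n_devices, window_min, seed=0):
    """Random delay (minutes after the event ends) before each device resumes."""
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    return [rng.uniform(0, window_min) for _ in range(n_devices)]


def peak_rebound_kw(offsets, catchup_kw, catchup_min):
    """Largest simultaneous catch-up load, sampled once per minute."""
    horizon = int(max(offsets) + catchup_min) + 1
    peak = 0.0
    for t in range(horizon + 1):
        active = sum(1 for o in offsets if o <= t < o + catchup_min)
        peak = max(peak, active * catchup_kw)
    return peak


n = 1000
all_at_once = peak_rebound_kw([0.0] * n, catchup_kw=3.0, catchup_min=15)
staggered = peak_rebound_kw(resume_offsets(n, window_min=60),
                            catchup_kw=3.0, catchup_min=15)
print(f"simultaneous release: {all_at_once:.0f} kW")
print(f"staggered over 60 min: {staggered:.0f} kW")
```

With a 60-minute window and a 15-minute catch-up period, only about a quarter of the devices overlap at any moment, so the rebound peak shrinks accordingly.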

Privacy and control are also considerations. Consumers need trust that their smart devices won’t be hijacked or abused. Nobody wants the utility cycling their appliances without permission unless there’s a clear benefit to them. As more and more end-use loads become connected and flexible, we move toward a paradigm of “flexibility as a resource.” The grid operator in 2030 might be managing not just power plants, but also calling upon gigawatts of “negawatts” (demand reductions) and controllable load from millions of endpoints to maintain balance. Companies like Google (with Nest), OhmConnect, and various utilities are already orchestrating such programs. Importantly, this shifts some control to consumers. You’re no longer a passive receiver of energy from the grid; you’re an active participant in it.

DERMS: The Utility’s New Control Tower

From the utility perspective, handling the influx of DERs (solar, batteries, EVs, etc.) on their networks is a massive undertaking. This has given rise to Distributed Energy Resource Management Systems (DERMS) – essentially software platforms that allow utilities to see and manage all the smaller resources on their grid.

Think of DERMS as an air traffic controller for a sky now crowded with drones in addition to airplanes. Traditionally, a utility’s control room oversaw big power plants and major substations, balancing supply and demand in a fairly top-down way. Now, with thousands of solar rooftops, EV chargers, and batteries popping up, a utility needs visibility into what’s happening at the edges: Is a neighborhood’s solar output surging and backfeeding into the main line? Are a cluster of EV chargers about to create a spike on a local transformer?

DERMS can aggregate data from smart inverters, smart chargers, and other edge devices to provide unified situational awareness. More than that, it enables sending control signals. For example, it might send an instruction to 500 rooftop solar inverters to dial back by 20% because a cloud just moved and the grid doesn’t need the sudden surge when the sun returns. Or it could command a set of home batteries to discharge during a peak to reduce strain.
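As a rough illustration of that aggregate-then-command loop, the sketch below models the 500-inverter example. The `Inverter` class, the 4 kW per-device output, and the command format are all hypothetical. A real DERMS would speak a device protocol and would have to handle devices that fail to respond.

```python
from dataclasses import dataclass


@dataclass
class Inverter:
    device_id: str
    output_kw: float  # latest reported output (telemetry)


def fleet_output_kw(fleet):
    """Aggregate view: total output across all reporting inverters."""
    return sum(inv.output_kw for inv in fleet)


def curtailment_commands(fleet, reduction_pct):
    """Build one (device_id, new_limit_kw) setpoint per inverter."""
    if not 0 <= reduction_pct <= 100:
        raise ValueError("reduction_pct must be between 0 and 100")
    keep = 1 - reduction_pct / 100
    return [(inv.device_id, inv.output_kw * keep) for inv in fleet]


def apply_commands(fleet, commands):
    """Simulate every device obeying its setpoint (an idealization)."""
    limits = dict(commands)
    for inv in fleet:
        inv.output_kw = min(inv.output_kw, limits[inv.device_id])


# 500 rooftop inverters, each assumed to be producing 4 kW.
fleet = [Inverter(f"inv-{i}", 4.0) for i in range(500)]
before = fleet_output_kw(fleet)
apply_commands(fleet, curtailment_commands(fleet, reduction_pct=20))
after = fleet_output_kw(fleet)
print(f"{before:.0f} kW -> {after:.0f} kW")  # 2000 kW -> 1600 kW
```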

In a sense, DERMS lets a utility treat a collection of DERs as if it were a power plant it can dispatch – except instead of turning a knob on one big generator, it’s coordinating many small ones. This is essential for high DER grids: without it, utilities either have to overbuild wires and transformers to handle any possible injection (which is costly), or cap the DERs (which frustrates customers and wastes potential). For example, Hawaii, which has very high rooftop solar adoption, implemented DERMS on some islands to remotely adjust solar output to maintain voltage within limits.

One way to view DERMS is as the counterpart to Virtual Power Plants (VPPs) – but on the utility side of the meter, focusing on grid reliability rather than market transactions. DERMS rollouts come with challenges, chief among them integrating many device types from different vendors when interoperability issues abound, as early DERMS projects have learned. Many legacy DERs weren’t built with remote control in mind, though newer interconnection requirements, which reference standards like IEEE 2030.5, now call for “smart” functionalities. Voltra, the company being built by two of the co-authors of this piece, is aiming to be the connective tissue: a software platform bridging edge devices with utility and market operations. In essence, the goal is a common software layer for the grid akin to what Android or iOS did for smartphones – an ecosystem where apps (services) can interface with hardware seamlessly.

Cybersecurity is another critical piece of the puzzle. A compromised DERMS could theoretically shut off or turn on thousands of devices, causing havoc. Another challenge is customer consent and participation – a utility might have the tech to curtail your rooftop solar briefly, but do they have the right (and your permission) to do so? Such programs typically need customers to opt-in, often in exchange for incentives or special rates. Utilities like Duke Energy have started deploying DERMS in pilot projects, and vendors like Schneider Electric (which acquired AutoGrid, a DERMS/VPP software company) and GE (with its new GridOS platform, integrating Opus One solutions) are marketing “grid operating systems” that promise to handle DER orchestration.

In the bigger picture, DERMS is part of a shift for utilities from “just keep the lights on” to actively managing a dynamic network. It’s as much an organizational and mindset shift as a technical one, often requiring new training and even new regulatory treatment.

Virtual Power Plants (VPPs)

While DERMS help utilities manage DERs for grid stability, Virtual Power Plants leverage them for market value. A VPP is created by an aggregator that pools many customer-sited assets to act like a single power plant in energy markets. For instance, imagine 10K home batteries, 1K smart commercial HVAC systems, and 500 EV chargers being collectively controlled so that together they can reduce load (or inject power) by, say, 50 MW when needed. That 50 MW can be offered into the wholesale market for energy or ancillary services just like a conventional generator would. The concept unlocks the value of scale – a single homeowner’s 5 kW battery is negligible to the grid, but an orchestrated fleet is impactful.

Source: MDPI
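A back-of-the-envelope sketch shows how a fleet like the one above could add up to 50 MW and how a smaller market commitment might be split across it. The per-asset flexibility figures (3 kW, 15 kW, 10 kW) are assumptions picked so the total matches the 50 MW in the example, and pro-rata allocation is just one simple dispatch rule, not how any particular aggregator works.

```python
# Asset counts come from the example above; the kW each asset can flex
# is a hypothetical figure chosen so the fleet totals 50 MW.
FLEET = {
    "home_battery": (10_000, 3.0),
    "commercial_hvac": (1_000, 15.0),
    "ev_charger": (500, 10.0),
}


def capacity_mw(fleet):
    """Flexible capacity per asset class, in MW."""
    return {name: count * kw / 1000 for name, (count, kw) in fleet.items()}


def allocate_dispatch(fleet, target_mw):
    """Split a dispatch target across classes in proportion to capacity."""
    caps = capacity_mw(fleet)
    total = sum(caps.values())
    if target_mw > total:
        raise ValueError("target exceeds aggregated capacity")
    return {name: target_mw * cap / total for name, cap in caps.items()}


print(sum(capacity_mw(FLEET).values()))  # 50.0
print(allocate_dispatch(FLEET, target_mw=40))
# {'home_battery': 24.0, 'commercial_hvac': 12.0, 'ev_charger': 4.0}
```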

VPPs can deliver various services: peak shaving, frequency regulation (rapid adjustments to keep grid frequency stable), or emergency capacity during grid shortages. They effectively monetize flexibility. For participants (homes or businesses), a VPP is a way to earn money or credits from assets that otherwise just sit there. For the grid, it’s a source of on-demand capacity that can be cleaner and cheaper than firing up a peaker plant. In California, studies indicate that 7.5 GW of VPP capacity could be cost-effectively deployed by 2030 – roughly 15% of the state’s peak load – which could displace several gas plants.

Real-world examples are proliferating: Next Kraftwerke in Europe operates one of the largest VPPs, with 13K units (biogas generators, industrial loads, batteries) providing over 10 GW of flexible capacity. In Australia, Tesla has been aggregating its Powerwall home batteries – a Tesla-run VPP in South Australia plans to connect 50K homes (so far a few thousand are online, contributing ~20 MW in trials). In the summer of 2022, Tesla’s VPP in California, run in partnership with utility PG&E, enabled 2.5K homes to feed power from their Powerwalls during a heat wave, contributing 16.5 MW and paying each homeowner $2 per kWh for the help. This demonstrated how a coordinated fleet can be an emergency resource – essentially a citizen-powered peaker plant.

Utilities are jumping in too: not just Duke Energy, but also AES (an energy company), which uses Fluence, its joint venture with Siemens, to aggregate customer batteries in programs. The keys to a VPP are a robust software platform (often cloud-based) that can handle communication, forecasting, and dispatch, and a regulatory framework that lets aggregated DERs play in markets. FERC Order 2222 is meant to enable this in the US by removing barriers for aggregators to bid into wholesale markets. Internationally, some countries are ahead – Germany and Australia have relatively high VPP participation due to favorable policies and high energy prices that incentivize customers.

This approach isn’t without its challenges. Forecasting the availability of hundreds of independent devices is tricky. What if a homeowner opts out at a critical time, or a large number of EVs are unexpectedly unplugged? VPP operators mitigate this with over-recruitment and statistical forecasts (much like an insurance model). Communication failures are another risk. You need reliable connectivity (internet/cellular) to each site; a telecom outage could reduce VPP effectiveness. There’s also a trust factor. Will customers stay engaged for the long run? Attrition can undermine a VPP if not enough new sign-ups replace those who leave or lose interest. Nonetheless, the upside is enticing: VPPs blur the line between consumers and producers, potentially turning an entire distribution network into a flexible extension of the generation fleet.
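The over-recruitment idea can be sketched with a little statistics. Assuming each device is independently available with some probability (a strong assumption, since a telecom outage would knock out many devices at once), a normal approximation to the binomial gives the number of enrollments needed to deliver a target with roughly 95% confidence. The 80% availability and 5 kW per device are illustrative numbers only.

```python
import math


def firm_kw(n_devices, kw_each, p_available, z=1.645):
    """Capacity expected to be exceeded ~95% of the time (one-sided, z=1.645)."""
    mean = n_devices * p_available
    std = math.sqrt(n_devices * p_available * (1 - p_available))
    return kw_each * (mean - z * std)


def devices_to_enroll(target_kw, kw_each, p_available, z=1.645):
    """Smallest enrollment whose firm capacity meets the target."""
    if not 0 < p_available <= 1:
        raise ValueError("p_available must be in (0, 1]")
    n = math.ceil(target_kw / kw_each)  # floor: every device shows up
    while firm_kw(n, kw_each, p_available, z) < target_kw:
        n += 1
    return n


# 50 MW target from 5 kW devices that respond 80% of the time (assumed).
needed = devices_to_enroll(target_kw=50_000, kw_each=5, p_available=0.8)
print(needed, f"{needed / 10_000 - 1:.0%} over-recruitment")
```

Under these assumptions, most of the extra enrollment covers the average no-show rate, and only a small slice covers statistical variation. Correlated failures would widen that second slice considerably.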

Peer-to-Peer Energy Markets & Decentralized Trading

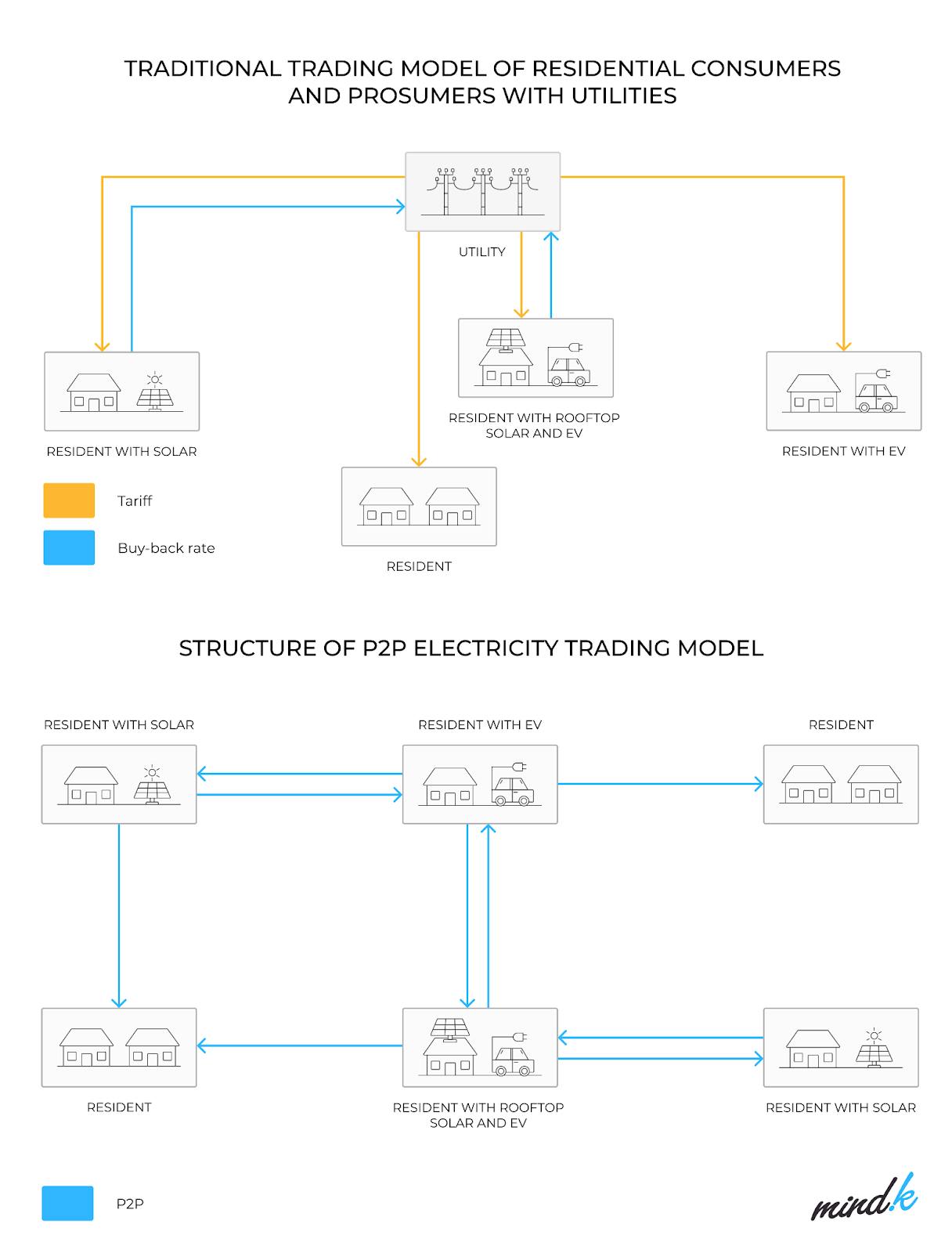

Pushing the decentralization theme even further, some envision a future where energy trading happens at the community or even peer level. Instead of solely relying on top-down utility tariffs, your solar panels could directly sell excess power to your neighbor’s battery or your EV could trade with the local grocery store’s fridge in response to price signals. These energy trading platforms create local marketplaces, often leveraging blockchain or other distributed ledger technology to handle the myriad small transactions securely.

The idea is analogous to Airbnb or Uber, but for electrons: people with surplus can sell to those with need, possibly mediated by smart contracts. For instance, in a neighborhood microgrid, households with solar might auction any stored energy in their home batteries each evening to others who didn’t generate enough, at a price lower than the utility’s but higher than zero – everyone potentially benefits.
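A stripped-down version of that neighborhood exchange might look like the sketch below. It is not a true auction: it simply matches surplus to need in list order and prices every trade at the midpoint between a hypothetical utility retail rate and a hypothetical export rate, which is one easy way to land "lower than the utility's but higher than zero." Household names, quantities, and rates are invented.

```python
def clear_local_market(offers_kwh, requests_kwh, retail_price, export_price):
    """Match sellers to buyers in order, at a single midpoint price."""
    price = (retail_price + export_price) / 2
    sellers = [[name, kwh] for name, kwh in offers_kwh.items()]
    buyers = [[name, kwh] for name, kwh in requests_kwh.items()]
    trades = []
    i = j = 0
    while i < len(sellers) and j < len(buyers):
        qty = min(sellers[i][1], buyers[j][1])
        if qty > 0:
            trades.append((sellers[i][0], buyers[j][0], qty, price))
        sellers[i][1] -= qty
        buyers[j][1] -= qty
        if sellers[i][1] == 0:
            i += 1  # this seller has nothing left to sell
        if j < len(buyers) and buyers[j][1] == 0:
            j += 1  # this buyer is fully served
    return trades


trades = clear_local_market(
    offers_kwh={"house_a": 4.0, "house_b": 2.0},
    requests_kwh={"house_c": 3.0, "house_d": 5.0},
    retail_price=0.30,   # $/kWh the utility would charge (assumed)
    export_price=0.10,   # $/kWh the utility would pay for exports (assumed)
)
for seller, buyer, kwh, price in trades:
    print(f"{seller} -> {buyer}: {kwh} kWh at ${price:.2f}/kWh")
```

Here 6 kWh changes hands locally, and house_d still buys its remaining 2 kWh from the utility. Sellers earn more than the export rate and buyers pay less than retail, which is the "everyone potentially benefits" case.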

Projects like the Brooklyn Microgrid, one of the early peer-to-peer pilots, allowed prosumers to trade solar energy via a blockchain system. In Australia, Power Ledger, a blockchain energy company, ran trials where tenants in an apartment with solar shared energy credits amongst themselves. The benefits touted include empowering consumers, encouraging local energy use (reducing strain on the main grid), and valuing DER investments more granularly. If your rooftop solar can earn a premium from your neighbor, you’re more likely to install it.

Source: Mindk

On a larger scale, decentralized markets could also allow flexibility trading – e.g., businesses offering to reduce load for a fee, with automated clearing of those offers at the feeder or community level. The technical enabler is usually advanced software to handle real-time measurement and verification and a trusted platform for settlement. Blockchain can provide an immutable record of transactions and automate execution (smart contracts pay out when certain conditions are met), though it’s not strictly necessary – one could use a centralized ledger too. So far, real-world implementation is limited and mostly experimental.

One issue is regulation. In many places, peer-to-peer energy sales are not clearly allowed under existing laws, which often mandate that only a licensed utility or market can sell power. Another is volume – a local market needs a critical mass of participants to be liquid and useful. If only 5% of eligible customers join, it may fizzle. There’s also a question of tariffs and fairness: if people trade locally and avoid utility delivery charges, are they undermining maintenance of the wider grid? Some models suggest a small transaction fee or grid usage charge could be applied to P2P trades to ensure infrastructure costs are covered. And while blockchain adds transparency, it also introduces complexity. Early trials have run into issues with transaction speed and the overhead energy use of some blockchain processes (it’s ironic if your energy trading platform consumes a lot of energy itself).

Nonetheless, as DER penetration grows, the concept of locational energy value is gaining traction. Energy is sometimes worth more in one place than another due to grid constraints. Local trading could capitalize on that. Imagine a future “app store” for energy, where you can subscribe to your neighbor’s rooftop solar for a fee instead of installing your own, or a community solar farm issues tokens that residents trade amongst each other. It’s a bit radical, but not impossible. Even without full peer-to-peer autonomy, more localized markets are emerging. For example, Texas’s ERCOT now has products and aggregators enabling homes with batteries to get wholesale prices in near-real time (effectively letting them arbitrage home vs grid energy). The next step might be those homes trading with each other in a neighborhood before ever interacting with the bulk grid.

EV Charging Infrastructure & Management Systems

Transportation electrification is another major shift in the future grid. Building out EV charging infrastructure isn’t just about sticking chargers everywhere; it’s about integrating a whole new category of flexible demand and even energy storage into the system (if you consider vehicle-to-grid). Today, EV adoption is climbing fast – the U.S. saw EV sales hit ~6% of new cars in 2022 and 10.6% in 2024 – and with federal support (like the goal for 500K public chargers nationwide by 2030), infrastructure is racing to catch up.

One key to this is deploying smart, networked charging rather than just dumb outlets. Most EV charging stations are connected to the cloud via management software known as Charge Point Management Systems (CPMS). A CPMS allows the operator, whether a charging network like ChargePoint, or a utility, or site host like a parking garage, to monitor and control chargers, start/stop sessions, manage pricing, handle reservations, and crucially balance loads to avoid blowing circuits. For example, if you have 10 chargers on a site with a 200 kW service limit, the CPMS can ensure the total draw stays under 200 kW by throttling charging speeds when too many cars plug in at once. This is vital to avoid costly grid upgrades and to smoothly integrate EVs.
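The load-balancing rule in that example fits in a few lines. This sketch scales every charger down proportionally when total requests exceed the site limit. The function name and the 50 kW per-car request are assumptions, and commercial systems may instead prioritize by arrival time, state of charge, or price tier.

```python
def throttle(requests_kw, site_limit_kw):
    """Scale charger setpoints so the site total never exceeds its limit."""
    total = sum(requests_kw)
    if total <= site_limit_kw:
        return list(requests_kw)  # enough headroom: grant every request
    return [kw * site_limit_kw / total for kw in requests_kw]


# Four cars asking for 50 kW each fit under a 200 kW service limit.
print(throttle([50] * 4, site_limit_kw=200))   # [50, 50, 50, 50]

# Ten cars would need 500 kW, so each is cut back to 20 kW.
print(throttle([50] * 10, site_limit_kw=200))
```

Proportional scaling keeps the rule simple and treats every session alike, at the cost of slowing all drivers equally rather than letting the nearly-full cars yield to the nearly-empty ones.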

Smart charging can also respond to grid signals. Utility integration means charging can slow down during system peaks or even feed power back (for V2G-enabled vehicles) during emergencies. By 2030, EVs could collectively offer tens of gigawatt-hours of storage – a meaningful resource for grid services if tapped. Several leading companies are building out this ecosystem: ChargePoint operates one of the largest networks with over 274K chargers under management, offering cloud software that serves not only its own stations but also clients who deploy their own chargers. EVConnect, now part of Shell, provides a software platform for site hosts to manage any OCPP-compliant chargers, boasting high uptime through proactive maintenance alerts. Driivz is another, used by utilities in Europe to do things like manage neighborhood charging to stay within transformer limits.

The importance of uptime and reliability can’t be overstated – one study in California found about 1 in 4 public chargers was non-functional at any given time due to hardware issues or network glitches. A smart management layer helps detect and fix those issues faster, and route drivers to working stations. In many ways, EV infrastructure is creating a new interface between the transportation and electricity sectors – requiring coordination between automakers, charging providers, utilities, and software developers. Standards like Open Charge Point Protocol (OCPP) help by ensuring different brands of chargers can all talk to a central system, akin to how the internet has common protocols.
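To illustrate how a common protocol helps with uptime, here is a minimal sketch of a management layer tracking which connectors are out of service. It assumes OCPP 1.6 JSON framing, where a request is an array of the form `[2, uniqueId, action, payload]` and a `StatusNotification` payload carries `connectorId`, `errorCode`, and `status`. The charger IDs and the `down_connectors` helper are hypothetical, and a real system would also handle transport, authentication, and chargers that go silent.

```python
import json

CALL = 2  # OCPP-J message type id for a request sent by the charger


def down_connectors(frames):
    """Return the set of (charger_id, connector_id) currently out of service.

    `frames` is an iterable of (charger_id, raw_json_text) pairs in arrival
    order. Only StatusNotification requests are considered; later messages
    for the same connector override earlier ones.
    """
    down = set()
    for charger_id, raw in frames:
        msg = json.loads(raw)
        if msg[0] != CALL or msg[2] != "StatusNotification":
            continue
        payload = msg[3]
        key = (charger_id, payload["connectorId"])
        if payload["status"] in ("Faulted", "Unavailable"):
            down.add(key)
        else:
            down.discard(key)
    return down


def status_frame(uid, connector_id, status, error_code="NoError"):
    """Build a StatusNotification request frame (test helper, hypothetical ids)."""
    payload = {"connectorId": connector_id, "errorCode": error_code, "status": status}
    return json.dumps([CALL, uid, "StatusNotification", payload])


frames = [
    ("CP-1", status_frame("a1", 1, "Available")),
    ("CP-2", status_frame("b1", 1, "Faulted", "OtherError")),
    ("CP-1", json.dumps([CALL, "a2", "Heartbeat", {}])),
    ("CP-3", status_frame("c1", 2, "Unavailable")),
    ("CP-3", status_frame("c2", 2, "Available")),  # connector recovered
]
print(down_connectors(frames))
```

Because every compliant charger reports status in the same shape, the same few lines work across brands, and a driver-facing app can be steered away from the flagged connectors.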

Regulators are also adjusting: utility commissions are approving “make-ready” programs where utilities build grid connections for chargers, and are considering special EV rates that encourage charging at off-peak times. Some offer super-low overnight rates or even free charging during solar noon. Smart charging effectively turns EVs into a flexible load rather than a fixed one. Many new EVs and chargers are capable of bidirectional charging (V2G), which can make EVs into mini power plants when parked. If aggregated, thousands of EVs could function as a massive VPP – although using personal car batteries for the grid raises questions about battery wear-and-tear and customer acceptance. People may not want to share their car’s energy if it might reduce range or battery life without sufficient compensation. Still, fleet vehicles (like electric school buses that sit idle most of the day) are prime candidates for V2G – several school districts are already earning revenue by feeding energy from bus batteries to the grid when prices are high, since the buses are parked in depots in the afternoon.
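The effect of off-peak EV rates can be sketched as a simple scheduling problem: given hourly prices while the car is plugged in, charge during the cheapest hours that still deliver the needed energy. The prices, the 7 kW charger rating, and the `cheapest_hours` helper below are all illustrative assumptions, not figures from any actual tariff.

```python
import math


def cheapest_hours(prices, energy_kwh, charger_kw):
    """Pick the lowest-priced hours needed to deliver `energy_kwh`.

    `prices` is a list of per-kWh prices, one per hour the car is plugged in.
    Returns the chosen hour indices in chronological order. Ties are broken
    in favor of the earlier hour.
    """
    hours_needed = math.ceil(energy_kwh / charger_kw)
    ranked = sorted(range(len(prices)), key=lambda h: (prices[h], h))
    return sorted(ranked[:hours_needed])


# Hypothetical overnight window: expensive evening, cheap small hours.
prices = [0.30, 0.10, 0.08, 0.08, 0.25]
print(cheapest_hours(prices, energy_kwh=20, charger_kw=7))  # → [1, 2, 3]
```

The same ranking idea extends to V2G: the most expensive hours become candidates for discharging, subject to whatever battery-wear and range limits the owner sets.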

Strategically, building enough public charging is also critical to avoid range anxiety and enable EV adoption in all segments. This means high-power DC fast chargers along highways (which can draw up to 350 kW each – a heavy load that might require local storage as a buffer to avoid stressing the grid when several operate at once) and ubiquitous Level 2s in cities and parking lots. There’s a network effect: as charging sites proliferate and integrate with grid management, EVs transform from a grid stressor to a grid asset. Regulators and companies that realize this are crafting policies to encourage daytime workplace charging (to soak up midday solar) and managed charging programs for homes. In Europe, some countries are mandating that all new chargers be smart and capable of load control. The U.S. is moving in that direction via incentives.

In short, electrified transport is not just about cleaner cars – it’s about a more flexible, interactive grid where millions of mobile batteries play a part in balancing supply and demand. Companies like Voltra see this as the first stepping stone towards building the software layer of the decentralized energy grid. The company’s initial product focuses on open APIs for charging management, effectively bridging EV stations with energy software systems. This kind of integration is crucial so that, say, a building energy management system and an EV charging system can coordinate (e.g. turning down building HVAC slightly when a bunch of EVs start charging, to avoid demand spikes). As EVs go mainstream, expect the charging network to become tightly woven into the grid’s operation, with utilities perhaps treating large charging hubs as part of their resource mix. Imagine “demand dispatch” – the utility might delay charging a thousand cars for 15 minutes to ride through a peak. It’s a far cry from the passive “fill ’er up” gas stations of yesterday.
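The building-and-charger coordination described above can be sketched as a small decision rule. This is an illustration under stated assumptions, not a description of Voltra's product or any vendor's API: the function name, the idea of a fixed site demand limit, and the cap on how far HVAC may be turned down are all hypothetical.

```python
def coordinate(building_kw, ev_kw, demand_limit_kw, max_hvac_setback_kw):
    """Decide how to shave a demand spike when EVs start charging.

    First trim HVAC, up to an allowed comfort-preserving setback; only if
    that is not enough, curtail EV charging by the remainder.
    Returns (hvac_setback_kw, ev_curtail_kw).
    """
    excess = max(building_kw + ev_kw - demand_limit_kw, 0.0)
    hvac_setback = min(excess, max_hvac_setback_kw)
    ev_curtail = min(excess - hvac_setback, ev_kw)
    return hvac_setback, ev_curtail


# 300 kW building + 150 kW of charging against a 400 kW limit:
# HVAC gives up its 30 kW of flexibility, charging is trimmed by 20 kW.
print(coordinate(300, 150, 400, 30))
```

The ordering here (HVAC first, then vehicles) is a design choice. A site that values charging speed less than comfort could simply reverse it.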

Building The Energy Internet

The decentralized energy grid is in the midst of a revolution. The US grid’s next chapter will not be written by any single author but co-written by utility engineers, software developers, regulators, entrepreneurs, and even everyday energy “prosumers.” It’s a narrative moving from the centralized hub-and-spoke past to a decentralized, digital, and interactive future. The energy internet. The transition won’t be without friction – technological, economic, and political. But as we’ve seen, the pressure to change is coming from all sides: aging infrastructure failing under new stresses, customers demanding cleaner energy and more control, new technologies offering better ways to manage power, and competitors from tech and automotive jumping into the fray. The winners in this new era will be those who can blend deep domain expertise in power systems with the agility of software and the customer-centric mindset of modern tech companies.

It’s a tall order, but many are rising to the challenge. And as with any revolution, there’s a sense of urgency: the grid must evolve, or it will be overwhelmed. The encouraging news is that solutions are emerging daily – the grid is becoming smarter, stronger, and more distributed year after year. If Edison or Insull could see the grid now – with rooftop solar, battery farms, and cars providing energy – they might be astonished. Yet it’s a natural evolution of their dream: making electricity ubiquitous and harnessing every innovation to serve society’s needs. In that sense, the energy revolution is less a sudden coup and more an accelerated maturation – an old giant of infrastructure learning new tricks to thrive in a new world.

The pieces are actively falling into place. The task now is to connect them – literally, through wires and wireless signals – into a coherent, adaptive whole. The coming decade will be decisive. With the right moves, by 2035 we could have an electric grid that is a marvel of flexibility and reliability, underpinning a prosperous, low-carbon economy. That is the prize of the energy revolution, and it’s one worth fighting for. The grid made the 20th century; re-making the grid will define the 21st.

*Contrary is an investor in Voltra through one or more affiliates.