Thesis

The release of foundation models from providers like OpenAI and Google has made building AI products much easier. Prior to the availability of large language models (LLMs), companies needed to hire specialized machine learning engineers, collect vast amounts of data, and train proprietary models. With LLMs, development teams can simply make an API call to ready-to-use models and follow the steps of selecting a suitable foundation model, adding context or fine-tuning it, and employing prompt engineering.

Still, this approach presents its own challenges. These include choosing the right model to balance cost and capabilities and integrating appropriate context via retrieval augmented generation (RAG) to mitigate hallucinations, where the model fabricates answers. Another complexity lies in identifying effective prompts, as different models, such as GPT-4 or LLaMA 2, may produce divergent outputs as a result of differences in training methods. Additionally, teams must address continuity: some models eventually become deprecated, so previously built infrastructure needs to be adapted to newer versions.

Addressing these issues is essential because AI holds significant promise for both societal and economic gains. The National Bureau of Economic Research suggests that AI could generate savings of 5-10% in U.S. healthcare, which translates to over $200 billion in annual cost reductions. McKinsey estimates AI could boost global corporate profits by over $2.6 trillion each year. AI agents, or autonomous intelligent systems performing specific tasks without human intervention, currently support complex workflows across industries, particularly those requiring qualitative and quantitative analysis. The market for AI agents is forecast to grow from $5.1 billion in 2024 to $47.1 billion by 2030, a CAGR of 44.8%.

To capture these productivity gains, organizations need to be able to orchestrate the AI models best suited to their businesses. That’s where LangChain comes in. LangChain is an open-source orchestration framework for developing AI applications with LLMs. It offers tools for developers to orchestrate components, build flexible control flows, and gain visibility into the development cycle. Overall, LangChain’s tools and APIs simplify the process of building LLM-driven applications like chatbots and virtual agents.

Founding Story

LangChain was founded by Harrison Chase (CEO) and Ankush Gola (co-founder) in 2023.

Chase developed a passion for data-driven insights when he was young. Growing up, he played soccer and was a fan of the NBA and NFL, and when he arrived at Harvard University—where he received his bachelor’s degree in 2017—his interest in sports led him to join the Sports Analytics Collective. In the club, he learned basic statistics, data science, and machine learning techniques to predict NFL season outcomes.

After graduating, Chase worked as a Machine Learning Engineer at Kensho Technologies from July 2017 to December 2019 and then joined Robust Intelligence in October 2019. At Robust Intelligence, he focused on testing and validating machine learning models until the end of 2022. During his time at Robust Intelligence, he created a bot capable of querying internal data from Notion and Slack at a company hackathon. This project evolved into Notion QA, an open-source tool enabling natural language queries on internal Notion databases.

Around September 2022, Chase was planning to leave Robust Intelligence. A series of events and meetups he attended exposed him to common challenges developers faced while working with generative language models. He released his own side project in October 2022, aiming to solve these challenges, and that prototype laid the foundations for LangChain. In November 2022, ChatGPT launched, attracting the first wave of developers to LangChain. Its GitHub stars more than tripled from 5K in February 2023 to 18K by April 2023. By December 2024, LangChain had accrued over 96K stars on GitHub and 28 million monthly downloads.

Given the project’s rapid growth, Chase officially incorporated LangChain and brought Gola on board. Gola was a former co-worker at Robust Intelligence and worked as a software engineer at Facebook from 2015 to 2019. He received his B.S.E. in electrical engineering from Princeton University in 2015. In April 2023, Benchmark led a seed round of $10 million for LangChain, providing the founders with the capital to further empower developers to build applications powered by language models.

Product

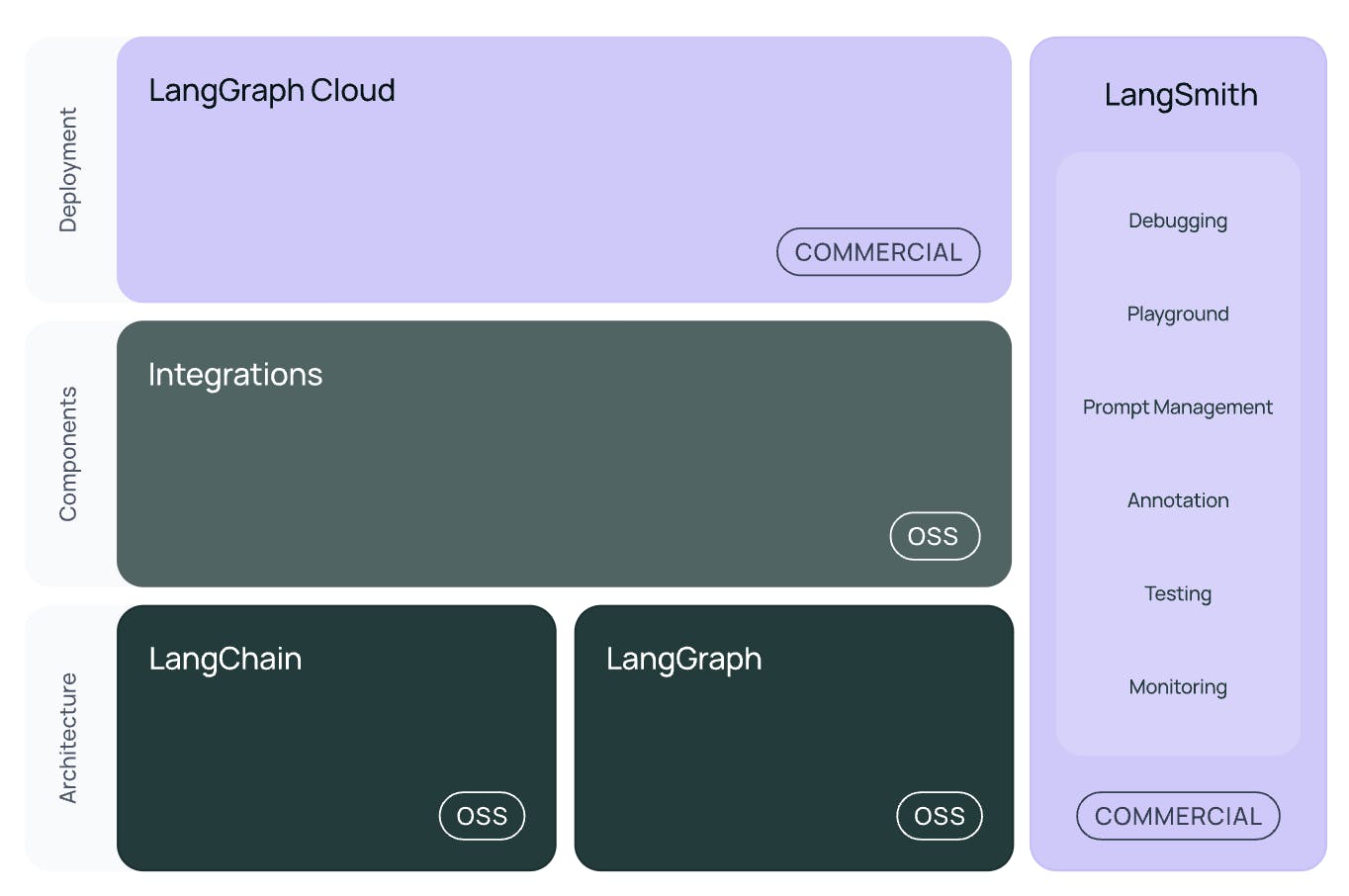

Source: LangChain

LangChain

LangChain offers a versatile, open-source framework that empowers LLM application developers to streamline their development and deployment processes. At the core of this framework are its libraries, available in both Python and JavaScript, which serve as the foundational building blocks for assembling chains and agents. These libraries come with over 600 plug-and-play integrations and benefit from the contributions of more than 3K community members. By offering prebuilt implementations to handle tasks like natural language understanding, text generation, and data retrieval, the libraries free developers from wrestling with complex back-end intricacies.

In addition to these libraries, LangChain provides a collection of templates—a repository of reference architectures designed to shorten the development cycle. These templates enable developers to quickly deploy solutions for a range of use cases, from RAG chatbots to GPT-based research assistants. Beyond these high-level templates, LangChain also offers specialized prompt templates to ensure user inputs are optimally formatted for language models. These prompt templates can incorporate few-shot examples, compose multiple prompts, or partially format prompts, further accelerating and simplifying the customization of LLM-driven applications.
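The prompt-template idea described above can be illustrated with a minimal, standard-library sketch. This is not LangChain's API: the `FewShotPrompt` class, its `partial` and `format` methods, and the example strings are all hypothetical names chosen for illustration. It shows few-shot examples and partial formatting only.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class FewShotPrompt:
    """Hypothetical template: an instruction header, worked examples, then the new question."""

    template: str                               # str.format placeholders, e.g. "{tone}"
    examples: tuple = ()                        # (question, answer) pairs shown to the model
    bound: dict = field(default_factory=dict)   # variables filled in ahead of time

    def partial(self, **values):
        """Return a new template with some variables pre-filled (partial formatting)."""
        return FewShotPrompt(self.template, self.examples, {**self.bound, **values})

    def format(self, question, **values):
        """Render the final prompt string that would be sent to a model."""
        header = self.template.format(**{**self.bound, **values})
        shots = [f"Q: {q}\nA: {a}" for q, a in self.examples]
        return "\n\n".join([header, *shots, f"Q: {question}\nA:"])


base = FewShotPrompt(
    template="Answer in a {tone} tone for a {audience} audience.",
    examples=(("What is RAG?", "Retrieval augmented generation."),),
)
support_prompt = base.partial(tone="friendly")   # tone fixed once, audience supplied later
text = support_prompt.format("What is an agent?", audience="beginner")
print(text)
```

Keeping the template immutable and returning a new object from `partial` means a shared base prompt can be specialized per team or per use case without side effects.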

For smooth and scalable deployment, LangChain offers LangServe, a dedicated library that is designed to facilitate the deployment of LangChain chains as RESTful APIs. RESTful APIs (Representational State Transfer Application Programming Interfaces) are widely used in modern software development to enable seamless communication between applications and services. LangServe supports these APIs to ensure that chains can be effortlessly integrated into existing systems, providing compatibility and scalability across diverse application environments. This makes it possible to deploy sophisticated LLM-powered applications in a production setting without the need for extensive infrastructure modifications.

Collectively, these elements empower a range of features. LangChain’s standardized component interfaces simplify the process of working with different models and related components while making it easy to switch between providers like Anthropic, Cohere, and OpenAI. Its flexibility supports complex control flow, persistence for both short- and long-term memory, and human-in-the-loop configurations. Furthermore, LangChain’s built-in ingestion and retrieval capabilities let developers augment AI applications with company knowledge or user data, offering over 150 document loaders, 60 vector stores, 50 embedding models, and 40 retrievers. This breadth of tools ensures that integrating LLMs into existing data sources and infrastructure is both efficient and scalable.
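To make the provider-switching point concrete, here is a hedged sketch of what a standardized model interface can look like. The `ChatModel` protocol, the two fake provider classes, and `summarize` are hypothetical stand-ins written for this example; they are not LangChain classes and make no network calls.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical shared interface: a provider wrapper only needs `invoke`."""

    def invoke(self, prompt: str) -> str: ...


class FakeProviderA:
    """Stand-in for one vendor's model; upper-cases the prompt."""

    def invoke(self, prompt: str) -> str:
        return f"[provider-a] {prompt.upper()}"


class FakeProviderB:
    """Stand-in for another vendor's model; reverses the prompt."""

    def invoke(self, prompt: str) -> str:
        return f"[provider-b] {prompt[::-1]}"


def summarize(model: ChatModel, text: str) -> str:
    """Application code depends on the interface, not on a specific vendor."""
    return model.invoke(f"Summarize: {text}")


# Swapping providers is a one-line change at the call site.
print(summarize(FakeProviderA(), "hi"))
print(summarize(FakeProviderB(), "hi"))
```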

LangGraph

LangGraph, released in January 2024, is a low-level framework that empowers developers to create agent and multi-agent workflows, offering fine-grained control over both the flow and state of AI applications. Built-in persistence capabilities enable advanced human-in-the-loop controls and memory features, making it possible to manage complex interactions and ensure continuity over time.

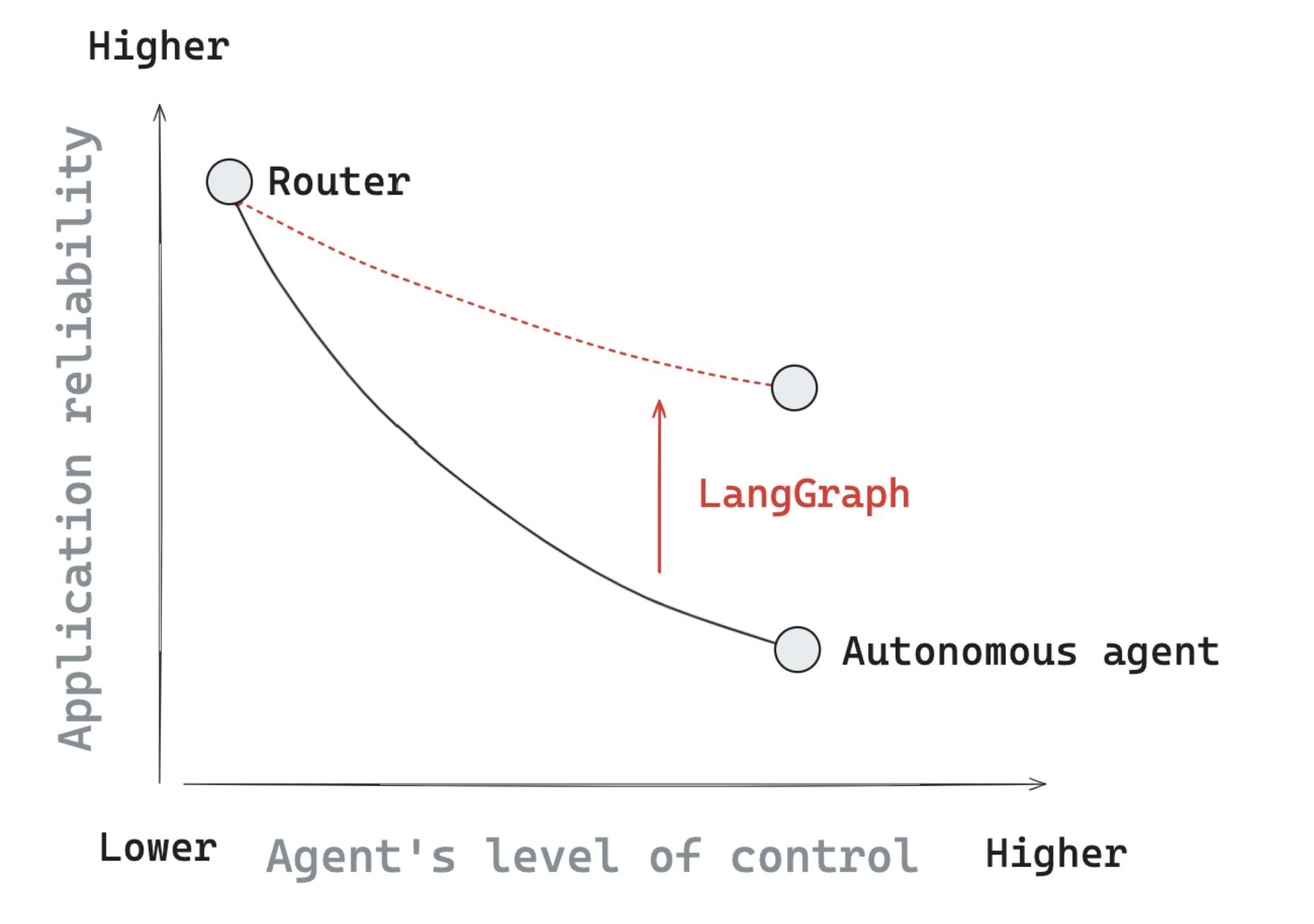

LangGraph addresses the increasing complexity of LLM-powered applications. While LLMs are particularly powerful when connected to external systems—like a retriever or APIs—developers often create a control flow of steps before or after LLM calls to ensure correctness and reliability. Traditional fixed workflows, commonly called “chains”, can be too rigid, limiting adaptability. By contrast, agents allow LLMs to determine their own paths through a set of potential steps. This results in more intelligent and efficient systems that can use the LLM to route between paths, decide which tools to call, and decide when further work is needed. However, increasing LLM autonomy can reduce reliability, as models may behave unpredictably or choose ineffective tools. LangGraph is designed to balance this trade-off, preserving reliability while granting agents more freedom.

Source: LangChain

Among the key features that LangGraph provides is greater controllability, enabling developers to represent workflows as nodes and edges where nodes share memory and edges dictate deterministic or conditional flows. LangGraph also introduces persistence, allowing short- or long-term storage of application state. This makes it possible to maintain continuity and recall past interactions effectively. The framework’s human-in-the-loop support ensures developers or users can pause and review agent actions, injecting oversight to enhance accuracy and accountability. Additionally, LangGraph offers streaming functionality so developers and end-users can observe the application’s state, including tool usage and token emissions, in real time.
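The nodes-and-edges model can be sketched in a few lines of plain Python. This is an illustrative toy, not LangGraph's implementation or API: `TinyGraph`, `END`, and the `draft` and `review` functions are hypothetical names. Nodes return updates to a shared state dictionary, a deterministic edge always points to one target, and a conditional edge picks the next node from the state.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"  # hypothetical sentinel marking the end of the workflow


class TinyGraph:
    """Toy workflow graph: nodes update shared state, edges choose the next node."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.edges: Dict[str, Callable[[State], str]] = {}

    def add_node(self, name, fn):
        self.nodes[name] = fn

    def add_edge(self, source, target):
        # Deterministic edge: always route to the same target.
        self.edges[source] = lambda _state: target

    def add_conditional_edge(self, source, router):
        # Conditional edge: the router inspects the state and returns a node name.
        self.edges[source] = router

    def run(self, start, state, max_steps=20):
        current = start
        for _ in range(max_steps):
            if current == END:
                return state
            state = {**state, **self.nodes[current](state)}  # merge node output into state
            current = self.edges[current](state)
        raise RuntimeError("step limit reached; possible cycle")


def draft(state):
    attempt = state["attempts"] + 1
    return {"attempts": attempt, "answer": f"draft {attempt}"}


def review(state):
    # Stand-in for an LLM or human reviewer: approve on the second attempt.
    return {"approved": state["attempts"] >= 2}


graph = TinyGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")
graph.add_conditional_edge("review", lambda s: END if s["approved"] else "draft")
result = graph.run("draft", {"attempts": 0})
print(result)
```

The step limit is a simple guard for the reliability trade-off discussed above: a loop between `draft` and `review` is allowed, but it cannot run forever.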

In October 2024, LangChain announced support for long-term memory in LangGraph. Previously, checkpointers provided only “short-term memory,” limiting an agent’s ability to learn across multiple conversations. With the introduction of cross-thread memory, LangGraph now enables agents to recall information from past conversations. This relies on a persistent document store where developers can put, get, and search for historical data, allowing agents to improve over time based on user feedback and preferences.
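A rough sketch of the put, get, and search pattern follows, assuming an in-memory dictionary in place of a persistent document store. The `InMemoryMemoryStore` class, the namespace tuples, and the substring search are assumptions made for illustration; they are not LangGraph's store API, and a production store would typically use persistent storage and a richer search.

```python
from typing import Dict, List, Optional, Tuple

Namespace = Tuple[str, ...]


class InMemoryMemoryStore:
    """Hypothetical cross-thread memory: documents keyed by (namespace, key)."""

    def __init__(self) -> None:
        self._docs: Dict[Tuple[Namespace, str], dict] = {}

    def put(self, namespace: Namespace, key: str, value: dict) -> None:
        """Create or overwrite one memory document."""
        self._docs[(namespace, key)] = dict(value)

    def get(self, namespace: Namespace, key: str) -> Optional[dict]:
        """Return the document, or None if nothing was stored under that key."""
        return self._docs.get((namespace, key))

    def search(self, namespace: Namespace, text: str) -> List[dict]:
        """Naive case-insensitive substring search within one namespace."""
        needle = text.lower()
        return [
            doc
            for (ns, _key), doc in self._docs.items()
            if ns == namespace and any(needle in str(v).lower() for v in doc.values())
        ]


store = InMemoryMemoryStore()
user = ("users", "alice")
store.put(user, "diet", {"preference": "vegetarian"})     # learned in one conversation
store.put(user, "tone", {"preference": "short answers"})  # learned in another
hits = store.search(user, "vegetarian")                    # recalled in a later conversation
print(hits)
```

Scoping memories by namespace (here, per user) is what lets an agent recall preferences across threads without mixing up users.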

LangGraph’s components support developers throughout the entire lifecycle of an application. The LangGraph Platform provides the infrastructure for deploying LangGraph agents built on the open-source framework. Complementing this, the LangGraph Server exposes APIs, while the LangGraph SDKs act as clients to these APIs. Developers can leverage the LangGraph CLI to build servers more efficiently and use LangGraph Studio as a UI and debugging environment. LangGraph Cloud offers a simplified method for deploying LangGraph objects, making it easier to scale and manage applications in production environments.

LangSmith

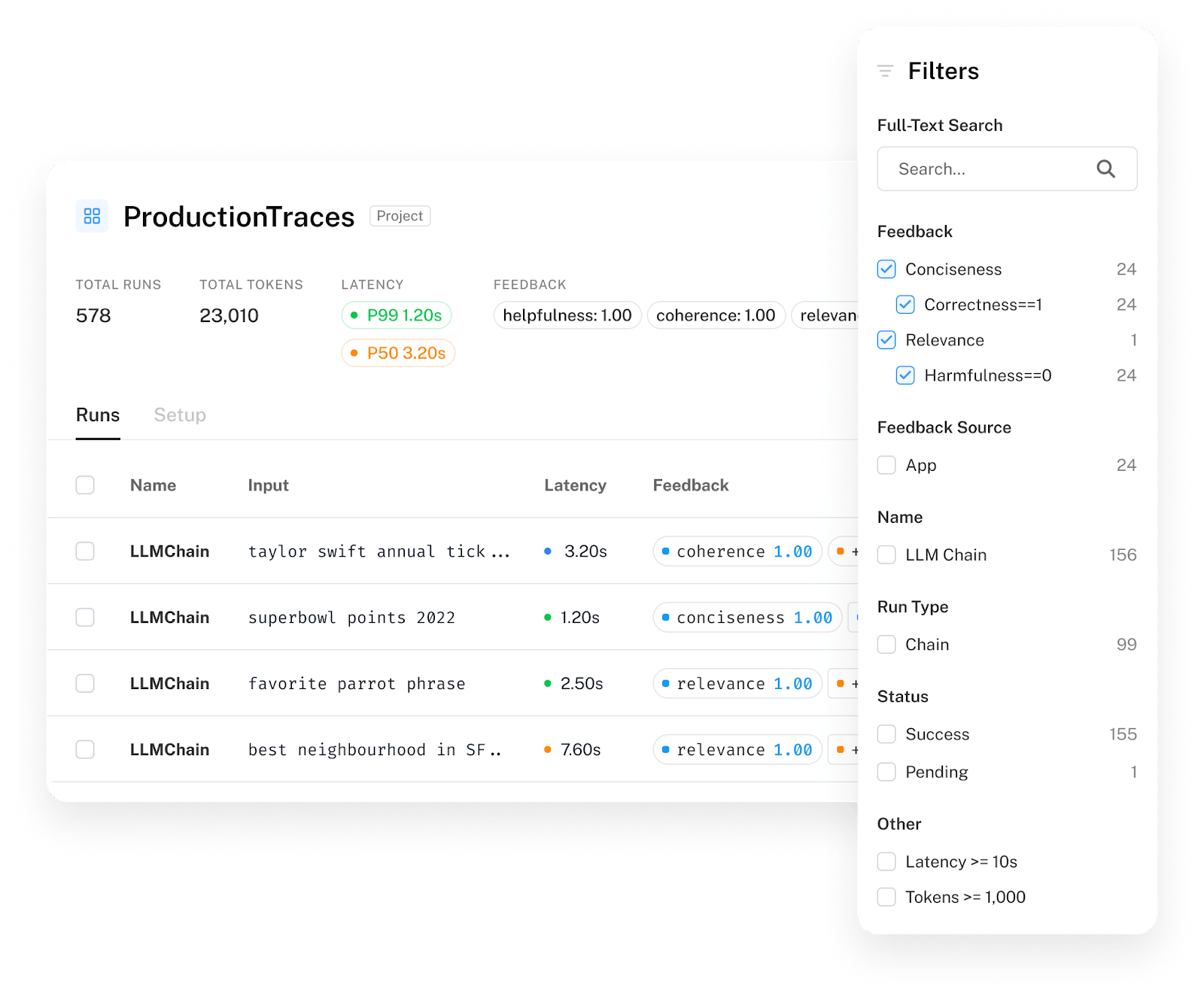

LangSmith, released in July 2023, is a platform designed for building production-grade LLM applications. Its primary goal is to help developers confidently move from prototype to production, allowing them to closely monitor and evaluate their applications. While LangChain’s libraries, integrations, and templates enable rapid prototyping—sometimes in as few as 5 lines of code—scaling these prototypes into reliable production systems remains challenging.

Developers working with LLM-based applications often face several obstructions. It can be difficult to know the exact final prompt an LLM receives after template formatting, or to fully understand the output returned at each step before it undergoes post-processing. Additionally, the sequence of calls—whether to the LLM itself or to other integrated resources—can be opaque. Tracking token usage, managing costs, and debugging latency issues are all tasks made more complicated by the nature of LLMs. Furthermore, developers may struggle with building suitable datasets for testing, establishing meaningful metrics for evaluating performance, and gaining insights into how end-users interact with the product.

Traditional debugging, testing, and monitoring solutions are not designed with these unique LLM-driven complexities in mind. LangSmith addresses these concerns by providing debugging features that offer full visibility into model inputs and outputs at every step. To strengthen the development process, LangSmith also includes testing capabilities, enabling developers to easily run chains and prompts against curated or custom datasets to build intuition about LLM behavior.
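The kind of step-level visibility described here can be approximated with a small tracing decorator. This is a sketch under stated assumptions, not LangSmith's SDK: `traced`, the in-process `TRACE` list, and the `fake_llm` stand-in are hypothetical, and a real platform would send these records to a backend rather than keep them in memory.

```python
import functools
import time

TRACE = []  # hypothetical in-process trace log


def traced(step_name):
    """Record inputs, output, and latency for each call to the wrapped step."""

    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            output = fn(*args, **kwargs)
            TRACE.append({
                "step": step_name,
                "inputs": {"args": args, "kwargs": kwargs},
                "output": output,
                "latency_s": time.perf_counter() - start,
            })
            return output

        return wrapper

    return decorator


@traced("format_prompt")
def format_prompt(question):
    return f"Answer briefly: {question}"


@traced("fake_llm")
def fake_llm(prompt):
    # Stand-in for a model call so the sketch runs offline.
    return f"(model reply to: {prompt})"


answer = fake_llm(format_prompt("What is LangSmith?"))
for record in TRACE:
    print(record["step"], "->", record["output"])
```

Because the exact prompt passed to `fake_llm` is captured as an input, the trace answers the question raised above about what the model actually received after template formatting.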

For performance measurement, LangSmith integrates with open-source evaluation modules that enable heuristic evaluations like regexes or LLM evaluation. While there are concerns about the LLM-assisted evaluation, LangChain cites evidence from Anthropic to defend its approach and expects the time and capital spent on this method to dramatically come down. Finally, LangSmith delivers comprehensive monitoring functionalities. Developers can track latency, costs, and application-level metrics to understand how users engage with the product, identify bottlenecks, and make informed improvements.
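A heuristic evaluation of the regex kind can be sketched as follows. The helper names (`regex_evaluator`, `run_eval`), the order-ID pattern, and the sample outputs are all hypothetical and are not taken from LangSmith or LangChain's evaluation modules.

```python
import re


def regex_evaluator(pattern):
    """Build an evaluator that scores 1 when the output matches the pattern, else 0."""
    compiled = re.compile(pattern)

    def evaluate(output: str) -> dict:
        return {"score": 1 if compiled.search(output) else 0}

    return evaluate


def run_eval(outputs, evaluator):
    """Return the fraction of outputs that pass the evaluator."""
    scores = [evaluator(o)["score"] for o in outputs]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical check: a support bot's reply should cite an order ID like ORD-123456.
has_order_id = regex_evaluator(r"\bORD-\d{6}\b")
outputs = [
    "Your order ORD-123456 has shipped.",
    "Sorry, I could not find that order.",
    "Refund issued for ORD-000042.",
]
pass_rate = run_eval(outputs, has_order_id)
print(f"pass rate: {pass_rate:.2f}")
```

Checks like this are cheap and deterministic, which makes them a useful complement to LLM-assisted evaluation when the expected output has a recognizable shape.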

Source: LangChain

To enable close collaboration with development teammates, LangSmith also allows developers to share a chain trace with colleagues, clients, or end users, bringing explainability to anyone with the shared link. LangSmith Hub is used to craft, version, and comment on prompts without the need for engineering experience. Annotation Queues add human labels and feedback on traces, and Datasets allow teams to collect examples and construct datasets from production data or existing sources to be used for evaluations, few-shot prompting, and even fine-tuning. In doing so, LangSmith bridges the gap between quick prototypes and stable, scalable production LLM applications in the context of large development teams.

Market

Customer

As of February 2025, LangChain caters to enterprises of all sizes across diverse industries, including financial, business, legal tech, medical tech, enterprise technology, and more. Its customers include companies like Morningstar, Boston Consulting Group, Wordsmith, OpenRecovery, Microsoft, and Paradigm (YC24).

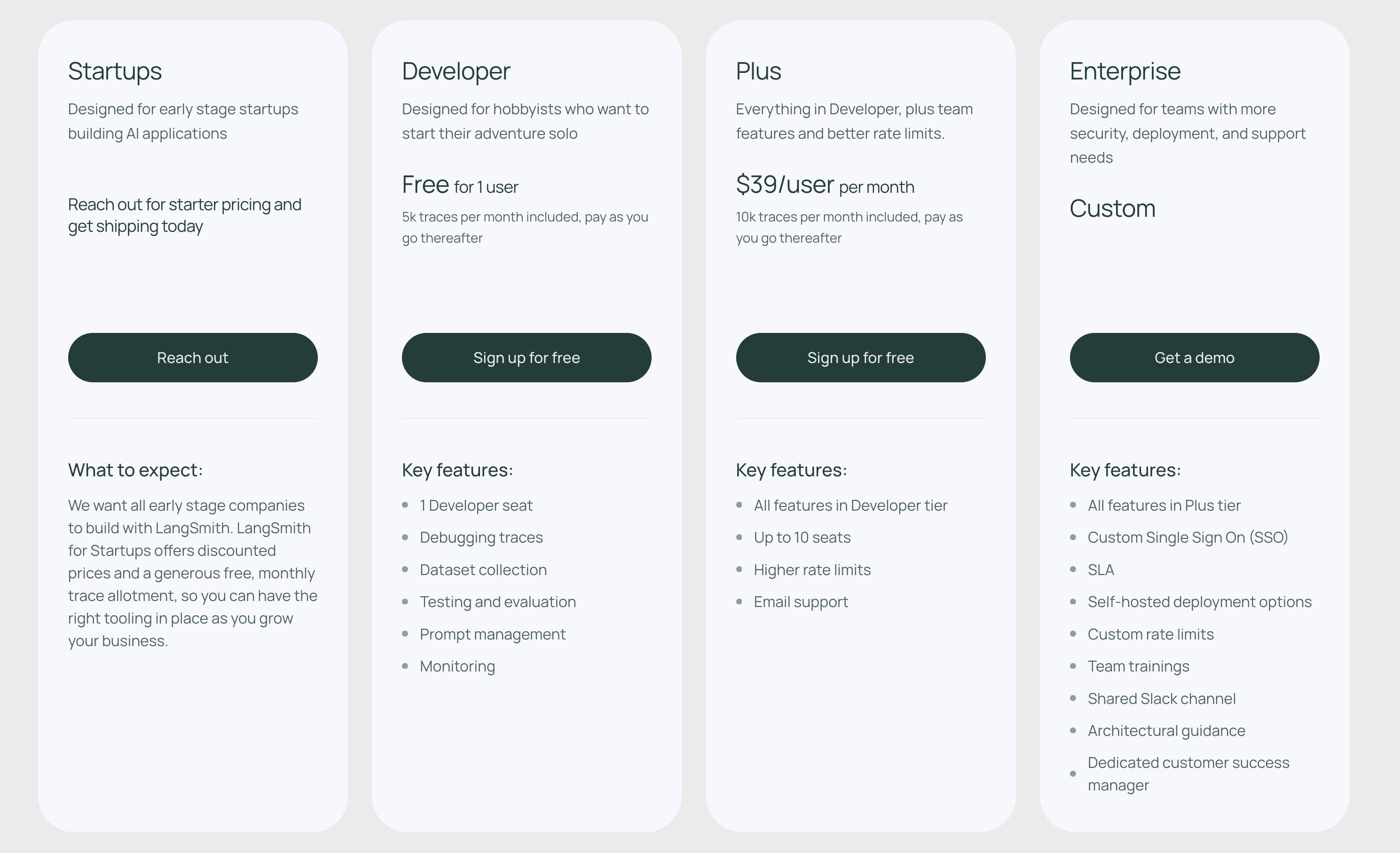

By serving both large and small enterprises, LangChain is positioned to assist large enterprises in transitioning to new AI technologies and also help startups building AI agents scale rapidly. This strategy lets LangChain secure collaborations with mature enterprises like Microsoft, as well as become early partners with nascent, agent-focused startups as they scale and use LangChain’s products. For instance, on its pricing page for LangSmith, the company states that “[LangChain] wants all early-stage companies to build with LangSmith. LangSmith for Startups offers discounted prices and a generous free, monthly trace allotment, so you can have the right tooling in place as you grow your business.”

Market Size

The global application development software market was valued at approximately $255 billion in 2024 and is projected to grow at a CAGR of 20.9%, reaching around $1.7 trillion by 2034. More specifically, LangChain operates in the AI application development tools industry, which was valued at $4.8 billion in 2023 and is estimated to grow at a CAGR of over 23% between 2024 and 2032 to $30 billion by 2032. As of December 2023, North America dominated the AI code tools market with around 35% of the market share.

With the rapid advancements in AI capabilities, exemplified by the September 2024 release of OpenAI’s o1 reasoning model, and with the AI agent market growing at a 44.8% CAGR, Gartner predicts that by 2028, 33% of enterprise software applications will incorporate agentic AI. This is up from less than 1% in 2024 and would enable 15% of day-to-day work decisions to be made autonomously.

Given these nascent capabilities, investors put over $8.2 billion into AI agent startups from October 2023 to October 2024 across 156 deals, an 81.4% increase YoY. LangChain is positioned to offer these AI agent startups the tools they need to scale their operations.

Competition

Incumbents

OpenAI

OpenAI, the company behind ChatGPT and foundation models GPT-4o and o1, offers its own software and APIs for building AI-powered applications. Through its platform, developers can access models like GPT-4o or GPT-4o mini, and leverage a range of APIs tailored to different workflows. These include a Chat Completions API for building chatbots, a Realtime API for low-latency, multimodal interactions, an Assistants API for executing complex multistep tasks, and a Batch API for high-volume data processing. Additional capabilities cover knowledge retrieval through Assistants File Search (beta), iterative code execution via code interpreter, custom function calling for interacting with codebases, and vision for image-related queries.

Developers can ensure structured outputs with JSON mode and leverage streaming for real-time responses. OpenAI’s Playground simplifies model exploration, while fine-tuning APIs and a custom model program provide further flexibility. Lastly, community libraries, built and maintained by developers who use OpenAI’s platform, give teams more choices, and developers can contribute new ones. OpenAI employees also publish a collection of common use cases for its foundation models in the OpenAI Cookbook.

OpenAI’s developer platform is used by startups and large enterprises like Harvey and Salesforce. The platform’s pricing is based on the number of tokens and the type of model used to develop applications. For instance, its GPT-4o model costs $1.25 per 1 million input tokens.

Amazon Bedrock

Amazon Web Services (AWS) uses its cloud infrastructure to deliver Amazon Bedrock, an end-to-end AI application orchestration solution. It integrates models from multiple leading providers (AI21 Labs, Anthropic, Cohere, Meta, and Stability AI) alongside Amazon’s own Titan models, all accessible through a single API. Developers can test model suitability across modalities via interactive playgrounds and customize models using fine-tuning and continuous pre-training on proprietary data. Bedrock’s Knowledge Bases feature provides fully managed RAG capabilities, automating ingestion, retrieval, prompt augmentation, and citations, so developers do not need to write custom code to integrate data sources and manage queries. Data remains encrypted throughout, with control over encryption keys through AWS KMS.

Additionally, Agents for Amazon Bedrock orchestrate and execute multistep tasks, maintain memory, securely generate code, and dynamically create prompts from user instructions, action groups, and knowledge bases. Unlike OpenAI’s developer platform, Amazon Bedrock does not offer a dedicated open-source community library as of February 2025. Instead, it offers a Heroes Content library that provides developers with authored content across topics like analytics and databases in the form of blogs, videos, slide presentations, podcasts, and more. AWS also provides a community forum.

Amazon Bedrock serves large enterprises across a variety of industries, including Cisco, Workday, and NASDAQ. The platform’s pricing model contains two plans based on model inference and customization. The first plan, called On-Demand and Batch, allows developers to use foundation models on a pay-as-you-go basis without having to make any time-based term commitments. The second plan, called Provisioned Throughput, lets developers provision sufficient throughput to meet their application's performance requirements in exchange for a time-based term commitment.

Startups

Braintrust

Founded in 2023 by Ankur Goyal, Braintrust is an end-to-end platform for building and evaluating LLM-based applications. With $45 million in total funding as of February 2025 from investors including Andreessen Horowitz and Databricks Ventures, Braintrust provides support for iterative experimentation, performance insights, real-time monitoring, and data management for building AI applications. With Braintrust’s Prompt playground, developers can prototype with different prompts and models. Built-in tools including tracing, logging, evaluations, function calling, and self-hosting help evaluate how models and prompts perform in production. Braintrust’s product is built to cater to both technical and non-technical teams with code and UI solutions including prompting, scoring, and curating datasets. Finally, its developer community contains a Cookbook with common use cases of Braintrust that is open-source and hosted on GitHub.

Braintrust serves AI teams at large technology companies like Instacart, Stripe, Zapier, Notion, and Ramp*. Its tiered pricing structure gives options for individual developers, students, and enterprises. For teams of up to 5 users, Braintrust offers the Builder plan for free. This includes 1K spans per week, access to the prompt playground, custom scorers and tools, shareable experiments, and a Discord community. The student plan has everything in the Builder plan but does not have a limit on the number of users. The custom Enterprise plan has increased usage limits, flexible pricing, private VPC, and a shared Slack channel.

Humanloop

Founded in 2020 by Raza Habib, Jordan Burgess, and Peter Hayes, Humanloop specializes in LLM evaluation for enterprise development teams. In November 2023, Humanloop raised $5 million in a seed plus round led by Y Combinator, bringing total funding to $7.9 million. The platform helps developers build prompts and agents through an interactive prompt editor with full version tracking. It supports popular models like GPT-4o and Llama-3.5. For evaluation, it integrates with continuous integration and deployment pipelines to prevent regressions, offers automatic AI and code assessments, and provides UI tools for human review. For observability, Humanloop features alerts, guardrails, online evaluations, and robust tracing and logging to identify and address issues in RAG systems.

Humanloop has customers such as Gusto, Vanta, and Macmillan Education. Its eval products have helped Gusto see a 25% performance improvement, Filevine increase revenue by 200%, and Dixa increase product velocity 3x. Humanloop offers a two-tiered pricing model for individual developers and enterprises. The basic plan is free for 2 users and includes 50 eval runs and 10K logs per month. The custom-priced Enterprise plan includes SSO and SAML, role-based access controls, hands-on support with an SLA, and a VPC deployment add-on, as well as enterprise support. To support early-stage VC-backed startups, Humanloop offers a specialized program that requires companies to apply to receive custom pricing.

Differentiation

Incumbent players like OpenAI and Amazon Bedrock offer fewer out-of-the-box templates and open-source libraries than LangChain. While these platforms provide integrated environments and foundation models, LangChain’s open-source community and extensive library of integrations and templates present more options, from model choices to pricing structures, offering teams of all types greater customizability for rapid prototyping.

While Braintrust and Humanloop both target the enterprise layer of LLM application development, Braintrust caters to both technical and non-technical teams with a robust playground to experiment with applications, and Humanloop offers robust guardrails, logging, and role-based access for regulated environments. Compared to its competition, LangChain maintains a competitive edge due to its large open-source community, wider range of API libraries, and templates that support rapid prototyping and integration with various models—advantages that cater to performance-focused teams from early experimentation to production-scale deployment.

Business Model

LangChain’s business model revolves around offering paid tiers for its complementary SaaS platforms, LangSmith and LangGraph, while the core LangChain framework remains open-source.

LangSmith operates on a tiered pricing structure. Startups benefit from discounted custom pricing and a free monthly trace allotment, allowing them to begin experimenting without a large upfront cost. Individual developers and hobbyists can adopt the Developer plan, which is free and includes 5K traces per month, with the option to pay as you go for additional usage. The Plus plan builds on the Developer plan by providing 10K included traces, higher rate limits, support for up to 10 seats, and dedicated email assistance for $39 per user per month, while still offering pay-as-you-go scalability.

For larger enterprises, a custom Enterprise plan includes all Plus-level features, along with single sign-on (SSO), SLAs, self-hosting options, custom rate limits, personalized team training, a shared Slack channel, architectural guidance, and a dedicated customer success manager. All LangSmith plans provide access to debugging traces, dataset collection, human labeling, testing and evaluation tools, prompt management, and monitoring capabilities. Bulk data export is included in every plan except the Developer plan.

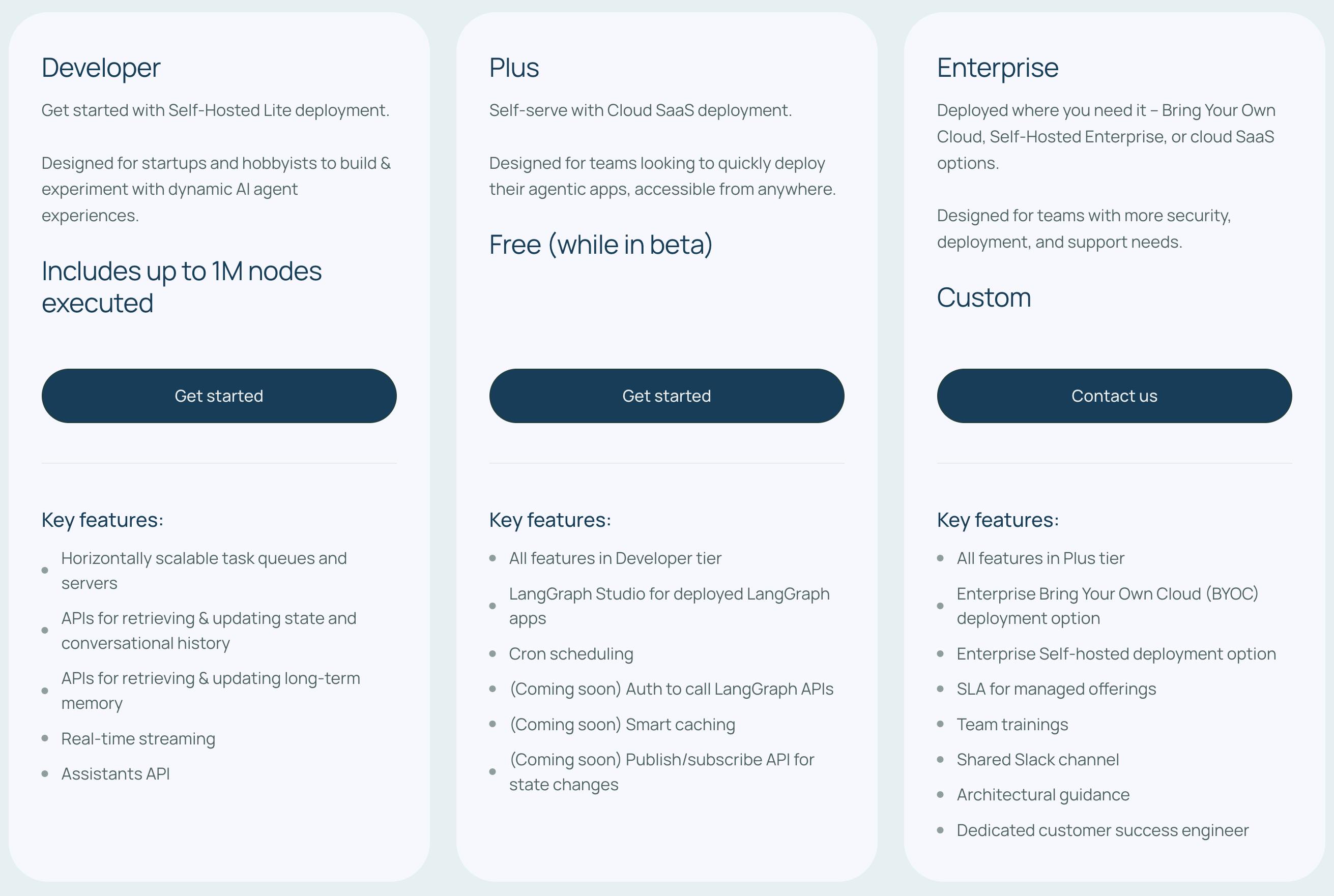

Source: LangChain

LangGraph, LangChain’s platform for dynamic AI agent experiences, follows a similar tiered approach. The Developer plan, intended for startups and hobbyists, allows for self-hosted lite deployment and includes up to 1 million nodes executed. It comes with features like horizontally scalable task queues and servers, APIs for state and conversational history management, long-term memory APIs, real-time streaming, and the Assistants API. The Plus plan is free as of February 2025 while in beta. It supports a cloud SaaS deployment model and includes all Developer plan features alongside LangGraph Studio for deployed LangGraph applications and Cron scheduling. Planned enhancements to the Plus plan include authentication for calling LangGraph APIs, smart caching, and a publish/subscribe API for state changes.

For organizations requiring greater security, deployment flexibility, and support, the Enterprise plan offers all Plus-tier features with additional options for Bring Your Own Cloud (BYOC), self-hosted enterprise deployments, SLA-backed managed offerings, team training sessions, a shared Slack channel for support, architectural guidance, and a dedicated customer success engineer. Like LangSmith’s Enterprise tier, LangGraph’s Enterprise pricing is custom and requires direct outreach.

Source: LangChain

Traction

As of October 2024, more than 132K LLM applications have been built using LangChain, with 4K open-source contributors in its community. Total downloads have also increased to more than 130 million across Python and JS platforms. On its GitHub page as of February 2025, LangChain has over 99K stars, over 16K forks, and 28 million downloads per month.

As of February 2025, LangSmith has over 250K user signups, 1 billion trace logs, and more than 25K monthly active teams. Some notable customers as of December 2024 include Klarna, Snowflake, and Boston Consulting Group (BCG). LangChain announced LangSmith’s closed beta in July 2023. By February 2024, it had 70K users signed up and more than 5K companies using LangSmith every month.

Valuation

LangChain raised a $25 million Series A round in February 2024 led by Sequoia Capital, putting the company’s post-money valuation at $200 million and bringing its total amount raised to $35 million. New investors also participated in this round, including Lux Capital and Conviction Partners. Prior to its Series A, LangChain raised a $10 million seed round led by Benchmark Capital in March 2023.

According to an unverified revenue estimate, LangChain’s ARR was under $5 million as of 2023. Although official figures are not available, it is helpful to note that LangChain made its first paid offering, LangSmith, generally available in February 2024, and that the product has achieved early traction with over 5K monthly enterprise users. Considering the recent introduction of LangSmith and the company’s early stage of monetization, it is reasonable to estimate that LangChain’s current ARR remains limited.

Key Opportunities

Ecosystem Lock-In

LangChain’s large open-source contributor base and its extensive library of integrations (600+ plug-ins) present an opportunity to create a powerful ecosystem effect. By continuing to encourage community involvement—such as sharing templates and adding connections to new language models, vector databases, and retrieval tools—LangChain can establish itself as a key resource. This community-led innovation can translate into faster development cycles, enhanced product offerings, and a lasting moat as new AI application orchestration and workflows emerge.

Deepening Enterprise Integration

With a growing enterprise customer base (e.g., Morningstar, Boston Consulting Group, and collaborations with Microsoft), there’s a clear opportunity to offer more enterprise-grade features. Research from IBM shows that while there is growth in enterprise adoption due to widespread deployment by early adopters, barriers keep 40% in the exploration and experimentation phases. The top barriers preventing deployment include limited AI skills and expertise (33%), too much data complexity (25%), and ethical concerns (23%).

In addition, 35% of companies say that a lack of skills for implementation is a big inhibitor. Given these statistics, LangChain can include advanced security and compliance capabilities, custom SSO, SLA-backed support, architectural guidance, and on-premise or bring-your-own-cloud deployments, to address these large inhibitors. As AI adoption accelerates in large organizations, bundling LangChain’s existing frameworks (LangGraph, LangSmith) into robust, turnkey enterprise solutions can drive significant revenue and customer loyalty.

Commercializing the Developer Ecosystem

LangChain already provides rich templates, workflows, and integrations—both for newcomers and advanced developers. By creating a marketplace for premium templates, specialized agent architectures, or certified integrations, LangChain can monetize the ecosystem it has built. Such a marketplace could attract professional developers and enterprise IT teams, accelerating adoption and subscription growth.

Key Risks

Reliance on External Model Providers

LangChain’s core value proposition hinges on easy integration with foundation models like OpenAI’s GPT-4 or Anthropic’s Claude. Any sudden changes—model pricing shifts, API deprecations, or stricter usage policies—could disrupt existing user workflows. This dependency on external model providers makes LangChain vulnerable to supplier-driven market changes.

Competitive Pressure from Incumbents

Established tech giants (e.g., AWS’s Bedrock, OpenAI’s integrated offerings) typically provide end-to-end AI solutions that may reduce the need for a separate orchestration framework. As these incumbents roll out features like native retrieval-augmented generation, integrated debugging, and agent management, LangChain risks losing market share if it cannot differentiate on flexibility, ecosystem breadth, or cost-effectiveness.

Complexity & Specialization

While LangChain aims to simplify development, the ecosystem of LLM-based tools and orchestration frameworks is becoming increasingly crowded, which makes differentiation necessary. For instance, while Braintrust and Humanloop both focus on evaluation and observability, Braintrust caters to both technical and non-technical teams while Humanloop aims to minimize costs and shipping time. LangChain, on the other hand, covers everything from prototyping to production. If its toolset grows broader and more complex without a clear specialization, developers may face steeper learning curves and gravitate toward more specialized solutions for particular tasks or teams, which could deter new users and erode market share.

Summary

LangChain is an open-source framework that streamlines the development of LLM-based applications by offering standardized component interfaces, flexible orchestration, and a rich ecosystem of integrations. Its products, including LangGraph and LangSmith, help developers go from prototype to production more easily. Despite intense competition from major cloud providers and specialized startups, LangChain’s open-source community advantage and rapid innovation have propelled it to a significant position in the space with the opportunity to expand its customer base.

*Contrary is an investor in Ramp through one or more affiliates.