Actionable Summary

Since the release of ChatGPT in November 2022, artificial intelligence startups have received an outsized proportion of investor capital and attention, with 49% of all venture funding in Q2 2024 going to AI and machine learning startups.

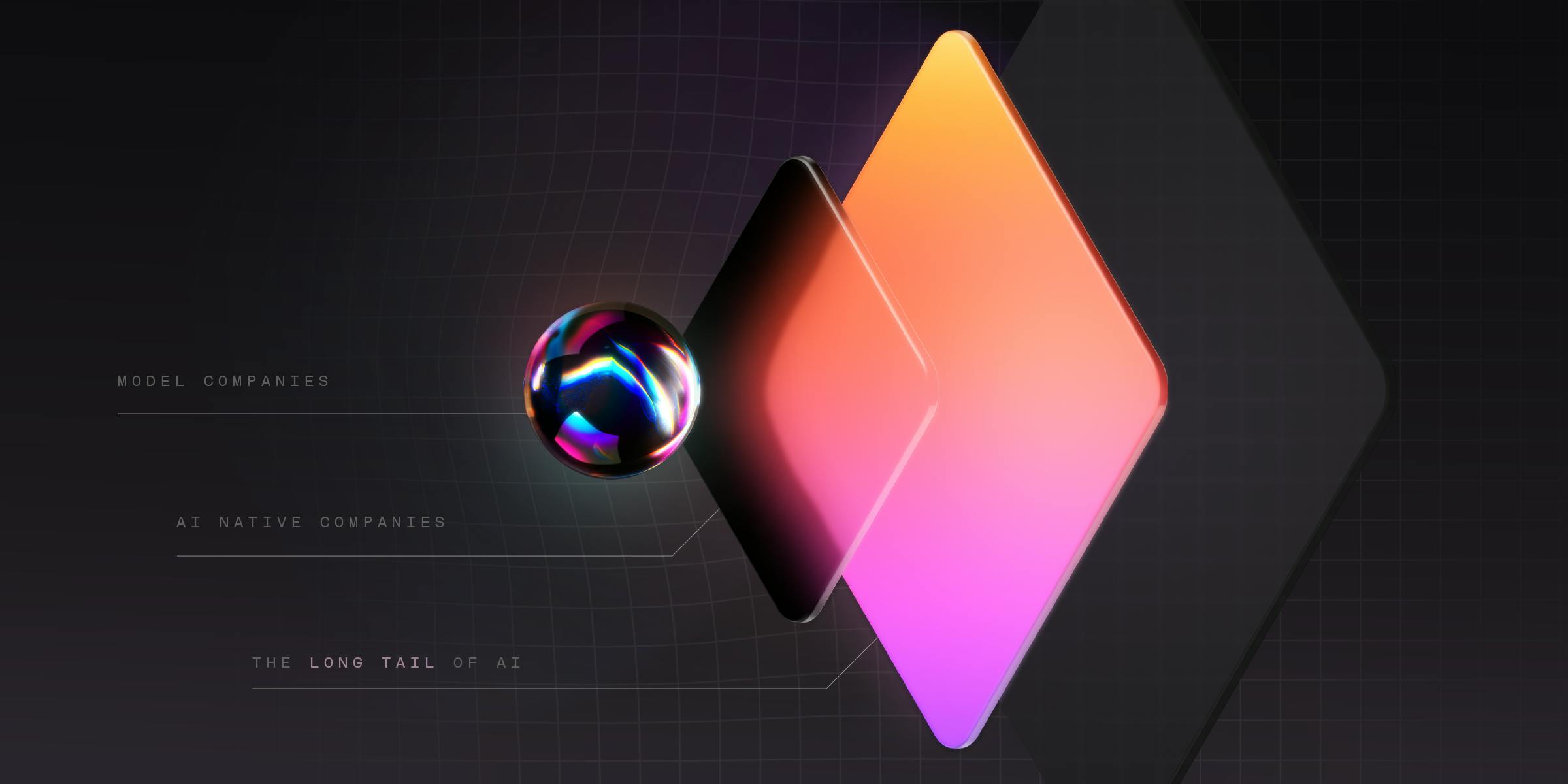

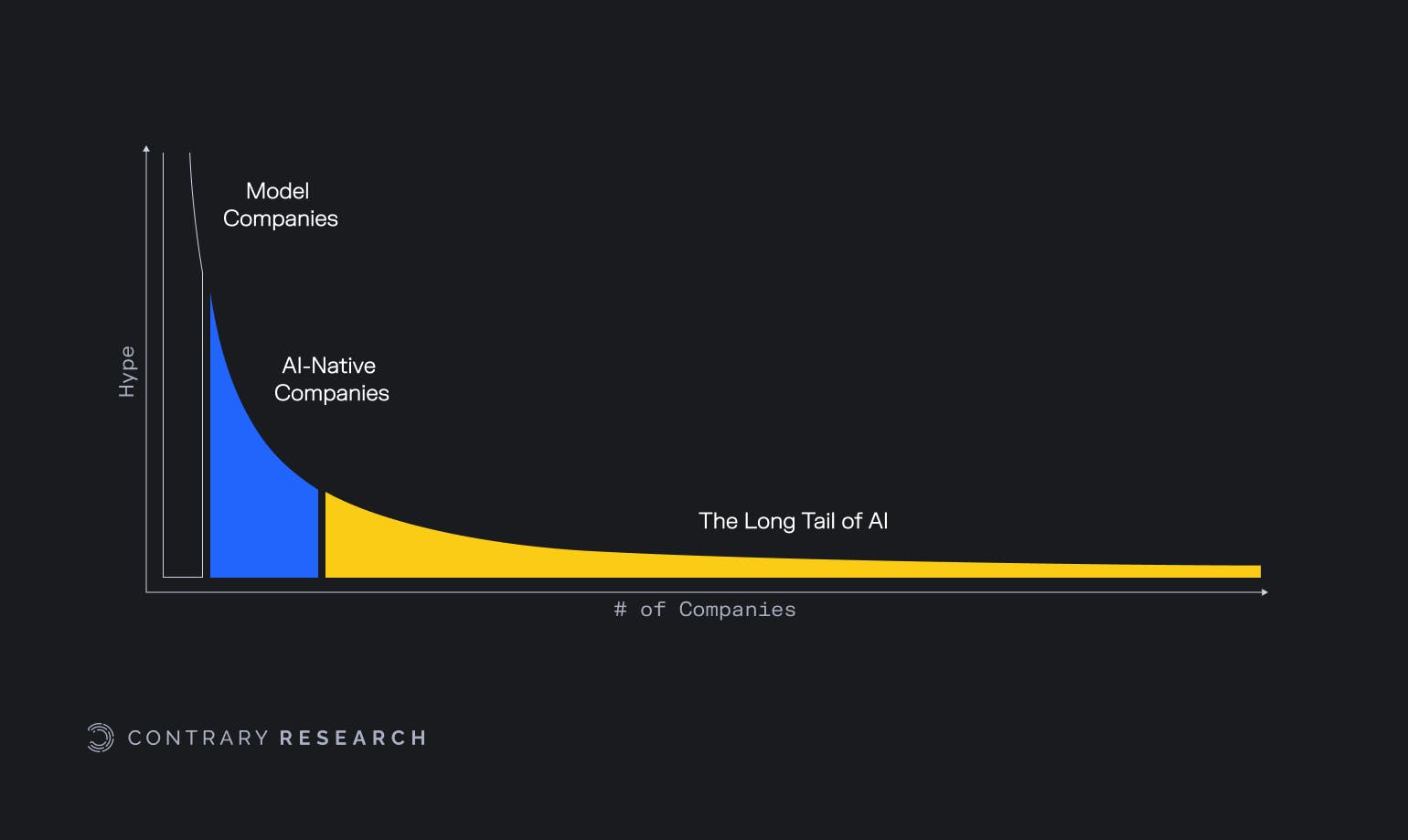

While all eyes are on model providers like OpenAI and AI-native companies like Perplexity and Jasper, the “non-AI” companies that will be impacted by artificial intelligence far outnumber their AI-focused counterparts.

We collectively refer to the impact of artificial intelligence on these other companies as “the long tail of AI.” This long tail can be divided into four categories, based on how companies choose to integrate AI: building independent models, leveraging existing closed-source models like OpenAI’s GPT-4, building on top of open-source models like Meta’s Llama, and using pre-built AI tools like ChatGPT.

Building an independent, proprietary model is the most resource-intensive way to leverage artificial intelligence. This approach is generally reserved for companies that have large, novel data sources from which they can derive unique insights, as well as the human and financial capital needed to train a new model from scratch.

Closed-source models, such as OpenAI’s GPT models or Anthropic’s Claude, which have billions, and possibly trillions, of parameters, can generate accurate, detailed outputs across a variety of fields, from coding to customer service, and they are easy to access via API.

Open-source models, like Mistral or Meta’s Llama, are also powerful tools, with the largest version of Llama 3.1 containing 405 billion parameters. Unlike closed-source LLMs, however, open-source models provide companies with increased transparency and flexibility, as their weights are available and can be adjusted to meet specific customer needs.

Third-party AI tools, such as ChatGPT, are the easiest to integrate as customers can simply pay to use a fully developed tool instead of investing in building or adjusting models internally.

While AI strategies vary from company to company, those that have shown success so far are using AI to complement their underlying businesses, replicating peers’ successes, and remaining flexible as artificial intelligence continues to develop.

The Power Law of Hype in AI

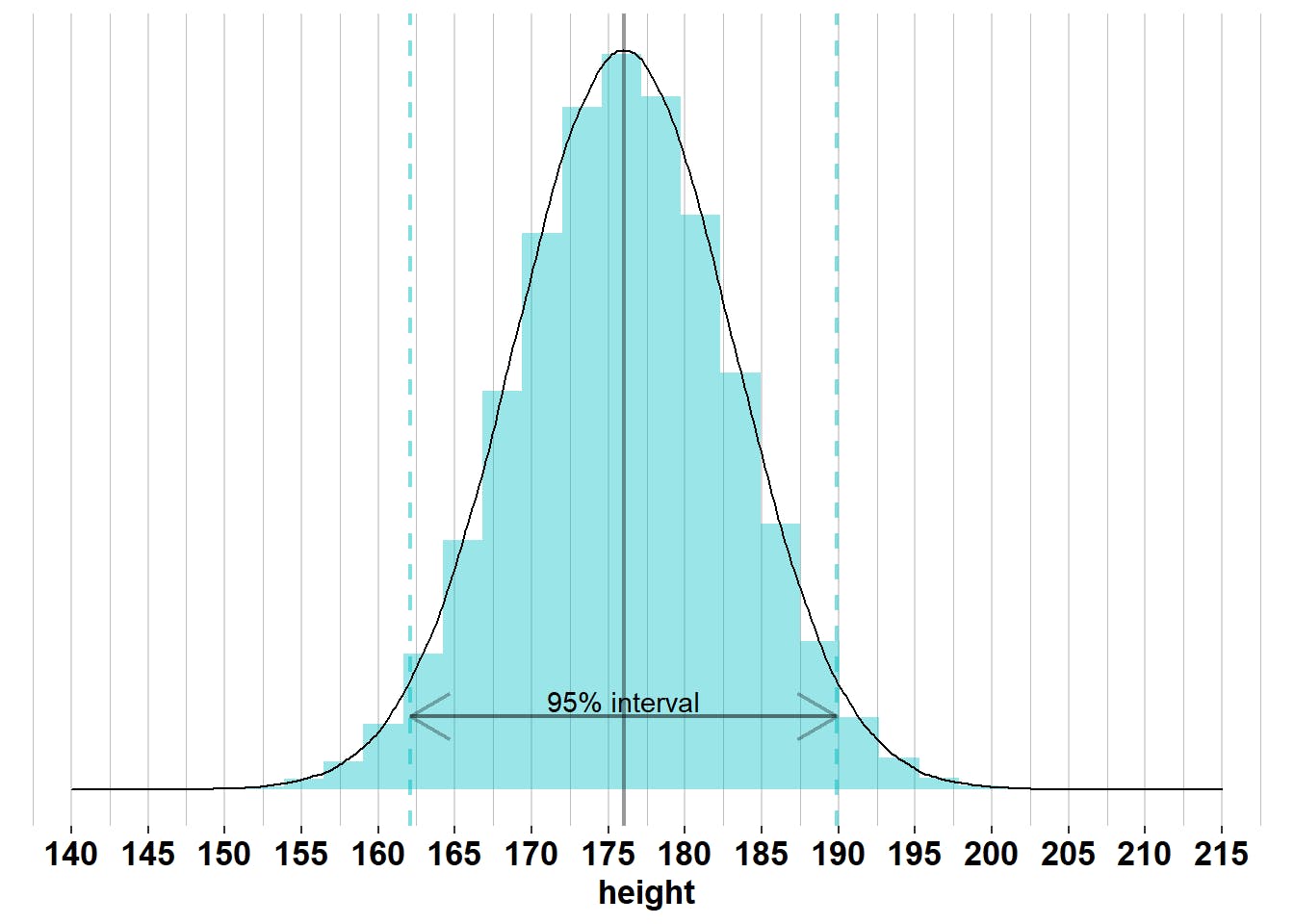

Normal distributions exist all around us, from IQs, to standardized test scores, to blood pressure levels across a population. Height is another classic example. Phenomena that follow a normal distribution are intuitive: the “average” outcome is the most common result, and probabilities decline symmetrically in both directions as you move further from the average.

Source: Bookdown

However, much of the world is defined not by normal distributions, but by less intuitive power laws where a small number of outliers determine the majority of results. Finance, specifically, is defined by extreme power laws. In public equities, just seven stocks, or roughly 1.4% of the S&P 500, were responsible for more than half of the index’s gains in 2023. At the other end of the financial market spectrum, venture capital is a hit-driven business that works because a few outliers generate the lion’s share of a fund’s returns.

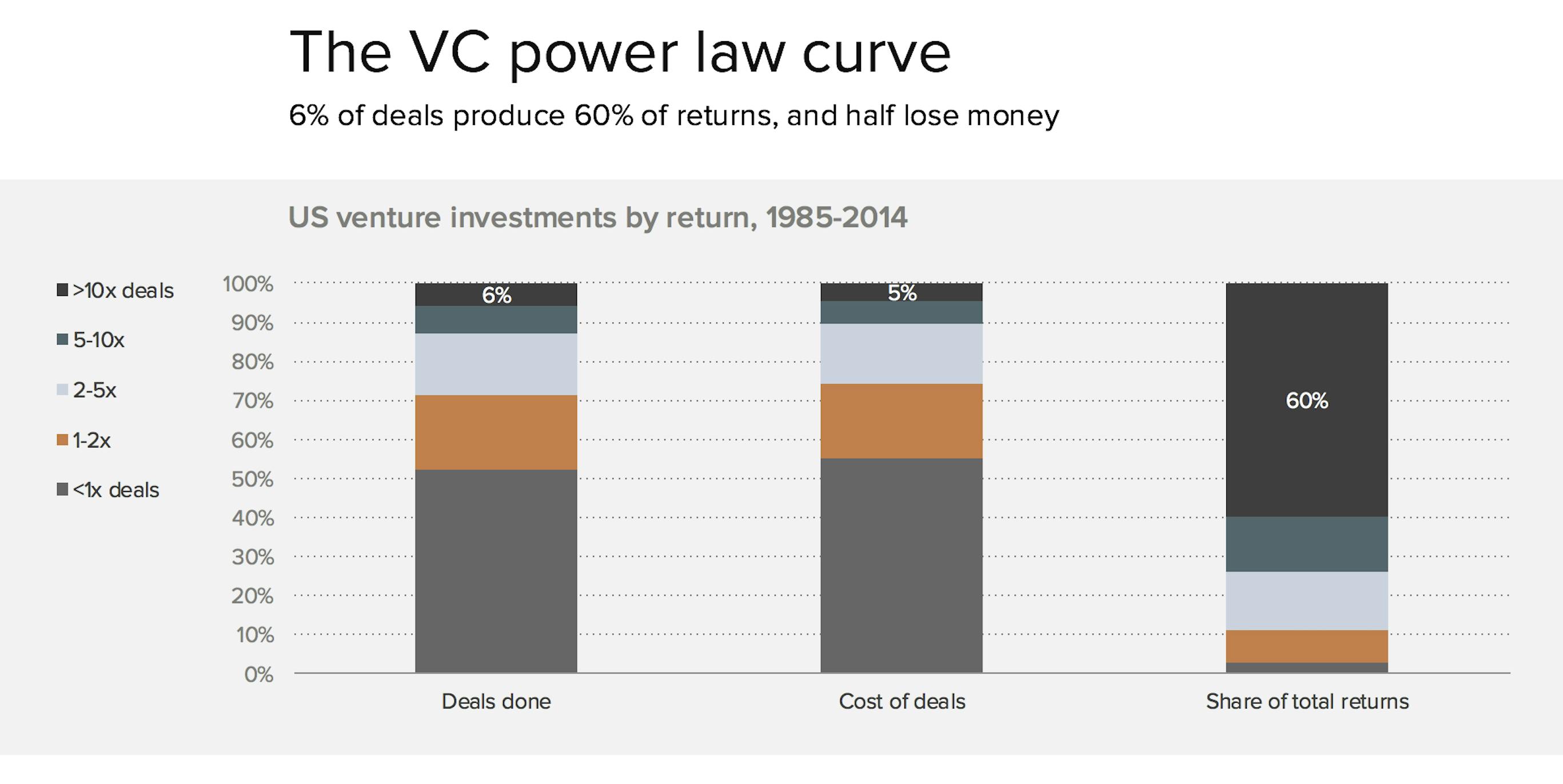

In 2008, Union Square Ventures founder Fred Wilson noted that “every really good venture fund…has had one or more investments that paid off so large that one deal single handedly returned the entire fund.” Wilson’s point was later echoed in 2016 by then-a16z partner Benedict Evans, who noted that in venture, “6% of deals produced at least a 10x return, and those returns made up 60% of total venture returns.”

Source: Andreessen Horowitz

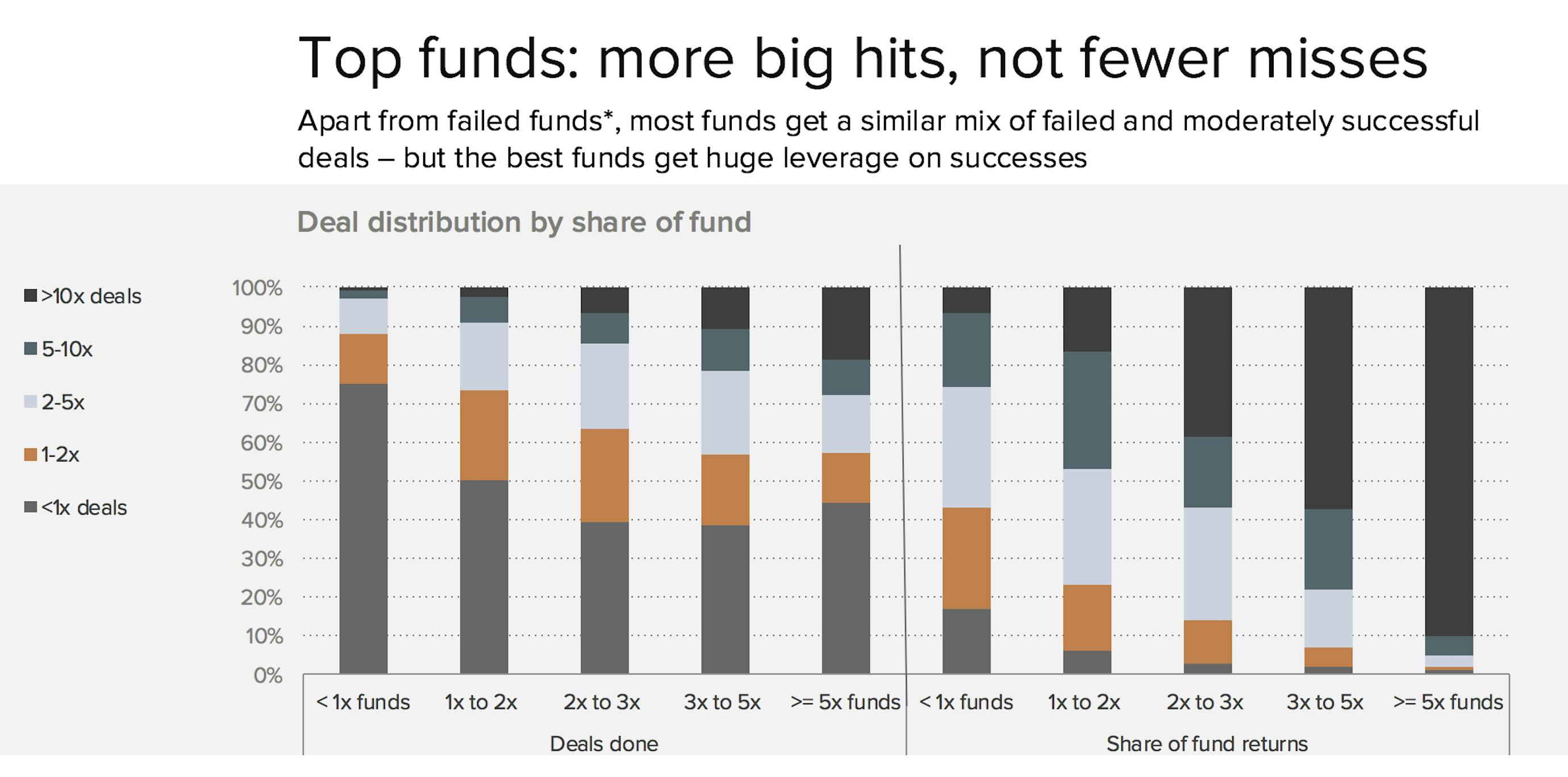

Evans also pointed out that deals that generated 10x or greater returns were responsible for 90% of top-performing funds’ returns.

Source: Andreessen Horowitz

Because venture is a hit-driven business, capital tends to be concentrated in the sectors that investors believe offer the best opportunities for outsized returns. This creates a “power law of hype” in venture capital. In 1999, for example, as the Dot Com bubble was roaring, 39% of all venture investments went to “internet” companies. In 2024, the sector with equivalent hype is AI. Ever since OpenAI released ChatGPT in November 2022, funding for AI companies has skyrocketed. For example:

75% of the startups in Y Combinator’s Summer 2024 cohort (156 out of 208) were working on AI-related products.

In Q2 2024, 49% of all venture capital went to artificial intelligence and machine learning startups, up from 29% in Q2 2022.

In 2020, the median pre-money valuation for early-stage AI, SaaS, and fintech companies was $25 million, $27 million, and $28 million, respectively. In 2024, those figures are $70 million, $46 million, and $50 million.

OpenAI, which was unprofitable and on pace to generate $4 billion in annual revenue, was able to raise new capital at a $157 billion valuation in October 2024 (implying a 39x revenue multiple).
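The implied multiple is simple division of the two figures above; note that it uses the rounded revenue run rate rather than audited financials:

$$
\text{Revenue multiple} = \frac{\text{Valuation}}{\text{Annual revenue}} = \frac{\$157\text{ billion}}{\$4\text{ billion}} \approx 39\times
$$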

As we wrote in a previous deep dive on AI-native startups, much attention in the early 2020s has focused on generative AI. The advent of LLMs has made it possible for AI to increasingly serve as the heart of a company’s product.

However, while these AI-native startups are receiving an outsized proportion of investor attention, they don’t tell the full story of what is happening with AI, because most companies are not AI companies, at least not at their core. For the vast majority of businesses, artificial intelligence is not central to what they do, but they will be impacted by developments in AI technology nevertheless. Having previously covered AI-native companies, this report explores the long tail of companies impacted by AI.

If you think back to the Dot Com bubble, some of the biggest winners of the internet boom ended up being non-internet companies that adapted to trends like ecommerce. Walmart, for example, which was founded in 1962, generated $73 billion in ecommerce revenue in 2023, up five-fold from 2017. Walmart and the other non-internet companies that benefited from the explosion in global internet access fall into a group of companies that we call “the long tail” of the internet.

Today, a new “long tail” has emerged: the long tail of AI, which encompasses non-AI companies across all industries, including fintech, SaaS, industrial, healthcare, ecommerce, hospitality, defense tech, media, fashion, logistics, and transportation, that will be impacted by artificial intelligence. In this deep dive, we explore this long tail of AI. From using pre-built AI tools to integrating artificial intelligence into internal workflows, companies across the economy are using, or attempting to use, AI. Just as the internet had far-reaching ramifications for non-internet companies, AI’s impact will not be limited to companies building AI models or products.

Defining the Long Tail

AI companies – those that have received the lion’s share of the “hype” and investor attention around AI – can be broken into two categories:

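Model providers like OpenAI and Anthropic, which build and sell access to the AI models themselves.

AI-native companies like conversational search engine Perplexity and marketing solutions platform Jasper, whose products and services are built around AI models.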

For companies in the first category, the model is the product. For companies in the second category, the product relies on an AI model to function. Tuhin Srivastava, CEO and co-founder of ML model deployment platform Baseten, said that one way to define AI-native companies is this: “take away the model, and the company doesn’t exist.”

However, most companies, from Bloomberg, to Walmart, to Canva, don’t fall into either of these two groups; they exist in the much broader category of everything else. It’s the intersection of “everything else” and artificial intelligence that we are referring to as “the long tail of AI.”

Source: Contrary Research

The emergence of this long tail invites plenty of questions, such as:

How will advancements in artificial intelligence impact companies whose business models long preceded the launch of ChatGPT?

How are employees across different industries, from analysts at consulting firms to software engineers at tech companies, using artificial intelligence today? How will they use artificial intelligence in the future?

How are companies positioning themselves in the AI marketplace? Are they building their own models from scratch, or using tools developed by outside parties? How should these companies be positioning themselves?

The answers to these questions are as diverse as the companies themselves. Some companies, such as expense management platform Ramp* and Australian SaaS giant Atlassian, have layered generative AI on top of their existing data and workflows.

Other companies, such as Klarna, are investing in AI to improve their own internal operational efficiencies. In February 2024, the Swedish fintech claimed that its AI chatbot does the work of 700 full-time customer service employees, and in September 2024, it announced plans to replace SaaS platforms Workday and Salesforce with internal AI tools.

Large and small businesses alike are using AI tools to improve employee productivity as well. In May 2024, PwC signed a deal to provide access to ChatGPT Enterprise’s advanced capabilities to more than 100K employees across the US and UK. Meanwhile, 98% of small businesses that participated in a 2024 US Chamber of Commerce survey reported using a tool enabled by AI, with Jordan Crenshaw, senior vice president of the US Chamber’s Technology Engagement Center, saying, “AI allows small businesses — who many times do not have the staff or resources of their competitors — to punch above their weight.”

Thanks to more than 20 years of hindsight, we can look back at the internet boom of the 90s and early 2000s and accurately identify winners, losers, and market-defining trends that emerged, such as cloud storage, ecommerce, and social media. AI, however, doesn’t have the same benefit of hindsight. While AI is widespread, with PwC noting that 54% of companies had implemented generative AI solutions according to its 2023 Emerging Technology Survey, the companies implementing AI solutions are still figuring out what these use cases might look like, much to the benefit of consulting firms. Accenture generated an annualized $3.6 billion in AI bookings in Q3 2024, and BCG projected that 20% of its 2024 revenue and 40% of its 2026 revenue would come from AI integration projects.

Because AI is evolving so quickly, companies are still finding their footing, and today’s data may very well be antiquated tomorrow. The purpose of this report is therefore neither to crown winners and losers nor to adjudicate any company’s AI strategy. Our goal is to provide a framework for categorizing and understanding various use cases that exist within the long tail of AI, so readers can more effectively consider what AI use cases in their own businesses might look like.

Through this deep dive, we hope to help readers who work in non-AI-native companies better understand the ways in which different companies are implementing AI solutions, providing them with tools to help determine how they could integrate AI in their own companies.

We have divided the long tail of AI into four “layers” that group AI use cases by descending costs and resource requirements:

Companies that have built their own internal AI models

Companies that have leveraged closed-source models, like those from OpenAI or Anthropic

Companies that have leveraged open-source models, such as Llama, Mistral, or those available on Hugging Face

Companies that have incorporated pre-built AI tools into their workflows

These layers are dynamic, not static, and some companies can be classified into two or more of them if they are using generative AI in more than one way. Given this ever-changing state of artificial intelligence across companies, we believe the simplest way to understand how companies are using AI is by studying specific use cases of how a variety of companies are incorporating AI into their businesses.

Layer 1: Build Your Own Model

The most resource-intensive AI strategy for companies in the long tail of AI is building an independent model from scratch. The companies best suited for this strategy (1) are well-capitalized and (2) have valuable, unique proprietary datasets.

Companies building custom models need to be resource-rich. Custom models are expensive to train (according to Sam Altman, GPT-4 cost more than $100 million to train), and they require highly skilled talent and large datasets. For companies planning to use generative AI for tasks that are common across many businesses, there are probably cheaper alternatives.

However, for well-capitalized companies with large, proprietary datasets, proprietary models (A) can generate more detailed, company-specific outputs than what would be available through generalized, publicly available models, (B) leave the company in full control of the model and its weights, and (C) create the potential for cost savings on inference compute compared to the largest closed-source models.

Bloomberg

Bloomberg is a New York City-based financial data and media company that generates over $12 billion in annual revenue, which made it the 33rd largest private company in the United States by revenue in 2023. The Bloomberg Terminal, its flagship financial markets information product, is responsible for approximately two-thirds of that revenue. Smaller components of Bloomberg’s business include a news agency and Bloomberg Industry Group, which provides software for legal, tax, and government use cases. Bloomberg has leveraged its datasets to build its own large language models and AI tools.

Out of more than 8K engineers at Bloomberg, over 350 are part of the AI engineering team. Bloomberg’s AI team has published numerous AI research papers every year since 2017, showing Bloomberg’s longstanding commitment to investing in AI long before the release of ChatGPT.

In March 2023, Bloomberg announced the launch of BloombergGPT, a finance-specific LLM. The 50-billion-parameter model was trained from scratch on a combination of a 345-billion-token public dataset and a 363-billion-token dataset drawn from 40 years of financial language documents that Bloomberg had collected. Estimates of the cost of the compute required to train BloombergGPT range from over $2.7 million to over $10 million.

Despite this level of investment, as of November 2024, Bloomberg has not explicitly stated whether or not any of its features are currently powered by BloombergGPT – which may indicate that the product is primarily meant for external use.

In its April 2023 “AI 50 list,” Sequoia mentioned Bloomberg as a key example of a company with “large and unique data stores” that would benefit from training its own models as a moat. However, a deeper look at the BloombergGPT research paper reveals that only a small fraction (0.70%) of the training data came from Bloomberg itself, with the rest coming from other sources such as the web, press, news, and filings.
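Setting the paper’s percentages next to the token counts cited above shows why the distinction matters: roughly half of the combined corpus is financial data, but only a sliver is Bloomberg’s own content (the ~5 billion figure is simply 0.70% of the 708-billion-token total, rounded):

$$
\frac{363}{345 + 363} = \frac{363}{708} \approx 51\%, \qquad 0.70\% \times 708\text{ billion} \approx 5\text{ billion tokens}
$$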

Additionally, despite Bloomberg’s model being trained specifically on financial data, a paper by Queen’s University and JPMorgan AI Research from October 2023 found that ChatGPT outperformed BloombergGPT on many financial tasks. This may have been a result of Bloomberg’s training data not being as proprietary as some have reported.

In January 2024, Bloomberg launched AI-generated summaries of company earnings transcripts within the Bloomberg Terminal after a beta period in Q4 2023. Bloomberg has not publicly shared which model powers this feature, though it’s likely an in-house model given the amount of resources the company expended on building BloombergGPT and the fact that Bloomberg Intelligence analysts helped train the LLM on nuances of financial language.

Bloomberg has also incorporated generative AI tools across Bloomberg Tax, Bloomberg Law, and Bloomberg Government, enhancing the summarization and search functions across these products. Like BloombergGPT, these appear to use custom LLMs trained on Bloomberg data, with Bloomberg Government noting that:

“Bloomberg Government’s cutting-edge AI technology is built on a foundation of comprehensive primary and secondary sources, award-winning unbiased news, and expert analysis… Our large language models are training on this specialized content, and every answer they provide is sourced from expert-vetted materials.”

Replit

Replit* is a browser-based integrated development environment (IDE) focused on collaboration, new programmers, and building MVPs. Replit offers a collection of AI-powered features to help coders, including code autocomplete, chat functionality, and automatic debugging. Replit’s AI features are powered by a combination of custom models, fine-tuned open-source models, and closed-source models from Anthropic and OpenAI.

The company appears to be pursuing a flexible strategy with AI that involves selecting the right “layer” of AI for a particular use case based on desired characteristics such as output quality, speed, and cost. Although Replit announced a partnership with Google Cloud in March 2023, the company’s current AI features are not powered by Google’s models.

Replit’s code completion models, released in April 2023 and October 2023, were originally developed in-house, and the company has since shared them on Hugging Face. For its automatic debugging feature, the company fine-tuned an open-source model and released it in April 2024. For other features, like code generation and chat, the company uses a “Basic” AI model for free users and provides access to top-performing closed-source models for paid users.

In September 2024, Replit also launched an autonomous pair-programmer built on a “proprietary” seven-billion-parameter model. Under the hood, the agent may actually be calling more powerful closed-source models, given that the documentation includes a screenshot of the agent requesting the user’s OpenAI API key. Some early users expressed frustration with the product’s initial capabilities, but they also showed optimism about where it is headed.

Canva

Canva is an Australian web-based design platform that was valued at $49 billion in October 2024. In October 2023, Canva introduced Magic Studio, its generative AI design studio. Canva noted that Magic Studio is built on “proprietary models,” and the company has an interesting training dataset: content created by users on its platform. While Magic Studio is built on proprietary models, the design platform also offers tools that leverage existing closed-source models.

While Canva has stated that it won’t use its creators’ data without their permission, users who opt in are paid by Canva’s Creator Compensation Program to have their content used to train Canva’s proprietary AI models. Canva’s Magic Studio can turn text prompts into image, video, and presentation designs, and users can also make adjustments within static images, such as resizing or moving parts within an image.

In July 2024, Canva announced its acquisition of Leonardo.Ai, an image generation platform that had launched its own foundation AI model one month prior. Canva acquired Leonardo with the intention of integrating Leonardo’s Phoenix foundational model into its existing Magic Studio product, though Leonardo will continue to operate independently as well.

Walmart

Walmart has been building customer-facing conversational AI tools since Google’s BERT models were released in 2018. In April 2023, the company was reportedly using closed-source models, including GPT-4, for a wide assortment of use cases, but by January 2024, the company had begun using fine-tuned open-source models alongside GPT-4.

Walmart continued moving away from closed-source models, and by June 2024, the retailer had transitioned to custom, in-house models for all of its generative AI features. In October 2024, Walmart announced a collection of retail-specific LLMs it refers to as Wallaby. The company says that:

“Wallaby is trained on decades of Walmart data and understands how Walmart employees and customers talk. It is also trained to respond in a more natural tone to better align with Walmart’s core values of customer service.”

Walmart’s goal with Wallaby is to “create a unique homepage for each shopper making the online shopping experience as personalized as stepping into a store designed exclusively for each customer.” What this might look like is customers typing queries like “can you help me plan a football watch party?” or “what supplies do I need for a newborn?” and being presented with a list of needed items, instead of having to search for all of the pertinent items one by one.

Internally, Walmart is leveraging AI to build customer support chatbots and assistants to help employees find information like the availability and location of products in stores, and the company has also used AI to revise and clean up its extensive product catalog. LLMs touched over 850 million pieces of data to complete this catalog work, which Walmart CEO Doug McMillon said would have taken “nearly 100 times the current headcount to complete in the same amount of time” without AI.

Walmart is also incorporating generative AI into in-store augmented-reality shopping experiences and online virtual shopping experiences on platforms like Roblox. Despite its investments in custom models, Walmart is still open to using third-party models when those are the best options for a particular use case.

Layer 2: Use an Existing Closed-Source Model

Beyond building their own models from scratch, companies can also access closed-source AI models, like those from OpenAI and Anthropic, via API and build their own solutions on top of them.

Large closed-source models like OpenAI’s GPT and Anthropic’s Claude are capable of generating accurate outputs across a variety of fields, making them useful for general tasks such as transcription, customer service, data extraction, and other tasks that don’t require extreme, niche domain expertise. They generally produce some of the highest-quality outputs available from any AI model.

Closed-source models are also the easiest on-ramps for AI adoption from an engineering perspective, due to their typically high-quality outputs and relative ease of access via API. To compete with smaller open-source models like Llama 3 8B that offer cheaper, faster inference, closed-source model providers are also releasing smaller models such as GPT-4o mini. Whereas GPT-4 reportedly has more than 1 trillion parameters, GPT-4o mini is reportedly similar in size to competing smaller models like Llama 3 8B.

Zapier

Founded in 2011, Zapier is a San Francisco-based workflow automation startup that was valued at $5 billion as of August 2023. The company provides integrations between over 7K apps, with G-Suite products, Slack, Notion, and Mailchimp among its most popular integrations. Users can also build no-code automated workflows between multiple apps. Zapier’s mission is to “make automation work for everyone,” and the company’s incorporation of AI supports that goal by adding LLMs to users’ automation toolkits.

Zapier workflows can include steps that call AI models, and Zapier itself uses AI-enabled workflows for internal processes. In a September 2023 interview, for instance, Zapier’s Head of AI talked about how Zapier uses AI internally. He said that AI excels at summarizing and extracting structured information from large volumes of unstructured data. To mitigate the risk of hallucinations, he suggested focusing on use cases that naturally require a human-in-the-loop.

Some examples of Zapier’s internal AI-enabled workflows, which are built on OpenAI’s models, include:

Automated meeting transcriptions and summaries: When a file containing a recording is uploaded to a particular Dropbox folder, Zapier transcribes the recording using OpenAI, uploads the transcript to Dropbox, summarizes the transcript using OpenAI, and sends a message in Slack. This workflow, which is fully automated, helps Zapier’s customer success team members to stay up-to-date on customer calls.

Article summaries: Members of Zapier’s content team use a Zapier workflow to summarize web articles as part of their research processes. After the “Zap” is triggered, Zapier’s Chrome extension parses the given webpage for text and sends a prompt to OpenAI to summarize the data.

Natural language workflow creation: Zapier’s Copilot tool also helps users construct workflows based on natural language prompts. For example, a user could type: “when I get a new lead in Webflow, create a contact in Hubspot and send a Slack message to the sales team,” and Copilot would populate a workflow to execute that task. Given that Zapier’s other AI features are powered by OpenAI, it’s likely that the Copilot is, too.
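The meeting-summary workflow described above is essentially a trigger followed by a fixed chain of steps. The sketch below models that chain in plain Python under stated assumptions: `MeetingPipeline`, `transcribe`, and `summarize` are hypothetical names, and the Dropbox folder and Slack channel are replaced by an in-memory dict and list. It is not Zapier’s implementation; in a real Zap, each step would be a configured action calling OpenAI, Dropbox, and Slack.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical function types standing in for the real services.
Transcriber = Callable[[bytes], str]  # e.g. a speech-to-text model
Summarizer = Callable[[str], str]     # e.g. an LLM summarization prompt


@dataclass
class MeetingPipeline:
    transcribe: Transcriber
    summarize: Summarizer
    # In-memory stand-ins for a Dropbox folder and a Slack channel.
    storage: dict[str, str] = field(default_factory=dict)
    messages: list[str] = field(default_factory=list)

    def on_file_uploaded(self, filename: str, audio: bytes) -> str:
        """Run every step once when a new recording lands in the watched folder."""
        transcript = self.transcribe(audio)             # 1. transcribe the recording
        self.storage[f"{filename}.txt"] = transcript    # 2. save the transcript
        summary = self.summarize(transcript)            # 3. summarize the transcript
        self.messages.append(f"Summary of {filename}: {summary}")  # 4. notify the team
        return summary


if __name__ == "__main__":
    pipeline = MeetingPipeline(
        transcribe=lambda audio: audio.decode("utf-8"),     # fake transcriber
        summarize=lambda text: text.split(".")[0] + ".",    # fake summarizer: first sentence
    )
    pipeline.on_file_uploaded("call.m4a", b"Customer wants SSO. Renewal is in May.")
    print(pipeline.messages[0])  # Summary of call.m4a: Customer wants SSO.
```

Passing the model calls in as plain functions keeps the orchestration testable without network access; swapping the fakes for real API clients would not change the step order.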

Aside from its workflows product, Zapier also lets users create simple AI chatbots that are lightweight wrappers around an OpenAI model. Users can also schedule syncing between their chatbots and a knowledge source to maintain up-to-date information. These chatbots can be embedded in web pages, and they are capable of running Zapier workflows and collecting user information. Zapier itself also uses internal chatbots to generate press releases, write blog posts, provide feedback to colleagues, and translate company jargon to simple language.

Klarna

Klarna is a $6.7 billion Swedish fintech company that provides buy now, pay later services. In September 2024, the company made waves when its co-founder and CEO, Sebastian Siemiatkowski, explained that it had removed Salesforce and Workday from its tech stack to replace them with AI after the company had experienced success with its OpenAI-powered customer service assistant in February 2024.

On the surface, there’s nothing unique about Klarna that would have predicted its heavy internal investment in AI. It could have been any company that decided to roll the dice on fast-paced LLM adoption. The results of Klarna’s experiment will likely serve as a learning opportunity for other businesses to understand the upside of operational efficiency gains from AI.

In early 2024, Klarna launched an AI assistant, powered by OpenAI, that could tackle various customer service issues like “refunds, returns, payment-related issues, cancellations, disputes, and invoice inaccuracies.” In its first month after launch, this AI assistant handled 2.3 million conversations, representing two-thirds of Klarna’s customer service chats, or the workload of 700 full-time workers. Klarna also claims that its AI assistant reduced repeat inquiries by 25% and time-to-resolution from 11 minutes to 2 minutes. Klarna believes this new product will contribute $40 million to its bottom line over the course of 2024.
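Taking Klarna’s “two-thirds” share and resolution times at face value, the figures above also imply a rough total chat volume and the size of the speed-up:

$$
\text{Total chats} \approx \frac{2.3\text{ million}}{2/3} \approx 3.45\text{ million}, \qquad \frac{11 - 2}{11} \approx 82\%\ \text{reduction in time-to-resolution}
$$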

Tech analyst Gergely Orosz tested Klarna’s AI assistant in February 2024 and described it as “well-built,” but he also pointed out that LLMs may not have been necessary to automate the low level of support that this tool handles. In September 2024, Klarna expanded the AI assistant’s functionality by using it to power a chat-based shopping experience that helps consumers search for and research products they’re considering purchasing.

Internally, Klarna launched an AI assistant known as Kiki, also powered by OpenAI, in June 2023. As of May 2024, it was being used daily by 85% of employees and answering over 2K queries per day.

Ramp

Founded in 2019, Ramp is a $7.7 billion B2B fintech startup offering a wide range of financial software products, including tools for expense management, travel management, bill pay, and procurement. These products store financial information, such as corporate card transactions, that Ramp has layered generative AI on top of. The company has also integrated generative AI throughout many areas of its internal operations.

Ramp isn’t just experimenting with AI; it has heavily invested in it. The company has a dedicated applied AI team led by the former CEO of Cohere.io, an AI-driven customer support platform that Ramp acquired in June 2023. Additionally, Ramp expanded its procurement capabilities by acquiring the AI-powered procurement platform Venue in January 2024.

In an August 2024 interview, Ramp’s CTO Karim Atiyeh noted that the company had spent “some time fine-tuning in many places” and “quickly found out that our time was worth way more and that we should just wait for other generations of models.” He added that the GPT-4o mini model performs well for around 90% of Ramp’s needs.

One notable example of Ramp’s AI implementation is its use of GPT-4 to generate training data for fine-tuning open-source models, like Meta’s Llama 2, into custom models that are more cost-effective and accurate. As of November 2023, Ramp reported using GPT-4 to process thousands of messy receipt samples and using the results to fine-tune an open-source model, producing a model that was cheaper and more effective than GPT-4 for that task.
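Ramp has not published its pipeline, but the general technique it describes (using a large “teacher” model to label data that then trains a smaller open-source model) can be sketched as follows. Everything here is an assumption for illustration: `build_distillation_set`, the prompt, and the `merchant`/`date`/`total` fields are hypothetical, and `teacher` stands in for a GPT-4 call. The output uses a chat-style JSONL layout that many fine-tuning tools accept, though the exact schema depends on the training framework.

```python
from __future__ import annotations

import json
from typing import Callable, Iterable

# Hypothetical instruction shared by the teacher and the student model.
SYSTEM_PROMPT = "Extract merchant, date, and total from this receipt as JSON."
REQUIRED_KEYS = ("merchant", "date", "total")


def build_distillation_set(
    receipts: Iterable[str],
    teacher: Callable[[str], str],
) -> list[str]:
    """Label raw receipts with a large 'teacher' model and keep only
    well-formed answers as chat-style JSONL training records."""
    records: list[str] = []
    for text in receipts:
        raw = teacher(text)  # in a real pipeline, a call to the large model
        try:
            label = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip answers that are not valid JSON
        if not isinstance(label, dict) or any(k not in label for k in REQUIRED_KEYS):
            continue  # skip answers missing required fields
        record = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": text},
                {"role": "assistant", "content": json.dumps(label, sort_keys=True)},
            ]
        }
        records.append(json.dumps(record))  # one JSON object per output line
    return records


if __name__ == "__main__":
    def fake_teacher(receipt: str) -> str:
        # Hypothetical teacher that fails on unreadable input.
        if "illegible" in receipt:
            return "Sorry, I can't read this."
        return json.dumps({"merchant": "Shell", "date": "2023-11-01", "total": 42.5})

    lines = build_distillation_set(["SHELL #123 11/01 $42.50", "illegible scan"], fake_teacher)
    print("\n".join(lines))  # a single JSONL record; the illegible receipt is dropped
```

Filtering malformed teacher answers before training matters because the smaller model will learn whatever the teacher emits, including its formatting mistakes.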

As of July 2023, Ramp was using GPT-4 and Claude for contract document analysis. These models extract and structure contract data, automatically notifying customers about important dates like termination deadlines. Additionally, Ramp has streamlined financial transaction categorization and receipt memo captions, using GPT-4 to classify transactions and suggest memos based on context.

Ramp is also developing a GPT-4o-powered UI navigation feature that guides users through tasks on its website, like changing corporate credit card branding, in response to text prompts. Still in internal beta as of August 2024, the feature was 60% to 90% effective, which Ramp’s CEO considered too low for public release.

Although the UI navigation is still in beta, Ramp already offers a GPT-powered natural language copilot to answer queries and perform basic actions. For example, users might ask, “Show me all gas purchases above $100” or “How can I reduce costs?”

Some of Ramp’s internal AI tools are shared across teams, too. For instance, Ramp uses a language model to generate a weekly internal podcast summarizing customer calls in three to five minutes, and the company built an AI agent that team members can query to access transcripts of customer calls. This agent, which is integrated with Slack, can access Ramp’s vast repository of call transcripts, supporting employee inquiries.

Atlassian

Atlassian is a $50 billion enterprise SaaS company headquartered in Australia that offers software products for team collaboration such as Jira and Trello (project management), Confluence (company wiki), Loom (video recording and sharing), Jira Service Management (customer service), and Bitbucket (code hosting). The company uses a range of open-source, self-hosted models and closed-source models.

Atlassian first released its generative AI features, known as Atlassian Intelligence, in April 2023. Atlassian Intelligence was built on a combination of OpenAI’s models and Atlassian’s own internal models, and after a beta period, it was made available to the general public in December 2023. Atlassian’s AI features include natural language search in Jira and Confluence, the ability to take basic actions like opening pull requests, and summarization. Notably, Atlassian’s Jira search function is powered by a fine-tuned OpenAI model, and it works by translating the user’s query into Jira Query Language (JQL) behind the scenes.
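Atlassian has not published how its model performs this translation. As a rough illustration of the input and output only, here is a toy keyword-based translator standing in for the fine-tuned model; the phrase-to-clause rules and the `to_jql` name are hypothetical, though the emitted clauses use standard JQL fields and functions such as `currentUser()` and `startOfWeek()`.

```python
# Hypothetical phrase -> JQL clause rules standing in for a fine-tuned model.
# The clauses themselves use standard JQL fields and functions.
RULES = [
    ("assigned to me", "assignee = currentUser()"),
    ("unassigned", "assignee is EMPTY"),
    ("bug", "issuetype = Bug"),
    ("open", "statusCategory != Done"),
    ("high priority", "priority = High"),
    ("this week", "created >= startOfWeek()"),
]


def to_jql(query: str) -> str:
    """Translate a natural-language request into a JQL string (toy version)."""
    q = query.lower()
    # Collect the clause for every phrase that appears in the request.
    clauses = [clause for phrase, clause in RULES if phrase in q]
    where = " AND ".join(clauses)
    # Show the most recently updated issues first.
    return f"{where} ORDER BY updated DESC" if where else "ORDER BY updated DESC"


if __name__ == "__main__":
    print(to_jql("Open bugs assigned to me"))
    # assignee = currentUser() AND issuetype = Bug AND statusCategory != Done ORDER BY updated DESC
```

A fine-tuned model replaces the brittle keyword table with learned mappings, but the output contract stays the same: a JQL string that Jira’s existing search can execute, which is presumably why the approach layers neatly on top of the current product.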

In May 2024, Atlassian unveiled its new AI assistant, Rovo, before fully releasing it in October 2024. Built on top of Atlassian Intelligence, two of Rovo’s key improvements include deep search capabilities across Atlassian and third-party apps and no-code “Agents” that can be used “to generate and review marketing content ... collate feedback from various sources, or streamline processes such as clearing up Jira backlogs and organizing Confluence pages.” All of Atlassian’s AI features that provide model information appear to be built on OpenAI’s models.

Atlassian also acquired video recording tool Loom for nearly $1 billion in October 2023. Loom facilitates video-based collaboration by letting users easily capture and share recordings of their screens. At the time of the acquisition, Loom was already using AI for transcript generation, summarization, and editing, and as of November 2024, it feeds video transcripts to OpenAI to generate video summaries and titles. Loom does not specify which model it uses for transcription.

Finally, in August 2024, Atlassian acquired Rewatch, a product that lets users record and summarize video meetings using AI, to augment Loom. After the Rewatch acquisition, Loom’s head of product expressed a vision of automatically storing relevant information from meetings in the customer’s knowledge base.

Canva

While Canva’s Magic Studio, as previously discussed, was built on proprietary models, Canva also uses closed-source models for specific tools. For example, users have access to Canva’s OpenAI-powered writing editor, which can match the tone of uploaded writing samples when generating copy.

Canva also has a third-party app marketplace that includes over 100 AI-powered apps as of November 2024. General-purpose apps include OpenAI’s DALL-E app and Google’s Imagen app, but the marketplace also offers specialized tools such as T-shirt Designer for generating T-shirt designs, MelodyMuse for composing music from a text prompt, and OpenRep for captioning images. The underlying models vary from app to app, and many apps don’t disclose theirs, so the marketplace likely offers a mix of closed-source, open-source, and custom models.

Layer 3: Build on an Open-Source Model

Beyond building their own models from scratch or leveraging a closed-source model such as OpenAI’s GPT-4o, some companies have chosen to build their own tools on top of open-source models like Mistral, Meta’s Llama, models from Hugging Face’s library, and others.

Open-source solutions such as these are popular: Databricks noted in a 2024 report that 76% of companies that use LLMs are choosing open-source models. Why? Open-source models offer greater customization and transparency, stronger data privacy, and the potential for lower costs.

Building a new model from scratch is time-consuming, expensive, and often unnecessary unless a company’s model needs to be based almost entirely on proprietary data. Meanwhile, the costs of using closed-source models like OpenAI’s GPT-4o can grow quickly. Llama, Mistral, and other open-source models have billions of parameters and were trained on massive datasets spanning a wide range of topics, from foreign languages to coding, and they can then be refined to meet a specific company’s needs.

Put simply, for most companies, it’s more cost-effective to tweak an existing open-source model that was almost certainly trained on relevant data than to build a new model from scratch. Open-source models are also free from the licensing and API fees associated with closed-source models like GPT, so companies with the resources to self-host them can do so cheaply.

Enterprise customers with sensitive data also weigh privacy controls that reduce the risk of copyright violations, licensing disputes, and data leaks, and open-source models offer more transparency and greater control over these risks.

VMware

VMware is a virtualization software provider that Broadcom acquired for $69 billion in 2023. VMware has two flagship products, Cloud Foundation and vSphere Foundation. The former offers private cloud solutions (meaning computing resources are managed internally rather than by a public cloud provider like Amazon Web Services or Microsoft Azure), and the latter improves the operational efficiency of companies’ IT infrastructure.

One commonality across both products is an emphasis on security: the company says its private cloud solutions offer greater security than their public cloud counterparts, and its vSphere Foundation prioritizes “protecting data confidentiality, maintaining data integrity, and ensuring data availability.” In line with this security-first ethos, by November 2023 VMware had identified an opportunity to create a more secure generative AI coding assistant built on open-source models.

VMware knew that coding assistants were among the most widely adopted generative AI tools, noting that 85% of developers felt more confident in their code quality when using GitHub’s Copilot. However, Copilot, which is powered by AI models developed by Microsoft and OpenAI, processes users’ personal data and retains user engagement, feedback, prompt, and suggestion data for all customers, including those on Business and Enterprise plans.

VMware identified four key risk factors preventing enterprise customers from using third-party closed-source coding assistants:

Privacy: The primary apprehension enterprises have about using closed-source models centers around the protection of proprietary code and data. Enterprises are wary of exposing their intellectual property to public cloud services, necessitating a more secure approach.

Copyright Violations: There is uncertainty regarding the code generated by these assistants and whether it falls under copyright protection. It is conceivable that code may be extracted from private proprietary websites (such as Stack Overflow, GitHub, etc.), raising concerns about potential copyright violations.

Legal & Licensing Agreements: Enterprises often have intricate legal and licensing agreements with partners and third parties. These agreements can dictate the specific usage of software (for example, device drivers) only in certain areas, where training and coding assistants are not included.

Cost Considerations: The expense of maintaining 24/7 cloud services to support a large number of engineers in an enterprise becomes a substantial financial burden, making on-premises solutions more appealing.

VMware’s solution was to partner with Hugging Face to offer an on-premises solution called SafeCoder, which was built on the open-source code LLM StarCoder. StarCoder is a 15.5-billion-parameter LLM trained on more than 80 programming languages. Its training data was curated from open-source projects to exclude data that could raise commercial concerns, minimizing the risk of copyright violations.

Customers can also fine-tune StarCoder on their own proprietary code so that it acquires domain-specific knowledge. VMware, for example, fine-tuned StarCoder on source code from its own projects to help with internal work.

Mathpresso

Mathpresso is a South Korean education technology company that raised $8 million from Korean telecom giant KT in 2023, bringing its total funding to about $130 million. The edtech company is best known for its AI-powered education app, QANDA, which offers solutions to math problems that students upload as screenshots. QANDA handles around 130 million searches and 8 million users each month.

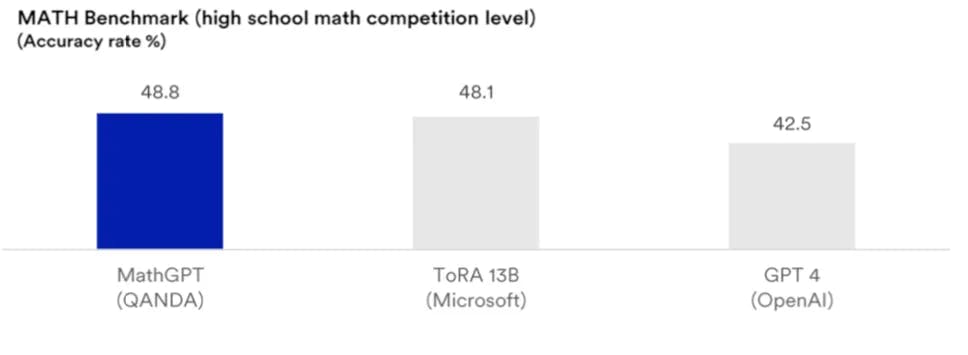

In November 2023, Mathpresso set out to launch a math-focused LLM that could outperform existing proprietary models, and it worked with its investor KT, as well as Korean AI startup Upstage, to build what would become its MathGPT tool. Mathpresso’s primary dataset was the collection of math solutions it had accumulated through QANDA, and the company built MathGPT by fine-tuning Meta’s open-source Llama 2 model on this proprietary dataset.

Given the size and depth of its proprietary dataset of math solutions, building on an open-source model made sense for Mathpresso from a customization standpoint, with co-founder Jake Yongjae Lee saying that “commercial LLMs like ChatGPT lack the customization needed for the complex education landscape.” Lee also noted that student learning is shaped by hyperlocal factors like curriculum, school district, exam trends, and teaching style, and Llama’s open-source models provided the flexibility to build tools that would be accurate across different learning environments.

In January 2024, MathGPT outperformed Microsoft’s ToRA, the previous record holder, on math benchmarks such as MATH (high school math) and GSM8K (grade school math), achieving first place among models under 13 billion parameters.

Source: Mathpresso

As of November 2024, Mathpresso is planning to continue enhancing the accuracy and performance of MathGPT, eventually integrating it into the company’s current solutions like its QANDA app.

Brave

Brave is a privacy-first web browser that blocks trackers, cookies, fingerprinting, and third-party ads on all websites, and it offers a firewall and VPN subscription for paying customers. In August 2023, Brave released its browser-native AI assistant, Leo, which allows users to summarize web pages, generate new content, translate, analyze text, and more, without leaving the page.

Source: Brave

In keeping with its privacy-first policy, Brave built its browser AI assistant on open-source models. Brave initially built Leo on Meta’s open-source Llama 2 model before making Mistral’s open-source Mixtral 8x7B the default LLM for Leo users in January 2024. When users choose Brave-hosted models (Llama 3 and Mixtral), their IP addresses are obscured through reverse proxies, conversations aren’t stored, and conversation data is discarded as soon as a chat ends. In other words, Brave retains no user conversation data.

Brave has also supported Anthropic’s Claude models since 2023, letting customers switch models if they want to leverage Claude’s advanced reasoning and coding capabilities, but the company has made its default models self-hosted and open-source to emphasize privacy and data security. For customers who opt to use Claude instead of Brave-hosted models, queries still aren’t used to train Anthropic’s models, but Anthropic retains those conversations for 30 days.

Replit

While Replit’s* custom code completion models were discussed earlier, the company also fine-tuned an open-source 7-billion-parameter model from Hugging Face in April 2024 to launch its Code Repair AI agent, which automatically fixes bugs in users’ code. Paid customers can switch between Replit’s fine-tuned model and closed-source models, which shows that companies can combine open-source, closed-source, and custom models to address different customer needs.

Layer 4: Use Pre-Existing AI Tools

This brings us to our final layer: businesses that purchase and use fully developed generative AI tools built by other companies. While the previous examples involved companies building their own AI tools and, in some cases, entire AI models, this final layer involves little internal development. Companies simply identify and use tools created by third parties.

The obvious benefit of external tools is cost: it’s cheaper to pay for a specific tool than to invest in training or fine-tuning models. However, breaking the decision down further, there are three specific reasons why companies choose external tools:

First, a company’s workers may need a general-knowledge research tool or brainstorming partner. As shown in a September 2024 study by BCG, access to generative AI tools makes workers more productive, especially in situations where they might otherwise lack the technical expertise to get the job done. Except where company- or domain-specific knowledge is needed, tools like ChatGPT are more than sufficient for many knowledge workers.

Second, a company may benefit from a generalized business tool, such as chat-assisted customer service, whose return on investment wouldn’t justify building an internal model. Not every company has the resources or willingness to build its own chatbots and proprietary models from scratch like Walmart did with Wallaby, but third-party tools like Zendesk’s Answer Bot can still provide immediate benefits by handling straightforward service requests.

Third, a company may have a niche need that a third party has already addressed with an industry-specific tool, which the company can buy for less than it would cost to build. As we’ll discuss below with Kira, for example, legal contracts at different law firms use similar terms and language, so Kira can be trained on legal documentation to provide highly accurate results on loan terms and M&A deals for many different law firms.

Boston Consulting Group

Boston Consulting Group (BCG) is one of the world’s largest consulting firms, with 32K employees and $12.3 billion in revenue in 2023. Advising clients on AI integration is quickly becoming one of BCG’s most lucrative verticals, with CEO Christoph Schweizer noting that the company expects AI integration projects to generate 20% of its 2024 revenue. BCG has also been investing heavily in training its own employees to use GenAI. According to a press release discussing the company’s 2023 performance, “BCG invested heavily to train the firm’s consultants to harness AI / GenAI internally, including on a range of proprietary tools for knowledge management and content creation.” One tool that BCG found especially useful was OpenAI’s ChatGPT.

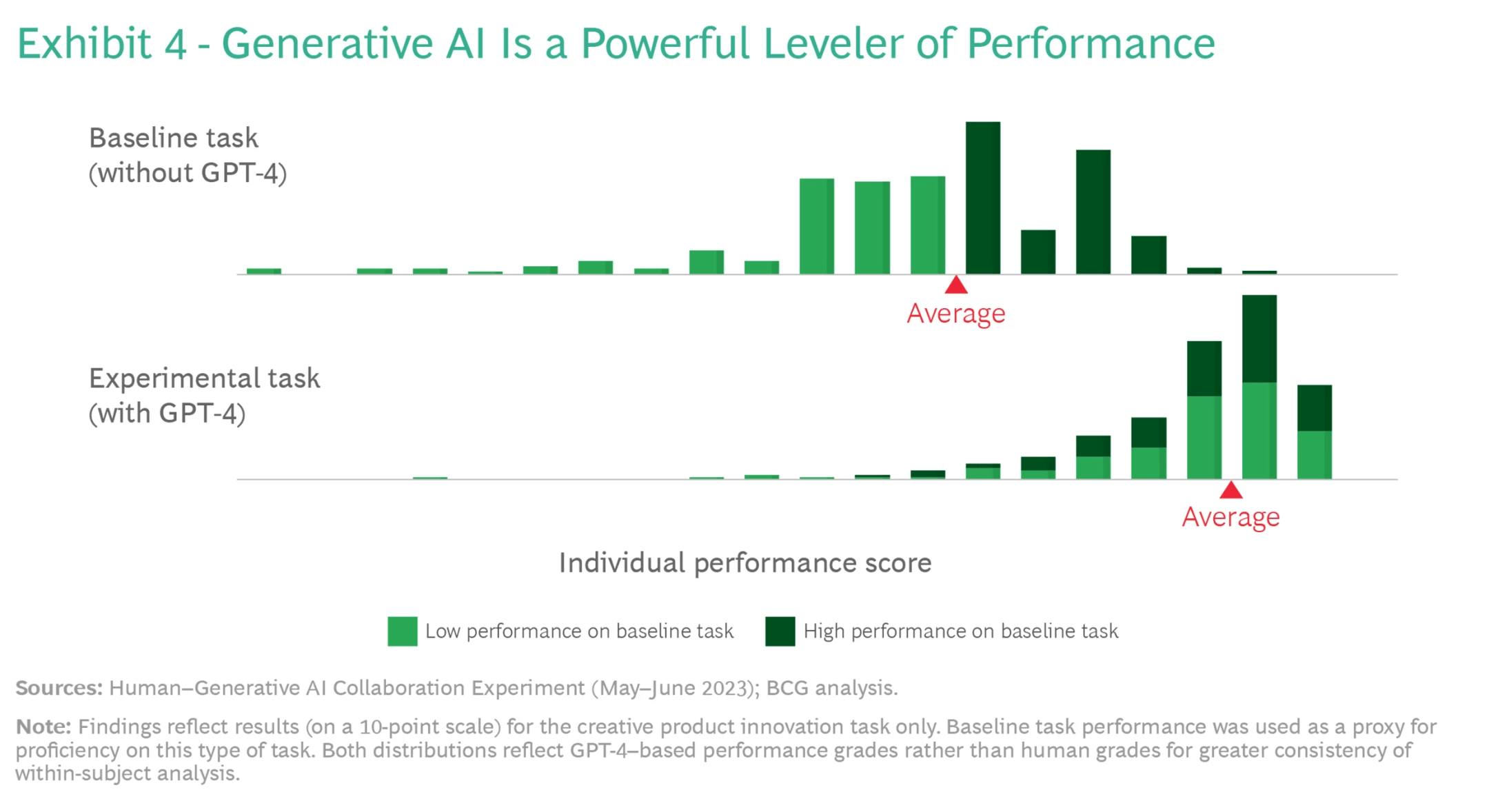

In September 2023, BCG published the results of an internal experiment that studied 758 BCG consultants around the world to test the impact of using ChatGPT on different “creative product innovation” and “business problem solving” tasks. Participants used a platform built on OpenAI’s GPT-4 that offered capabilities similar to ChatGPT’s. The results were striking: 90% of participants improved their performance on creative tasks, with an average performance boost of 40%. On business problem-solving, however, consultants using the tool performed 23% worse than the control group; according to BCG, this is likely because there’s more room for error when LLMs are asked to weigh nuanced qualitative and quantitative data to answer complex questions.

Source: Boston Consulting Group

The full study is available from BCG, but for context, the following are a few examples of the creative problems the consultants had to solve:

You are working for a footwear company in the unit developing new products. Generate ideas for a new shoe aimed at a specific market or sport that is underserved. Be creative and give at least ten ideas.

Come up with a list of steps needed to launch the product. Be concise but comprehensive.

Use your best knowledge to segment the footwear market by users. Develop marketing slogans for each segment you are targeting.

Suggest three ways of testing whether your marketing slogan works well with the customers you have identified.

Write marketing copy for a press release of the product.

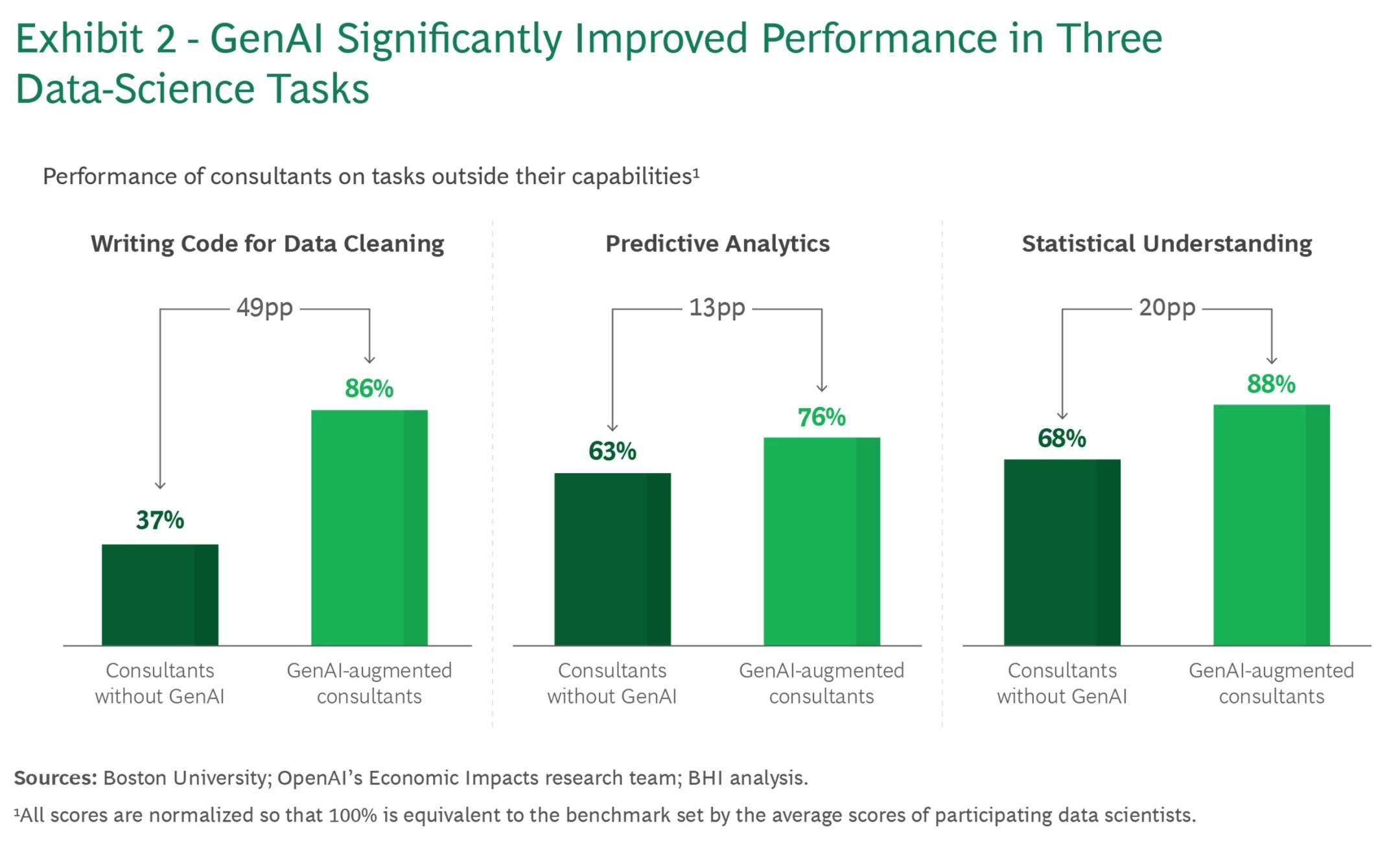

One year later, BCG conducted another experiment on the impact of using generative AI for work. While the first experiment studied tasks that workers could complete on their own, this second experiment evaluated the impact of AI on tasks that employees otherwise lacked the skills to complete.

In this second study, 480 BCG consultants were randomly assigned to either a GenAI-augmented group with access to ChatGPT or a control group with access only to traditional resources like Stack Overflow. The consultants had three tasks:

Writing Python code to merge and clean two data sets.

Building a predictive model for sports investing using analytics best practices (e.g. machine learning).

Validating and correcting statistical analysis outputs generated by ChatGPT and applying statistical metrics to determine if reported findings were meaningful.

Results from this study showed that access to ChatGPT yielded large performance gains. Performance was measured against a benchmark set by the average scores of a panel of data scientists who regularly coded and worked with analytics. On the coding exercise, consultants who didn’t know how to code reached 86% of that benchmark with ChatGPT, compared with 37% without it. Smaller, but directionally similar, gains appeared in the predictive analytics and statistical understanding exercises.

Source: Boston Consulting Group

BCG’s global people chair Alicia Pittman noted that in October 2023, after the results of the first study, the firm rolled out ChatGPT Enterprise to all of its staff, demonstrating how valuable it found the tool for its own workers.

Dollar Shave Club

Dollar Shave Club is a direct-to-consumer shaving goods supplier that was acquired by Unilever for $1 billion in 2016 before being sold to private equity firm Nexus Capital Management in 2023.

Instead of building its own internal chatbot from scratch on proprietary data, Dollar Shave Club opted to use Answer Bot, a tool developed by customer support SaaS platform Zendesk that replies to customer questions with content from a company’s knowledge base.

Answer Bot was trained on 12 million customer interactions, allowing it to reply to uncomplicated customer support questions in a matter of seconds. While Answer Bot is a third-party tool, it was designed to answer client-specific support questions from customers by leveraging support documents and FAQs within clients’ knowledge bases.

Trent Hoerman, Dollar Shave Club’s director of engagement operations, noted that Answer Bot was most effective at handling tickets for tasks that customers could complete themselves, such as pausing or canceling their accounts, and that it allowed the company to expand its support hours without growing headcount.

While Answer Bot could not handle escalated tickets such as fraud or shaving injuries, automating straightforward tasks freed up customer service representatives to focus on more complex complaints. As of March 2024, Answer Bot was resolving 4.5K Dollar Shave Club tickets per month, deflecting 10% of the company’s total ticket volume.

Law Firms

The legal field is notorious for long, dense contracts filled with complex jargon and clauses. Given the consistency of vocabulary, terms, and clauses across legal contracts, there is an opportunity for generative AI tools trained on legal documents to expedite the contract review process by helping identify important data points and suspicious language.

However, most law firms lack the engineering resources needed to develop these tools in-house. For example, while Bloomberg committed 350 of its 8K engineers to building its AI model, Skadden, the sixth-largest law firm in the US by revenue, had only 3.5K total employees as of November 2023.

Given the need for quicker contract reviews, law firms such as Skadden, Hogan Lovells, Osler, Chapman and Cutler, and Paul, Weiss have turned to the AI review tool Kira to streamline contract review, reducing their contract review times by up to 60%.

Kira patented its own machine learning contract analysis system, developed by a team of engineers, lawyers, and accountants, and its Built-In-Intelligence tool can quickly scan documents to identify more than 1K common clauses, provisions, and data points to answer lawyers’ questions about various legal documents.

Kira’s use cases for lawyers include M&A due diligence, loan agreement due diligence, compliance and regulatory review, lease abstraction, and deal point studies. Lawyers can upload contracts in any format to instantly generate summaries, search for specific terms and clauses across all of their documents, and filter contracts by tags such as specific parties or phrases.

Three Ways “Long Tail” Companies Are Using AI

Companies in the long tail of AI are finding innovative ways to leverage generative AI, from Bloomberg developing specialized tools for financial professionals to consultants using ChatGPT as a personal productivity assistant. The models powering these tools vary greatly, from Canva’s custom models fine-tuned for image generation to Ramp’s various GPT integrations.

In surveying how various companies in the long tail of AI are currently incorporating AI, a few patterns have emerged. Although the use cases will likely continue to evolve, especially given the breakneck pace of advancement in the underlying AI technologies being implemented, those patterns are likely to persist and hint at the direction the long tail of AI companies (i.e. the bulk of the economy) will take when implementing AI.

1. Companies Are Using AI to Complement Their Underlying Businesses, Not Replace Them

The companies in the examples above all have one thing in common: they offered well-established products and services before adopting AI, and they have used artificial intelligence to complement and augment those products and services.

Take Replit, for example. Replit had already seen success, reaching an $800 million valuation in December 2021, well before launching its AI-powered coding assistant in October 2022. Prior to that launch, the company had spent six years solving technical challenges for developers, including managing dependencies, deploying projects, and storing a granular version history of code repositories. AI was simply the next iteration of product improvement, and Replit’s AI tools were literally built on the foundation of its existing products: Replit’s version history serves as a source of training data for its AI models.

To take another example, in the web browser market, many non-AI features can influence an individual’s browser choice, including speed, browser extensions, developer tools, cross-platform availability, and privacy. While Brave’s Leo is an effective tool for drawing insights directly from one’s browser, the company’s success stems from its eight-year commitment to privacy. Leo is simply Brave’s generative AI extension of its privacy-first principles.

Additionally, external reviews of Jira, Atlassian’s project management software, published in September and October 2024 didn’t mention AI at all. Instead, they focused on non-AI aspects of the product, like ease of use, integrations, no-code workflows, and timeline planning features. Similarly, in Walmart’s case, low prices through bulk purchasing and a lenient return policy are key pillars of its business that AI can support, but AI is not fundamental to them.

While “AI strategy” is the business question with the most hype right now, non-AI companies still need to prioritize the core aspects of their user experience. Companies can adopt AI tools internally that improve process efficiencies and complement existing products, but the foundation needs to precede any AI implementation.

2. Companies Are Replicating Peers’ AI Strategies

Eleanor Roosevelt once said, “Learn from the mistakes of others. You can’t live long enough to make them all yourself.” In the early innings of a fast-changing AI market, it’s impossible to experiment with every possible iteration of AI. To stay ahead, companies can replicate strategies that other businesses have found to be beneficial.

Companies in most industries can be broken down into similar functions: sales, marketing, customer service, product, engineering, and design. Meanwhile, companies in the same industry often share similar customers, suppliers, and resources.

These similarities across companies allow teams to pattern-match successful AI use cases from their peers and competitors. For example, considering that BCG found that using ChatGPT yielded strong performance improvements in creative and coding tasks, it would make sense for other consulting firms like McKinsey and Bain to leverage generative AI tools for their consultants, too.

Relevant use cases aren’t limited to companies within the same industry, either. Brave and VMware, for example, offer very different core products: the former is a web browser, and the latter offers virtualized computing solutions. However, both prioritize data privacy, hence their decisions to build Leo and SafeCoder on open-source models.

First movers can gain short-term competitive advantages through AI adoption, and if those advantages prove sustainable, competition will force other companies to adopt similar AI strategies or fall behind. If, for example, the results of BCG’s AI productivity studies hold up over time, BCG’s decision to give its consultants ChatGPT Enterprise would pressure McKinsey and Bain to adopt similar AI tools to remain competitive. Likewise, if Ramp’s sales development reps are actually four times more productive than the industry average and competitors don’t adapt, Ramp will quickly establish a powerful competitive advantage.

3. Companies Are Staying Flexible in Their AI Strategies

Our four-layer breakdown of AI usage is a helpful framework for organizing how companies use artificial intelligence, but these layers aren’t rigid, and companies can implement more than one strategy, or change strategies, to address different pain points. The dynamics between layers, as well as the underlying models themselves, are also evolving in real time.

For example, through early 2024, model companies focused on building ever-larger models. In OpenAI’s case, GPT-2 had 1.5 billion parameters, GPT-3 had 175 billion, and GPT-4 reportedly has more than 1 trillion. For customers, however, ever-larger models offer diminishing returns because much of that capacity isn’t relevant to their particular needs, and model companies are now releasing smaller, more efficient alternatives that can process information more quickly. In July 2024, for instance, OpenAI released GPT-4o mini, which it said is “advancing cost-efficient intelligence.”

GPT-4o mini is “roughly in the same tier as other small AI models” like Meta’s 8-billion-parameter Llama model, as well as “an order of magnitude more affordable than previous frontier models and more than 60% cheaper than GPT-3.5 Turbo.” This strategy appears to be resonating: Ramp’s CTO, for instance, described GPT-4o mini as good enough for 90% of use cases and expressed a belief that fine-tuning is generally a waste of time compared to waiting for generalized improvements in LLMs.

Because AI tools and models are evolving quickly, and switching costs between AI models are low, one mistake to avoid is going too big too soon.

Baseten CEO Tuhin Srivastava, for example, told Contrary Research that he has seen a pattern of companies overestimating the benefits of building their own model and underestimating the resources required for it, and companies can spend millions of dollars and many months attempting to train their own model before they can even think about how to productionize it.

Srivastava’s advice is to “start with something you know will work and then slowly rip things out.” In his view, “smart engineering organizations realized they can take things off the shelf and abstract away the ‘model’ question, instead focusing on defining the experience and then retrofitting that with fine-tuned open source tools.” He recounts examples of companies that started with OpenAI’s models but later achieved better, faster performance at lower cost by switching to fine-tuned open-source alternatives.
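Abstracting away the “model” question can be sketched as a thin interface that product code depends on, so an off-the-shelf backend can later be swapped for a fine-tuned open-source one without rewriting the feature. The sketch below is a minimal illustration under stated assumptions: `TextModel`, `HostedModel`, `SelfHostedModel`, and `Summarizer` are hypothetical names, and both backends are stubs that return strings instead of calling any real API or inference server.

```python
from typing import Protocol


class TextModel(Protocol):
    """Any backend that turns a prompt into text (structural interface)."""

    name: str

    def complete(self, prompt: str) -> str: ...


class HostedModel:
    """Hypothetical wrapper around a closed-source API (stubbed here)."""

    name = "hosted-closed-source"

    def complete(self, prompt: str) -> str:
        # A real version would call the vendor's SDK over the network.
        return f"[{self.name}] {prompt}"


class SelfHostedModel:
    """Hypothetical wrapper around a fine-tuned open-source model (stubbed here)."""

    name = "self-hosted-open-source"

    def complete(self, prompt: str) -> str:
        # A real version would call an inference server the company runs itself.
        return f"[{self.name}] {prompt}"


class Summarizer:
    """Product feature that depends only on the TextModel interface."""

    def __init__(self, model: TextModel) -> None:
        self.model = model

    def summarize(self, document: str) -> str:
        return self.model.complete(f"Summarize: {document}")


if __name__ == "__main__":
    feature = Summarizer(HostedModel())  # start with something known to work
    print(feature.summarize("weekly support report"))
    feature.model = SelfHostedModel()  # later, swap the backend out
    print(feature.summarize("weekly support report"))
```

Because `Summarizer` never names a specific vendor, moving from a closed-source API to a self-hosted model changes one constructor argument rather than the feature itself, which is what keeps switching costs low.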

Companies that remain flexible will likely be better positioned to take advantage of improving AI technologies as they are launched.

The End Goal for Companies in the Long Tail

While AI hype at the moment is concentrated on model and AI-native companies, AI use cases among non-AI-native companies within the “long tail” are growing more prominent and commonplace, ranging from customer service chatbots to augmented reality shopping experiences. Given the pace of AI innovation, we believe the easiest way to understand how companies in the long tail are using AI is by grouping their use cases into one of four layers that we outlined in this deep dive: custom models, closed-source models, open-source models, and third-party AI tools.

The examples highlighted in this deep dive demonstrate how different organizations have built and adopted different AI tools so readers can better understand how to think about AI integration in their own organizations. All four layers present trade-offs involving training times, costs, customization, privacy, and quality, but given the low switching costs between models, companies have the flexibility to experiment with different solutions. The end goal for companies in the long tail is not to become AI companies themselves but to leverage AI tools to complement and accelerate their core businesses.

*Contrary is an investor in Ramp and Replit through one or more affiliates.

Special thank you to Tuhin Srivastava, the CEO and co-founder of Baseten, and his team for contributing to this report.